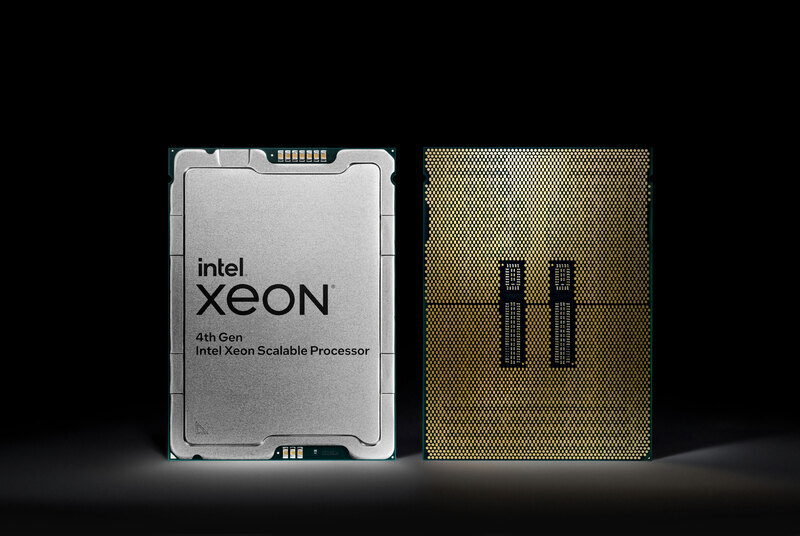

Intel has launched its 4th Gen Intel Xeon Scalable processors (Sapphire Rapids), the Intel Xeon CPU Max Series (Sapphire Rapids HBM), and the Intel Data Center GPU Max Series (Ponte Vecchio). They are purpose-built to improve data center performance, efficiency, security, and capabilities for AI, cloud computing, networks, edge computing, and supercomputers. Intel is partnering with customers to offer customized solutions and systems using the new products to meet computing needs at scale.

There are currently over 100 million individual Xeon processors in use worldwide. Why are they so popular? Well, they are available in various configurations and are designed to be scalable, allowing them to be used in a wide range of applications and environments. They can also be customized for specific workloads or applications, offer a range of security features to help protect against threats like malware and data breaches, and are highly energy efficient.

4th Gen Intel Xeon Scalable CPU Models

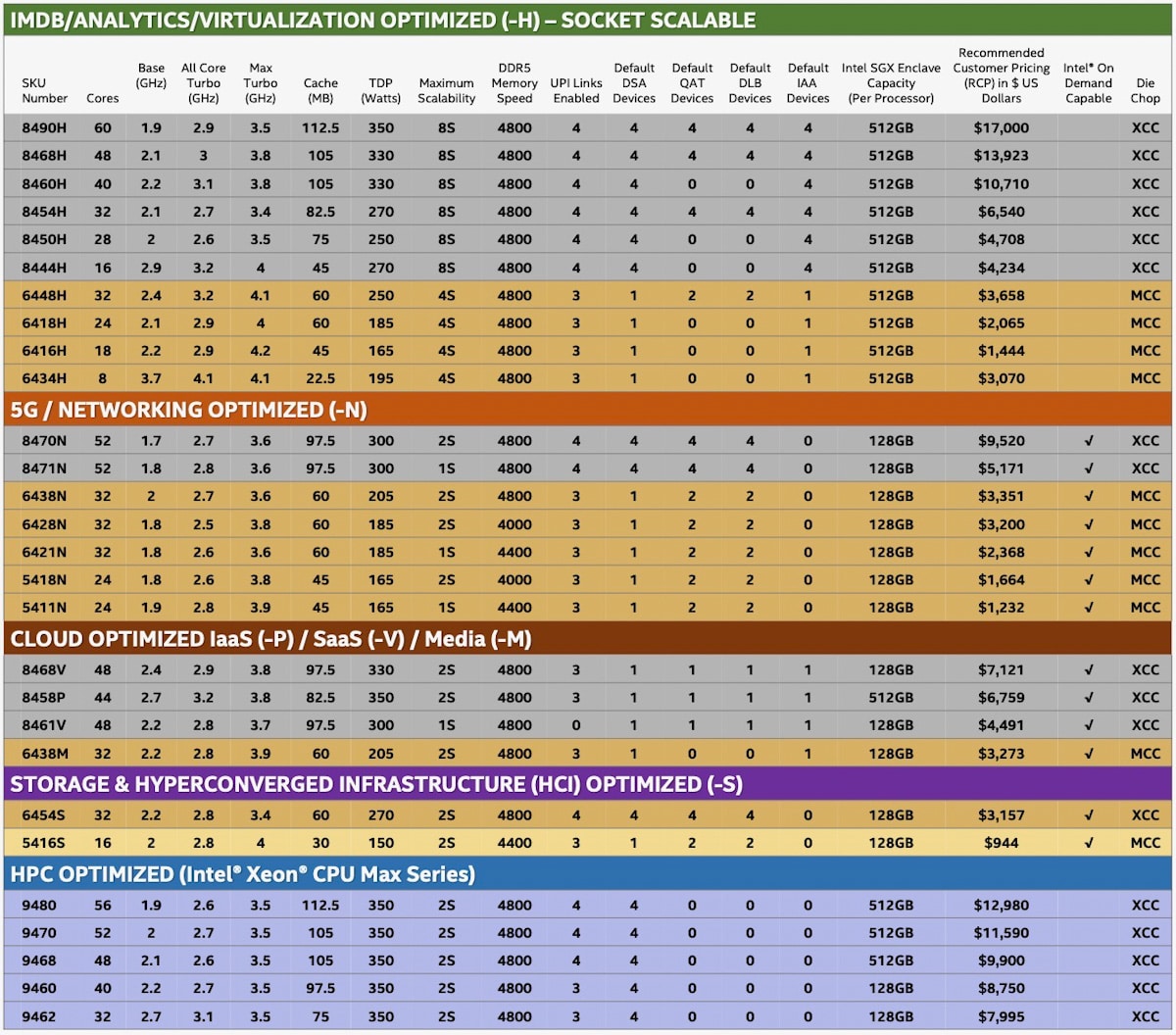

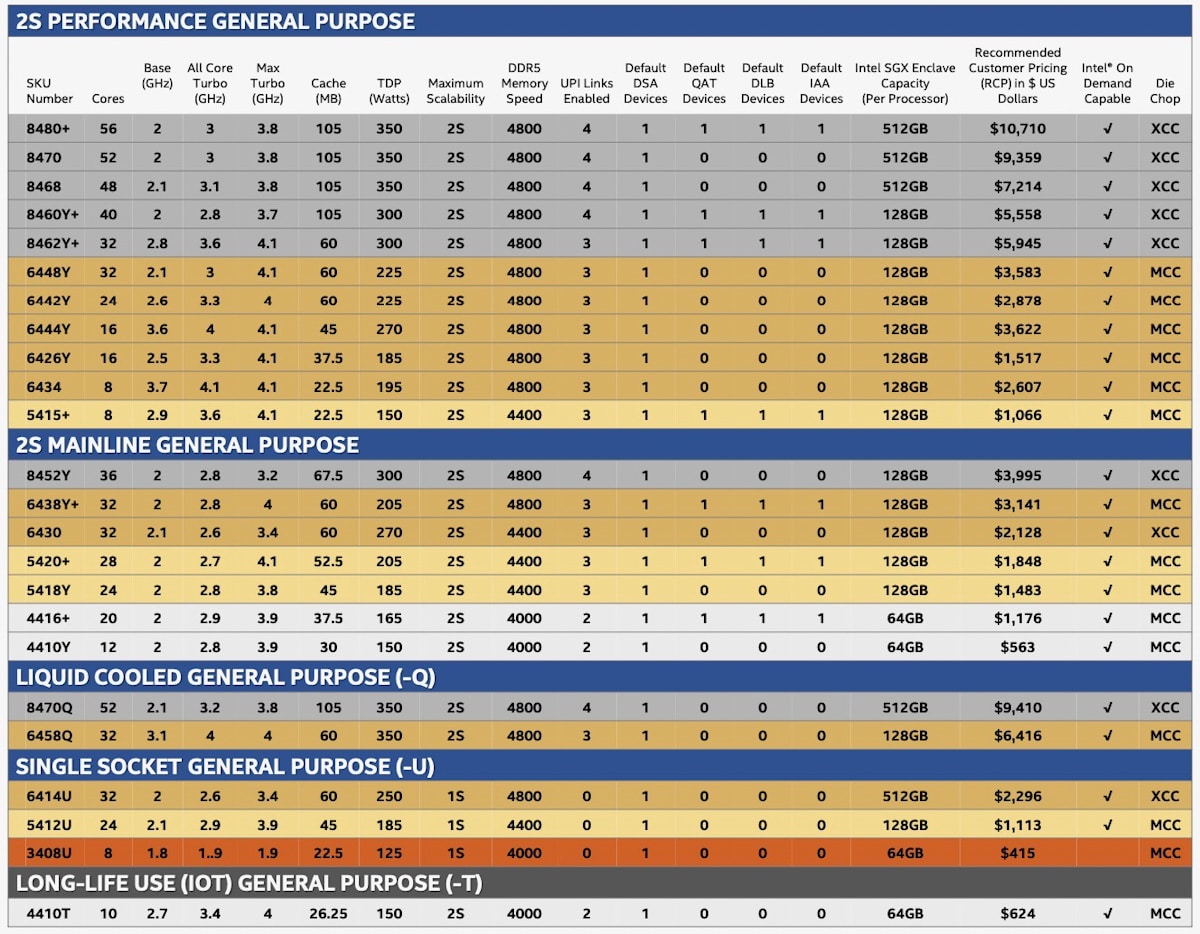

The new Xeon models are available in six series: Max 9400, Platinum 8000, Gold 6000, Gold 5000, Silver 4000, and Bronze 3000. Each series includes a range of models that can be drilled down to their target use case:

- Performance general purpose

- Mainline general purpose

- Liquid cooling general purpose

- Single socket general purpose (“Q” Series)

- Long-life Use (IoT) general purpose (“T” Series)

- IMDB/Analytics/virtualization optimized (“H” Series)

- 5G/Networking optimized (“N” Series)

- Cloud-optimized IaaS (“P”, “V” and “M” Series)

- Storage and hyperconverged infrastructure optimized (“S” series)

- HPC optimized (i.e., the Intel Xeon CPU Max series)

For example, the powerful Platinum 8400 processors are designed for advanced data analytics, AI, and hybrid cloud data centers, offering high performance, platform capabilities, and workload acceleration, as well as enhanced hardware-based security and multi-socket processing. These processors also offer up to 60 cores per processor (an increase of 20 cores compared to the top 3rd-Gen Xeon model), eight memory channels, and AI acceleration with Intel AMX.

Intel Xeon Gold 6400 and Gold 5400 processors are optimized for data center and multi-cloud workloads. They offer enhanced memory speed, capacity, security, and workload acceleration. Intel Xeon Silver 4400 processors provide essential performance, improved memory speed, and power efficiency for entry-level data center computing, networking, and storage.

Here is a detailed rundown of each Xeon CPU and its targeted use case:

| Features | 4th Gen Intel Xeon Scalable Processors: Extreme Core Count (XCC) | 4th Gen Intel Xeon Scalable Processors: Medium Core Count (MCC) | Intel Xeon CPU Max Series: High Bandwidth Memory (HBM) |
| --- | --- | --- | --- |
| Die Construction | Four tiles connected using MDF over Intel Embedded Multi-die Interconnect Bridge (EMIB) | One monolithic chip | Four tiles connected using MDF over Intel Embedded Multi-die Interconnect Bridge (EMIB) |
| Core Count | Up to 60 active cores | Up to 32 active cores | Up to 56 active cores |
| TDP Range | 225 to 350W | 125 to 350W | 350W |
| Memory | DDR5 @ 4800 (1 DPC), 4400 (2 DPC), 16 Gb DRAM, 8 channels; Intel Optane PMem 300 (Crow Pass) @ 4400 MT/s | DDR5 @ 4800 (1 DPC), 4400 (2 DPC), 16 Gb DRAM, 8 channels; Intel Optane PMem 300 (Crow Pass) @ 4400 MT/s | DDR5 @ 4800 (1 DPC), 4400 (2 DPC), 8 channels; 64 GB HBM2e memory with up to 1.14 GB/core |
| Intel UPI | UPI 2.0 @ 16 GT/s, up to 4 Ultra Path Interconnects | UPI 2.0 @ 16 GT/s, up to 3 Ultra Path Interconnects | UPI 2.0 @ 16 GT/s, up to 4 Ultra Path Interconnects |
| Scalability | 1 Socket, 2 Socket, 4 Socket, 8 Socket | 1 Socket, 2 Socket, 4 Socket | 1 Socket, 2 Socket |
| PCIe/Compute Express Link | PCIe 5.0 (80 lanes); up to 4 devices supported via Compute Express Link (CXL) 1.1 | PCIe 5.0 (80 lanes); up to 4 devices supported via Compute Express Link (CXL) 1.1 | PCIe 5.0 (80 lanes); up to 4 devices supported via Compute Express Link (CXL) 1.1 |
| Security | Intel SGX; minimum Enclave Page Cache (EPC) size 256 MB | Intel SGX; minimum Enclave Page Cache (EPC) size 256 MB | Intel SGX (Flat mode only) |
| Integrated IP Accelerators | Intel QAT, DLB, IAA, DSA (up to 4 devices each) | Intel QAT, DLB (up to 2 devices each), Intel DSA, IAA (1 device each) | Intel DSA (4 devices) |
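As a quick sanity check on the Max Series column, the "up to 1.14 GB/core" figure follows from dividing the 64 GB of HBM2e by the 56-core maximum. The short Python sketch below only reproduces that division; the function and constant names are illustrative and not part of any Intel tool.

```python
# Values copied from the Intel Xeon CPU Max Series column of the table.
HBM_CAPACITY_GB = 64     # on-package HBM2e capacity
MAX_ACTIVE_CORES = 56    # "up to 56 active cores"


def hbm_per_core(capacity_gb: float, active_cores: int) -> float:
    """Return the HBM capacity available per active core, in GB."""
    if active_cores <= 0:
        raise ValueError("active_cores must be positive")
    return capacity_gb / active_cores


if __name__ == "__main__":
    per_core = hbm_per_core(HBM_CAPACITY_GB, MAX_ACTIVE_CORES)
    print(f"HBM per core at {MAX_ACTIVE_CORES} cores: {per_core:.2f} GB")
```

Max Series parts with fewer active cores would get more HBM per core, since the 64 GB capacity stays the same.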

The 4th Gen Intel Xeon Scalable processors aim to improve performance and tackle various computing challenges related to AI, analytics, networking, security, storage, and high-performance computing (HPC). These processors are remarkable for having the most built-in accelerators of any CPU.

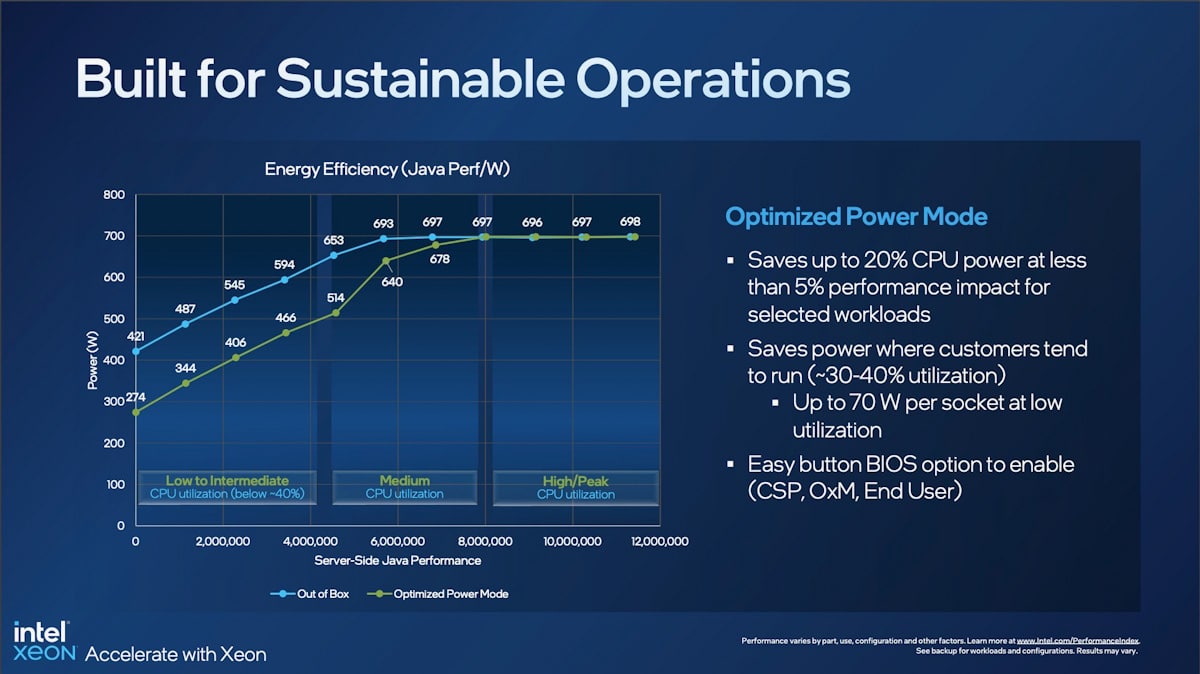

According to Intel, 4th Gen Intel Xeon Scalable customers can expect the following average improvements:

- 2.9x improvement in performance-per-watt efficiency when using built-in accelerators,

- 70-watt power saving per CPU in optimized power mode with minimal performance loss,

- 55 percent reduction in the total cost of ownership and power consumption compared to previous generations.

4th Gen Intel Xeon Scalable – Power Efficiency Improved

The 4th Gen Intel Xeon Scalable CPUs are equipped with a large number of built-in accelerators, which can help save power at the platform level and reduce the need for additional discrete acceleration. This can help customers achieve their sustainability goals. Moreover, the newly introduced Optimized Power Mode is expected to provide up to 20 percent socket power savings with less than 5 percent performance impact for specific workloads.

Innovations in air and liquid cooling can further reduce total data center energy consumption. The 4th Gen Xeon processors have also been manufactured using 90 percent or more renewable electricity at Intel sites with advanced water reclamation facilities.
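To put the Optimized Power Mode figures in performance-per-watt terms, here is a minimal Python sketch. It assumes, purely for illustration, that the full 20 percent socket power saving and the full 5 percent performance loss apply at the same time; Intel quotes both only as bounds for specific workloads, so real results will differ.

```python
def perf_per_watt_gain(power_saving: float, perf_loss: float) -> float:
    """Relative change in performance per watt.

    power_saving and perf_loss are fractions (0.20 means 20 percent).
    """
    if not 0.0 <= power_saving < 1.0:
        raise ValueError("power_saving must be in [0, 1)")
    if not 0.0 <= perf_loss <= 1.0:
        raise ValueError("perf_loss must be in [0, 1]")
    relative_perf = 1.0 - perf_loss        # performance vs. default mode
    relative_power = 1.0 - power_saving    # socket power vs. default mode
    return relative_perf / relative_power - 1.0


if __name__ == "__main__":
    # Assumed scenario: both quoted bounds hit simultaneously.
    gain = perf_per_watt_gain(power_saving=0.20, perf_loss=0.05)
    print(f"Performance per watt changes by {gain:+.2%}")
```

Under that assumption, performance per watt at the socket improves by a little under 19 percent (0.95 / 0.80 = 1.1875), even though absolute performance drops slightly.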

New Advances in AI Performance

Compared to the previous generation, the 4th Gen Xeon processors are quoted to achieve up to 10 times higher PyTorch real-time inference and training performance with the use of their Intel Advanced Matrix Extensions (Intel AMX) accelerators.

PyTorch is a machine learning framework for building and training neural networks. Real-time inference involves using a trained neural network model to make real-time predictions or decisions based on new input data. Higher PyTorch real-time inference and training performance is essential for Intel processors because it allows them to run machine learning workloads that involve real-time prediction or decision-making more efficiently.

This is especially useful in applications where quick and accurate predictions or decisions are paramount. In addition, higher performance in machine learning tasks can lead to faster training of models and more accurate predictions, as well as the ability to use larger and more complex models.

Intel also says that its new 4th Gen Intel Xeon Scalable processors can provide further capabilities for natural language processing, claiming up to a 20 times speed-up on large language models.

Intel’s AI software suite, tested with over 400 machine learning and deep learning AI models across various industries and applications, can be used with the developer’s preferred AI tool to boost productivity and accelerate AI development. The suite is designed to be portable, allowing it to be used on the workstation and deployed at the edge and in the cloud.

Networking Capabilities

The 4th Gen Intel Xeon Scalable processors also offer specifically optimized models for high-performance, low-latency networks and edge workloads. These processors play a crucial role in driving a more software-defined future for industries such as telecommunications, retail, manufacturing, and smart cities. For 5G core workloads, the built-in accelerators can help increase throughput and decrease latency, while power management improvements enhance the platform’s responsiveness and efficiency.

They can also double the virtualized radio access network (vRAN) capacity compared to 3rd Gen Xeon processors without consuming more power. In general, processors with a higher vRAN capacity can handle network data traffic more efficiently, which reduces latency and improves overall performance. This is particularly important for applications that require real-time communication.

Intel indicates that this will allow communications service providers to double their performance-per-watt and meet their performance and energy efficiency needs. The increase in vRAN capacity would also enable organizations to scale up or down more easily as the number of vRAN workloads changes, giving them greater flexibility in network resources without requiring additional hardware, power, or infrastructure.

HPC

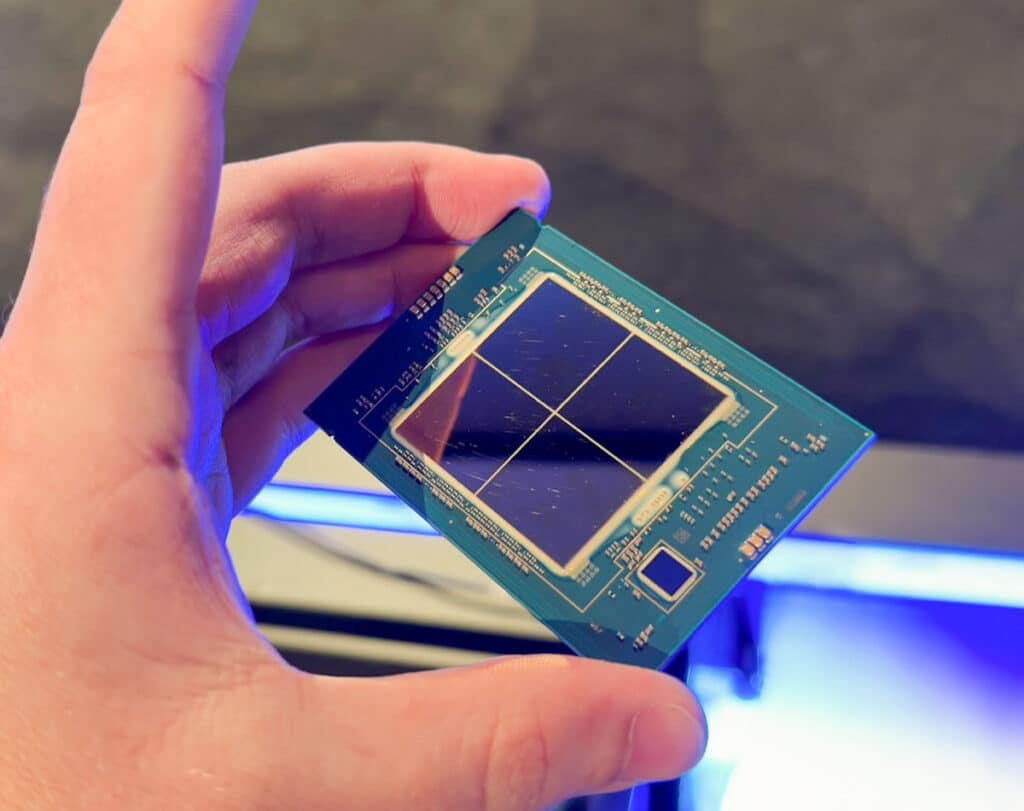

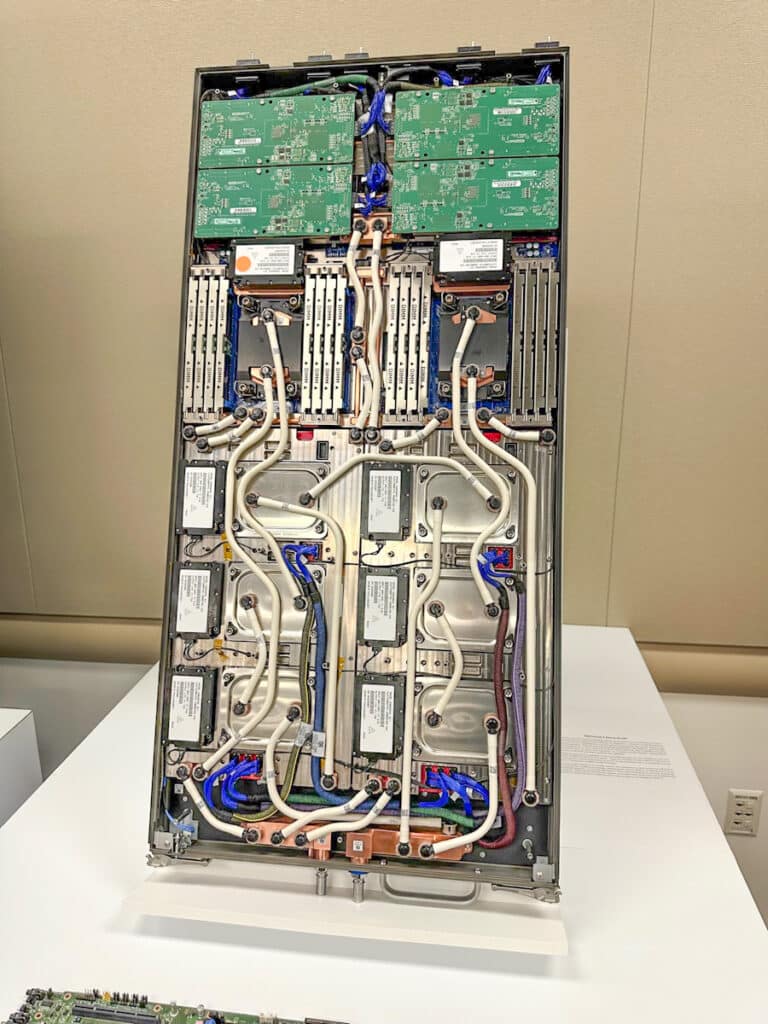

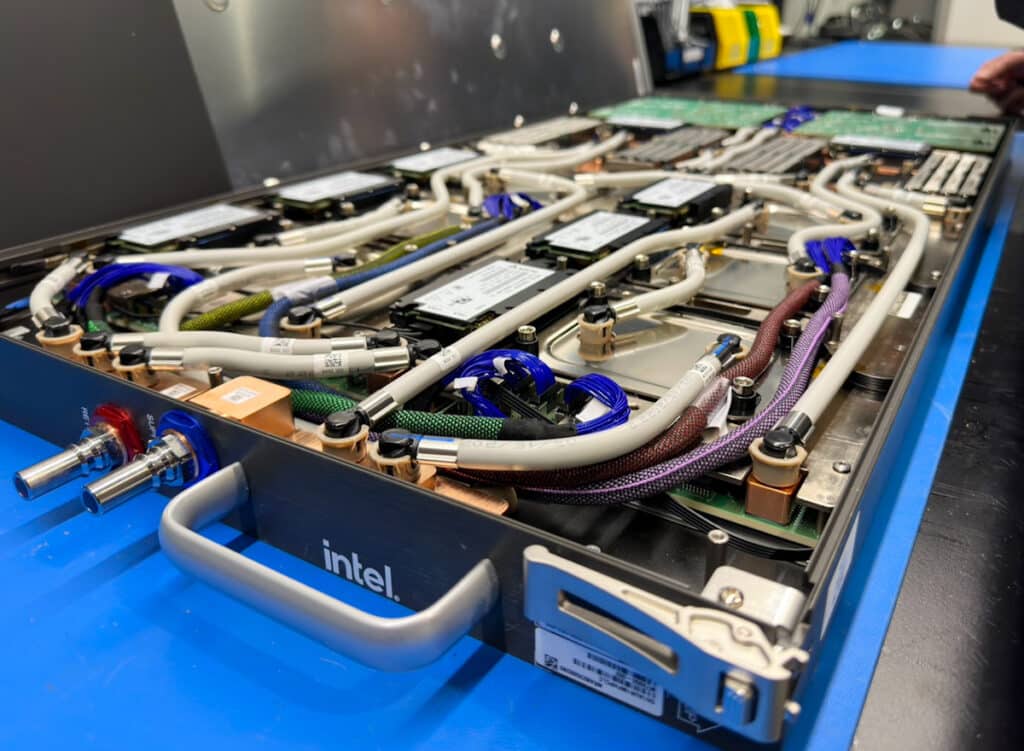

Compute blade (open chassis) at Intel HPC lab

The 4th Gen Intel Xeon Scalable and Intel Max Series products offer a scalable, balanced architecture that combines CPU and GPU with oneAPI’s open software ecosystem for demanding computing workloads in HPC and AI. Intel claims that this architecture is specifically designed to tackle the world’s most difficult problems today.

The oneAPI open software ecosystem is a set of tools and libraries that allow developers to write code that can run on various hardware platforms (including CPUs, GPUs, and other specialized processors) using a single set of programming interfaces. This can make it easier to develop and optimize applications for diverse computing environments.

Compute blade at Intel HPC lab

At Intel’s Jones Farm, StorageReview got a behind-the-scenes look at Borealis. Intel, HPE, and Argonne National Laboratory are working towards delivering the Aurora supercomputer, which will be built on the new 4th Gen Xeon and Data Center GPU platforms announced today.

Borealis is a two-rack mini-system located at the Jones Farm lab in Oregon that validates the Aurora system and its new technologies. It has the same architecture and design as Aurora and is being tested to validate all components of the software and liquid cooling systems before the system is installed at a large scale at Argonne National Laboratory.

Water cooling system: Lab manager at Jones Farm HPC Lab – Borealis showcases the red and blue tubes that are part of the water cooling system to keep the racks cool.

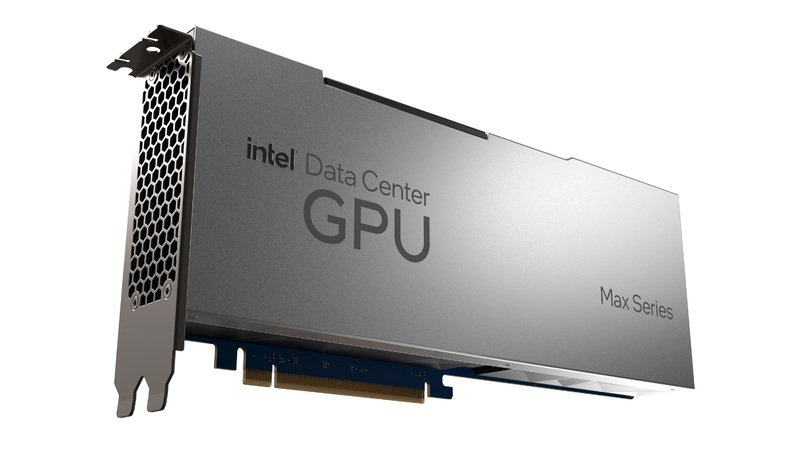

The Intel Xeon CPU / GPU Max Series

The Intel Xeon CPU Max Series is the first x86 processor with high bandwidth memory to hit the market, allowing it to accelerate many HPC workloads without requiring code changes. Intel calls the Intel Data Center GPU Max Series its highest-density processor, and it will be available in various form factors to suit a range of customer needs.

The Xeon CPU Max Series offers 64GB of high bandwidth memory on the package, which significantly increases data throughput for HPC and AI workloads. Compared to top-end 3rd Gen Intel Xeon Scalable processors, the Xeon CPU Max Series provides up to 3.7 times more performance on various real-world applications such as energy and earth systems modeling. Memory bandwidth is essential for HPC and AI workloads, as they often require a large amount of data to be processed and moved between the CPU and memory. Without enough of it, the system’s performance is bottlenecked and processing times get longer.

The Data Center GPU Max Series, which features over 100 billion transistors in a 47-tile package, brings increased throughput to workloads that involve physics, financial services, and life sciences. When combined with the Xeon CPU Max Series, the platform can achieve up to 12.8 times greater performance than the previous generation when running LAMMPS (Large-scale Atomic/Molecular Massively Parallel Simulator), an open-source molecular dynamics simulator.

Intel launched the Data Center GPU Flex Series back in August 2022, an Xe HPG-based card that features up to 16 (8 per GPU) Xe cores, 16 TFLOPS, and 16GB memory capacity.

Intel On-Demand

Intel On-Demand is a service that allows customers to expand or upgrade the accelerators and hardware-enhanced features available on most 4th Gen Intel Xeon Scalable processors. The service is managed by Intel and hardware providers and consists of an API for license ordering and a software agent for license provisioning and activation.

Customers can opt to buy On-Demand features at the time of purchase or as a post-purchase upgrade, and Intel is also working on a metering adoption model in which the features can be turned on and off as needed, and payment is based on usage. The introduction of the activation model with 4th Gen Xeon processors allows customers to choose fully-featured premium SKUs or add features at any time throughout the processor’s lifecycle.

Initial providers of On-Demand include H3C, Inspur, Lenovo, Supermicro, and Variscale, with Intel working with additional providers on their enablement plans.

Chip-level DRM, or digital rights management, refers to technology controlling access to certain computer chip features or capabilities. In the context of Intel On-Demand, chip-level DRM could potentially be used to restrict access to certain features or capabilities of the 4th Gen Intel Xeon Scalable processors unless purchased or activated through the On-Demand service. This could impact the right to repair, as it may make it more difficult for individuals or independent repair shops to access and repair certain aspects of systems equipped with Intel On-Demand.

Environmental sustainability is an important consideration when it comes to the use and disposal of technology products, including enterprise CPUs. While Intel On-Demand may allow customers to expand or upgrade the capabilities of their processors, it is unclear how this service may impact the environmental sustainability of the products. Since the TDP of the package does not change with activation, the energy consumption of the unused features and the potential for increased electronic waste due to upgrades or replacements may need to be taken into account. It is crucial for companies like Intel to consider the environmental impacts of their products and services and to work towards more sustainable solutions.

4th Gen Intel Xeon Scalable Performance Testing

The 4th Gen Intel Xeon Scalable processors’ emphasis on improving efficiency shines in some of our early benchmarking. These new processors are advertised as achieving a 53 percent increase in general-purpose compute efficiency over 3rd Gen Xeon Scalable processors through manufacturing refinements and, in targeted workloads, a 2.9x average performance-per-watt improvement when utilizing built-in accelerators.

In addition to these improvements, the new-generation mid-tier chips are just as powerful as last-generation top-tier models, making them a cost-effective option for data center operators. These mid-tier chips can help data centers optimize their costs and achieve better overall efficiency by offering similar performance to the flagship models at a lower price point.

We had a few options to test the performance of Sapphire Rapids. To showcase the efficiency improvements, we tested a mid-range 4th Gen platform with dual 8452Y ($3,995) Xeons and compared it to a top-of-the-line 3rd Gen platform with dual 8380 ($9,400) CPUs. With the improvements of the 4th generation, our mid-range system was able to keep up in benchmarks with the previous generation’s flagship model.

| Cinebench | 2 x 4th Gen 8452Y (2.0GHz x 36) | 2 x 3rd Gen 8380 (2.3GHz x 40) |
| --- | --- | --- |
| Multi-Core | 60075 | 70540 |
| Single Core | 841 | 985 |
| Core Multiplier | 71.40x | 71.63x |

| Blender CLI Render Benchmark | 2 x 4th Gen 8452Y (2.0GHz x 36) | 2 x 3rd Gen 8380 (2.3GHz x 40) |
| --- | --- | --- |
| Monster | 652.526942 | 671.145395 |
| Junkshop | 401.119468 | 407.141514 |
| Classroom | 308.802541 | 320.507039 |
| Total | 1362.448951 | 1398.793948 |
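One way to read these tables against the CPU prices quoted earlier is score per dollar. The Python sketch below is a rough illustration only: it uses the per-CPU prices and scores from this article, counts CPU cost alone (no memory, chassis, or power), and the `Platform` class and its method names are made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Platform:
    """One dual-socket test system, with figures copied from the article."""
    name: str
    cores_per_cpu: int
    cpu_price_usd: float      # per-CPU price as quoted in the article
    cinebench_multi: float
    blender_total: float
    sockets: int = 2

    @property
    def total_cores(self) -> int:
        return self.sockets * self.cores_per_cpu

    @property
    def cpu_cost_usd(self) -> float:
        return self.sockets * self.cpu_price_usd

    def per_dollar(self, score: float) -> float:
        """Benchmark score per dollar of CPU cost (CPUs only)."""
        return score / self.cpu_cost_usd

    def per_core(self, score: float) -> float:
        """Benchmark score per physical core."""
        return score / self.total_cores


GEN4 = Platform("2 x 4th Gen 8452Y", 36, 3995, 60075, 1362.448951)
GEN3 = Platform("2 x 3rd Gen 8380", 40, 9400, 70540, 1398.793948)

if __name__ == "__main__":
    for p in (GEN4, GEN3):
        print(
            f"{p.name}: "
            f"Cinebench/core {p.per_core(p.cinebench_multi):.1f}, "
            f"Cinebench/$ {p.per_dollar(p.cinebench_multi):.2f}, "
            f"Blender/$ {p.per_dollar(p.blender_total):.4f}"
        )
```

With these inputs, the 8452Y system scores about 15 percent lower in Cinebench multi-core in absolute terms but delivers roughly twice the points per CPU dollar, which is the sense in which the mid-range part "keeps up" with the older flagship.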

Additionally, in an internal AI model training test, we noted an approximate 5 percent increase in performance, measuring 95 minutes for 3rd Generation 8380 vs. 90 minutes for 4th Generation 8452Y.
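For reference, the "approximate 5 percent" figure can be expressed either as a throughput speed-up or as time saved, and the two differ slightly. This small Python sketch just works through the 95-minute and 90-minute timings above; the function names are illustrative.

```python
def speedup(old_minutes: float, new_minutes: float) -> float:
    """Throughput ratio: how many times faster the new run completes."""
    return old_minutes / new_minutes


def time_saved_fraction(old_minutes: float, new_minutes: float) -> float:
    """Fraction of the original wall-clock time that was saved."""
    return (old_minutes - new_minutes) / old_minutes


if __name__ == "__main__":
    old, new = 95.0, 90.0   # minutes: 3rd Gen 8380 vs. 4th Gen 8452Y
    print(f"Speed-up: {speedup(old, new):.3f}x")
    print(f"Time saved: {time_saved_fraction(old, new):.1%}")
```

Either way the result rounds to roughly 5 percent, and it comes from a single internal run rather than a controlled benchmark.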

Overall, the improvements in efficiency offered by the 4th Gen Intel Xeon Scalable processors make them an attractive option for data center operators looking to reduce their power consumption and costs. The General Purpose processors offer an outstanding balance of performance and efficiency, making them a solid choice for a wide range of workloads. We are excited to get into testing specific accelerators on data center workloads, including the Data Streaming Accelerator.

Market Impact

With 4th Gen Intel Xeon Scalable processors finally shipping to the enterprise (cloud providers have had them for some time), the battle is on with AMD Genoa CPUs in the data center. At a high level, it’s really easy to look at Genoa’s massive PCIe lane count and declare it the victor, but selecting the right CPU these days is far more complicated than that. There’s a delicate balance of cost, energy, performance, and qualification with additional components in the system. Oh, and let’s not neglect the importance of understanding the workload to align it with the correct CPU.

So today, there is no easy answer to the question of who’s better, Genoa or Sapphire Rapids. That will take time to play out as Dell, HPE, Supermicro, Lenovo, and others bring systems to market. With the adoption of new SSD form factors going on right now in servers, support for Gen5 throughput, and new high-speed networking and accelerator options like DPUs, the game is afoot. This is, however, not a battle of the spec sheets. The onus is on the enterprise IT organization to be as diligent and informed as possible to ensure they’re investing in systems that adequately support their application needs. And that may be the biggest challenge of all. With so many choices, sophisticated IT partners may be more critical now than ever.
