The latest MLPerf results have been published, with NVIDIA delivering the highest performance and efficiency from the cloud to the edge for AI inference. MLPerf remains a useful measurement for AI performance as an independent, third-party benchmark. NVIDIA’s AI platform has been at the top of the list for training and inference since the inception of MLPerf, including the latest MLPerf Inference 3.0 benchmarks.

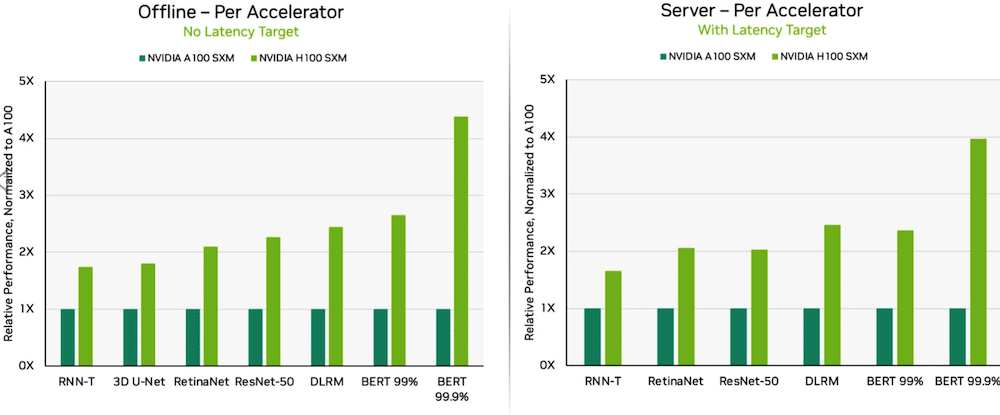

Thanks to software optimizations, NVIDIA H100 Tensor Core GPUs running in DGX H100 systems delivered the highest performance in every test of AI inference, up 54 percent from their debut in September. In healthcare, H100 GPUs delivered a 31 percent performance gain on 3D-UNet, the MLPerf benchmark for medical imaging.

Dell PowerEdge XE9680 with 8X H100 GPUs

Powered by its Transformer Engine, the H100 GPU, based on the Hopper architecture, excelled on BERT. BERT is a model for natural language processing developed by Google that learns bi-directional representations of text to significantly improve contextual understanding of unlabeled text across many different tasks. It’s the basis for an entire family of BERT-like models such as RoBERTa, ALBERT, and DistilBERT.

With generative AI, users can quickly create text, images, 3D models, and much more. Companies, from startups to cloud service providers, are adopting generative AI to enable new business models and speed up existing ones. One generative AI tool that has been in the news lately is ChatGPT, used by millions of people who expect instant responses to their queries and inputs.

With deep learning being deployed everywhere, performance on inference is critical, from factory floors to online recommendation systems.

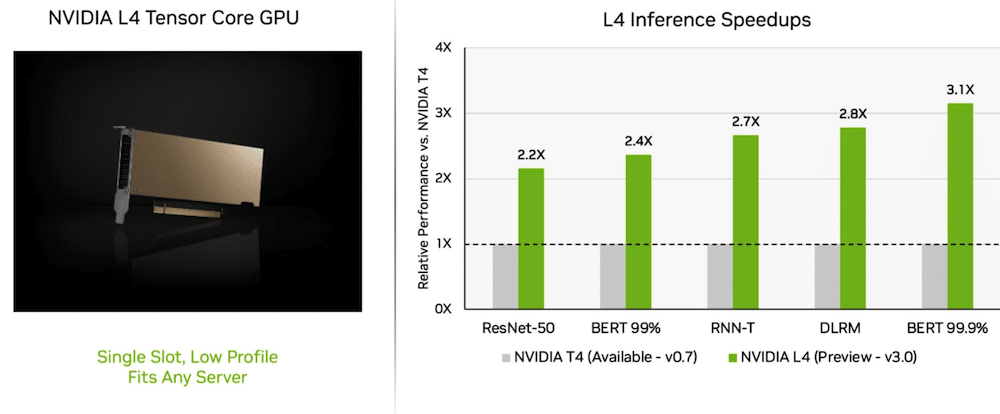

L4 GPUs Deliver Stunning Performance

In their MLPerf debut, NVIDIA L4 Tensor Core GPUs performed at over 3x the speed of prior-generation T4 GPUs. The L4 GPU accelerators, packaged in a low-profile form factor, are designed to deliver high throughput and low latency in almost any server platform. The L4 Tensor Core GPUs ran all the MLPerf workloads, and thanks to their support for the FP8 format, the results were excellent on the performance-hungry BERT model.

In addition to the extreme AI performance, L4 GPUs deliver up to 10x faster image decode, up to 3.2x faster video processing, and over 4x faster graphics and real-time rendering performance. The accelerators, announced at GTC a couple of weeks ago, are available from system makers and cloud service providers.

What Is Network Division?

NVIDIA’s full-stack AI platform showed its worth in a new MLPerf test: the network-division benchmark.

The network-division benchmark streams data to a remote inference server. It reflects the prevalent scenario of enterprise users running AI jobs in the cloud with data stored behind corporate firewalls.

On BERT, remote NVIDIA DGX A100 systems delivered up to 96 percent of their maximum local performance, slowed in part by waiting for CPUs to complete some tasks. On the ResNet-50 test for computer vision, handled solely by GPUs, they hit 100 percent.
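To make the "percent of local performance" figure concrete, here is a minimal sketch of the arithmetic in Python. The function name and the throughput numbers are hypothetical placeholders chosen to reproduce a 96 percent ratio; they are not taken from the MLPerf submissions.

```python
def remote_efficiency(remote_qps: float, local_qps: float) -> float:
    """Return remote throughput as a percentage of local throughput."""
    if local_qps <= 0:
        raise ValueError("local_qps must be positive")
    return 100.0 * remote_qps / local_qps


# Hypothetical throughput figures in queries per second (NOT MLPerf results).
local_bert_qps = 10_000.0   # server fed with data held locally
remote_bert_qps = 9_600.0   # same server fed with data streamed over the network

bert_pct = remote_efficiency(remote_bert_qps, local_bert_qps)
print(f"BERT remote efficiency: {bert_pct:.0f} percent of local")
```

A result of 100 percent, as reported for ResNet-50, would mean that streaming the data over the network cost no measurable throughput.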

NVIDIA Quantum InfiniBand networking, NVIDIA ConnectX SmartNICs, and software such as NVIDIA GPUDirect played a significant role in the test results.

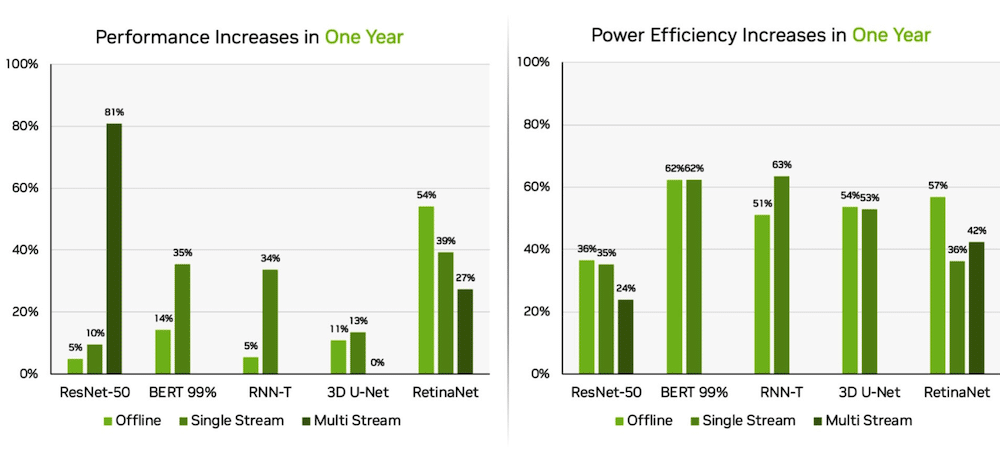

Orin Improves at the Edge

Separately, the NVIDIA Jetson AGX Orin system-on-module delivered gains of up to 63 percent in energy efficiency and 81 percent in performance compared to results from last year. Jetson AGX Orin supplies inference when AI is needed in confined spaces at low power levels, including battery-powered systems.

The Jetson Orin NX 16G, a smaller module requiring less power, performed well in the benchmarks. It delivered up to 3.2x the performance of the Jetson Xavier NX processor.
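The Orin figures mix two conventions: percent gains (63 and 81 percent) and a multiplier (3.2x). The short Python sketch below converts between the two so they can be compared on the same scale. The helper names are illustrative, and the only inputs are the figures quoted above.

```python
def gain_to_multiplier(gain_percent: float) -> float:
    """Convert a percent gain (e.g. 81) into a speedup multiplier (e.g. 1.81)."""
    return 1.0 + gain_percent / 100.0


def multiplier_to_gain(multiplier: float) -> float:
    """Convert a speedup multiplier (e.g. 3.2) into a percent gain (e.g. 220)."""
    return (multiplier - 1.0) * 100.0


# Figures quoted in the article for Jetson AGX Orin and Jetson Orin NX.
agx_perf_x = gain_to_multiplier(81)        # performance vs. last year's results
agx_efficiency_x = gain_to_multiplier(63)  # energy efficiency vs. last year's results
nx_gain_pct = multiplier_to_gain(3.2)      # Orin NX vs. Xavier NX

print(f"AGX Orin performance: {agx_perf_x:.2f}x")
print(f"AGX Orin energy efficiency: {agx_efficiency_x:.2f}x")
print(f"Orin NX gain over Xavier NX: {nx_gain_pct:.0f} percent")
```

Read this way, the "up to 3.2x" result for the Orin NX is a cross-generation comparison, while the AGX Orin gains are year-over-year improvements on the same hardware.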

NVIDIA AI Ecosystem

The MLPerf results show NVIDIA AI is backed by a broad ecosystem in machine learning. Ten companies submitted results on the NVIDIA platform in this round, including the Microsoft Azure cloud service and system makers ASUS, Dell Technologies, GIGABYTE, H3C, Lenovo, Nettrix, Supermicro, and xFusion. Their work illustrates that users can get great performance with NVIDIA AI both in the cloud and in servers running in their own data centers.
