AMD used its “Data Center and AI Technology Premiere” event to announce new products and share how the company will shape the next phase of data center innovation. The announcement includes updates to the 4th Gen EPYC processor family, the new AMD Instinct MI300 Series accelerator family, and an updated networking portfolio.

4th Gen EPYC Processor Optimized for the Modern Data Center

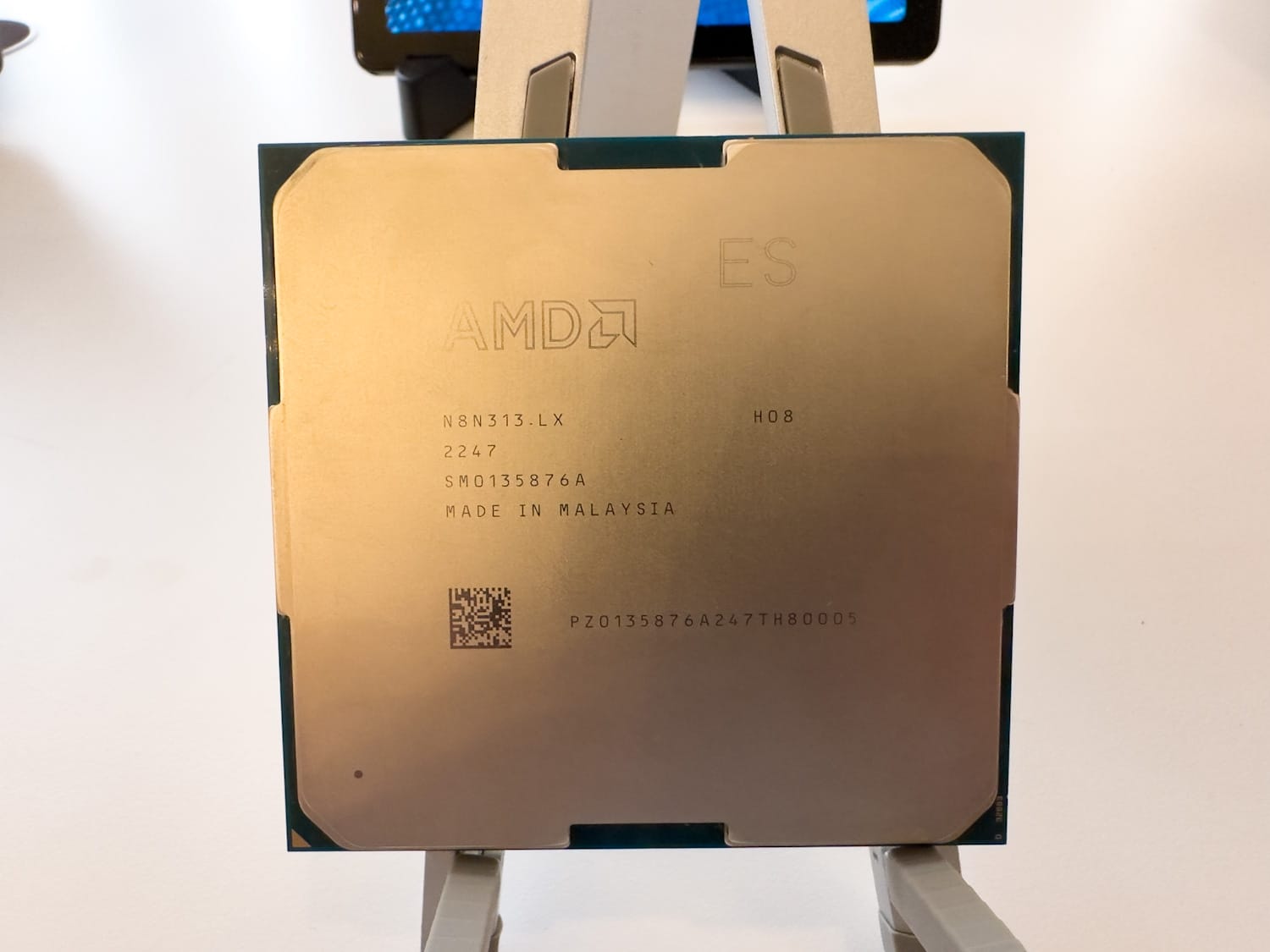

Updates to the 4th Gen EPYC family unveiled at the start of AMD’s Data Center and AI Technology Premiere include workload specialization that addresses specific business demands. AMD introduced its 4th Gen AMD EPYC 97X4 processors, previously codenamed “Bergamo,” providing greater vCPU density and increased performance targeting applications that run in the cloud.

| Model | Cores | Max Threads | Default TDP (W) | Base Freq. (GHz) | Boost Freq. (GHz) | L3 Cache (MB) |
|---|---|---|---|---|---|---|
| 9754 | 128 | 256 | 360 | 2.25 | 3.10 | 256 |
| 9754S | 128 | 128 | 360 | 2.25 | 3.10 | 256 |
| 9734 | 112 | 224 | 320 | 2.20 | 3.00 | 256 |

AMD is making a significant push into the AI ecosystem with this announcement, which includes the new 4th Gen AMD EPYC 97X4 processors. These processors are engineered for the specialized demands of cloud-native workloads. With up to 128 cores per processor (anyone else hungry for pie?), they offer substantial compute power for AI applications.

The increased core count, along with improved energy and space efficiency, allows these processors to handle complex AI computations while supporting, according to AMD, up to three times more containers per server. This advancement contributes to the growing adoption of cloud-native AI applications.
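To put the density figures in concrete terms, the numbers in the EPYC 97X4 table can be turned into threads per core and hardware threads per server. This is a minimal sketch, not an AMD sizing method: it assumes a two-socket server and one vCPU per hardware thread, neither of which is stated in the announcement, and the class and function names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Epyc97x4:
    model: str
    cores: int
    threads: int


# Core and thread counts copied from the EPYC 97X4 table in this article.
PARTS = [
    Epyc97x4("9754", 128, 256),
    Epyc97x4("9754S", 128, 128),
    Epyc97x4("9734", 112, 224),
]

SOCKETS_PER_SERVER = 2  # assumption: the article does not state a server layout


def threads_per_core(part: Epyc97x4) -> int:
    """Hardware threads exposed by each core."""
    return part.threads // part.cores


def vcpus_per_server(part: Epyc97x4, sockets: int = SOCKETS_PER_SERVER) -> int:
    """vCPUs per server, assuming one vCPU per hardware thread."""
    return part.threads * sockets


for part in PARTS:
    print(part.model, threads_per_core(part), vcpus_per_server(part))
```

Under these assumptions, the 9754 comes out at 512 vCPUs per server, while the 9754S exposes one thread per core and lands at 256.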

| Model | Cores | Max Threads | Default TDP (W) | Base Freq. (GHz) | Boost Freq. (GHz) | L3 Cache (MB) |
|---|---|---|---|---|---|---|
| 9684X | 96 | 192 | 400 | 2.55 | 3.70 | 1,152 |
| 9384X | 32 | 64 | 320 | 3.10 | 3.90 | 768 |
| 9184X | 16 | 32 | 320 | 3.55 | 4.20 | 768 |

The latest AMD EPYC Zen 4 processors equipped with 3D V-Cache, codenamed Genoa-X, have been identified as the leading x86 server CPU for technical computing in a recent SPEC.org report. They bring 3D V-Cache to Zen 4 chips with up to 96 cores and offer up to 1,152 MB of L3 cache, more than 1 GB. AMD claims these processors can significantly speed up product development, delivering up to double the design jobs per day while using fewer servers and less energy.
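The cache figures are easier to compare on a per-core basis. The sketch below uses only the core counts and L3 sizes from the two tables in this article; the dictionary and function names are illustrative, and it treats L3 as evenly divided across cores, which is a simplification of how the cache is actually shared.

```python
# (cores, L3 cache in MB) copied from the tables in this article.
L3_BY_MODEL = {
    "9754": (128, 256),
    "9684X": (96, 1152),
    "9384X": (32, 768),
    "9184X": (16, 768),
}


def l3_per_core_mb(cores: int, l3_mb: int) -> float:
    """Average L3 cache per core in MB (simplified: assumes an even split)."""
    return l3_mb / cores


for model, (cores, l3_mb) in L3_BY_MODEL.items():
    print(f"{model}: {l3_per_core_mb(cores, l3_mb):.0f} MB of L3 per core")
```

On these numbers, the 16-core 9184X averages 48 MB of L3 per core against 2 MB per core on the 128-core 9754, which illustrates how differently the two lines are tuned.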

Advancing the AI Platform

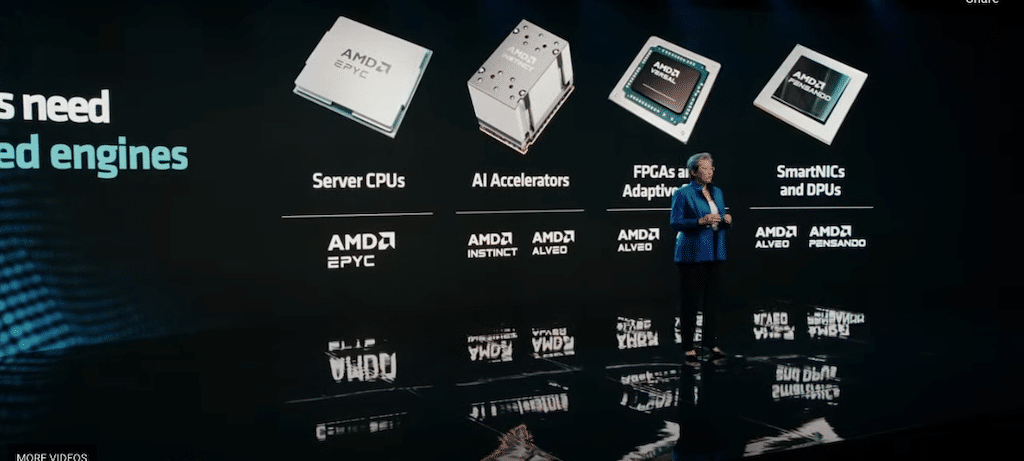

AMD presented its plan to enhance its AI platform by offering clients a range of hardware products, from cloud to edge to endpoint, and by collaborating extensively with the software industry to create adaptable and widespread AI solutions.

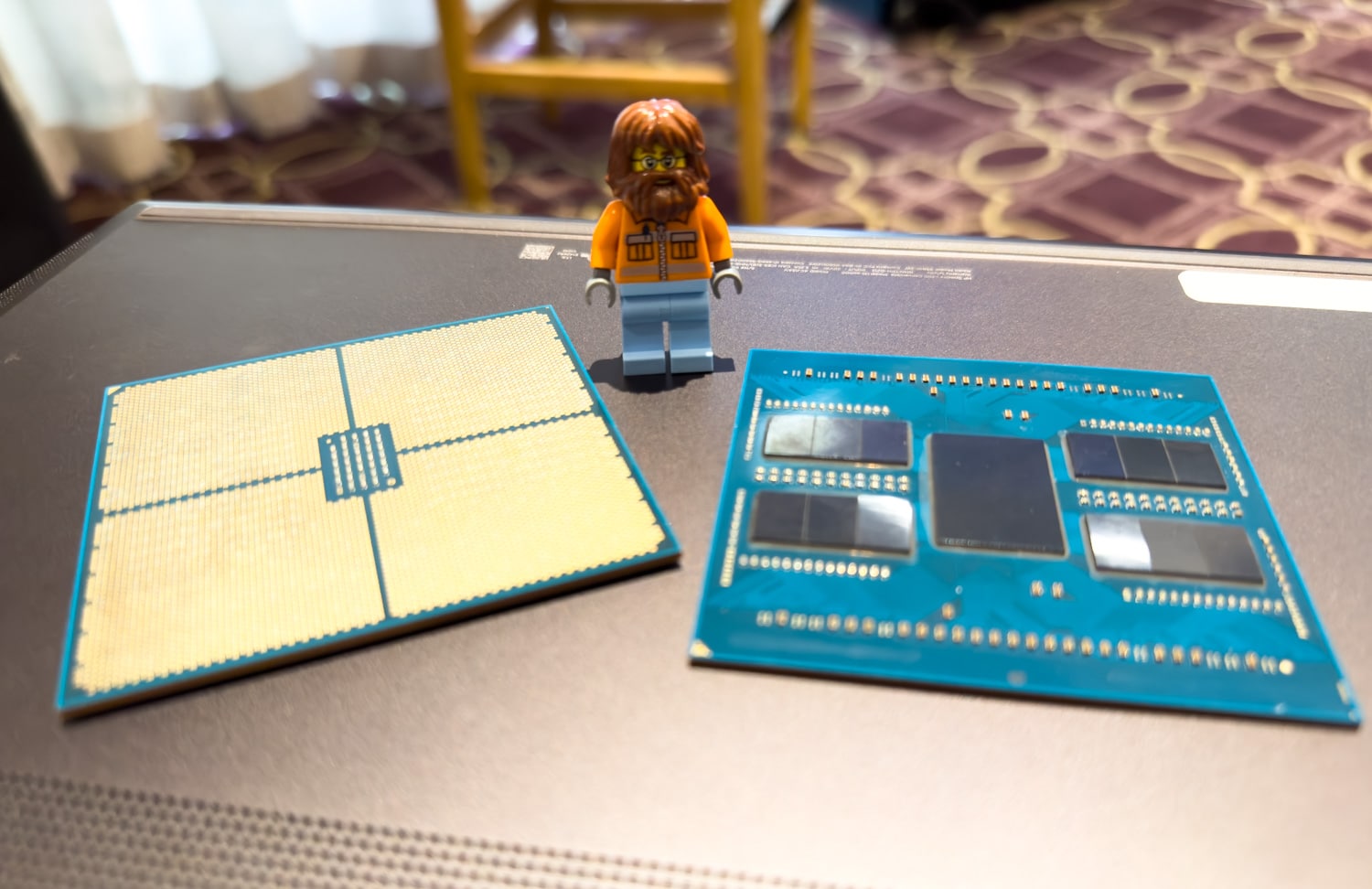

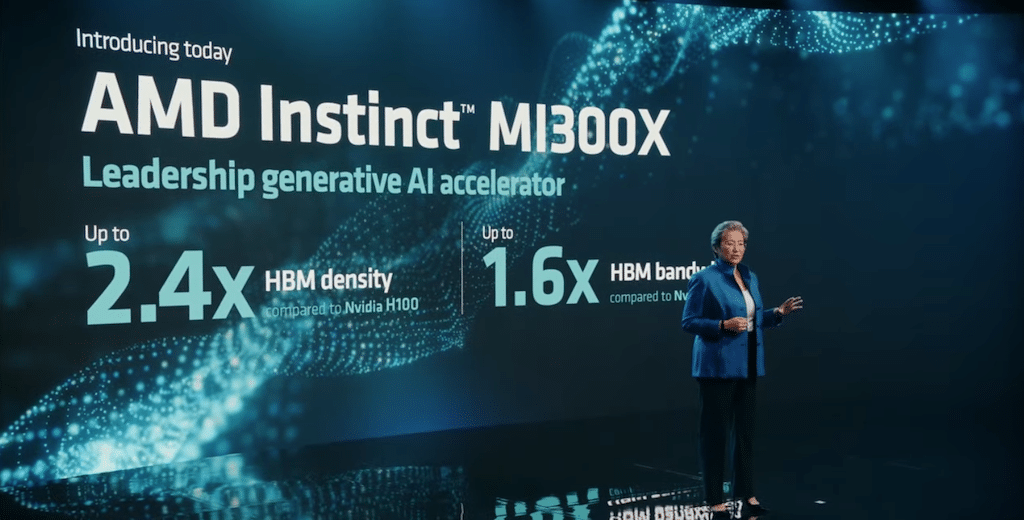

AMD shared details of the AMD Instinct MI300 Series accelerator family, including the AMD Instinct MI300X accelerator, an advanced accelerator for generative AI.

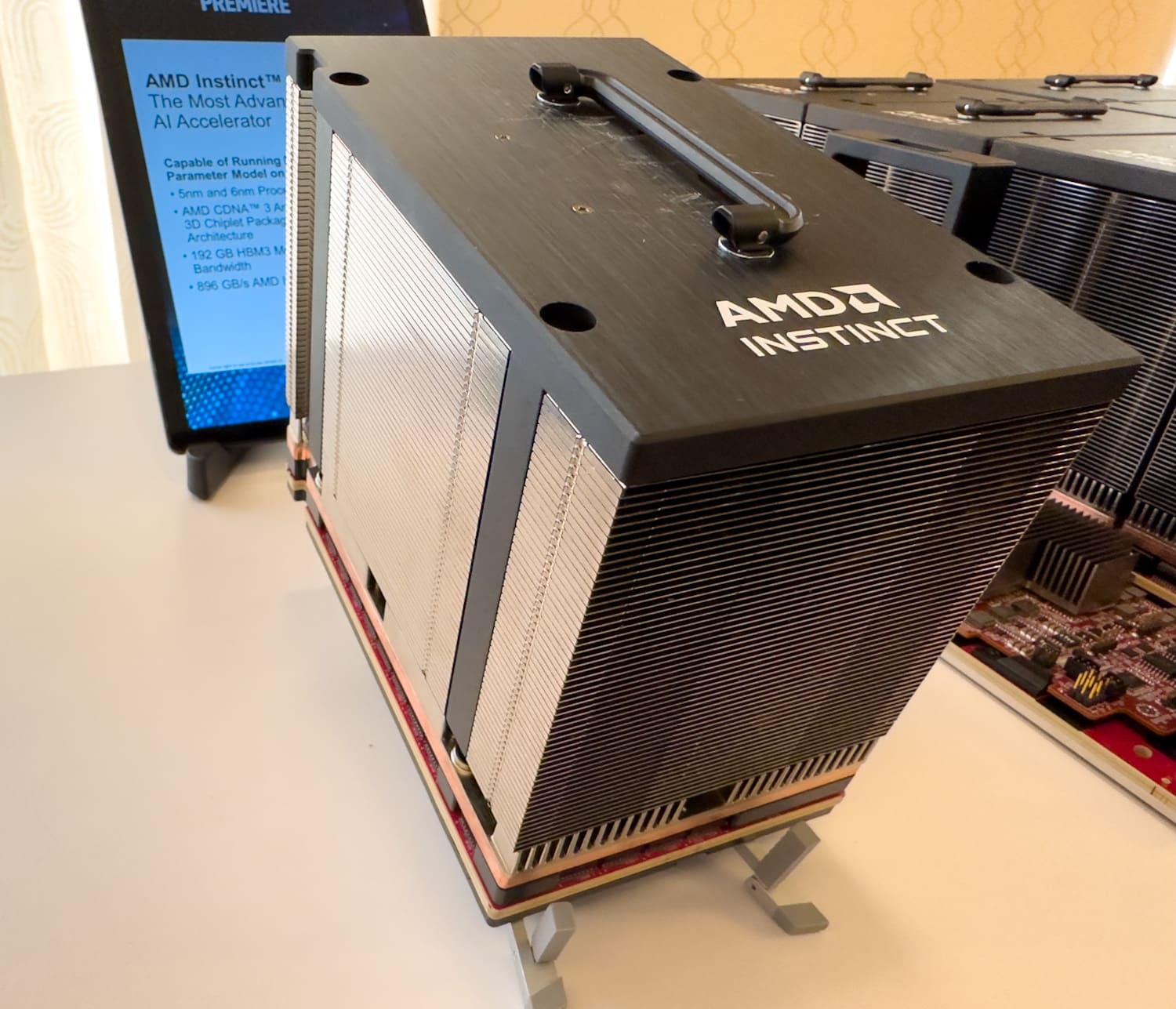

The introduction of the AMD Instinct MI300X accelerator is intriguing. This GPU accelerator is based on AMD’s next-gen CDNA 3 accelerator architecture and features up to 192 GB of HBM3 memory. This large memory pool is designed to tackle demanding AI workloads, particularly large language model (LLM) inference and generative AI. According to AMD, the MI300X’s capacity can accommodate large language models such as Falcon-40B on a single GPU accelerator. This represents a potentially transformative step forward for AI processing and efficiency.
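A back-of-the-envelope check shows why a 40-billion-parameter model can fit in 192 GB. The sketch below is an estimate under stated assumptions, not AMD's sizing method: it assumes 16-bit weights (2 bytes per parameter), takes the parameter count from the model's name, and counts weights only. The KV cache, activations, and runtime overhead are ignored, so real memory use will be higher. The function name is illustrative.

```python
HBM3_CAPACITY_GB = 192  # MI300X memory capacity cited in this article


def weight_footprint_gb(params_billions: float, bytes_per_param: int) -> float:
    """Approximate memory for model weights alone, in GB (10^9 bytes)."""
    # (params_billions * 1e9 params) * bytes_per_param / 1e9 bytes per GB
    return float(params_billions * bytes_per_param)


# Assumption: roughly 40 billion parameters stored as 16-bit values.
falcon_40b_gb = weight_footprint_gb(40, 2)

print(f"Weights: {falcon_40b_gb:.0f} GB of {HBM3_CAPACITY_GB} GB")
print("Fits on one accelerator:", falcon_40b_gb <= HBM3_CAPACITY_GB)
```

Under these assumptions the weights take about 80 GB, leaving a little over half of the memory for everything else the model needs at inference time.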

The AMD MI300X could unsettle NVIDIA’s dominance, as it is designed to challenge the reigning market leader, the NVIDIA H100. NVIDIA holds the majority of this lucrative and rapidly expanding market segment, with a share of around 60 to 70 percent in AI servers. An additional 20 percent comes from bespoke application-specific integrated circuits (ASICs) designed by cloud providers, including Amazon’s Inferentia and Trainium chips and Alphabet’s tensor processing units (TPUs). Introducing such a capable accelerator at this time should be good for the ecosystem as a whole, which is primarily tied to NVIDIA’s CUDA.

AMD Infinity Architecture Platform

AMD also introduced the AMD Infinity Architecture Platform, bringing together eight MI300X accelerators into an industry-standard design for improved generative AI inferencing and training.

The MI300X will begin sampling to key customers in Q3. The AMD Instinct MI300A, the first APU accelerator for HPC and AI workloads, is sampling to customers now.

Highlighting collaboration with industry leaders to bring together an open AI ecosystem, AMD showcased the AMD ROCm software ecosystem for data center accelerators.

A Networking Portfolio for Cloud and Enterprise

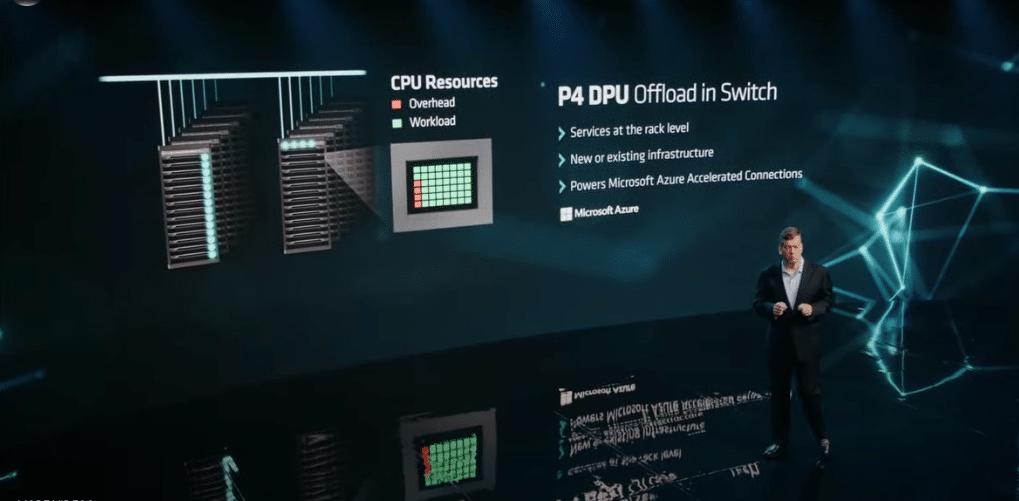

AMD also showcased its networking portfolio, which includes the AMD Pensando DPU, AMD Ultra Low Latency NICs, and AMD Adaptive NIC. AMD Pensando DPUs combine a software stack with “zero trust security” and a programmable packet processor to create an intelligent and performant DPU.

AMD highlighted the next generation of its DPU, codenamed “Giglio,” which aims to bring enhanced performance and power efficiency compared to current generation products and is expected to be available by the end of 2023.

The final announcement focused on the AMD Pensando Software-in-Silicon Developer Kit (SSDK), which lets customers rapidly develop or migrate services to deploy on the AMD Pensando P4 programmable DPU alongside the features already implemented on the AMD Pensando platform. With the SSDK, customers can put the AMD Pensando DPU to work and tailor network virtualization and security features within their infrastructure.
