Field Programmable Gate Arrays (FPGAs) allow vendors to create their own reconfigurable digital circuits. Whereas a logic gate has a fixed function, an FPGA has no defined function when it is manufactured, so it must be configured before it can be used in a circuit. Prices have fallen in recent years, bringing FPGAs within reach of nearly all organizations rather than only large enterprises, so even smaller businesses can now leverage the technology.

Intel has been a forerunner in this area, and the company continues to invest in FPGAs as a way to get more computational power out of server platforms. Intel sees tremendous value in this versatile technology, and we will likely hear more about FPGA support over the next few years as server vendors look at AI and big data solutions; Dell touched on this in its server announcement earlier this year. Intel aims to capitalize on the continued momentum of FPGAs in the marketplace, since FPGAs combined with Intel processors are well positioned to accelerate growth across a range of use cases. Simply put, Intel expects FPGAs to be transformative.

FPGAs combine logic, memory, and digital signal processing (DSP) blocks that can be programmed to execute virtually any function with high throughput and in real time. This makes FPGAs well suited to many critical cloud and edge applications. Because of this versatility, Intel dubs FPGAs the “Swiss Army knife of semiconductors”: they can be reprogrammed at any time, even after the products containing them have shipped to customers.
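To make "programmed to execute virtually any function" concrete, the Python sketch below models a lookup table (LUT), the small configurable truth table that FPGA logic blocks are built from. It is a software illustration only, not Intel's tooling or any vendor API; the `LUT` class and its `configure`/`evaluate` methods are hypothetical names chosen for this example. The LUT has no function until it is configured, and the same element can later be reconfigured to compute something else.

```python
from itertools import product
from typing import Callable, List, Optional


class LUT:
    """Hypothetical software model of a k-input FPGA lookup table."""

    def __init__(self, k: int) -> None:
        self.k = k
        # Like a freshly manufactured FPGA, the LUT starts with no function.
        self.table: Optional[List[int]] = None

    def configure(self, fn: Callable[..., int]) -> None:
        # "Program" the LUT: store fn's output bit for every input combination,
        # ordered so the first input is the most significant bit of the index.
        self.table = [fn(*bits) & 1 for bits in product((0, 1), repeat=self.k)]

    def evaluate(self, *bits: int) -> int:
        if self.table is None:
            raise RuntimeError("LUT has no function until it is configured")
        if len(bits) != self.k:
            raise ValueError(f"expected {self.k} inputs, got {len(bits)}")
        # Build the table index from the input bits (first input = MSB).
        index = 0
        for b in bits:
            index = (index << 1) | (b & 1)
        return self.table[index]


if __name__ == "__main__":
    lut = LUT(2)
    lut.configure(lambda a, b: a ^ b)  # configure as XOR
    print([lut.evaluate(a, b) for a, b in product((0, 1), repeat=2)])  # [0, 1, 1, 0]
    lut.configure(lambda a, b: a & b)  # reconfigure the same element as AND
    print([lut.evaluate(a, b) for a, b in product((0, 1), repeat=2)])  # [0, 0, 0, 1]
```

The design choice mirrors the hardware idea: instead of wiring a fixed gate, the function is stored as data (the truth table), which is why the same silicon can be repurposed after it ships. Real FPGAs chain thousands of such LUTs together with DSP and memory blocks.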

Intel FPGAs offer a low-latency, power-efficient path to real-time AI without having to batch inputs together before processing them. As a result, FPGA-powered AI delivers impressively high throughput on ResNet-50 (Residual Network-50), the industry-standard deep neural network, which requires roughly 8 billion calculations per image when run without batching.
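Figures like "roughly 8 billion calculations" are usually tallied layer by layer from multiply-accumulate (MAC) counts. The Python sketch below counts one layer under stated assumptions: it uses the standard ResNet-50 first convolution (7×7 kernel, 64 filters, stride 2, padding 3, on a 224×224 RGB image), parameters taken from the published ResNet-50 architecture rather than from this article, and the helper names are hypothetical. Summed over the whole network, ResNet-50 is commonly cited at around 4 billion MACs per image, or about 8 billion operations if each multiply and add is counted separately; we assume that is how the figure above was derived.

```python
def conv_output_size(size: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial output size of a 2D convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def conv2d_macs(h_out: int, w_out: int, c_out: int,
                k_h: int, k_w: int, c_in: int) -> int:
    """MACs for a dense (ungrouped) 2D convolution, ignoring bias terms.

    Each of the h_out * w_out * c_out output values needs k_h * k_w * c_in
    multiply-accumulates.
    """
    return h_out * w_out * c_out * k_h * k_w * c_in


if __name__ == "__main__":
    # Assumed ResNet-50 conv1: 7x7, 64 filters, stride 2, padding 3, 224x224x3 input.
    out = conv_output_size(224, kernel=7, stride=2, padding=3)
    macs = conv2d_macs(out, out, 64, 7, 7, 3)
    # Counting a multiply and an add as two separate operations doubles the total.
    print(out, macs, 2 * macs)  # 112 118013952 236027904
```

This single layer accounts for only a small slice of the network total, which is why an unbatched ResNet-50 inference still requires billions of operations for every image.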

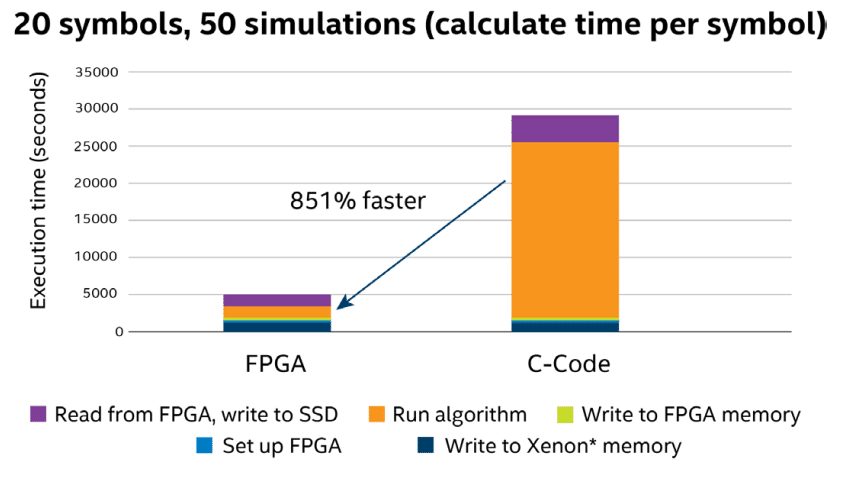

With an FPGA’s programmable hardware (e.g., logic, DSP, and embedded memory), users can implement any logic function they want and optimize it for area, performance, or power. Moreover, because the function is implemented directly in the hardware fabric, designs can be customized freely and can perform many operations in parallel. Intel states that this could yield performance improvements of orders of magnitude compared to traditional software or GPU design methodologies.

Intel isn’t the only major player driving FPGA adoption; Dell EMC and Fujitsu are also backing the move toward this technology in their enterprise applications. For example, Dell recently announced two new four-socket 14th-generation PowerEdge servers aimed at AI and machine learning, the PowerEdge R940xa and PowerEdge R840. Both support Intel Xeon Scalable processors and customizable Intel Arria 10 GX Programmable Acceleration Cards (PACs) for data-intensive computations. The Arria accelerator cards work alongside Intel Xeon processors across workloads such as real-time data analytics, AI, video transcoding, financial services, cybersecurity, and genomics. These use cases are experiencing exponential growth in data and benefit from FPGAs’ real-time and parallel processing capabilities.
