In the high-stakes arena of enterprise AI, tech titans are in a relentless race to unveil the next significant innovation in hardware. Dell Technologies recently introduced the Dell PowerEdge XE9680 for Generative AI and is flexing it in a new whitepaper. The PowerEdge XE9680, explicitly designed to tackle AI workloads, is not just a new entrant in the race – it’s a potential frontrunner.

Digging through the whitepaper, we looked at how this powerhouse redefines the AI landscape and why it might just be your business’s secret weapon to conquer the AI frontier.

Dell PowerEdge XE9680 for Generative AI

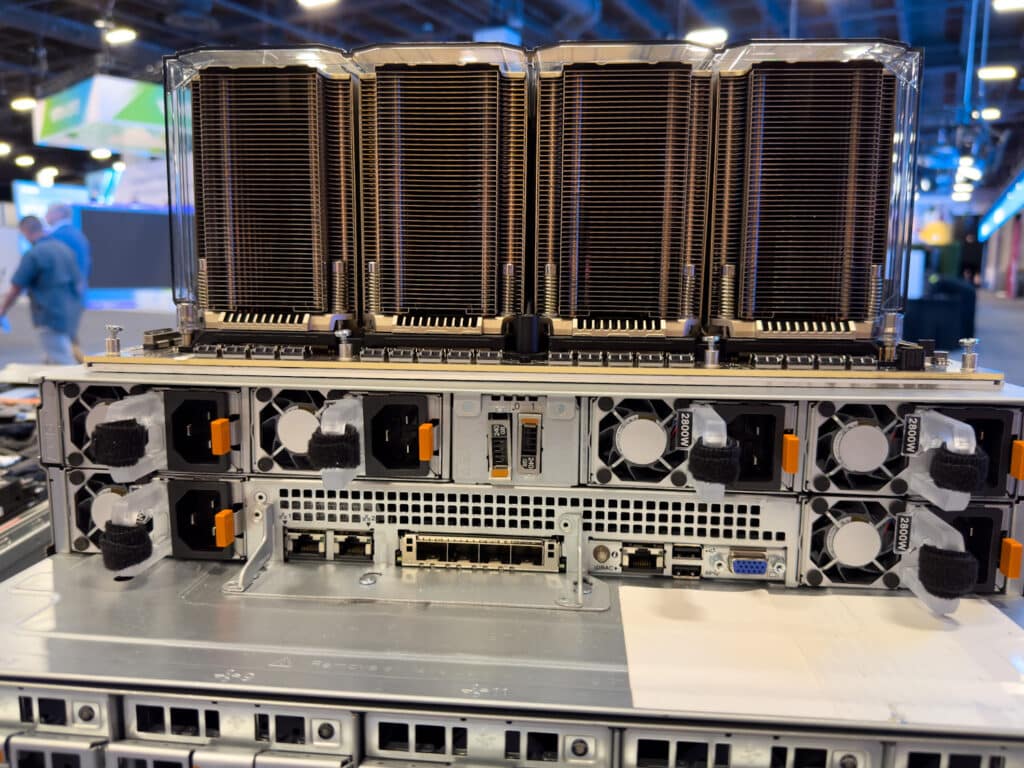

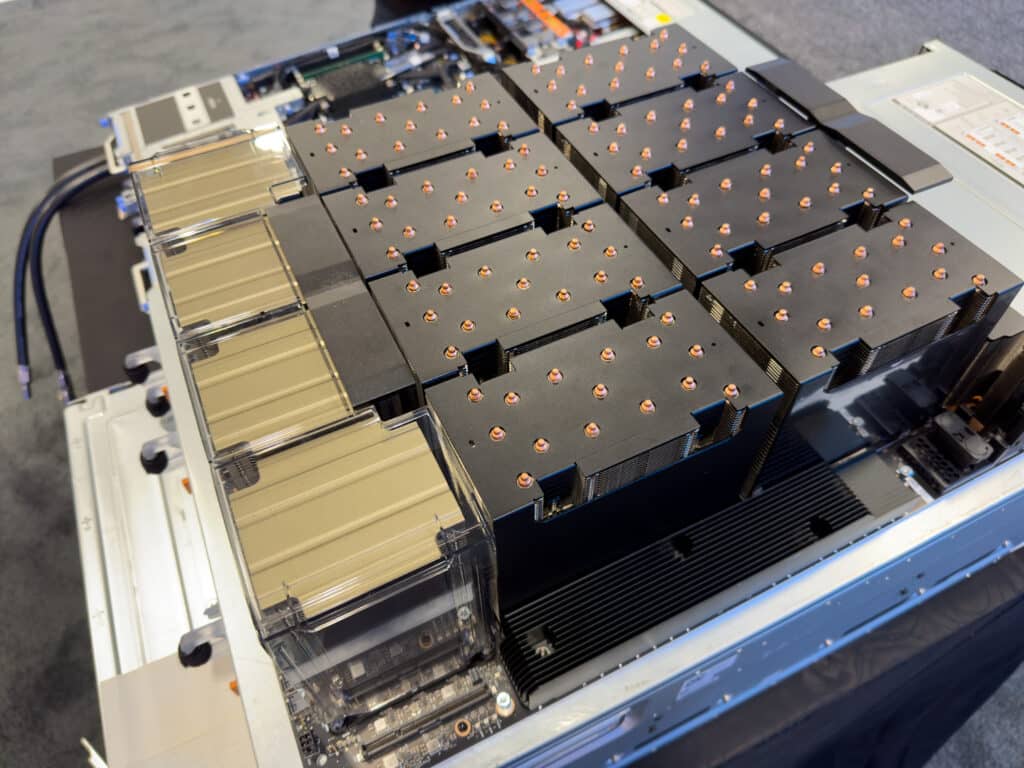

The Dell PowerEdge XE9680 is a GPU-dense rack server built for high-performance artificial intelligence workloads. It pairs two 4th Generation Intel Xeon Scalable processors, each with up to 56 cores, with either an NVIDIA HGX H100 or an NVIDIA HGX A100 platform carrying eight GPUs, all interconnected with NVIDIA NVLink technology.

The PowerEdge XE9680 has 32 DDR5 DIMM slots supporting speeds up to 4800 MT/s, for a maximum of 4 TB of RAM. Storage comes from up to eight 2.5-inch NVMe SSDs, with a maximum capacity of 122.88 TB. Security features include cryptographically signed firmware, Data at Rest Encryption (DRE), and Secure Boot, among others. The server is managed through the embedded iDRAC9 controller and supports a variety of management tools and integrations. It comes in a 6U rack form factor and weighs 235.89 lb (107 kg).
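As a quick sanity check on those maximums, the sketch below multiplies slot and bay counts by per-unit capacities. The 128 GB DIMM and 15.36 TB drive sizes are our assumptions, picked because they reproduce Dell's stated 4 TB and 122.88 TB figures; the article doesn't list the supported module or drive sizes.

```python
# Back-of-envelope check of the XE9680's stated memory and storage maximums.
# Slot/bay counts come from the spec text; per-unit sizes are ASSUMPTIONS
# chosen to match the stated totals, not values from Dell's spec sheet.
DIMM_SLOTS = 32
DIMM_SIZE_GB = 128       # assumption: largest DIMM size (binary GB)
DRIVE_BAYS = 8
DRIVE_SIZE_GB = 15_360   # assumption: 15.36 TB NVMe SSDs (decimal GB)


def max_memory_gb(slots: int = DIMM_SLOTS, dimm_gb: int = DIMM_SIZE_GB) -> int:
    """Total RAM when every DIMM slot is populated."""
    return slots * dimm_gb


def max_storage_gb(bays: int = DRIVE_BAYS, drive_gb: int = DRIVE_SIZE_GB) -> int:
    """Total raw NVMe capacity when every bay holds a drive."""
    return bays * drive_gb


# Memory is counted in binary units (1 TB = 1024 GB), storage in decimal units.
print(f"Memory:  {max_memory_gb()} GB ({max_memory_gb() // 1024} TB)")
print(f"Storage: {max_storage_gb() / 1000} TB")
```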

Dell PowerEdge XE9680 Specifications

The PowerEdge XE9680 is designed to handle AI workloads with massive memory footprints. Thanks to NVLink, each GPU gets high-speed access to the memory of the other GPUs in the system. This large, shared memory capacity should translate into higher performance, support for larger and more complex models, and the ability to work with bigger, more detailed datasets, improving accuracy and utility through larger-parameter models.
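To put "massive memory footprints" in rough numbers, here's a back-of-envelope sketch assuming eight 80 GB GPUs (the H100 capacity used in the Lambda comparison later in this article) and a model sharded across them over NVLink. It counts model weights only; real workloads also need room for activations, KV caches, and framework overhead, so treat the result as an upper bound rather than a sizing guide. The function names are hypothetical.

```python
# Rough upper bound on model size whose weights fit in the XE9680's GPU memory.
# ASSUMPTIONS: 8 GPUs with 80 GB each, model sharded across all of them,
# and nothing but weights stored in GPU memory.
GPUS_PER_SERVER = 8
HBM_PER_GPU_GB = 80  # assumption: 80 GB H100; A100s also ship in 40 GB variants

BYTES_PER_PARAM = {"fp32": 4, "bf16/fp16": 2, "int8": 1}


def pooled_gpu_memory_gb(gpus: int = GPUS_PER_SERVER,
                         per_gpu_gb: int = HBM_PER_GPU_GB) -> int:
    """Total GPU memory across the server, in nominal decimal GB."""
    return gpus * per_gpu_gb


def max_params_billions(memory_gb: float, bytes_per_param: int) -> float:
    """Billions of parameters whose weights alone fit in memory_gb.

    With 1 GB = 1e9 bytes, GB divided by bytes-per-parameter gives billions
    of parameters. Ignores activations, optimizer state, and KV cache.
    """
    return memory_gb / bytes_per_param


total = pooled_gpu_memory_gb()
for dtype, nbytes in BYTES_PER_PARAM.items():
    print(f"{dtype}: ~{max_params_billions(total, nbytes):.0f}B parameters in {total} GB")
```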

[Table: Model; Processor Capabilities; Physical Properties; Memory Support; I/O Capabilities; GPU Optimization; Artificial Intelligence Capabilities; Drive Support]

Image Generation Models: The Science of Art

Ever wished you could paint like Van Gogh or sketch like Da Vinci but end up with stick figures that wouldn’t even impress a kindergartener? Enter the world of Image Generation Models (IGMs), the machine learning equivalent of a prodigious artist, minus the eccentricities and berets.

IGMs are a subset of machine learning models designed to create new images. They fall within the broader field of generative modeling, which is all about learning and replicating patterns in data. Instead of generating numbers or text, however, these models are in the business of creating visuals. In recent months, IGMs have made news generating everything from new pieces of artwork to eerily realistic human faces.

These models have found their way into everything from art and entertainment to advertising and scientific research. They’re creating images of cells for medical diagnosis, simulating outer space, and generating selfies so realistic you could catfish yourself. As these models evolve, so do the compute requirements for running them quickly.

Dell PowerEdge XE9680 for Generative AI: Powering the Edge of Stable Diffusion

When generating new images during inference, diffusion models typically depend on large GPU clusters operating in sync and require extensive hardware runtime. However, Dell’s whitepaper shows that the PowerEdge XE9680 can significantly speed up these generative AI workloads.

That speedup makes it possible to produce dozens to hundreds of moderate-resolution images in just a few seconds. Dell also points out that the XE9680 can produce large, high-resolution images (Dell used 2,048 x 2,048) from a single text prompt in a matter of seconds.
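Why does diffusion inference need so much hardware? Each image is produced by running the denoising network (a UNet in Stable Diffusion) once per sampling step. The sketch below counts that work, assuming the Hugging Face Diffusers `StableDiffusionPipeline` defaults of 50 `num_inference_steps` with classifier-free guidance enabled, which evaluates a conditional and an unconditional input at every step. The article doesn't state the whitepaper's step count or guidance settings, so these numbers are illustrative, and `unet_forward_passes` is a hypothetical helper.

```python
# Illustrative count of denoising work for one batch of Stable Diffusion images.
# ASSUMPTIONS: 50 sampling steps (Diffusers' default) and classifier-free
# guidance, which evaluates a conditional and an unconditional input per step.


def unet_forward_passes(batch_size: int,
                        num_inference_steps: int = 50,
                        classifier_free_guidance: bool = True) -> int:
    """Image-level UNet evaluations needed to generate one batch.

    Diffusers runs the conditional and unconditional halves as one doubled
    batch, so this counts units of work rather than separate kernel launches.
    The final VAE decode (once per image) is not included.
    """
    evals_per_image_per_step = 2 if classifier_free_guidance else 1
    return batch_size * num_inference_steps * evals_per_image_per_step


for batch in (1, 32, 64):
    print(f"batch {batch:>2}: {unet_forward_passes(batch)} UNet evaluations")
```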

Image generated on the XE9680 from the text prompt “Portrait of happy dog, close up”

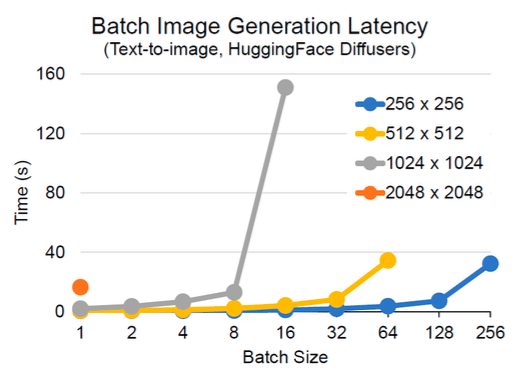

The whitepaper measures image generation latency for Stable Diffusion text-to-image inference using the Hugging Face Diffusers library on the PowerEdge XE9680, varying both batch size and image resolution. The XE9680 generated images at resolutions up to 2,048 x 2,048.

Memory demand on the infrastructure grew with batch size, so very large batches were achievable only with lower-resolution images such as 256 x 256. At very high resolutions such as 2,048 x 2,048, memory limits restricted generation to a batch size of one.
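A rough model shows why resolution, not batch size, hits the memory wall first. Assuming a Stable Diffusion 1.x/2.x-style model, the VAE shrinks each image side by a factor of 8, and the UNet's highest-resolution transformer blocks apply self-attention across every latent position. If that attention is computed naively (no memory-efficient kernel), its score matrix grows with the square of the token count, so quadrupling the image side multiplies it by 256. This is a simplified sketch, not a model of the whitepaper's actual memory usage, and the helper names are hypothetical.

```python
# Simplified model of how self-attention memory scales with resolution and batch.
# ASSUMPTIONS: SD 1.x/2.x-style VAE (8x downsampling per side) and naive
# attention at the UNet's top level that materializes an N x N score matrix.
VAE_DOWNSAMPLE = 8


def latent_tokens(height: int, width: int) -> int:
    """Spatial positions in the latent at the UNet's highest-resolution level."""
    return (height // VAE_DOWNSAMPLE) * (width // VAE_DOWNSAMPLE)


def attention_elements(height: int, width: int, batch: int) -> int:
    """Entries in one attention head's score matrix, summed over the batch."""
    n = latent_tokens(height, width)
    return batch * n * n


baseline = attention_elements(512, 512, 1)
for res, batch in [(256, 64), (512, 32), (2048, 1)]:
    ratio = attention_elements(res, res, batch) / baseline
    print(f"{res}x{res}, batch {batch}: {latent_tokens(res, res)} tokens, "
          f"{ratio:g}x the attention of one 512x512 image")
```

Under these assumptions, a single 2,048 x 2,048 image needs eight times the attention memory of a full batch of 32 images at 512 x 512, which is consistent with the batch-size-of-one limit at the top resolution.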

PowerEdge XE9680 batch image generation times

Focusing on batch generation at 256 x 256 and 512 x 512 resolutions highlights the XE9680’s ability to produce multiple images within seconds. That speed accelerates the cycle of evaluation, prompt tuning, and re-evaluation at the heart of creative design work. The results show that the Hugging Face Diffusers package on the Dell PowerEdge XE9680 can generate a batch of 32 images at 512 x 512 resolution in less than 10 seconds, and a batch of 64 images at 256 x 256 resolution in less than 5 seconds, enabling rapid prototyping and creative design cycles for businesses and professionals.
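Those reported times translate directly into minimum throughput figures. The sketch below does the arithmetic using only the two data points quoted above. Because Dell reports "less than" times, the results are lower bounds on images per second and upper bounds on average time per image; the function names are our own.

```python
# Convert the whitepaper's reported batch times into throughput bounds.
# Each entry: (images in the batch, reported upper bound on batch time in seconds).
REPORTED = {
    "512 x 512": (32, 10.0),
    "256 x 256": (64, 5.0),
}


def min_throughput(images: int, max_seconds: float) -> float:
    """Lower bound on images per second when a batch finishes in under max_seconds."""
    return images / max_seconds


def max_ms_per_image(images: int, max_seconds: float) -> float:
    """Upper bound on average wall-clock milliseconds per image in the batch."""
    return max_seconds * 1000 / images


for resolution, (images, seconds) in REPORTED.items():
    print(f"{resolution}: > {min_throughput(images, seconds):.1f} images/s, "
          f"< {max_ms_per_image(images, seconds):.1f} ms per image")
```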

Image Generation Benchmarks

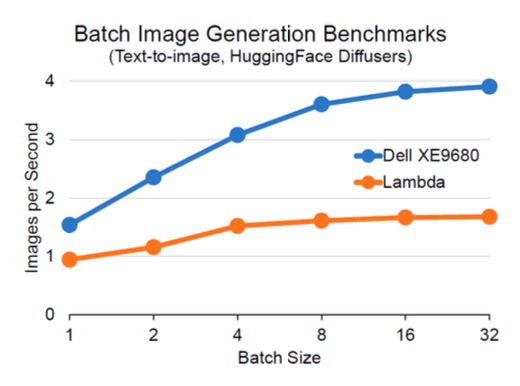

In its analysis, the whitepaper compares the Dell PowerEdge XE9680 against the image generation latency benchmarks published by Lambda. The comparison pits a single NVIDIA H100 GPU with 80 GB of memory in the XE9680 against the NVIDIA H100 GPU Stable Diffusion benchmark released by the ML Labs team at Lambda.

Dell PowerEdge XE9680 image generation latency

The XE9680 outperformed Lambda’s benchmarks, achieving roughly double the throughput. Dell presents its findings in the same format as Lambda’s and uses Lambda’s published NVIDIA H100 data for the comparison, with identical software conditions on both sides. The only difference was the hardware: Lambda used a PCIe form factor NVIDIA H100 GPU, while the Dell PowerEdge XE9680 uses the HGX form factor, which could explain some of the performance gap.

Business Impact of Image Generation Models

Image generation through Stable Diffusion lets creative professionals prototype and refine their work quickly, making marketing and advertising campaigns more efficient and shortening time to market. Professionals in architecture, advertising, marketing, creative services, film, special effects, photography, and art have already adopted this technology.

Generative AI technologies such as Stable Diffusion can transform enterprise operations, especially where speed is critical. In product design and development, these models can rapidly generate visual prototypes, letting design teams evaluate and refine concepts in real time. This accelerates the design process and reduces time to market.

One of the most interesting applications comes from the retail industry. AI could quickly generate realistic images of various product configurations or color options based on customer preferences, such as “Show me this chair, but in red” or “How would that sofa look with leopard print?” For real estate and construction businesses, generative AI could swiftly create visualizations of new buildings or remodeling projects, aiding in planning and sales presentations.

Within enterprise training and education, AI-generated images can create realistic scenarios for employee training programs, improving learning outcomes. Faster image generation can also make businesses more agile, helping them respond more effectively to market changes, customer needs, and internal requirements.

Closing Thoughts

The Dell PowerEdge XE9680 is a high-performance server that can easily handle demanding AI workloads (at least for now). While the XE9680 targets the booming AI market, a box this powerful could also find intriguing uses in analytics and data processing. Notably, the XE9680 underpins Dell’s Project Helix, a service that helps organizations bring their AI projects online faster.

Engage with StorageReview
