Supermicro has launched a new set of solutions to advance the deployment of generative AI, marking a significant step in the evolution of infrastructure for large language models (LLMs). These SuperCluster solutions are designed as core components to support current and future AI demands.

Supermicro has launched a new set of solutions to advance the deployment of generative AI, marking a significant step in the evolution of infrastructure for large language models (LLMs). These SuperCluster solutions are designed as core components to support current and future AI demands.

This new release includes three distinct SuperCluster configurations tailored for generative AI tasks. Options include a 4U liquid-cooled system and an 8U air-cooled setup engineered for intensive LLM training and high-capacity LLM inference. Additionally, a 1U air-cooled variant featuring Supermicro NVIDIA MGX systems is geared toward cloud-scale inference applications. These systems are built to deliver unparalleled performance in LLM training, boasting features such as large batch sizes and substantial volume handling capabilities for LLM inference.

Expanding Capacity for AI Clusters

With the ability to produce up to 5,000 racks per month, Supermicro is positioned to rapidly supply complete generative AI clusters, promising faster delivery speeds to its clients. A 64-node cluster, as an example, can incorporate 512 NVIDIA HGX H200 GPUs, utilizing high-speed NVIDIA Quantum-2 InfiniBand and Spectrum-X Ethernet networking to achieve a robust AI training environment. In conjunction with NVIDIA AI Enterprise software, this config is an ideal solution for enterprise and cloud infrastructures aiming to train sophisticated LLMs with trillions of parameters.

Innovating Cooling and Performance

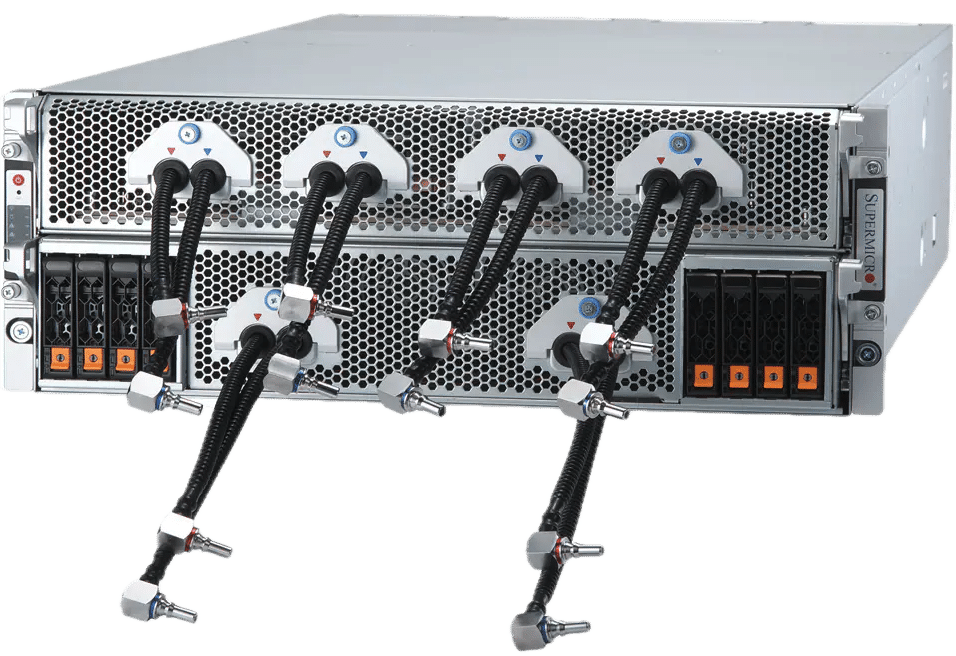

The new Supermicro 4U NVIDIA HGX H100/H200 8-GPU systems leverage liquid cooling to double the density compared to the 8U air-cooled alternatives, resulting in lower energy consumption and a decrease in the total cost of ownership for data centers. These systems support next-generation NVIDIA Blackwell architecture-based GPUs, featuring efficient cooling technologies that maintain optimal temperatures for maximum performance.

SuperCluster Specifications

The Supermicro SuperClusters are scalable solutions for training massive foundation models and creating cloud-scale LLM inference infrastructures. With a highly scalable network architecture, these systems can expand from 32 nodes to thousands, ensuring seamless scalability. Integrating advanced liquid cooling and comprehensive testing processes guarantees operational efficiency and effectiveness.

Supermicro details two primary configurations: the SuperCluster with 4U Liquid-cooled System, capable of supporting up to 512 GPUs in a compact footprint, and the SuperCluster with 1U Air-cooled NVIDIA MGX System, designed for high-volume, low-latency inference tasks. Both configurations are highlighted for their high network performance, which is essential for LLM training and inference.

Here is a quick rundown of their specifications:

SuperCluster with 4U Liquid-cooled System in 5 Racks or 8U Air-cooled System in 9 Racks

- 256 NVIDIA H100/H200 Tensor Core GPUs in one scalable unit

- Liquid cooling enabling 512 GPUs, 64-nodes, in the same footprint as the air-cooled 256 GPUs, 32-node solution

- 20TB of HBM3 with NVIDIA H100 or 36TB of HBM3e with NVIDIA H200 in one scalable unit

- 1:1 networking delivers up to 400 Gbps to each GPU to enable GPUDirect RDMA and Storage for training large language models with up to trillions of parameters

- 400G InfiniBand or 400GbE Ethernet switch fabrics with highly scalable spine-leaf network topology, including NVIDIA Quantum-2 InfiniBand and NVIDIA Spectrum-X Ethernet Platform.

- Customizable AI data pipeline storage fabric with industry-leading parallel file system options

- NVIDIA AI Enterprise 5.0 software, which brings support for new NVIDIA NIM inference microservices that accelerate the deployment of AI models at scale

SuperCluster with 1U Air-cooled NVIDIA MGX System in 9 Racks

- 256 GH200 Grace Hopper Superchips in one scalable unit

- Up to 144GB of HBM3e + 480GB of LPDDR5X unified memory suitable for cloud-scale, high-volume, low-latency, and high batch size inference, able to fit a 70B+ parameter model in one node.

- 400G InfiniBand or 400G Ethernet switch fabrics with highly scalable spine-leaf network topology

- Up to 8 built-in E1.S NVMe storage devices per node

- Customizable AI data pipeline storage fabric with NVIDIA BlueField-3 DPUs and industry-leading parallel file system options to deliver high-throughput and low-latency storage access to each GPU

- NVIDIA AI Enterprise 5.0 software

Supermicro Expands AI Portfolio with New Systems and Racks Using NVIDIA Blackwell Architecture

Supermicro is also announcing the expansion of its AI system offerings, including the latest in NVIDIA’s data center innovations aimed at large-scale generative AI. Among these new technologies are the NVIDIA GB200 Grace Blackwell Superchip and the B200 and B100 Tensor Core GPUs.

To accommodate these advancements, Supermicro is seamlessly upgrading its existing NVIDIA HGX H100/H200 8-GPU systems to integrate the NVIDIA HGX B100 8-GPU and B200. Furthermore, the NVIDIA HGX lineup will be bolstered with the new models featuring the NVIDIA GB200, including a comprehensive rack-level solution equipped with 72 NVIDIA Blackwell GPUs. In addition to these advancements, Supermicro is introducing a new 4U NVIDIA HGX B200 8-GPU liquid-cooled system, leveraging direct-to-chip liquid cooling technology to handle the increased thermal demands of the latest GPUs and unlock the full performance capabilities of NVIDIA’s Blackwell technology.

The new Supermicro’s GPU-optimized systems will soon be available, fully compatible with the NVIDIA Blackwell B200 and B100 Tensor Core GPUs and certified for the latest NVIDIA AI Enterprise software. The Supermicro lineup includes diverse configurations, from NVIDIA HGX B100 and B200 8-GPU systems to SuperBlades capable of housing up to 20 B100 GPUs, ensuring versatility and high performance across a wide range of AI applications. These systems include first-to-market NVIDIA HGX B200 and B100 8-GPU models featuring advanced NVIDIA NVLink interconnect technology. Supermicro indicates they are poised to deliver training outcomes for LLMs (3x faster) and support scalable clustering for demanding AI workloads, marking a significant leap forward in AI computational efficiency and performance.

Supermicro Liquid Cooling Technology

Engage with StorageReview

Newsletter | YouTube | Podcast iTunes/Spotify | Instagram | Twitter | TikTok | RSS Feed