At Computex 2024, ASRock Rack unveiled an array of scale-up servers built on the NVIDIA Blackwell architecture. The company showcased several systems designed to meet the demands of AI, HPC, and data center workloads.

At the center of ASRock Rack’s announcement was the ORV3 NVIDIA GB200 NVL72, a liquid-cooled rack solution. This system is built around the recently announced NVIDIA GB200 NVL72 scale-up system, which integrates 36 NVIDIA Grace CPUs and 72 NVIDIA Blackwell Tensor Core GPUs using NVIDIA NVLink technology. This configuration forms a massive GPU unit capable of faster real-time large language model (LLM) inference while reducing the total cost of ownership (TCO).

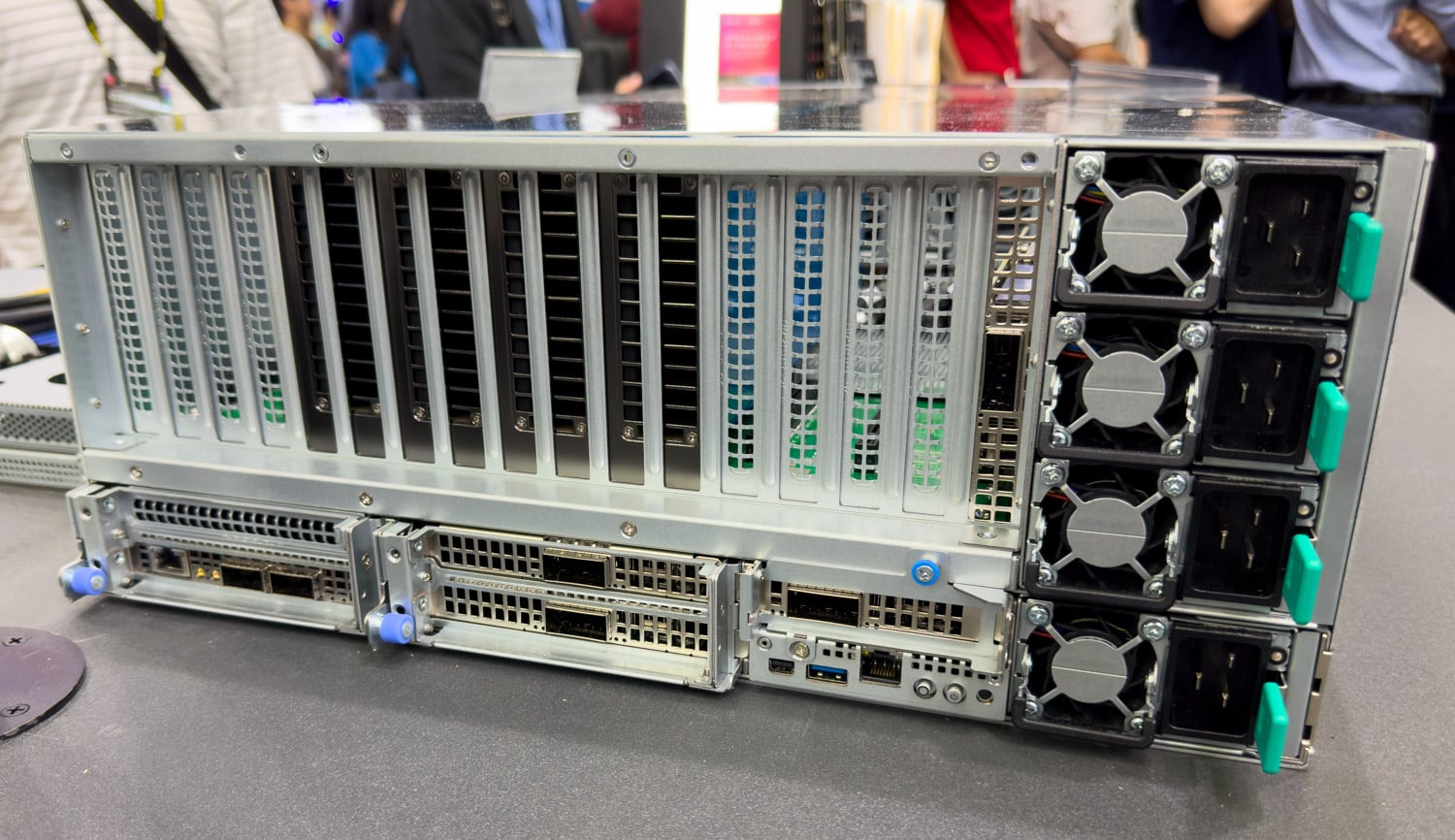

In addition to the ORV3 system, ASRock Rack introduced the 6U8X-EGS2 NVIDIA HGX B100 and 6U8X-GNR2/DLC NVIDIA HGX B200 advanced server models. These servers are equipped with the NVIDIA HGX B100 and B200 8-GPU systems, respectively.

The NVIDIA HGX B100 is a drop-in replacement for existing NVIDIA HGX H100 infrastructure, easing the transition. The 6U8X-GNR2/DLC NVIDIA HGX B200 uses direct-to-chip liquid cooling, allowing the system to handle the thermal design power (TDP) of NVIDIA Blackwell GPUs within a 6U rackmount design. For those without liquid cooling infrastructure, ASRock Rack plans to introduce the air-cooled 8U8X-GNR2 NVIDIA HGX B200, also equipped with the NVIDIA HGX B200 8-GPU system for high-performance real-time inference on trillion-parameter models.

Enhanced Connectivity

All ASRock Rack NVIDIA HGX servers support up to eight NVIDIA BlueField-3 SuperNICs, leveraging the NVIDIA Spectrum-X platform, an end-to-end AI-optimized Ethernet solution. These systems will also be certified for the full-stack NVIDIA AI and accelerated computing platform, including NVIDIA NIM inference microservices, as part of the NVIDIA AI Enterprise software suite for generative AI.

Weishi Sa, President of ASRock Rack, emphasized the company’s commitment to providing top-tier data center solutions powered by the NVIDIA Blackwell architecture. “We are showcasing data center solutions for the most demanding workloads in LLM training and generative AI inference. We will continue expanding our portfolio to bring the advantages of the NVIDIA Blackwell architecture to mainstream LLM inference and data processing,” said Sa.

ASRock Rack is developing new products based on the NVIDIA GB200 NVL2 to extend the benefits of the NVIDIA Blackwell architecture to scale-out configurations, ensuring seamless integration with existing data center infrastructures.

Kaustubh Sanghani, Vice President of GPU Product Management at NVIDIA, highlighted the collaboration with ASRock Rack. He said, “Working with ASRock Rack, we are offering enterprises a powerful AI ecosystem that seamlessly integrates hardware and software to deliver groundbreaking performance and scalability, helping push the boundaries of AI and high-performance computing.”

NVIDIA MGX Modular Reference Architecture

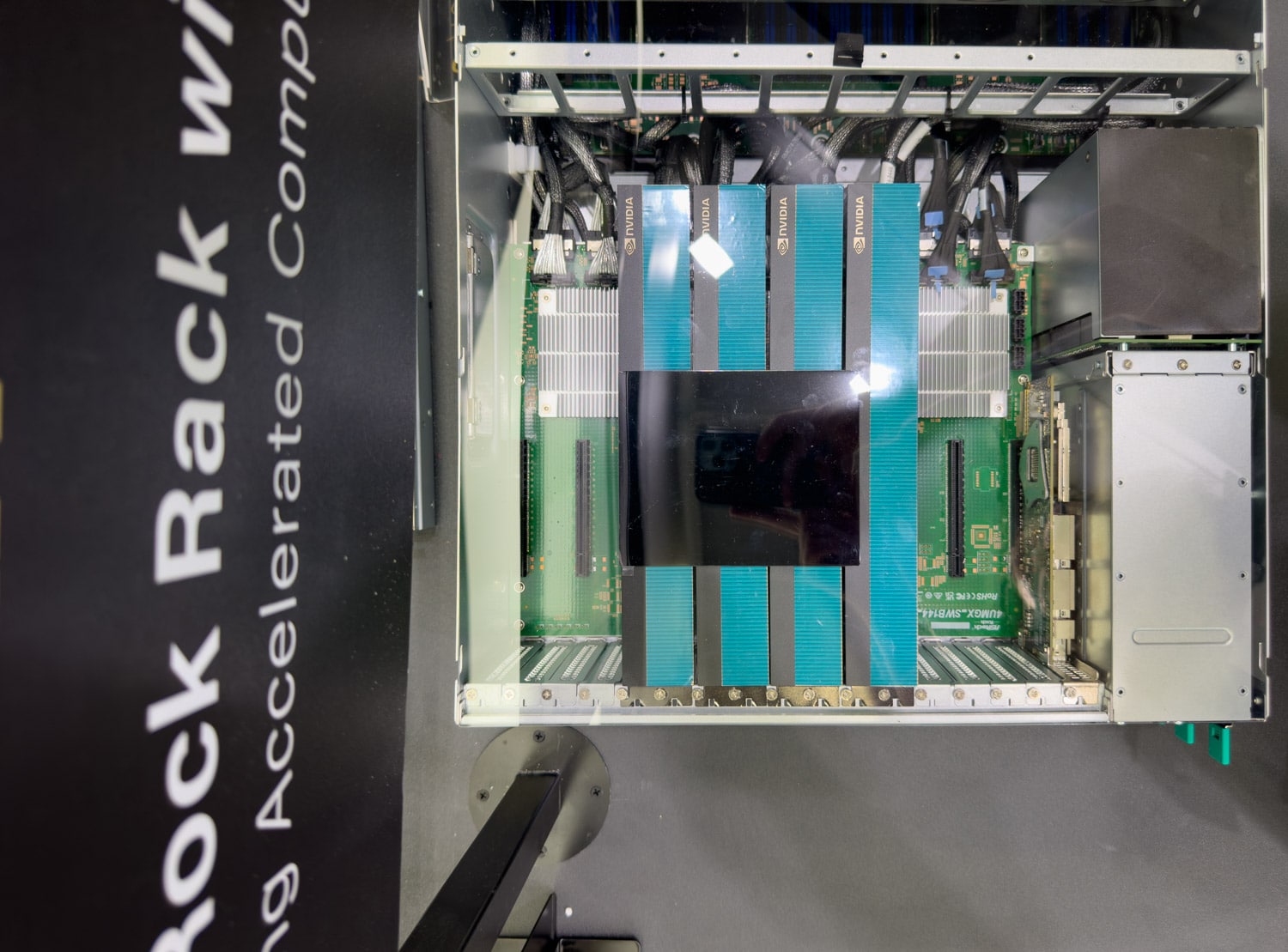

In addition to the new products, ASRock Rack presented the 4UMGX-GNR2, a dual-socket GPU server compliant with the NVIDIA MGX 4U architecture. This server supports up to eight FHFL dual-slot GPUs, such as the NVIDIA H200 NVL Tensor Core GPU, making it suitable for mainstream enterprise deployments. It features five FHHL PCIe 5.0 x16 slots and one HHHL PCIe 5.0 x16 slot, supporting NVIDIA BlueField-3 DPUs and NVIDIA ConnectX®-7 NICs for multiple 200Gb/s or 400Gb/s network connections. The server also has 16 hot-swap drive bays for E1.S (PCIe 5.0 x4) SSDs.

One of the most interesting aspects of the ASRock Rack MGX platform is its E1.S storage layout. Eight of the 16 E1.S drives attach directly to the CPU, while the other eight connect to the same PCIe switch as the GPU backplane. That arrangement opens up compelling use cases for NVIDIA GPUDirect Storage, since data can move between those drives and the GPUs without a bounce through CPU memory.
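For a rough sense of scale, the theoretical link bandwidth of the switch-attached drives can be worked out from the PCIe 5.0 figures alone. The sketch below is back-of-the-envelope arithmetic, not a benchmark: it assumes the standard PCIe 5.0 rate of 32 GT/s per lane with 128b/130b encoding, ignores protocol overhead, and uses the 8/8 drive split described above. The function and variable names are illustrative only. Real throughput will depend on the SSDs themselves and on the switch uplink topology, which ASRock Rack has not detailed here.

```python
# Theoretical PCIe 5.0 link bandwidth per direction, before protocol overhead.
PCIE5_GT_PER_S = 32.0             # raw transfer rate per lane (GT/s)
ENCODING_EFFICIENCY = 128 / 130   # 128b/130b line encoding


def link_gb_per_s(lanes: int) -> float:
    """Return the theoretical one-direction bandwidth in GB/s for a PCIe 5.0 link."""
    return PCIE5_GT_PER_S * ENCODING_EFFICIENCY * lanes / 8  # bits -> bytes


# Drive split as described in the article: 16 E1.S bays, half behind the PCIe switch.
DRIVES_ON_SWITCH = 8

per_drive = link_gb_per_s(4)                 # each E1.S bay is PCIe 5.0 x4
switch_side_total = DRIVES_ON_SWITCH * per_drive
x16_slot = link_gb_per_s(16)                 # for comparison: one x16 GPU or NIC slot

print(f"Per E1.S drive (x4):        {per_drive:.2f} GB/s")
print(f"Eight switch-side drives:   {switch_side_total:.2f} GB/s")
print(f"One PCIe 5.0 x16 slot:      {x16_slot:.2f} GB/s")
```

On paper, the eight switch-attached drives add up to roughly two x16 links' worth of bandwidth, which is why placing them on the same switch as the GPUs matters for GPUDirect Storage workloads.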

The ASRock Rack booth is expected to show off more advanced server systems, including the MECAI-NVIDIA GH200 Grace Hopper Superchip server, which ASRock Rack launched earlier in the year. ASRock Rack’s new server portfolio, powered by NVIDIA’s Blackwell architecture, represents a significant advancement in data center and AI technologies. These systems offer exceptional performance, flexibility, and scalability, meeting the needs of enterprises looking to push the boundaries of AI and high-performance computing.
