The AMD Zen 5 architecture employs a modular design that lets AMD build CPUs for desktop, server, client, and embedded systems.

AMD’s 2024 Tech Day covered a lot of ground, particularly the latest Zen 5 CPU architecture and the AI-centric XDNA 2 architecture. The event emphasized AMD’s aim to improve AI efficiency, performance per watt, and integration across multiple applications as it competes for leadership in high-performance computing.

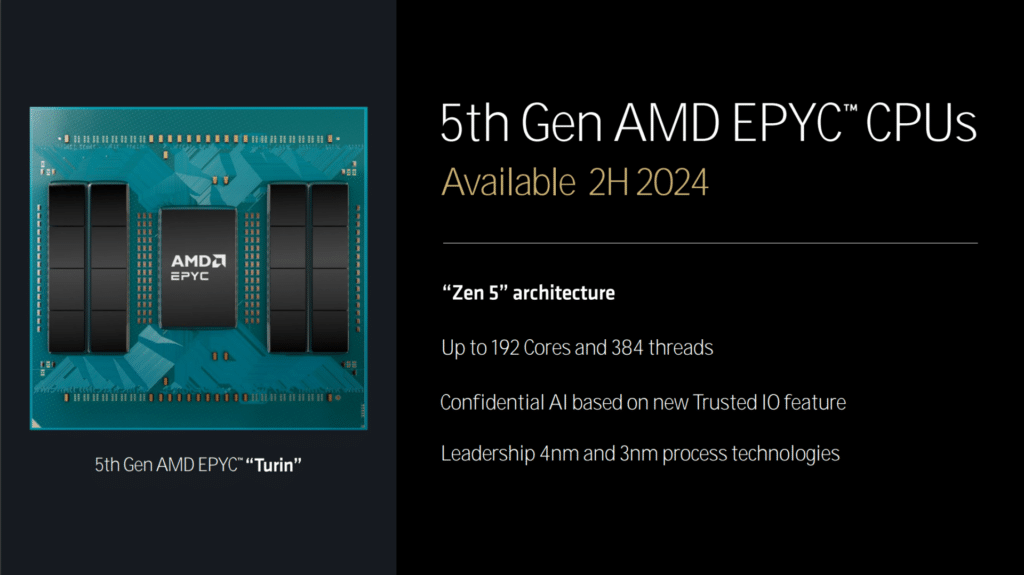

Strategic collaboration with Microsoft set the stage for AMD’s leadership in AI PC experiences, offering unprecedented efficiency, performance, and integration across a wide range of applications. The 5th Gen EPYC CPUs, with up to 192 cores and 384 threads, promise significant gains in power efficiency and AI acceleration. With advanced branch prediction, dual decode pipes, and a new Math Acceleration Unit, AMD says Zen 5 delivers a 35% improvement in single-core AES-XTS performance and a 32% boost in machine learning tasks over its predecessor. As AMD continues to push the boundaries of processing power and efficiency, the Zen 5 architecture stands poised to revolutionize the data center and server markets.

The AMD Zen 5 architecture employs a modular design, allowing AMD to create products tailored for desktop, server, client, and embedded applications. Building on 4nm and 3nm process technologies lets Zen 5-based products deliver optimized performance and power efficiency across diverse use cases.

AMD Zen 5

At AMD’s 2024 Tech Day, Mark Papermaster unveiled significant advancements in the Zen 5 architecture, particularly spotlighting the 5th Generation EPYC CPUs. The EPYC line, due for release in the second half of 2024, promises gains in both performance and efficiency, pushing core density and throughput in the server and data center markets.

The 5th Gen EPYC CPUs have significantly increased core count and threading capability. These enhancements also include improvements in power efficiency, which were made possible through a continued partnership with TSMC and an optimized metal stack. The latter has notably enhanced thermal and electrical performance. The architecture leverages advanced AI acceleration by introducing a new Math Acceleration Unit promising up to 35% improvement in single-core AES-XTS performance and up to 32% in single-core machine learning tasks compared to Zen 4.

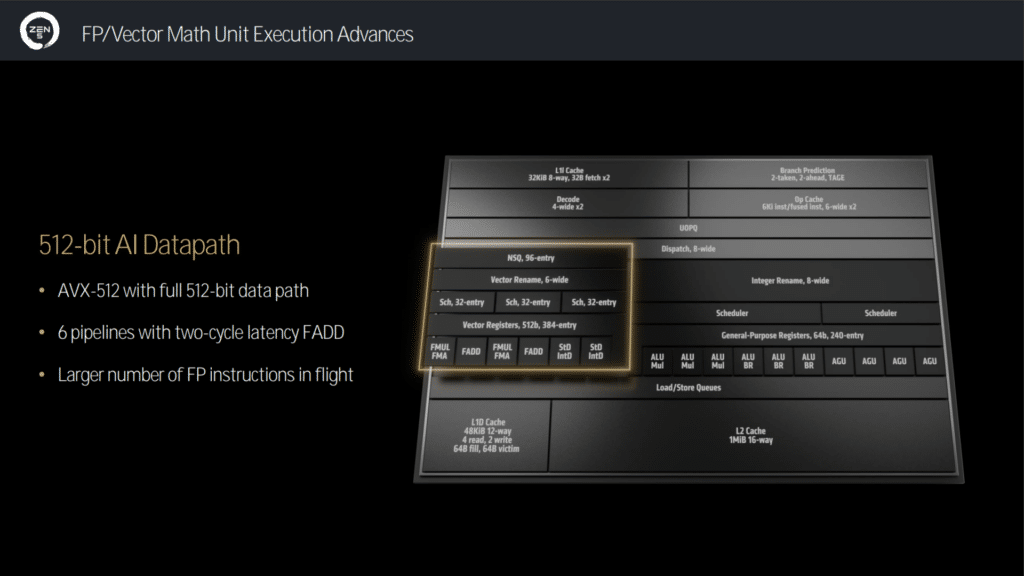

The architectural advancements in Zen 5 are comprehensive. The instruction fetch and decode stages have been enhanced with advanced branch prediction and dual decode pipes to reduce latency and improve accuracy. The integer execution units see a substantial upgrade with 8-wide dispatch/retire capabilities and a more unified ALU scheduler, all within a larger execution window. The load/store advancements include a 48KB 12-way L1 data cache with double the maximum bandwidth to both the L1 cache and the Floating-point Unit, which is crucial for data-heavy operations.
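To make the cache figures above concrete, the following is a minimal sketch of how a 48KB 12-way set-associative cache breaks down into sets. The 64-byte cache line is an assumption (it is the usual size on x86 processors but is not stated in the presentation), and the function name `cache_sets` is illustrative only.

```python
def cache_sets(size_bytes: int, ways: int, line_bytes: int = 64) -> int:
    """Return the number of sets in a set-associative cache.

    line_bytes=64 is an assumed cache-line size, not a figure from AMD.
    """
    bytes_per_set = ways * line_bytes
    if size_bytes % bytes_per_set != 0:
        raise ValueError("cache size must be a whole number of sets")
    return size_bytes // bytes_per_set


# 48KB, 12-way L1 data cache as described for Zen 5.
sets = cache_sets(48 * 1024, ways=12)

# Address bits used to select a set and a byte within a line.
index_bits = sets.bit_length() - 1
offset_bits = (64).bit_length() - 1

print(sets, index_bits, offset_bits)  # 64 6 6
```

Under that assumption the cache has 64 sets, so the set index and line offset together use 12 address bits. Raising associativity from 8 to 12 ways is one way to grow a cache from 32KB to 48KB while keeping the same number of sets.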

The Zen 5 architecture also includes significant improvements in data bandwidth. Its ability to keep a larger number of floating-point instructions in flight, combined with AVX-512 on a full 512-bit data path, yields substantial performance gains in AI and vector workloads.

Floating-point and vector math execution have also seen significant improvements. AVX-512 support, with a full 512-bit data path and six pipelines (which offer two-cycle latency for floating-point add operations), significantly enhances the capability to manage concurrent floating-point instructions. This particularly benefits vector and AI workloads, enabling significant performance improvements in machine learning and data-intensive tasks. It contrasts with Zen 4, where AMD “double-pumped” a 256-bit path to execute 512-bit operations.
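The difference between a native 512-bit data path and a double-pumped 256-bit one can be illustrated with a deliberately simplified issue-cycle model. This is a sketch, not a description of AMD's pipelines: it ignores latency, memory bandwidth, clock speed, and scheduling, and the function name and the single-pipe default are assumptions made for illustration.

```python
import math


def issue_cycles(num_ops_512: int, datapath_bits: int, pipes: int = 1) -> int:
    """Cycles needed to issue `num_ops_512` 512-bit vector operations.

    A data path narrower than 512 bits needs several passes per operation
    (two passes for 256 bits, i.e. "double pumping").
    """
    passes_per_op = math.ceil(512 / datapath_bits)
    return math.ceil(num_ops_512 * passes_per_op / pipes)


double_pumped = issue_cycles(1000, datapath_bits=256)  # Zen 4-style approach
native = issue_cycles(1000, datapath_bits=512)         # full 512-bit path

print(double_pumped, native)  # 2000 1000
```

In this toy model the native path halves the issue cycles for the same work, which is the intuition behind the peak-throughput gain. Real-world speedups depend on how well the rest of the core and the memory system keep the vector units fed.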

Zen 5 brings a 16% average IPC uplift for desktop and mobile processors over its predecessor, Zen 4. This is achieved through architectural refinements, including wider dispatch and execution units, increased data bandwidth, and enhanced prefetching algorithms. The IPC gains translate into real-world performance improvements across various applications, from gaming to content creation and machine learning.
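As a rough worked example of what an IPC uplift means, single-thread performance can be approximated as IPC multiplied by clock frequency. The sketch below applies AMD's quoted 16% average figure; the function names are illustrative, and the assumption that clocks stay unchanged is ours, not AMD's.

```python
def relative_performance(ipc_uplift: float, clock_ratio: float = 1.0) -> float:
    """Performance relative to the baseline, modeled as IPC x frequency."""
    return (1.0 + ipc_uplift) * clock_ratio


def runtime_reduction(speedup: float) -> float:
    """Fraction of run time saved for a fixed amount of work."""
    return 1.0 - 1.0 / speedup


# 16% average IPC uplift at the same clock speed (assumed).
speedup = relative_performance(0.16)
saved = runtime_reduction(speedup)

print(f"speedup: {speedup:.2f}x, run time saved: {saved:.1%}")
```

At equal clocks, a 16% IPC gain shortens a fixed workload by roughly 14%, not 16%, because run time scales with the inverse of performance. The 16% figure is an average, so individual applications will land above or below it.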

On the GPU front, AMD continues to optimize its RDNA 3 architecture for power-performance efficiency. AMD claims up to 32% higher performance per watt than previous Ryzen CPUs. This is achieved through better memory management, double-rate common game texture operations, and enhanced power management features.

AMD’s Zen 5 architecture is an impressive evolution of the Zen line, promising to raise performance across desktop, mobile, server, and data center markets. With significant improvements in core count, threading, power efficiency, and, in some chips, AI acceleration, the 5th Gen EPYC CPUs are positioned to meet the growing demands of modern data-centric workloads.

XDNA

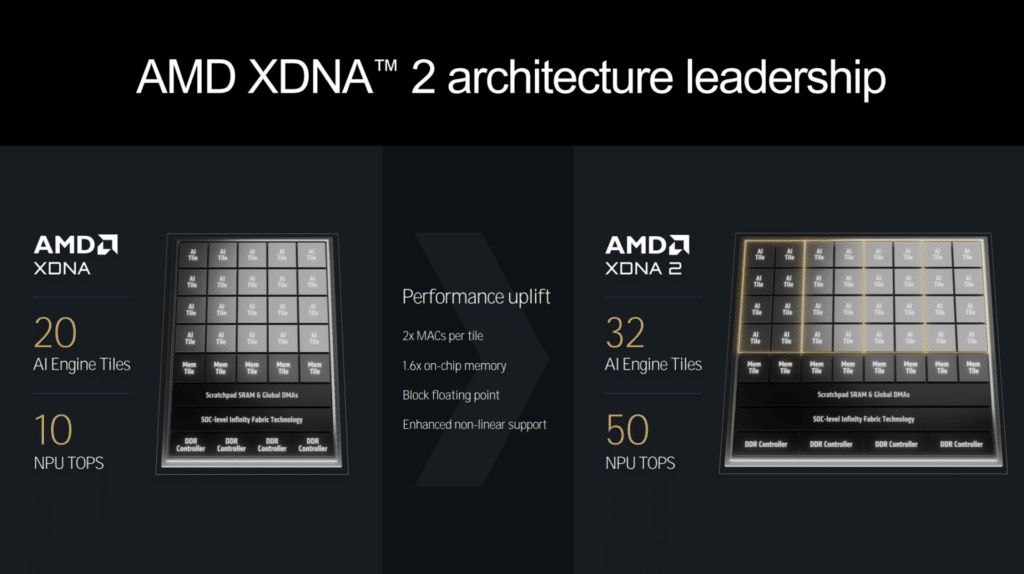

Vamsi Boppana, the Senior Vice President of the Artificial Intelligence Group, outlined AMD’s new AI-centric architecture’s transformative potential. The exponential growth and specialization of AI workloads demand innovative compute architectures, and AMD’s response is the introduction of the XDNA 2 architecture.

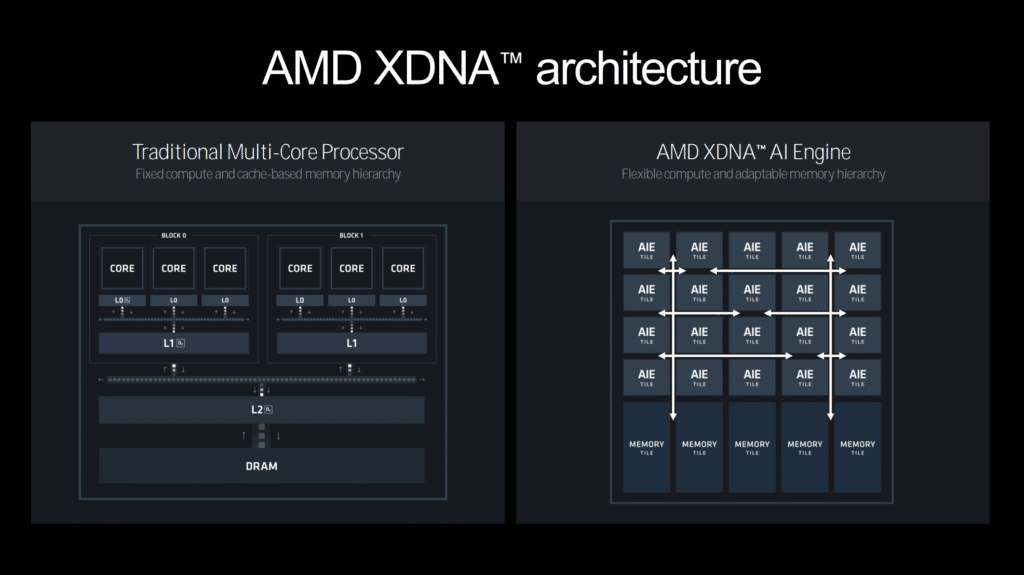

At the heart of this innovation is the AMD XDNA architecture, known for its legacy in diverse AI and DSP applications, spanning communication, 5G deployment, defense radar signal processing, broadcast real-time AI processing for 3D viewpoints, and healthcare image processing. The architecture moves from traditional fixed compute and cache-based memory hierarchies to a more flexible, adaptable model. This spatial reconfigurability and tiled dataflow architecture allow efficient multitasking and guaranteed real-time performance.

The presentation highlighted AMD’s x86 processor with an integrated NPU, designed to deliver high efficiency and performance for AI workloads. The 3rd Generation AMD Ryzen AI processors boast significant advancements in NPU capabilities, achieving up to 50 TOPS (Trillions of Operations Per Second) and incorporating up to 12 CPU cores and 16 GPU compute units. These processors are set to power over 100 AI-driven experiences from software vendors such as Adobe, Blackmagic, and Topaz Labs, making them central to next-gen AI PC experiences.

The AI engine within the XDNA 2 architecture includes enhanced support for diverse data types, such as INT8 and Block FP16, which ensures high performance and accuracy across a range of AI applications. The adaptive AI architecture allows for scalable integration across AMD’s product portfolio, offering efficient multitasking and guaranteed real-time performance through spatial reconfigurability and a tiled dataflow architecture.

Block FP16, in particular, allows for a drop-in replacement for FP32 models with little to no accuracy loss, making it highly efficient for tasks like image generation, language models, and real-time audio and video processing.
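The general idea behind block floating-point formats is that a group of values shares one exponent while each value keeps its own small integer mantissa. The sketch below shows that generic technique only. The block size of 8, the 8-bit mantissa, and the 8-bit shared exponent are assumptions for illustration, and the code is not AMD's Block FP16 implementation.

```python
import math


def bfp_quantize(block, mantissa_bits=8):
    """Quantize floats to one shared exponent plus signed integer mantissas."""
    max_abs = max(abs(x) for x in block)
    if max_abs == 0.0:
        return 0, [0] * len(block)
    # frexp gives max_abs = m * 2**e with 0.5 <= m < 1.
    _, e = math.frexp(max_abs)
    shared_exp = e - (mantissa_bits - 1)
    limit = 2 ** (mantissa_bits - 1) - 1
    scale = 2.0 ** shared_exp
    # Round to the nearest step and clamp to the signed mantissa range.
    mantissas = [max(-limit, min(limit, round(x / scale))) for x in block]
    return shared_exp, mantissas


def bfp_dequantize(shared_exp, mantissas):
    """Reconstruct approximate floats from the shared exponent and mantissas."""
    scale = 2.0 ** shared_exp
    return [m * scale for m in mantissas]


def bits_per_value(block_size=8, mantissa_bits=8, exponent_bits=8):
    """Average storage cost per value, including the shared exponent."""
    return (block_size * mantissa_bits + exponent_bits) / block_size


values = [0.5, -0.25, 0.125, 1.0, 0.75, -0.9, 0.01, 0.0]
exp, mants = bfp_quantize(values)
restored = bfp_dequantize(exp, mants)
print(exp, mants)
print(bits_per_value())  # 9.0
```

With these assumed parameters each value costs 9 bits on average instead of 32 for FP32. Accuracy holds up when the values in a block have similar magnitudes, since small values lose precision relative to the largest value in their block.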

One standout feature is the ability to configure the XDNA fabric at runtime. This allows flexibility with datapaths and segmentation of the NPU to run multiple models of different sizes simultaneously. It also provides the flexibility to deliver AI-powered experiences like Copilot+ for enhanced productivity and immersive collaboration. Integrating the unified AI software stack across AMD’s CPU, GPU, and NPU components enables broad model support and optimized performance, making it easier for developers to deploy thousands of AI models quickly and effectively.

The AMD XDNA 2 architecture demonstrates a significant leap in AI technology. With up to eight concurrent spatial streams, it doubles the power efficiency of previous generations. This makes AMD’s solution powerful and highly efficient, paving the way for a new era of AI applications in PCs and beyond.
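To illustrate what segmenting an NPU between several models could look like, here is a hypothetical allocator that hands out contiguous groups of tile columns. The layout of 8 columns (matching the eight concurrent streams, with the 32 tiles from the spec table arranged 4 per column) is an assumption, as are the function name, the model names, and the first-come-first-served policy. None of this reflects AMD's actual driver or runtime API.

```python
def partition_columns(requests, total_columns=8):
    """Assign contiguous column ranges to models in request order.

    requests: list of (model_name, columns_needed) tuples.
    Returns a dict mapping model_name to a range of column indices.
    """
    layout = {}
    next_col = 0
    for name, needed in requests:
        if needed <= 0:
            raise ValueError(f"{name}: columns_needed must be positive")
        if next_col + needed > total_columns:
            raise ValueError(f"{name}: not enough free columns")
        layout[name] = range(next_col, next_col + needed)
        next_col += needed
    return layout


# Three hypothetical models of different sizes sharing the array.
layout = partition_columns([("language_model", 4), ("vision", 2), ("audio", 1)])
for name, cols in layout.items():
    print(name, list(cols))
```

In this sketch a larger model receives more columns while smaller ones run alongside it, and one column stays free for another workload. A real runtime would also need to reclaim and rebalance partitions as models start and stop.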

The 3rd Gen AMD Ryzen AI processors also feature enhanced security by introducing new Trusted IO capabilities. This security enhancement is crucial for protecting sensitive data and ensuring reliable performance in AI-driven applications, reinforcing AMD’s commitment to secure and efficient AI solutions.

AMD Zen 5 and XDNA 2 Specs

Zen 5 Architecture

| Feature | Details |
| --- | --- |
| Process Technology | 4nm and 3nm |
| Core Count | Up to 192 cores |
| Thread Count | Up to 384 threads |
| Cache | 48KB 12-way L1 data cache |
| Bandwidth | Double the maximum bandwidth to L1 cache and Floating-Point Unit |
| Integer Execution | 8-wide dispatch/retire, 6 ALU, 3 multiplies |
| Floating-Point Execution | AVX-512 with full 512-bit data path, 6 pipelines |
| AI Acceleration | New Math Acceleration Unit |
| IPC Uplift | 16% average IPC uplift over Zen 4 |
| Performance Gains | 35% improvement in single-core AES-XTS, 32% boost in machine learning tasks |
| Power Efficiency | Optimized for performance/watt with enhanced metal stack |
| Product Applications | Desktop, mobile, server, and data center |

XDNA 2 Architecture

| Feature | Details |
| --- | --- |
| AI Engine Tiles | Up to 32 |
| AI Performance | Up to 50 TOPS |
| Core Count | Up to 12 CPU cores |
| GPU Compute Units | Up to 16 |
| Data Types Supported | INT8, Block FP16 |
| Efficiency | 2x power efficiency compared to previous generation |
| Concurrent Streams | Up to 8 |
| Security | New Trusted IO capabilities |
| Software Stack | Unified AI software stack across CPU, GPU, and NPU |
| Real-time Performance | Guaranteed real-time performance with spatial architecture |
| Applications | Gaming, entertainment, personal AI assistance, content creation, enterprise productivity |

Zen 5 Desktop Overclocking with Curve Shaper

AMD’s Curve Optimizer, a hallmark feature from the Ryzen 7000 series, allows users to enable PMFW/PBO-aware dynamic voltage scaling or undervolting. This powerful tool dynamically shifts the voltage curve through adjustable “Curve Optimizer” steps, providing variable voltage across the frequency spectrum, with more voltage allocated at higher frequencies. Users can apply this optimization on a per-core, per-CCD, or per-CPU basis, allowing for granular control over their CPU’s performance and efficiency.

Building on the foundation of Curve Optimizer, AMD introduces Curve Shaper, an enhancement that lets users reshape the underlying voltage curves to maximize undervolting potential. Curve Shaper uses the same steps as its predecessor, but it lets users selectively add or remove steps in 15 distinct frequency-temperature bands (three temperature bands by five frequency bands). This fine-tuning capability allows users to further reduce voltage in stable bands while adding voltage in areas where instabilities are observed. The reshaped curve is applied uniformly across all cores, and each core can then be further adjusted using Curve Optimizer.
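One way to picture how the two features combine is as a 3x5 grid of band adjustments shared by all cores, plus a per-core Curve Optimizer offset. The sketch below models only that bookkeeping. The additive combination, the sign convention (negative steps mean less voltage), the band indices, and all names and values are assumptions for illustration, not AMD's firmware behavior.

```python
TEMP_BANDS = 3  # three temperature bands
FREQ_BANDS = 5  # five frequency bands


def make_shaper(default_steps=0):
    """Create a Curve Shaper grid: one step value per temperature/frequency band."""
    return [[default_steps] * FREQ_BANDS for _ in range(TEMP_BANDS)]


def effective_steps(shaper, core_offsets, core, temp_band, freq_band):
    """Total step adjustment for one core in one band.

    The shaper value applies to every core; the Curve Optimizer offset
    is specific to the core. Summing them is an assumed model.
    """
    return shaper[temp_band][freq_band] + core_offsets[core]


shaper = make_shaper()
shaper[0][0] = -10  # stable band: remove more voltage (hypothetical value)
shaper[2][4] = 5    # unstable band: add voltage back (hypothetical value)

# Hypothetical per-core Curve Optimizer offsets for a 4-core example.
core_offsets = [-15, -20, -10, -15]

print(effective_steps(shaper, core_offsets, core=1, temp_band=0, freq_band=0))  # -30
print(effective_steps(shaper, core_offsets, core=1, temp_band=2, freq_band=4))  # -15
```

The grid has 15 entries, matching the 15 bands described above, and a single change to one band affects every core while the per-core offsets remain independent.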

Closing Thoughts

AMD Tech Day 2024 was a great event where we had the much-appreciated opportunity to dive deep with the engineers behind the products. AMD’s latest advancements with the XDNA 2 architecture and Zen 5 CPUs highlight its commitment to leading in AI and high-performance computing. With improvements in core count, threading, power efficiency, and AI acceleration, AMD aims to meet the growing demands of modern data-centric workloads and deliver strong performance across desktop, mobile, server, and data center applications.
