GIGABYTE, a well-regarded manufacturer of computer-related hardware components and systems, has jumped into the data storage market by partnering with Bigtera to offer a unique high-performance, software-defined storage solution. GIGABYTE supplies the hardware, pairing with Bigtera's VirtualStor software platform to offer a range of storage appliances to meet different capacity and performance requirements. While most people will be familiar with GIGABYTE (which has been in business for over 30 years), Bigtera may be a new name for some. Bigtera, founded in 2012, has two development centers and over a hundred customers running VirtualStor in production environments.

The Bigtera VirtualStor family is composed of three different product lines—Scaler, Converger, and Extreme—each of which is a software-defined storage solution deployed on standard x86 architecture. Scaler is data storage for hybrid scale-out solutions; Converger is a storage solution that can be used to create a hyperconverged infrastructure by pairing it with VMware, Hyper-V, or KVM; and Extreme is an all-flash scale-out storage solution designed to supply I/O to applications that require consistently low latency and consume a large amount of bandwidth. In this deep dive we will examine the VirtualStor Scaler solution.

VirtualStor Scaler is a scale-out storage solution rather than a scale-up or hyperconverged infrastructure (HCI) solution, meaning that disks or nodes can be added to a VirtualStor Scaler storage cluster as more capacity is needed. In other words, you can size the cluster to your datacenter's current needs and expand it as those needs change. This flexibility effectively eliminates both the overprovisioning of hardware (a requirement of scale-up solutions) and the need to add compute power, whether needed or not, as you would when expanding an HCI solution.

GIGABYTE offers six different platforms for the VirtualStor Scaler system. At one end of the spectrum, aimed at smaller users, is a system with 48TB of usable storage capacity composed of three 1U nodes. At the other end, for customers who need to handle huge amounts of data, is a system that can store 4PB of data and is composed of eight 4U nodes. To ensure the quality of these systems, GIGABYTE uses its own top-of-the-line servers, equipped with dual 2nd Generation Intel Xeon Scalable CPUs, to supply the compute for these storage nodes. For data storage, VirtualStor appliances combine HDDs with NVMe or SATA SSD cache drives. To keep data flowing to and from the appliance, the nodes use Intel SFP+ NICs, and out-of-band node management is handled by Aspeed remote management controllers.

VirtualStor Scaler Storage Protocols

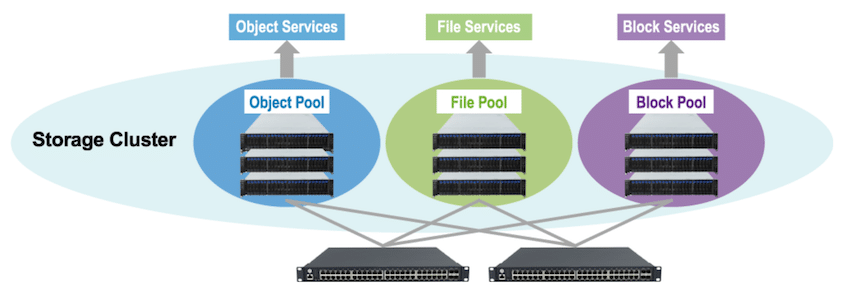

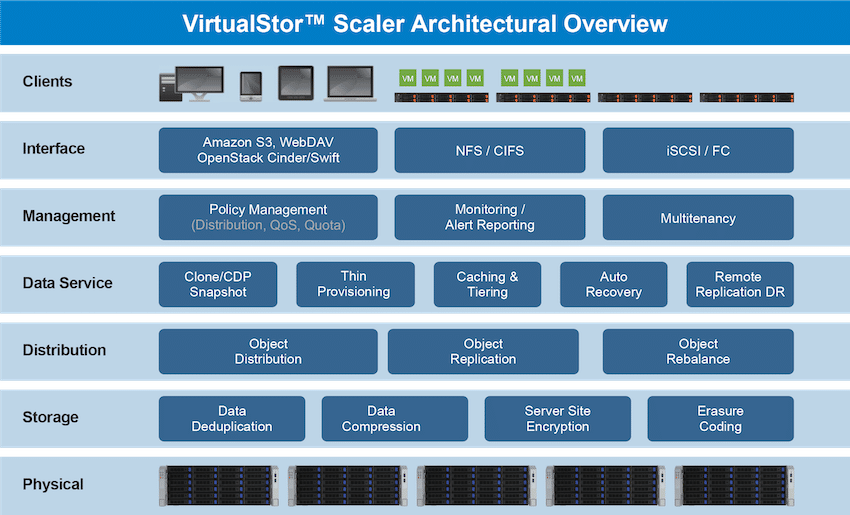

Today’s datacenter must deliver storage to meet the needs of a demanding and diverse group of users as quickly and cost-efficiently as possible. To meet those diverse needs, VirtualStor supports all commonly used storage protocols (NAS, SAN, and object storage) from a single, unified pool of storage. To ensure that performance requirements are met, quality of service (QoS) attributes can be applied to files, folders, or volumes. Quotas, which prevent a user or application from overconsuming storage, can be applied on a per-folder or per-volume basis.
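The article does not say how VirtualStor enforces QoS or quotas internally; it only says they can be applied to files, folders, or volumes. To make the two ideas concrete, here is a hypothetical sketch using a token bucket, a common way to cap IOPS, plus a simple byte counter for a quota. The class names and the `rate` and `burst` parameters are illustrative assumptions, not Bigtera's API or implementation.

```python
from dataclasses import dataclass


@dataclass
class TokenBucket:
    """Hypothetical per-volume IOPS limit: refills `rate` tokens/s, capped at `burst`."""
    rate: float        # sustained I/Os per second allowed
    burst: float       # largest burst of I/Os allowed after an idle period
    tokens: float = 0.0
    last: float = 0.0  # timestamp (seconds) of the previous refill

    def __post_init__(self) -> None:
        self.tokens = self.burst  # start full so an idle volume can burst

    def try_io(self, now: float) -> bool:
        """Admit one I/O at time `now` if a token is available."""
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False  # caller must queue or delay this I/O


@dataclass
class Quota:
    """Hypothetical folder/volume quota: rejects writes beyond `limit_bytes`."""
    limit_bytes: int
    used_bytes: int = 0

    def reserve(self, nbytes: int) -> bool:
        if self.used_bytes + nbytes > self.limit_bytes:
            return False
        self.used_bytes += nbytes
        return True


if __name__ == "__main__":
    qos = TokenBucket(rate=100.0, burst=5.0)
    print(sum(qos.try_io(now=0.0) for _ in range(10)))  # 5: only the burst is admitted
    print(qos.try_io(now=0.05))  # True: 0.05 s at 100/s refills 5 tokens
    quota = Quota(limit_bytes=10 * 2**30)
    print(quota.reserve(8 * 2**30), quota.reserve(4 * 2**30))  # True False
```

Passing `now` explicitly keeps the limiter deterministic; a real caller would pass `time.monotonic()`.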

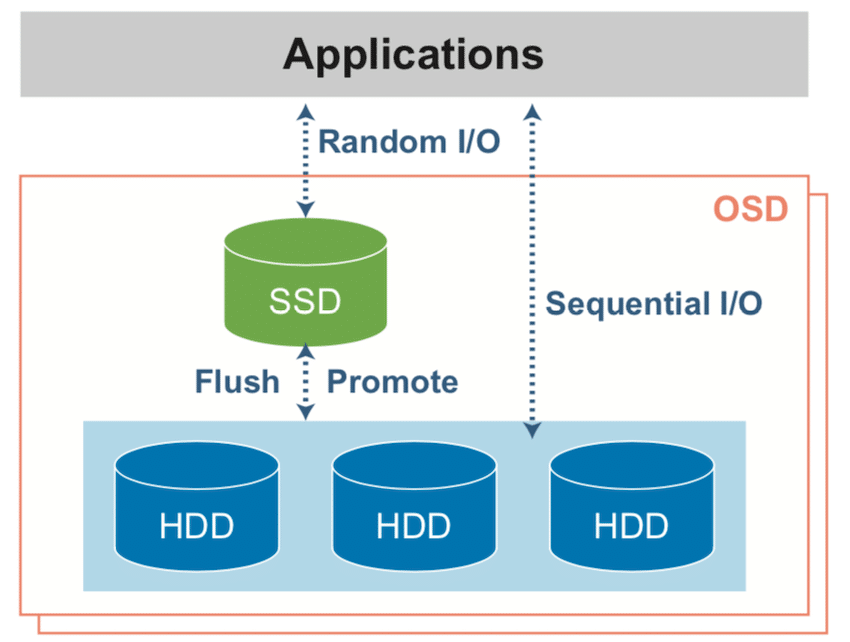

VirtualStor is designed to deliver consistent, reliable performance to the most demanding applications. One way it achieves this is through how its back-end storage engine, BigteraStore, places data. BigteraStore uses flash devices for data caching and to consolidate small blocks of data into larger sequential blocks. Not only does this have a huge impact on the performance of a VirtualStor system when handling random data, it also extends the life of the flash devices, since fewer writes are made to them. BigteraStore also identifies sequential data (which arrives in larger blocks) and reads and writes it directly to its hard drives. Because streaming sequential data to a hard drive does not suffer the same performance penalty as random I/O, this preserves the capacity of expensive flash devices for random data, where flash has the biggest impact.
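The two placement rules above (absorb small random writes in flash and destage them in larger batches; send sequential streams straight to HDD) can be illustrated with a toy write path. This is a hypothetical sketch, not BigteraStore code: the 128 KiB size cutoff, the 8-I/O contiguity run used to detect a stream, and the 4 MiB destage batch are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

SEQ_IO_BYTES = 128 * 1024      # assumption: I/Os at least this large are streamed
SEQ_RUN = 8                    # assumption: 8 back-to-back contiguous I/Os = a stream
FLUSH_BYTES = 4 * 1024 * 1024  # assumption: destage the flash log in 4 MiB batches


@dataclass
class HybridWritePath:
    """Hypothetical flash-log + HDD write path; illustrative only, not BigteraStore code."""
    next_offset: int = -1
    run: int = 0
    flash_log: List[Tuple[int, int]] = field(default_factory=list)  # (offset, length)
    log_bytes: int = 0
    hdd_writes: List[Tuple[str, int]] = field(default_factory=list)  # (kind, bytes)

    def write(self, offset: int, length: int) -> str:
        # Count consecutive writes that start exactly where the previous one ended.
        self.run = self.run + 1 if offset == self.next_offset else 0
        self.next_offset = offset + length
        if length >= SEQ_IO_BYTES or self.run >= SEQ_RUN:
            # Sequential data goes straight to HDD, sparing flash capacity and wear.
            self.hdd_writes.append(("direct", length))
            return "hdd"
        # Small random write: absorb it in the low-latency flash log.
        self.flash_log.append((offset, length))
        self.log_bytes += length
        if self.log_bytes >= FLUSH_BYTES:
            self.flush()
        return "flash"

    def flush(self) -> None:
        # Destage the buffered small writes as one large batch in offset order,
        # so the HDDs see one big, mostly ordered write instead of many seeks.
        self.flash_log.sort()
        self.hdd_writes.append(("merged", self.log_bytes))
        self.flash_log.clear()
        self.log_bytes = 0


if __name__ == "__main__":
    path = HybridWritePath()
    print(path.write(0, 1024 * 1024))  # large I/O -> "hdd"
    for i in range(1024):  # 1,024 scattered 4 KiB writes = 4 MiB
        path.write((i * 7919 % 100_000) * 4096, 4096)
    print(path.hdd_writes)  # [('direct', 1048576), ('merged', 4194304)]
```

In the example, 1,024 random 4 KiB writes reach the HDD layer as a single 4 MiB destage, which is the consolidation effect the paragraph describes.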

VirtualStor Scaler Flash Use

Data efficiency, protection, and resiliency are three factors that must be addressed by today’s modern storage solutions, and VirtualStor uses the latest techniques and tools to provide a robust, efficient solution to address these factors.

The most important feature of any storage system is its ability to protect the integrity of the data stored on it. To do this, VirtualStor supports data replication, erasure coding, RAID, error detection, and self-repair of corrupted data. Because data is replicated and balanced across many storage nodes, in the unlikely event that a hardware component or server fails, another storage node seamlessly takes over, and in most cases the user or application will not even be aware that a failure has occurred. Once the failed component has been identified and replaced, the replacement is automatically integrated back into the system. Sensitive data that needs the utmost protection can be encrypted on a VirtualStor using Intel AES-NI encryption technology, while less sensitive data can be left unencrypted on the appliance.
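Replication and erasure coding trade capacity for protection differently. With n-way replication, only 1/n of raw capacity is usable and n-1 failures are tolerated. With a k+m erasure code, k/(k+m) is usable and m failures are tolerated. The sketch below applies these standard formulas. The 3x, 4+2, and 8+3 schemes and the 1,000TB pool are illustrative assumptions, not VirtualStor defaults, and the figures ignore metadata overhead and free-space headroom.

```python
from fractions import Fraction


def replication_efficiency(copies: int) -> Fraction:
    """Usable share of raw capacity when each object is stored `copies` times."""
    return Fraction(1, copies)


def erasure_efficiency(k: int, m: int) -> Fraction:
    """Usable share when each stripe has k data chunks plus m coding chunks."""
    return Fraction(k, k + m)


def usable_tb(raw_tb: float, efficiency: Fraction) -> float:
    return raw_tb * float(efficiency)


if __name__ == "__main__":
    raw_tb = 1000.0  # hypothetical pool size
    schemes = [
        # (label, usable share, simultaneous failures tolerated)
        ("3x replication", replication_efficiency(3), 2),
        ("EC 4+2", erasure_efficiency(4, 2), 2),
        ("EC 8+3", erasure_efficiency(8, 3), 3),
    ]
    for label, eff, tolerated in schemes:
        print(f"{label}: {usable_tb(raw_tb, eff):.0f} TB usable, "
              f"survives {tolerated} simultaneous failures")
```

For the same two-failure tolerance, 4+2 erasure coding yields about twice the usable capacity of 3x replication (667TB versus 333TB here), at the cost of extra CPU work to encode and rebuild stripes.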

VirtualStor Scaler Architectural Overview

One factor that is often overlooked when choosing a storage solution is the difficulty of carving out the underlying storage for use. Because all VirtualStor storage, regardless of type or protocol, comes from a single pool that can be overprovisioned, it eliminates both the time-consuming task of re-provisioning the underlying storage to make it available and the islands, or silos, of storage that have been provisioned but sit unused.
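Overprovisioning a shared pool is typically done with thin provisioning: each volume is promised a logical size, but physical space is consumed only as data is written. The hypothetical sketch below (the `ThinPool` class and its methods are illustrative assumptions, not a VirtualStor interface) shows why this avoids stranded, provisioned-but-unused capacity. It also shows the trade-off: the pool must be watched so that real usage never outgrows physical space.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class ThinPool:
    """Hypothetical shared pool: volumes get a logical size up front, but physical
    space is consumed only when data is actually written."""
    physical_bytes: int
    provisioned: Dict[str, int] = field(default_factory=dict)  # logical sizes promised
    allocated: Dict[str, int] = field(default_factory=dict)    # bytes actually written

    def create_volume(self, name: str, logical_bytes: int) -> None:
        # Nothing is reserved, so the sum of logical sizes may exceed the pool.
        self.provisioned[name] = logical_bytes
        self.allocated[name] = 0

    def write(self, name: str, nbytes: int) -> None:
        if self.allocated[name] + nbytes > self.provisioned[name]:
            raise ValueError(f"{name}: write exceeds its logical size")
        if self.used() + nbytes > self.physical_bytes:
            raise RuntimeError("pool is physically full: add disks or nodes")
        self.allocated[name] += nbytes

    def used(self) -> int:
        return sum(self.allocated.values())

    def overcommit_ratio(self) -> float:
        return sum(self.provisioned.values()) / self.physical_bytes


if __name__ == "__main__":
    TB = 10**12
    pool = ThinPool(physical_bytes=100 * TB)
    pool.create_volume("iscsi-lun", 80 * TB)  # block volume
    pool.create_volume("nfs-share", 80 * TB)  # file share from the same pool
    pool.write("iscsi-lun", 30 * TB)
    pool.write("nfs-share", 20 * TB)
    print(pool.overcommit_ratio(), pool.used() // TB)  # 1.6 50
```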

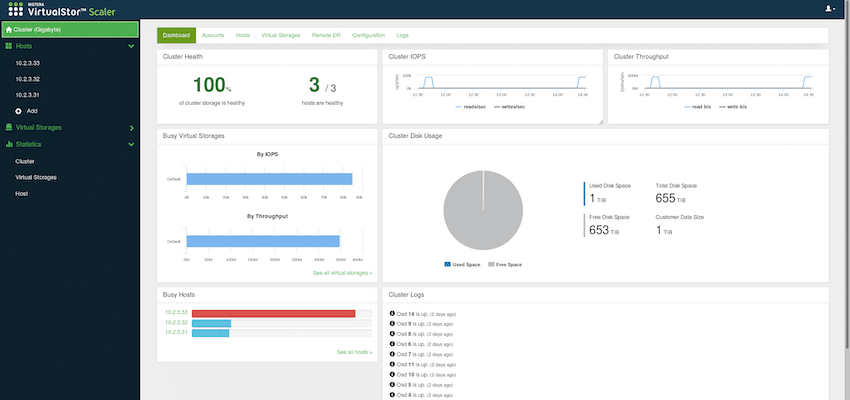

VirtualStor Scaler Dashboard

The value of a storage solution is diminished if it is difficult to manage, but VirtualStor makes management easy with an intuitive, modern, web-based console. In the past, we have seen legacy unified storage systems advertised as having a single management console that, on closer inspection, simply grouped the management components of several separate systems into one interface. This leads to confusion, because different terms are used for the storage components and different workflows are required depending on the type of storage being managed: a convoluted and perplexing way to deal with storage, to say the least.

We have also seen storage systems that require command-line interaction to complete common tasks, where a single incorrect entry can have catastrophic results. By contrast, all of the day-to-day management workflows on VirtualStor appliances are GUI-based and require no command-line interaction. Furthermore, because VirtualStor was designed with multiprotocol support from the start rather than as an afterthought, it is not encumbered with legacy bolt-on functionality, which makes managing it intuitive and far less error-prone.

VirtualStor Scaler Performance

Beyond assembling the solution and making it easy to operate, the cluster still must offer performance that suits the targeted customer use cases. Further, Bigtera offers multi-protocol support, something that brings more flexibility to this solution. Over a period of several weeks, we tested the solution with the following hardware configuration:

- Client Nodes

- 1 x GIGABYTE H261-3C0 – 2U 4 nodes, 3 nodes were used for 3 client servers

- Per node:

- 2 x Xeon Gold 6140 CPUs (18 cores, 2.3GHz)

- 8 x 16GB 2666MHz DDR4 RDIMM memory modules

- 1 x GIGABYTE CLNOQ42 Dual Port 25GbE SFP+ OCP LAN Card (QLogic FastLinQ QL41202-A2G)

- 1 x 960GB 2.5” Seagate SATA SSD

- Storage Nodes

- 3 x GIGABYTE S451-3R0 storage servers

- Per Node:

- 2 x Intel Xeon Silver 4114 CPUs (10 cores, 2.2GHz)

- 8 x 16GB 2666MHz DDR4 RDIMM memory modules

- 36 x 8TB 3.5” Seagate Exos SATA HDD

- 2 x 3.84TB Adata SR2000CP AIC SSD

- 1 x 960GB 2.5” Seagate SATA SSD

- 1 x GIGABYTE CLN4C44 4 x 25GbE SFP28 LAN ports (Mellanox ConnectX-4 Lx)

- 1 x GIGABYTE HW RAID CARD CRA4648, GIGABYTE MR 3108 BBU

As noted, the solution makes use of both hard drives and flash. Each node uses a 960GB Seagate SSD for boot. For the capacity tier, GIGABYTE is using Seagate Exos 8TB Enterprise HDDs, with 36 drives per storage node. To get the best performance profile out of this configuration, GIGABYTE uses a pair of Adata SR2000CP 3D eTLC SSDs per storage node. The 3.84TB add-in cards handle journaling duties as well as providing the cache element for the cluster. The SR2000CP family comes in capacities up to 11TB, but the 3.84TB cards meet the performance goals (R/W rates of up to 6000/3800MB per second) and cost goals of this appliance.
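The hardware list above determines the cluster's raw capacity and its flash-to-disk ratio. The short calculation below uses only figures stated in this article: 36 x 8TB HDDs and 2 x 3.84TB SSDs per node, three storage nodes, the SSDs' "up to" 6000/3800MB/s rates, and 4 x 25GbE ports per storage node. It reports raw (decimal TB) capacity before any replication or erasure-coding overhead, and NIC line rate without protocol overhead, so real usable capacity and throughput will be lower.

```python
# Figures taken from the test configuration listed above.
NODES = 3
HDDS_PER_NODE, HDD_TB = 36, 8.0
SSDS_PER_NODE, SSD_TB = 2, 3.84
SSD_READ_MBPS, SSD_WRITE_MBPS = 6000, 3800  # "up to" rates per card
NIC_PORTS, PORT_GBITS = 4, 25               # 4 x 25GbE per storage node


def cluster_summary() -> dict:
    hdd_tb = HDDS_PER_NODE * HDD_TB
    flash_tb = SSDS_PER_NODE * SSD_TB
    return {
        "raw HDD TB per node": hdd_tb,
        "raw HDD TB in cluster": NODES * hdd_tb,
        "flash TB per node": flash_tb,
        "flash as % of HDD": 100 * flash_tb / hdd_tb,
        "peak flash read MB/s per node": SSDS_PER_NODE * SSD_READ_MBPS,
        "peak flash write MB/s per node": SSDS_PER_NODE * SSD_WRITE_MBPS,
        "NIC line rate GB/s per node": NIC_PORTS * PORT_GBITS / 8,
    }


if __name__ == "__main__":
    for name, value in cluster_summary().items():
        print(f"{name}: {value:,.2f}")
```

The result is 864TB of raw HDD capacity across the cluster, fronted by flash equal to roughly 2.7% of the disk capacity on each node. That proportion is consistent with flash being used as a journal and cache tier rather than a capacity tier.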

Looking at performance, it is important to understand how businesses view large-scale SDS platforms. They are generally great for object protocol performance, but a more traditional protocol such as iSCSI is seen as more of a "compatibility" use case. In other words, it works, but it is much slower than the primary protocols the storage array was built around. That isn't the case with all platforms, though, which is part of what makes the VirtualStor Scaler unique. To test this point, we ran back-to-back tests, one using the RBD protocol and one using iSCSI. Testing every protocol VirtualStor Scaler supports was outside the scope of this article, as the list is broad (NFS, CIFS/SMB, and the S3 API).

For the testing specifics, we used FIO to measure the performance of thirty 10GB RBD volumes against thirty 10GB iSCSI LUNs. The volumes were split across our three client systems, so each client accessed 10 RBD volumes or LUNs. We applied a workload of 1 thread per storage device at a queue depth of 16 (across the cluster, this worked out to 30 threads, each with a queue depth of 16). We then compared the performance of large sequential transfers as well as smaller 4K random traffic.
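The article does not publish its FIO job definitions. The following is a hypothetical reconstruction of the per-client shape described above: one job per device, a queue depth of 16, and 10 devices per client. The `libaio` engine, the 300-second time-based runtime, and the `/dev/sdb` to `/dev/sdk` device names are assumptions. An RBD image mapped through the kernel would appear as a `/dev/rbdN` device instead.

```python
from typing import List


def fio_job_file(devices: List[str], rw: str, bs: str,
                 iodepth: int = 16, runtime_s: int = 300) -> str:
    """Return fio job-file text with one job per device at a fixed queue depth."""
    lines = [
        "[global]",
        "ioengine=libaio",       # assumption: Linux native async I/O on block devices
        "direct=1",              # bypass the client page cache
        f"rw={rw}",              # e.g. read, write, randread, randwrite
        f"bs={bs}",              # e.g. 1024k, 128k, 4k
        f"iodepth={iodepth}",
        f"runtime={runtime_s}",  # assumption: 5-minute runs
        "time_based",
        "group_reporting",       # report the client's jobs as one aggregate
    ]
    for i, dev in enumerate(devices):
        lines += ["", f"[dev{i}]", f"filename={dev}"]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical device names for the 10 LUNs mapped on one client.
    luns = [f"/dev/sd{letter}" for letter in "bcdefghijk"]
    print(fio_job_file(luns, rw="randread", bs="4k"))
```

By default fio runs each job section as a separate process (the `thread` option switches to threads), so each device gets its own submitter. Three clients running this file keep 3 x 10 x 16 = 480 I/Os in flight across the cluster.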

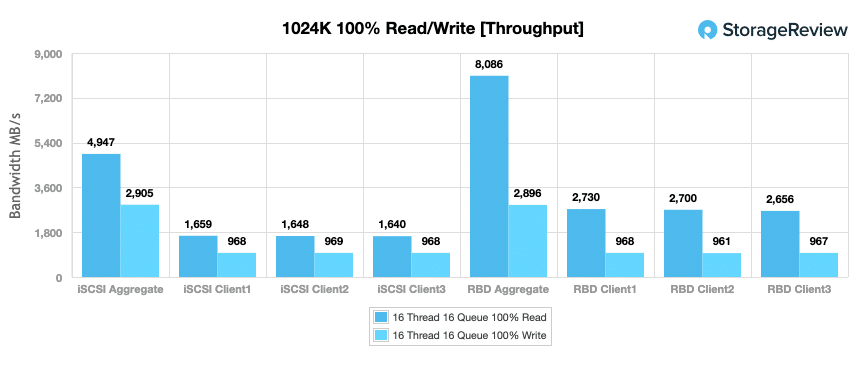

In our first workload measuring a 1024K sequential transfer, we saw an average of just over 1600MB/s read and 960MB/s write from each of our three clients leveraging iSCSI. In aggregate, this worked out to 4.9GB/s read and 2.9GB/s write. Leveraging RBD, we saw similar write traffic of over 960MB/s per client, but read performance was higher at over 2700MB/s per client. In aggregate, RBD totals measured 8.1GB/s read and 2.9GB/s write.

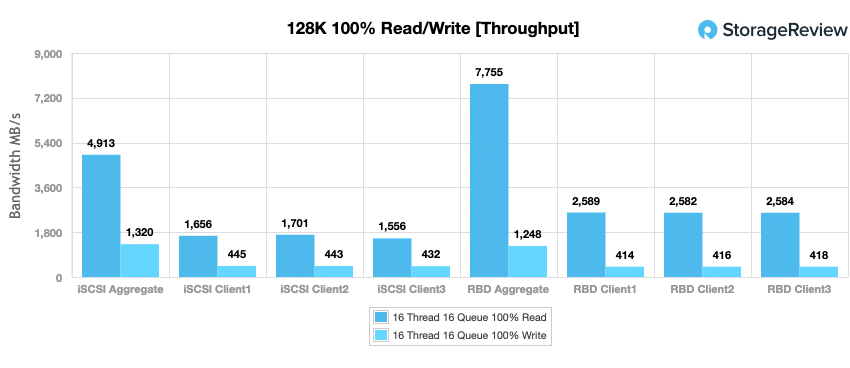

Lowering the transfer size to 128K sequential, we again saw a similar balance between iSCSI and RBD performance. Using iSCSI, each client saw about 440MB/s write and 1600MB/s read, for an aggregate of 1.3GB/s write and 4.9GB/s read. With RBD, we saw slightly lower write performance of over 410MB/s per client and higher read performance of over 2500MB/s per client, for an aggregate of 1.2GB/s write and 7.8GB/s read.

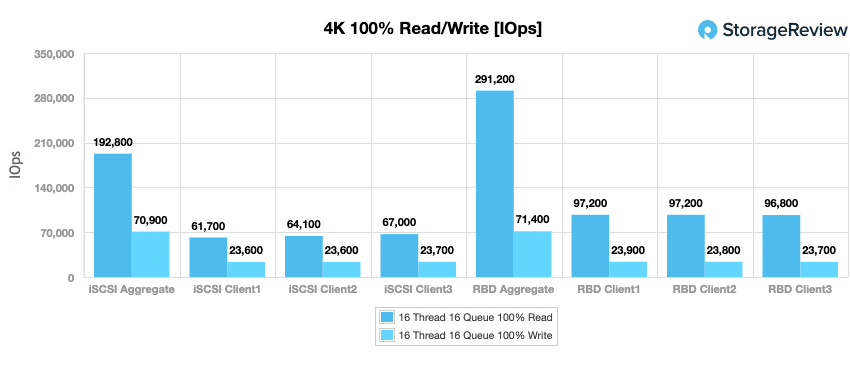

Turning to smaller random transfers, we look at our 4K test. In 4K random write, iSCSI and RBD offered similar performance of over 23K IOPS per client, or roughly 71K IOPS in aggregate, with a slight edge to RBD. In 4K random read, iSCSI measured about 64K IOPS per client (193K IOPS in aggregate), while RBD measured 97K IOPS per client (291K IOPS in aggregate).
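To put the RBD and iSCSI results side by side, the snippet below takes the aggregate figures reported in this section and computes the RBD-to-iSCSI ratio for each workload. It also converts 4K IOPS into bandwidth. The numbers are the article's rounded aggregates. The 4K write row uses the "roughly 71K" figure for both protocols, so RBD's small write edge disappears in the rounding.

```python
# Aggregate (three-client) results as reported in this section:
# sequential rows in GB/s, 4K random rows in thousands of IOPS.
RESULTS = {
    "1024K seq read": (4.9, 8.1),   # (iSCSI, RBD)
    "1024K seq write": (2.9, 2.9),
    "128K seq read": (4.9, 7.8),
    "128K seq write": (1.3, 1.2),
    "4K random read": (193, 291),
    "4K random write": (71, 71),    # reported as roughly 71K for both
}


def rbd_over_iscsi(results: dict) -> dict:
    """Ratio of RBD to iSCSI for each workload (>1 means RBD was faster)."""
    return {name: rbd / iscsi for name, (iscsi, rbd) in results.items()}


def iops_to_mb_per_s(iops: float, block_bytes: int = 4096) -> float:
    """Convert an IOPS figure at a fixed block size into decimal MB/s."""
    return iops * block_bytes / 1e6


if __name__ == "__main__":
    for name, ratio in rbd_over_iscsi(RESULTS).items():
        print(f"{name}: RBD/iSCSI = {ratio:.2f}")
    print(f"4K random read, RBD: {iops_to_mb_per_s(291_000):.0f} MB/s")
```

The pattern is consistent: writes are essentially at parity, while RBD reads run about 1.5x to 1.65x faster than iSCSI reads. Even 291K 4K IOPS amounts to only about 1.2GB/s, far below the cluster's sequential throughput.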

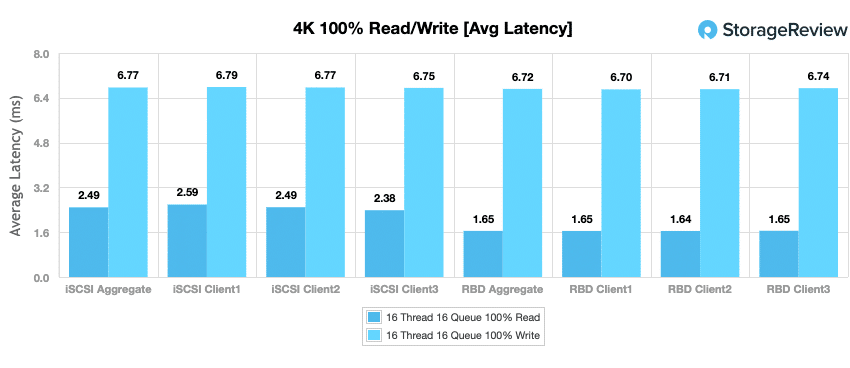

Focusing on average latency in our 4K random transfer tests, with an aggregate cluster load of 30 threads at a queue depth of 16 per thread, we measured just over 6.7ms for writes with both iSCSI and RBD, with RBD having a small edge. In reads, RBD had a larger advantage, averaging 1.647ms compared to 2.489ms for iSCSI.
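Because FIO holds a fixed number of I/Os outstanding, average latency and IOPS are tied together by Little's law, L = λW. In this test L is 30 threads x 16 = 480 I/Os in flight, so W = L / λ. The check below applies that relationship to the IOPS figures reported above. It assumes the queues stayed full for the whole run, which is how a fixed-iodepth FIO workload behaves.

```python
THREADS = 30          # 3 clients x 10 devices x 1 job each
QUEUE_DEPTH = 16
OUTSTANDING = THREADS * QUEUE_DEPTH  # 480 I/Os kept in flight cluster-wide


def littles_law_latency_ms(iops: float, outstanding: int = OUTSTANDING) -> float:
    """Little's law, L = lambda * W, solved for mean latency W = L / lambda."""
    return outstanding / iops * 1000.0


if __name__ == "__main__":
    for label, iops, measured_ms in [
        ("4K random write", 71_000, 6.7),  # "just over 6.7ms" for both protocols
        ("4K random read, iSCSI", 193_000, 2.489),
        ("4K random read, RBD", 291_000, 1.647),
    ]:
        predicted = littles_law_latency_ms(iops)
        print(f"{label}: predicted {predicted:.2f} ms, measured {measured_ms} ms")
```

The predicted values (about 6.76, 2.49, and 1.65ms) match the measured latencies almost exactly. RBD's lower read latency is therefore the same result as its higher read IOPS, seen from the other side: at a fixed queue depth, any latency reduction translates directly into more IOPS.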

Bottom Line

VirtualStor Scaler is a flexible and scalable software-defined solution that runs on industry-standard x86 servers and delivers file, block, or object storage. In this case, we've combined client and storage nodes from GIGABYTE to highlight the converged solution's ease of management and multi-protocol support. It's also important to highlight the platform's resiliency, which comes largely from VirtualStor's Ceph underpinnings. Bigtera prefers not to lead with that messaging, because it's often assumed that Ceph provides fantastic data protection features but lacks a strong performance and flexibility story, both of which are critical in most enterprise storage applications. In our time with the VirtualStor Scaler, we were continually impressed by its ability to deliver very strong iSCSI performance alongside the RADOS block interface. Add a well-designed GUI on top, handy for those who prefer not to dabble with the CLI, and it's clear Bigtera has done well here. The VirtualStor solution is clearly capable of being much more than a data warehouse for typical analytics, HPC, and AI/ML deployments. Thanks to its flexibility, enterprises would be wise to consider it as a direct replacement for a wide variety of use cases, from on-prem cloud to storage consolidation and more typical virtualized workloads.

GIGABYTE VirtualStor Scaler Product Page