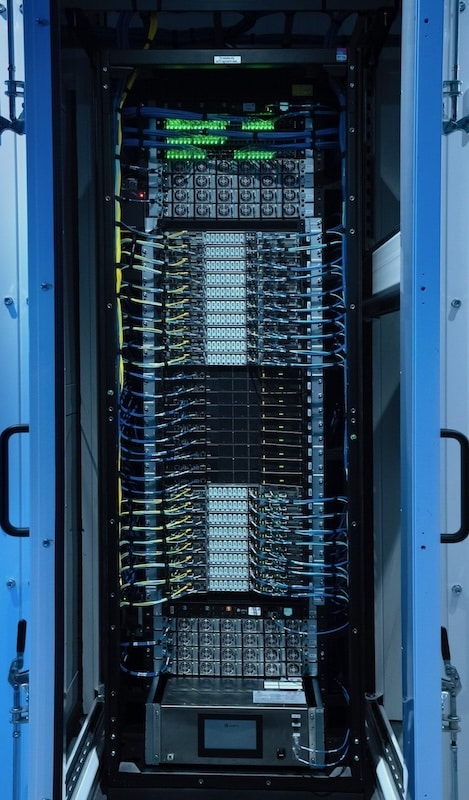

CoreWeave has deployed the latest GB200 NVL-72 system built on the new Dell XE9712 servers. The system was showcased in a live demonstration at a state-of-the-art Switch data center, highlighting both its compute performance and the advanced cooling infrastructure that supports it.

CoreWeave’s GB200 NVL-72 system, housed in Rob Roy’s Evo Chamber, is designed to handle the most demanding computational workloads. The live demo began with the NCCL All-Reduce Test, a benchmark that measures the high bandwidth and low latency of the NVIDIA NVLink interconnect linking the rack’s 72 GPUs. The test verifies that all 72 GPUs can communicate with one another efficiently.
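All-reduce results are usually reported as two numbers, and the following Python sketch shows where they come from, assuming the demo used the convention of NVIDIA's nccl-tests tools (such as `all_reduce_perf`): algorithm bandwidth (algbw) is the buffer size divided by elapsed time, and bus bandwidth (busbw) scales it by 2(n-1)/n. The sketch simulates a ring all-reduce (reduce-scatter followed by all-gather) to show that each rank sends 2(n-1) chunks of size S/n. The helper names, the 1 GB buffer, and the 10 ms timing are hypothetical inputs, not CoreWeave's measurements, and NCCL may pick a different algorithm than a ring on an NVLink-switched rack; the ring is used here only because it makes the scaling factor easy to see.

```python
"""Sketch of how all-reduce bandwidth is reported (nccl-tests convention).

Assumptions: ring_allreduce and allreduce_bandwidths are hypothetical helpers
written for illustration; the 1 GB size and 10 ms timing are made-up inputs,
not measurements from the CoreWeave demo.
"""


def ring_allreduce(buffers):
    """Simulate a ring all-reduce over n ranks, each holding n chunks.

    Returns (reduced_buffers, chunks_sent_per_rank).
    """
    n = len(buffers)
    data = [list(b) for b in buffers]
    sent = [0] * n

    # Phase 1, reduce-scatter: in step s, rank r forwards chunk (r - s) % n to
    # rank r + 1, which adds it to its own copy. After n - 1 steps, rank r
    # holds the fully reduced chunk (r + 1) % n.
    for step in range(n - 1):
        msgs = []  # collect all sends first so every step is "simultaneous"
        for r in range(n):
            chunk = (r - step) % n
            msgs.append(((r + 1) % n, chunk, data[r][chunk]))
            sent[r] += 1
        for dst, chunk, value in msgs:
            data[dst][chunk] += value

    # Phase 2, all-gather: each fully reduced chunk travels around the ring,
    # overwriting the partial copies held by the other ranks.
    for step in range(n - 1):
        msgs = []
        for r in range(n):
            chunk = (r + 1 - step) % n
            msgs.append(((r + 1) % n, chunk, data[r][chunk]))
            sent[r] += 1
        for dst, chunk, value in msgs:
            data[dst][chunk] = value

    return data, sent


def allreduce_bandwidths(size_bytes, seconds, n_ranks):
    """Return (algbw, busbw) in bytes/s for an all-reduce of size_bytes."""
    algbw = size_bytes / seconds
    # In a ring, each rank sends 2 * (n - 1) chunks of size_bytes / n.
    busbw = algbw * 2 * (n_ranks - 1) / n_ranks
    return algbw, busbw


if __name__ == "__main__":
    n = 72  # GPUs in one GB200 NVL72 NVLink domain
    buffers = [[r * n + c for c in range(n)] for r in range(n)]
    reduced, sent = ring_allreduce(buffers)
    expected = [sum(buf[c] for buf in buffers) for c in range(n)]
    assert all(row == expected for row in reduced)
    print(f"chunks sent per rank: {sent[0]} (2 * (n - 1) = {2 * (n - 1)})")

    algbw, busbw = allreduce_bandwidths(size_bytes=1e9, seconds=0.01, n_ranks=n)
    print(f"algbw = {algbw / 1e9:.1f} GB/s, busbw = {busbw / 1e9:.1f} GB/s")
```

With 72 ranks each GPU sends 142 chunks. Because the 2(n-1)/n factor approaches 2 as the rank count grows, bus bandwidth rather than algorithm bandwidth is the figure that can be compared against the interconnect's link speed regardless of how many GPUs take part.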

Live Training with CoreWeave’s Sunk

The GB200 NVL-72 was also tested with a live training run of a Megatron model, scheduled with Slurm on Kubernetes (Sunk). The run validated the rack under a real workload and demonstrated the resulting load on the cooling and power infrastructure.

Rob Roy’s Evo Chamber

The NVL72 is housed in Rob Roy’s Evo Chamber, which provides an impressive 1MW of power and cooling capacity per rack. That capacity combines 250kW of air cooling with 750kW of direct-to-chip liquid cooling, giving headroom for the most demanding AI and HPC workloads. The chamber is designed to keep power usage efficient and thermals under control while supporting next-generation computing requirements.

Conclusion

CoreWeave is a clear industry leader when it comes to providing AI infrastructure as a service. Much of its success is due to its ability to onboard the latest AI infrastructure faster than other clouds. The new Dell GB200 NVL-72 systems represent a new era in high-performance computing, combining cutting-edge GPU performance, advanced cooling, and energy efficiency to meet the demands of AI, scientific research, and data-intensive applications. That is a massive win for CoreWeave's customers running AI workloads at scale.