Quantum-Safe Storage is critical as Q-Day nears. Learn how vendors are securing data with post-quantum cryptography before it’s too late.

As the predicted “Q-Day” approaches—the point at which quantum computers can break widely used public-key encryption—organizations across government, finance, cloud, and enterprise IT are racing to harden their infrastructure. While estimates vary, NSA and NIST project Q-Day could arrive as early as 2033, forcing enterprises to rethink their approach to cryptographic security. The most immediate threat isn’t just future decryption—it’s the “harvest now, decrypt later” (HNDL) strategy already used by cybercriminals and nation-state actors. Sensitive data stolen today under RSA-2048 or ECC encryption could be decrypted once quantum computers reach sufficient scale, exposing everything from financial transactions to government secrets.

In response, governments, cloud providers, and hardware vendors are rapidly shifting to quantum-safe encryption, implementing new NIST-approved algorithms, and updating their security architectures. This transition isn’t just about upgrading encryption; it requires cryptographic agility, ensuring storage appliances, servers, and network protocols can seamlessly swap cryptographic algorithms as new threats emerge. Symmetric encryption and hashing methods like AES-256 and SHA-512 are far more resistant, but they are not immune: Grover’s algorithm roughly halves their effective strength against brute-force search. The public-key algorithms used for key exchange and signatures are the ones that must be replaced outright, which is accelerating the need for post-quantum cryptographic (PQC) standards.

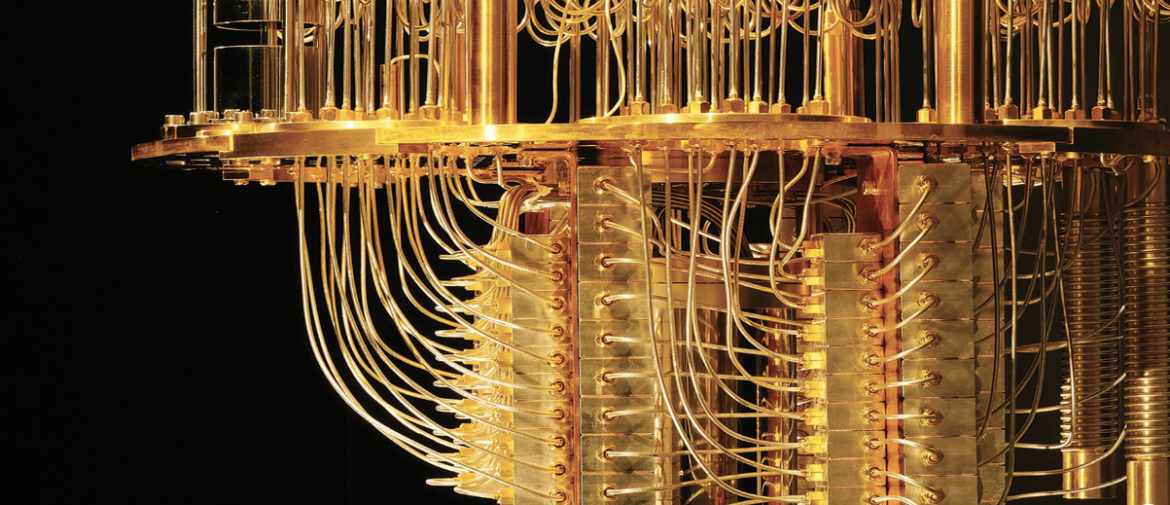

With breakthroughs in error correction and qubit scaling from IBM, Google, AWS, and Microsoft, the quantum race is moving faster than expected. Google’s Willow chip recently performed a computation that would take the world’s fastest supercomputer 10 septillion years, and with IBM and AWS developing quantum-safe cloud solutions, the shift to post-quantum cryptography (PQC) is no longer theoretical. Companies that fail to adapt risk compliance violations, massive data breaches, and reputational damage—making quantum-safe security a top priority for every enterprise storing long-term sensitive data.

AI-Generated photo of a quantum computer

Why is Quantum-Safe Security Critical?

In recent months, Amazon, Google, Microsoft, and others have made advancements in quantum computing. Google announced that its new Willow quantum chip made massive improvements over any other quantum computer known to the public. Even though Google’s Willow chip has only 105 qubits, and IBM’s Heron R2 chip has 156, they are still strong competitors. What sets Willow apart is its extremely low error rate compared to the current offerings. IBM’s Heron R2 2Q error rate is 0.371%, and its readout error rate is 1.475%, while Google’s Willow 2Q error rate is 0.14% (+/- 0.05%) with a readout error rate of 0.67% (+/- 0.51%). Even though there are larger quantum computers, such as IBM’s Condor with 1,121 qubits and Atom Computing’s second-generation 1,225 qubit system, Heron and Willow are significantly faster due to the lower error rates. To put this into perspective, IBM’s Heron R2 is said to be roughly 3-5x faster than its Condor chip.

The speed of Willow and Heron R2 shows a significant improvement in quantum computing technology because both outperform chips with higher qubit counts. Since quantum computers can already complete certain computations far faster than classical machines, the gains in the new chips are closing the gap to Q-Day quicker than expected. Google’s Willow chip performed a benchmark computation in under 5 minutes, which would have taken ORNL’s Frontier supercomputer 10 septillion years to complete. Quantum computers are developed quietly until the unveiling, raising questions about the next release.

At this point, “Q-Day” is rumored to be in the 2030s, when quantum computers can break 2048-bit public-key encryption. This poses considerable risks to data storage since quantum computers will reach the point where they can break this encryption in a matter of weeks or days. In contrast, classical supercomputers could take trillions of years to crack it. Not only is the physical technology for quantum computers a big concern, but the algorithms are as well. Attacks on public-key encryption rely on Shor’s algorithm, while Grover’s algorithm speeds up brute-force searches against symmetric keys and hashes. Any significant algorithmic or hardware improvement could bring Q-Day even closer than previously expected.

Currently, the most pressing risk comes from “harvest now, decrypt later” attacks. These attacks are prevalent today, even though quantum computers have not reached the point of decrypting current algorithms. Attackers steal data encrypted with algorithms that are not quantum-safe and then, once the technology is available, crack it with quantum computers. This means that if you store data that is not quantum-safe, that data is vulnerable. The primary targets of these attacks are data types that will still be valuable when decryption becomes an option. Typical targets include SSNs, names, dates of birth, and addresses. Other targets may be bank account numbers, tax IDs, and other financial or personal identifying data. However, information like credit and debit card numbers is less likely to remain valuable as these numbers rotate over time. Since current data is vulnerable even before Q-Day, appropriate measures should be taken to ensure your environment is quantum-safe.
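One common way to reason about harvest-now-decrypt-later exposure is Mosca’s inequality: data is at risk when the number of years it must stay confidential, plus the years needed to migrate to quantum-safe cryptography, exceeds the years until a capable quantum computer exists. The sketch below is a minimal illustration; the function name, dataset names, and every number in it are assumptions chosen for the example, not estimates from any vendor or agency.

```python
def hndl_at_risk(shelf_life_years: float,
                 migration_years: float,
                 years_to_q_day: float) -> bool:
    """Mosca's inequality: at risk when shelf life + migration time > time to Q-Day."""
    return shelf_life_years + migration_years > years_to_q_day


# Illustrative inputs only; substitute your own estimates.
datasets = {
    "ssn_records": 50,   # assumed to stay sensitive for decades
    "card_numbers": 3,   # assumed to rotate every few years
}
MIGRATION_YEARS = 4      # assumed time to roll out PQC
YEARS_TO_Q_DAY = 8       # assumed planning horizon

for name, shelf_life in datasets.items():
    risk = hndl_at_risk(shelf_life, MIGRATION_YEARS, YEARS_TO_Q_DAY)
    print(f"{name}: {'at risk' if risk else 'lower risk'}")
```

With these sample numbers, the long-lived identity records are flagged while the short-lived card numbers are not, which matches the distinction drawn above.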

How Many Qubits Does It Take to Break Encryption?

There’s no single answer to how many qubits it takes to break cryptographic methods. The number of qubits required can vary depending on the targeted algorithm and the approach used to attack it. However, for some of the most widely used encryption schemes today, researchers often refer to estimates based on Shor’s algorithm, which is designed for efficiently factoring large numbers and computing discrete logarithms—operations that underpin the security of many public-key cryptographic systems.

For instance, breaking RSA-2048, a common encryption standard, would require several thousand logical qubits. The exact number varies depending on the efficiency of the quantum algorithms and error-correction methods used, but estimates typically range from around 2,000 to 10,000 logical qubits. For symmetric encryption methods like AES, a quantum brute-force attack using Grover’s algorithm would also require many logical qubits, and the larger obstacle is the number of sequential operations Grover’s algorithm needs, which remains impractically high for 256-bit keys.

In short, the number of qubits required is not a fixed value but rather a range that depends on the encryption algorithm, the quantum algorithm used to break it, and the specifics of the quantum hardware and error-correction techniques employed.
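For the symmetric case, the usual rule of thumb can be written down directly. Grover’s algorithm searches an unstructured space of N candidates in roughly √N steps, so an n-bit key offers about n/2 bits of security against a quantum brute-force search. The sketch below applies only that rule; it ignores the cost of running the cipher as a quantum circuit and all error-correction overhead, and the function name is illustrative.

```python
def grover_effective_bits(key_bits: int) -> float:
    """Searching 2**n keys takes about 2**(n/2) Grover steps, i.e. n/2 bits of work."""
    return key_bits / 2


for key_bits in (128, 192, 256):
    effective = grover_effective_bits(key_bits)
    print(f"AES-{key_bits}: about {effective:.0f}-bit security against Grover search")
```

This is why guidance for the quantum era tends to favor 256-bit symmetric keys: even after halving, the remaining search space is far out of reach.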

Key Players in the Quantum Computing Race

Amazon AWS

Amazon Web Services (AWS) introduced Ocelot, an innovative quantum computing chip designed to overcome one of the most significant barriers in quantum computing: the prohibitive cost of error correction. By incorporating a novel approach to error suppression from the outset, the Ocelot architecture represents a breakthrough that could bring practical, fault-tolerant quantum computing closer to reality. Although the Ocelot announcement is not specific to quantum-safe security, it shows how quickly quantum computing is advancing.

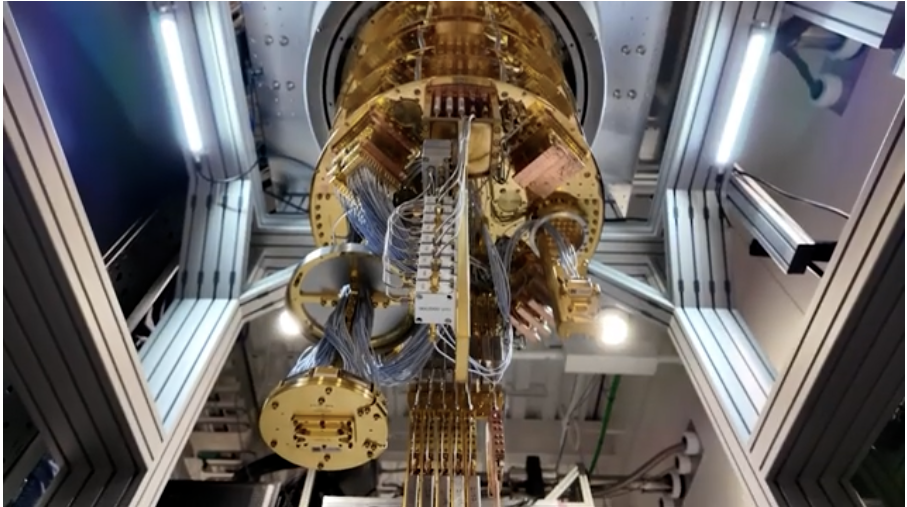

Quantum computers are highly sensitive to their surroundings. Slight disturbances, such as vibrations, temperature fluctuations, or even cosmic rays, can disrupt qubits, causing calculation errors. Historically, quantum error correction has involved encoding quantum information across multiple qubits, creating “logical” qubits that detect and fix errors. However, current error correction approaches require vast resources, making large-scale quantum computing costly and complex.

A Novel Approach to Quantum Error Correction

Developed by the AWS Center for Quantum Computing at Caltech, Ocelot was built from the ground up with error correction as its foundation. This approach departs from the traditional method of retrofitting existing architectures to handle errors. Instead, Ocelot uses “cat qubits,” inspired by Schrödinger’s cat thought experiment, which inherently suppress certain errors. This built-in error resilience drastically reduces the resources needed for error correction, potentially cutting the cost by up to 90%.

Ocelot combines these cat qubits with additional quantum error correction components on a scalable silicon microchip, leveraging microelectronics industry manufacturing techniques. This design ensures the chip can be produced in larger quantities at lower costs, addressing a key obstacle in quantum computing’s path to widespread adoption.

According to Oskar Painter, AWS Director of Quantum Hardware, this new approach could accelerate the development of practical quantum computers by up to five years. Ocelot sets the stage for applying quantum computing to complex, real-world problems by lowering resource requirements and enabling more compact, reliable quantum systems. These include advancing drug discovery, creating new materials, optimizing supply chains, and improving financial forecasting models.

The prototype Ocelot chip comprises two integrated silicon microchips bonded in a stack. The quantum circuit elements are formed from thin layers of superconducting material, including tantalum, which enhances the quality of the chip’s oscillators, the core components responsible for maintaining stable quantum states. Each chip is just 1 cm², yet it houses 14 critical components: five data qubits (cat qubits), five buffer circuits for stabilization, and four error-detection qubits.

A Vision for the Future

While Ocelot is still in the prototype phase, AWS is committed to ongoing research and development. Painter notes that the journey to fault-tolerant quantum computing will require continuous innovation and collaboration with the academic community. By rethinking the quantum stack and integrating new findings into the engineering process, AWS aims to build a robust foundation for the next generation of quantum technologies.

AWS has published its findings on Ocelot in a peer-reviewed Nature article and on the Amazon Science website, providing deeper technical insights into the chip’s architecture and capabilities. AWS’s investment in foundational quantum research and scalable solutions will help transform quantum computing’s potential into real-world breakthroughs as the research progresses.

Google Research (Willow)

In December 2024, Google Research introduced Willow, a breakthrough in quantum error correction that could significantly accelerate the timeline for practical quantum computing. While Google has not explicitly announced post-quantum cryptographic advancements, Willow’s error suppression and scalability improvements make it a major step toward fault-tolerant quantum systems.

One of the long-standing challenges in quantum computing has been managing error rates, which increase as more qubits are added. Willow addresses this by demonstrating exponential error suppression—as the number of qubits grows, the system becomes significantly more stable and reliable. In testing, Willow achieved a benchmark computation in under five minutes—a task that would take ORNL’s Frontier supercomputer over 10 septillion years to complete, a number that far exceeds the universe’s age.

Google’s key breakthrough lies in its scalable quantum error correction approach. Each time the encoded qubit lattice was increased from 3×3 to 5×5 to 7×7, the encoded error rate was cut in half. This proves that as more qubits are added, the system does not just grow—it becomes exponentially more reliable. This marks a significant milestone in quantum error correction, a challenge researchers have pursued for nearly three decades.
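The scaling described above can be sketched numerically. If each step up in lattice size (3 to 5 to 7) halves the encoded error rate, the logical error rate at odd code distance d is the d=3 rate divided by 2 raised to (d − 3)/2. The sketch below also counts physical qubits per logical qubit, assuming a rotated surface-code layout with d² data qubits and d² − 1 measurement qubits; that layout, the function names, and the starting error rate are assumptions for illustration rather than figures from Google.

```python
def logical_error_rate(distance: int, eps_d3: float, lam: float = 2.0) -> float:
    """Error rate at odd code distance d, given the d=3 rate and suppression factor lam."""
    if distance < 3 or distance % 2 == 0:
        raise ValueError("distance must be an odd integer >= 3")
    steps = (distance - 3) // 2          # number of lattice-size increases from d=3
    return eps_d3 / lam ** steps


def surface_code_qubits(distance: int) -> int:
    """Physical qubits in one rotated surface-code patch: d*d data + (d*d - 1) measure."""
    return 2 * distance * distance - 1


EPS_D3 = 3e-3  # hypothetical starting value, not a measured figure

for d in (3, 5, 7, 9, 11):
    rate = logical_error_rate(d, EPS_D3)
    print(f"d={d:2d}  physical qubits={surface_code_qubits(d):3d}  logical error={rate:.2e}")
```

The trade-off is visible in the output: error rates fall geometrically with distance, while the qubit count per logical qubit grows quadratically, which is why thousands of logical qubits translate into far larger physical machines.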

While Willow’s current 105-qubit design may seem modest compared to IBM’s 1,121-qubit Condor, its drastically lower error rate and scalable architecture position it as a potential game-changer in the quantum computing arms race.

IBM

IBM’s advancements in quantum computing and artificial intelligence place the company at the forefront of technological innovation. From leading efforts in post-quantum cryptography to releasing cutting-edge AI models, IBM continues to demonstrate a commitment to shaping the future of secure, intelligent enterprise solutions.

IBM has positioned itself as a leader in the post-quantum cryptography landscape, a vital area of research as quantum computing evolves. The company’s significant contributions to quantum-safe cryptographic standards include the development of several algorithms that have been recognized as benchmarks in the field. Notably, two IBM algorithms—ML-KEM (formerly CRYSTALS-Kyber) and ML-DSA (formerly CRYSTALS-Dilithium)—were officially adopted as post-quantum cryptography standards in August 2024. These algorithms were created in collaboration with top academic and industry partners, representing a critical step toward encryption methods capable of resisting quantum attacks.

In addition, IBM played a key role in another significant standard, SLH-DSA (formerly SPHINCS+), which was co-developed by a researcher now at IBM. The company’s FN-DSA algorithm (formerly FALCON) has also been selected for future standardization to further establish its leadership. These achievements highlight IBM’s ongoing effort to define and refine the cryptographic tools to secure data in a post-quantum world.

Beyond algorithm development, IBM has begun integrating these quantum-safe technologies into its cloud platforms. By delivering practical, scalable solutions that enterprise environments can adopt, IBM underscores its commitment to helping organizations safeguard their data against quantum-based threats. This comprehensive approach—creating new algorithms, establishing industry standards, and real-world deployment—positions IBM as a trusted partner for enterprises preparing for a post-quantum future.

Granite 3.2

Alongside its quantum computing efforts, IBM is also advancing AI with the release of the Granite 3.2 model family. These AI models range from smaller 2-billion-parameter configurations to more extensive 8-billion-parameter options, offering a versatile lineup tailored to various enterprise needs. Among these are several specialized models designed to handle distinct tasks:

- Vision Language Models (VLMs): Capable of understanding and processing tasks that combine image and text data, such as reading documents.

- Instruct models with reasoning support: Optimized for more complex instruction-following and reasoning tasks, enabling improved performance on benchmarks.

- Guardian models: Safety-focused models that build upon prior iterations and are fine-tuned to deliver more secure and responsible content handling.

IBM’s portfolio also includes time series models (formerly referred to as TinyTimeMixers, or TTMs) designed for analyzing data that changes over time. These models can forecast long-term trends, making them valuable for predicting financial market movements, supply chain demand, or seasonal inventory planning.

As with its quantum advancements, IBM’s AI models benefit from ongoing evaluation and refinement. The Granite 3.2 lineup has shown strong performance, particularly in reasoning tasks where the models can rival state-of-the-art (SOTA) competitors. However, questions remain regarding the transparency of the testing process. The current benchmarks highlight the strength of IBM’s models, but some techniques, such as inference scaling, may have given Granite an advantage. Importantly, these techniques aren’t unique to IBM’s models; competitors that adopt them could surpass Granite in similar tests.

Clarifying how these benchmarks were conducted and acknowledging that the underlying techniques can be applied across models would help paint a fairer picture of the competitive landscape. This transparency ensures that enterprises fully understand the capabilities and limitations of the Granite models, allowing them to make informed decisions when adopting AI solutions.

By integrating cryptographic standards and developing advanced AI models, IBM is delivering a comprehensive suite of technologies designed to meet the needs of modern enterprises. Its approach to post-quantum cryptography sets the stage for a secure future, while the Granite 3.2 family showcases AI’s potential to transform business operations.

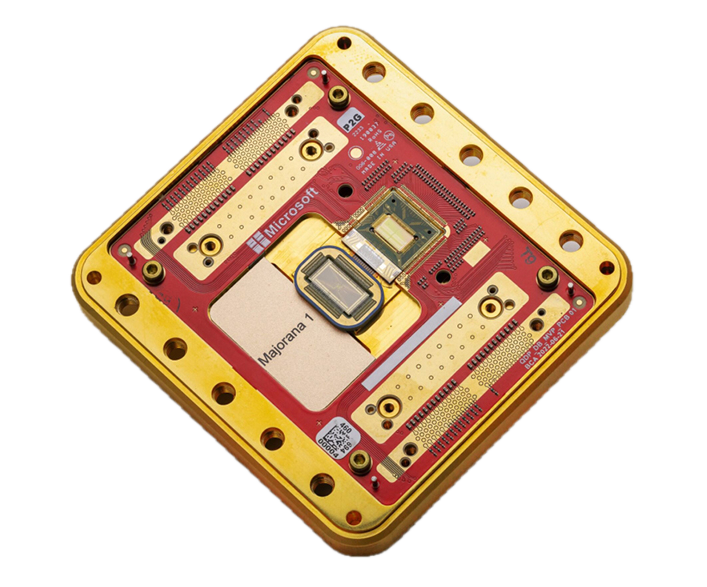

Microsoft

Microsoft recently announced the Majorana 1 quantum chip. It is built using a breakthrough material called a topoconductor and is the world’s first quantum chip powered by a Topological Core. The chip relies on a new state of matter that Microsoft says may shorten the timeline for developing meaningful quantum computers from decades to years. This topological approach enables the creation of quantum systems capable of scaling to a million qubits on a single chip. Such an advancement could make it possible to address problems that today’s combined worldwide computational power cannot solve.

Although Microsoft has not announced a post-quantum crypto solution, it follows established security standards and is prepared to offer a hybrid solution that utilizes classical and quantum computing.

What’s the impact of being unprepared?

- Encryption Vulnerabilities: Once large-scale quantum machines become a reality, classical encryption methods like RSA and ECC could be broken in practical timeframes.

- Post-Quantum Algorithms: To address this threat, cryptographers and tech vendors are developing new algorithms designed to resist quantum attacks.

- Data Protection and Compliance: Industries handling sensitive data—finance, healthcare, government—must stay ahead of quantum threats to meet regulatory standards and protect customer information.

- Hardware and Software Updates: Implementing post-quantum cryptography requires updates to existing infrastructure, impacting everything from servers and storage devices to network equipment and software-based security tools.

- Long-Term Strategy: Early planning helps organizations avoid rushed migrations, ensuring that data remains secure even if quantum computing evolves more rapidly than expected.

What Does It Mean to Be Quantum-Safe?

Being quantum-safe means ensuring that an entire IT infrastructure—servers, storage, networks, and applications—is protected against potential quantum computing attacks. This involves transitioning to NIST-approved post-quantum cryptographic (PQC) algorithms designed to withstand attacks from quantum computers. Unlike traditional encryption, which relies on integer factorization or elliptic-curve cryptography (ECC), quantum-safe encryption is based on structured lattices, stateless hashes, and NTRU lattices, which are much harder for quantum computers to crack.

One of the biggest challenges in this transition is cryptographic agility: the ability to quickly swap encryption algorithms as vulnerabilities are discovered. Many current cryptographic methods are deeply embedded into firmware, software, and hardware security chips, making this transition complex. Organizations that fail to adopt cryptographic agility may struggle to update their security posture as quantum threats emerge.
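In software, agility usually comes down to two habits: never hard-code an algorithm at the call site, and store an algorithm identifier alongside the protected data so old and new material can coexist during a migration. The sketch below illustrates that pattern with message authentication codes from Python’s standard library as stand-ins. It does not use post-quantum algorithms, and the registry, tag format, and function names are assumptions made for this example.

```python
import hashlib
import hmac

# Registry of available algorithms, keyed by an identifier stored with the data.
MAC_ALGORITHMS = {
    "hmac-sha256": hashlib.sha256,
    "hmac-sha3-256": hashlib.sha3_256,
}
DEFAULT_ALGORITHM = "hmac-sha256"  # change this one value to migrate new writes


def protect(key: bytes, message: bytes, alg: str = DEFAULT_ALGORITHM) -> str:
    """Return 'alg$hexmac' so a verifier knows which algorithm produced the tag."""
    digest = hmac.new(key, message, MAC_ALGORITHMS[alg]).hexdigest()
    return f"{alg}${digest}"


def verify(key: bytes, message: bytes, tag: str) -> bool:
    """Check a tag made by protect(), using whichever registered algorithm it names."""
    alg, _, digest = tag.partition("$")
    if alg not in MAC_ALGORITHMS:
        return False  # unknown or retired algorithm
    expected = hmac.new(key, message, MAC_ALGORITHMS[alg]).hexdigest()
    return hmac.compare_digest(expected, digest)


key = b"demo-key"
old_tag = protect(key, b"backup-manifest")                   # written before migration
new_tag = protect(key, b"backup-manifest", "hmac-sha3-256")  # written after migration
print(verify(key, b"backup-manifest", old_tag), verify(key, b"backup-manifest", new_tag))
```

Because every tag names its algorithm, retiring one later means removing a registry entry and re-protecting the affected data, rather than hunting for hard-coded calls across firmware and applications.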

Since 2016, NIST has led an eight-year post-quantum cryptography standardization effort, evaluating 69 candidate algorithms before selecting four for standardization:

- ML-KEM (formerly CRYSTALS-Kyber, FIPS 203) – Key encapsulation for public-key encryption and key exchange

- ML-DSA (formerly CRYSTALS-Dilithium, FIPS 204) – Digital signatures

- SLH-DSA (formerly SPHINCS+, FIPS 205) – Hash-based digital signatures

- FN-DSA (formerly FALCON) – Another digital signature scheme (selected for future standardization, not yet a final NIST standard)

These algorithms form the backbone of quantum-resistant security, and NIST is also working on backup standards to ensure adaptability as quantum computing advances.

For organizations running legacy systems, transitioning to quantum-safe security may require creative middleware solutions or hardware refresh cycles to ensure long-term compliance. However, enterprises prioritizing cryptographic agility today will be better positioned for a smooth migration as post-quantum cryptography becomes the new standard.

Vendors’ Approaches to Quantum-Safe Storage

Broadcom

Broadcom, a company traditionally focused on high-speed network connectivity and offloading technologies, has been heavily engaged in the security implications of quantum computing. While it hasn’t heavily publicized its quantum research efforts, Broadcom’s initiatives around secure connectivity are significant. By aligning its products with emerging cryptographic standards, Broadcom aims to ensure that its widely used networking hardware, such as Emulex-branded adapters, remains secure against future threats. This quiet, methodical approach reflects Broadcom’s broader commitment to delivering resilient infrastructure that supports the evolving demands of enterprise and data center environments. Over time, Broadcom may leverage its industry position and trusted hardware portfolio to integrate quantum-safe solutions more prominently, helping its customers navigate the shift to post-quantum standards.

We recently covered Broadcom’s new Emulex Secure Fibre Channel Host Bus Adapter (HBA), which incorporates Post-Quantum Cryptography NIST standards and Zero Trust. These new HBAs offload encryption from the host system to ensure no adverse performance effects. During our testing, the Emulex HBAs performed as advertised, with less than a 3% performance hit.

Emulex HBAs process all Encrypted Data In Flight (EDIF) in hardware. The HBAs have 8-core SoCs, which manage the workload and direct the data packets through the encryption offload engine. Since the encryption is offloaded, the host CPU is unaffected by those encryption operations.

Dell

Dell has steadily been laying the groundwork for post-quantum readiness. By embedding hardware-based encryption capabilities into its servers, storage arrays, and data protection appliances, Dell offers a secure foundation for enterprise workloads. Its collaboration with industry partners and adherence to emerging quantum-safe standards reflect a deliberate strategy to help customers future-proof their infrastructures.

Although Dell’s quantum-related initiatives are not as publicly visible as some of its peers, its focus on resilience, reliability, and seamless integration into existing IT environments demonstrates a strong commitment to its customers. As the post-quantum era approaches, Dell’s blend of industry partnerships and solid infrastructure solutions will likely provide enterprises with a straightforward path to secure their data and operations.

Dell is aware that quantum computing will seriously impact the current security landscape, making quantum computing a powerful tool for attackers. Cryptography, foundational to data and system security, must evolve. Dell will assist enterprises transitioning to quantum-safe security in the following ways:

- Engage in the PQC ecosystem: Provide extensive resources and expertise to help businesses stay ahead of advancements in quantum computing and post-quantum cryptography (PQC). Dell’s insights can help companies anticipate and effectively navigate future challenges.

- Evaluate security postures: Assess the data and systems in your environment to identify potential vulnerabilities in cryptographic systems and prepare for future threats.

- Invest in Quantum-Safe Solutions: Dell is committed to providing cutting-edge solutions to explore and implement PQC strategies. It collaborates with industry experts to ensure alignment with emerging standards and technologies.

- Craft a transition roadmap: Develop and execute detailed transition plans, integrating quantum-safe infrastructure with clear timelines and resource commitments. Technology consumers need to prepare now to adopt quantum-resistant systems by 2035.

- Foster industry collaboration: Actively participate in industry forums such as the Quantum Economic Development Consortium (QED-C) and Quantum Cryptography and Post-Quantum Cryptography Working Groups as well as other partnerships to share insights and best practices, driving collective progress in quantum security.

As we near the quantum era, enterprise resilience depends on anticipating and adapting to the technological shift ahead. Dell customers are aligning with the standardization of post-quantum cryptography algorithms. Governments are mandating quantum-resistant systems, with significant transitions expected around 2030 to 2033. While PQC implementation may take a few years, organizations should adopt security best practices today to ease tomorrow’s transition. By embracing PQC and preparing with Dell Technologies, enterprises can secure operations, drive innovation, and thrive in a quantum-powered world. Having strategic foresight and utilizing proactive measures are essential.

Post-Quantum Cryptography: A Strategic Imperative for Enterprise Resilience

IBM

Two of the newly released NIST PQC standards were developed by cryptography experts at IBM Research in Zurich, while the third was co-developed by a scientist now working at IBM Research. IBM has established itself as a leader in PQC research, driven by a commitment to a quantum-safe future through its portfolio of IBM Quantum Safe™ products and services. As a side note, IBM worked on a standard for encryption in 1970 that was adopted by the NIST predecessor, the US National Bureau of Standards.

We recently published a review of the IBM FlashSystem 5300 that discusses IBM’s focus on future-proofing its storage products and everything that IBM supports. Read our review of the FlashSystem 5300.

FlashCore Modules are the core building blocks for all NVMe FlashSystem storage arrays. IBM FlashCore Module 4 (FCM4) supports:

- Quantum Safe Cryptography (QSC)

- Asymmetrical Cryptographic Algorithms

- CRYSTALS-Dilithium signatures for authentication and FW verification

- CRYSTALS-Kyber for secure key transport of the unlock PIN transmitted by IBM FlashSystem controllers to FCMs

- Customer data encrypted in flash memory with XTS-AES-256

- Two other algorithms selected by NIST, FALCON and SPHINCS+, are not currently used in the FlashSystem.

Teams at IBM Quantum Safe and IBM Research have launched several initiatives to secure IBM’s quantum computing platform and hardware against potential “harvest now, decrypt later” cyber threats. In addition, IBM is forging partnerships with both quantum and open-source communities to protect its clients and ensure global quantum safety. Central to these efforts is a comprehensive plan to integrate quantum-safe security protocols across IBM’s hardware, software, and services, starting with the IBM Quantum Platform.

The IBM Quantum Platform, accessible via the Qiskit software development kit, provides cloud-based access to IBM’s utility-scale quantum computers. Its transition to quantum-safe security will occur in multiple phases, with each stage extending post-quantum cryptography into additional hardware and software stack layers. IBM has implemented quantum-safe Transport Layer Security (TLS) on the IBM Quantum Platform. This security measure, powered by the IBM Quantum Safe Remediator™ tool’s Istio service mesh, ensures quantum-safe encryption from client workstations through IBM Cloud’s firewall and into the cloud services. While IBM continues to support standard legacy connections, researchers and developers will soon be able to submit quantum computational tasks entirely via quantum-safe protocols.

Courtesy of IBM from the Responsible Quantum Computing blog.

IBM’s commitment to quantum safety also includes a robust portfolio of tools under the IBM Quantum Safe brand. These tools include the IBM Quantum Safe Explorer™, IBM Quantum Safe Posture Management, and IBM Quantum Safe Remediator. Each tool serves a distinct role:

- IBM Quantum Safe Explorer helps application developers and CIOs scan their organization’s application portfolios, identify cryptographic vulnerabilities, and generate Cryptographic Bills of Materials (CBOMs) to guide quantum-safe implementation.

- IBM Quantum Safe Posture Management provides a comprehensive inventory of an organization’s cryptographic assets, enabling tailored cryptographic policies, risk assessments, and contextual analyses of vulnerabilities.

- IBM Quantum Safe Remediator protects data in transit by enabling quantum-safe TLS communications. It also includes a Test Harness that allows organizations to measure the performance impact of post-quantum algorithms before making system-wide updates.

While IBM Quantum Safe Explorer and IBM Quantum Safe Remediator are already available, IBM Quantum Safe Posture Management is currently in private preview. As IBM expands its Quantum Safe Portfolio, it focuses on delivering complete visibility and control over cryptographic security, empowering enterprises to transition seamlessly to quantum-safe systems.
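The idea behind a cryptographic inventory can be pictured with a toy example: list where each algorithm is used, then flag the entries that rely on quantum-vulnerable public-key schemes. The sketch below is not IBM’s tooling and does not follow any CBOM schema; the record fields, sample systems, and classification policy are all assumptions made for illustration.

```python
from dataclasses import dataclass

# Rough classification by algorithm family; an illustrative policy, not an official one.
QUANTUM_SAFE = ("ML-KEM", "ML-DSA", "SLH-DSA", "AES-256")
QUANTUM_VULNERABLE = ("RSA", "ECDSA", "ECDH", "DH", "DSA")


@dataclass
class CryptoAsset:
    system: str      # where the algorithm is used (hypothetical names below)
    algorithm: str   # algorithm label as recorded in the inventory


def classify(asset: CryptoAsset) -> str:
    """Return 'quantum-safe', 'vulnerable', or 'review' based on the algorithm prefix."""
    name = asset.algorithm.upper()
    if name.startswith(QUANTUM_SAFE):        # checked first so ML-DSA is not matched as DSA
        return "quantum-safe"
    if name.startswith(QUANTUM_VULNERABLE):
        return "vulnerable"
    return "review"                          # anything unrecognized needs a human look


inventory = [
    CryptoAsset("backup-replication-tls", "RSA-2048"),
    CryptoAsset("array-data-at-rest", "AES-256-XTS"),
    CryptoAsset("firmware-signing", "ML-DSA-65"),
    CryptoAsset("legacy-vpn", "3DES"),
]

for asset in inventory:
    print(f"{asset.system:24s} {asset.algorithm:12s} {classify(asset)}")
```

Real inventories also need to capture key sizes, protocol versions, certificate lifetimes, and where keys are stored, which is the kind of detail dedicated scanning tools are built to collect.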

Beyond IBM’s proprietary efforts, significant strides have been made in the open-source community. Recognizing the critical role of open-source software (OSS) in global computing, IBM has advocated for building community and governance around post-quantum cryptography tools. In collaboration with the Linux Foundation and the Open Quantum Safe community, IBM helped establish the Post-Quantum Cryptography Alliance (PQCA) in 2023. This alliance fosters industry-wide cooperation and the advancement of post-quantum cryptography, supported by contributions from major players like AWS, NVIDIA, and the University of Waterloo.

IBM’s contributions to the open-source ecosystem include:

- Open Quantum Safe: A foundational project enabling post-quantum cryptography in Linux and other environments.

- Post-Quantum Code Package: High-assurance software implementations of standards-track PQC algorithms.

- Sonar Cryptography: A SonarQube plugin that scans codebases for cryptographic assets and generates CBOMs.

- OpenSSL and cURL Enhancements: Adding post-quantum algorithm support and observability features.

- HAProxy and Istio Contributions: Improving observability and configuring quantum-safe curves for secure communications.

- Python Integration: Enabling quantum-safe algorithm configuration for TLS within Python’s OpenSSLv3 provider.

These contributions illustrate IBM’s involvement in advancing open-source quantum safety, from pioneering the Qiskit toolkit to driving community efforts that will secure open-source software in the quantum era.

As quantum computers progress toward practical utility, the potential threat of breaking public-key encryption becomes more pressing. While it may be years before this becomes a reality, the risk of “harvest now, decrypt later” schemes demands immediate action. IBM has been at the forefront of developing and sharing post-quantum encryption algorithms as part of the NIST competition. IBM will continue to lead the Post-Quantum Cryptography Alliance, incorporate NIST feedback, and guide the global transition to quantum-safe methods.

To support enterprise clients, IBM provides a comprehensive suite of tools and services to enable quantum-safe transformation. These resources help organizations replace at-risk cryptography, enhance cryptographic agility, and maintain visibility over cybersecurity postures.

IBM has created a guide to help determine what is required for deploying cryptography. Download Implementing Cryptography Bill of Materials to get started on implementing post-quantum systems and applications.

NetApp

NetApp has announced a solution called Quantum-Ready Data-at-Rest Encryption by NetApp. This solution utilizes AES-256 encryption to enforce current NSA recommendations to protect against quantum attacks.

NetApp’s introduction of Quantum-Ready Data-at-Rest Encryption highlights the company’s approach to storage security. By implementing AES-256 encryption in alignment with NSA recommendations, NetApp provides customers with a safeguard against the potential risks posed by quantum computing. Beyond the technical implementation, NetApp’s emphasis on cryptographic agility—such as the ability to adapt to new encryption standards—sets it apart. This focus ensures that as quantum-safe algorithms mature and become standardized, NetApp’s storage solutions can evolve alongside them. By combining this agility with a strong reputation in data management and hybrid cloud environments, NetApp positions itself as a reliable partner for organizations preparing for a post-quantum world.

NetApp offers an integrated, quantum-ready encryption solution adhering to the Commercial National Security Algorithm Suite, which recommends AES-256 as the preferred algorithm and key length until quantum-resistant encryption algorithms are defined (see NSA site for more details). Additionally, under the Commercial Solutions for Classified Program, the NSA advocates a layered encryption approach incorporating software and hardware layers.
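The reasoning behind the AES-256 recommendation can be worked through with simple arithmetic. Grover's algorithm offers at most a quadratic speedup for brute-force key search, so a symmetric key of k bits retains roughly k/2 bits of security against a quantum attacker. The sketch below assumes this idealized model and ignores the practical overhead of running Grover's algorithm at scale; the function name is illustrative.

```python
def grover_effective_bits(key_bits: int) -> int:
    """Approximate security level, in bits, of a symmetric key against Grover search.

    Grover's algorithm needs on the order of 2**(key_bits / 2) evaluations,
    so the effective strength is about half the key length.
    """
    return key_bits // 2


for key_bits in (128, 256):
    print(f"AES-{key_bits}: ~{grover_effective_bits(key_bits)}-bit security against Grover search")
```

Under this model, AES-256 keeps a margin of about 128 bits, which is why it is treated as an acceptable choice while post-quantum public-key algorithms are rolled out.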

NetApp Volume Encryption (NVE), a key feature in NetApp ONTAP data management software, provides FIPS 140-2 validated, AES-256 encryption via a software cryptographic module. NetApp Storage Encryption (NSE) utilizes self-encrypting drives to deliver FIPS 140-2 validated, AES-256 encryption for AFF all-flash and FAS hybrid-flash systems. These two distinct encryption technologies can be combined into a native, layered encryption solution that offers encryption redundancy and additional security: if one layer is breached, the second layer is still securing the data.

Much More to Come

While this article highlights quantum-safe initiatives from IBM, Dell, NetApp, and Broadcom, other major storage and infrastructure providers have also begun preparing for the post-quantum era. HPE has incorporated quantum-safe cryptographic capabilities into Alletra Storage MP and Aruba networking products, aligning with NIST’s PQC standards. Pure Storage has acknowledged the quantum threat and is working on integrating post-quantum security into its Evergreen architecture, ensuring seamless cryptographic updates.

Western Digital and Seagate are exploring quantum-resistant data protection strategies to secure long-term archival data. Cloud storage providers like AWS, Google Cloud, and Microsoft Azure have begun rolling out post-quantum TLS (PQTLS) for encrypted data in transit, signaling a broader industry shift toward quantum-safe storage and networking solutions. As quantum computing continues to evolve, enterprises should actively monitor vendor roadmaps to ensure long-term cryptographic resilience in their infrastructure.

Preparing for the Quantum Future

Quantum computing poses one of our most significant cybersecurity challenges, and waiting until Q-Day arrives is not an option. When 2048-bit asymmetric encryption is broken, organizations that have not adapted will find their most sensitive data exposed—potentially facing massive financial, legal, and reputational consequences. While a quantum-driven breach may not end the world, it could quickly end a company.

The good news? Quantum-safe security isn’t an overnight overhaul—it’s a strategic transition. Organizations implementing cryptographic agility today will be far better positioned to handle future threats, ensuring that software, storage, and infrastructure can evolve alongside post-quantum standards. Cloud providers, storage vendors, and security firms are already integrating NIST-approved PQC algorithms, but businesses must actively secure their own data.
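In practice, cryptographic agility often comes down to storing an algorithm identifier alongside protected data, so that the algorithm can be changed without breaking older records. The sketch below illustrates the pattern with integrity digests from Python's standard hashlib module. The registry, identifiers, and record layout are assumptions for illustration; a real deployment would apply the same pattern to encryption and signature algorithms, including the NIST-approved PQC algorithms.

```python
import hashlib

# Registry mapping algorithm identifiers to implementations.
# Adding or retiring an algorithm is a one-line change here.
DIGESTS = {
    "sha256": hashlib.sha256,
    "sha3_512": hashlib.sha3_512,
}

# Algorithm applied to new records; older records keep their own identifier.
DEFAULT_ALGORITHM = "sha3_512"


def protect(data: bytes, algorithm: str = DEFAULT_ALGORITHM) -> dict:
    """Return a record that names the algorithm used to compute its digest."""
    digest = DIGESTS[algorithm](data).hexdigest()
    return {"alg": algorithm, "digest": digest, "data": data}


def verify(record: dict) -> bool:
    """Recompute the digest with whichever algorithm the record declares."""
    expected = DIGESTS[record["alg"]](record["data"]).hexdigest()
    return expected == record["digest"]


old_record = protect(b"archived payload", algorithm="sha256")
new_record = protect(b"archived payload")
print(verify(old_record), verify(new_record))
```

Because each record declares its own algorithm, changing the default affects only new data, and existing records can be re-protected on whatever schedule the organization chooses.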

If your organization has not yet begun assessing quantum risks, this should be your wake-up call. Review NIST’s PQC recommendations, vendor roadmaps, and post-quantum migration strategies. The transition to quantum-safe security is already underway, and those who act now will be the best prepared for the future.
