NVIDIA Spectrum-X includes adaptive routing to mitigate flow collisions and optimize bandwidth utilization.

AI factories require more than high-performance compute fabrics to operate efficiently. While East-West networking plays a critical role in connecting GPUs, storage fabrics—responsible for linking high-speed storage arrays—are equally essential. Storage performance significantly impacts multiple AI lifecycle stages, including training checkpointing and inference techniques such as retrieval-augmented generation (RAG). To address these demands, NVIDIA and its storage ecosystem have extended the NVIDIA Spectrum-X networking platform to enhance storage fabric performance, accelerating time to AI insights.

Understanding Network Collisions in AI Clusters

Network collisions occur when multiple data packets attempt to traverse the same network path simultaneously, resulting in interference, delays, and, occasionally, the need for retransmission. In large-scale AI clusters, such collisions are more likely when GPUs are fully loaded or when data-intensive operations generate heavy traffic.

As GPUs process complex computations simultaneously, network resources can become saturated, leading to communication bottlenecks. Spectrum-X is designed to counter these issues by dynamically rerouting traffic and managing congestion, so that critical data flows uninterrupted without the need for custom implementations such as Meta’s Enhanced ECMP, described in the Llama 3 paper.
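To make the collision problem concrete, here is a minimal Python sketch (not NVIDIA's or Meta's implementation) that compares static hash-based ECMP placement with a simple least-loaded placement. The flow tuples, the CRC32 hash, and the function names are illustrative assumptions. Real switches use their own hash functions, and Spectrum-X makes its decisions per packet from live congestion data rather than per flow.

```python
import zlib

def ecmp_path(flow, num_paths):
    """Static ECMP: hash the flow's 5-tuple once; every packet of the flow uses that path."""
    key = "|".join(str(field) for field in flow).encode()
    return zlib.crc32(key) % num_paths  # CRC32 is a stand-in for a switch's hash function

def static_ecmp_loads(flows, num_paths):
    """Count how many flows the static hash places on each path."""
    loads = [0] * num_paths
    for flow in flows:
        loads[ecmp_path(flow, num_paths)] += 1
    return loads

def least_loaded_loads(flows, num_paths):
    """Load-aware placement: each new flow goes to the path carrying the fewest flows."""
    loads = [0] * num_paths
    for _ in flows:
        loads[loads.index(min(loads))] += 1
    return loads

# Hypothetical elephant flows: (src_ip, dst_ip, src_port, dst_port, protocol).
# 4791 is the UDP destination port used by RoCE v2; the addresses are made up.
FLOWS = [(f"10.0.0.{i}", "10.0.1.1", 49152 + i, 4791, "udp") for i in range(8)]

if __name__ == "__main__":
    print("static ECMP :", static_ecmp_loads(FLOWS, 4))
    print("least loaded:", least_loaded_loads(FLOWS, 4))
```

With only a handful of large flows, a static hash has no way to notice that two of them share a link, while the load-aware placement spreads them evenly by construction.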

Optimizing Storage Performance with Spectrum-X

NVIDIA Spectrum-X introduces adaptive routing capabilities that mitigate flow collisions and optimize bandwidth utilization. Compared to RoCE v2, the Ethernet networking protocol widely used in AI compute and storage fabrics, Spectrum-X achieves superior storage performance. Tests demonstrate up to a 48% improvement in read bandwidth and a 41% increase in write bandwidth. These advancements translate to faster execution of AI workloads, reducing training job completion times and minimizing inter-token latency for inference tasks.

As AI workloads scale in complexity, storage solutions must evolve accordingly. Leading storage providers, including DDN, VAST Data, and WEKA, have partnered with NVIDIA to integrate Spectrum-X into their storage solutions. This collaboration enables AI storage fabrics to leverage cutting-edge networking capabilities, enhancing performance and scalability.

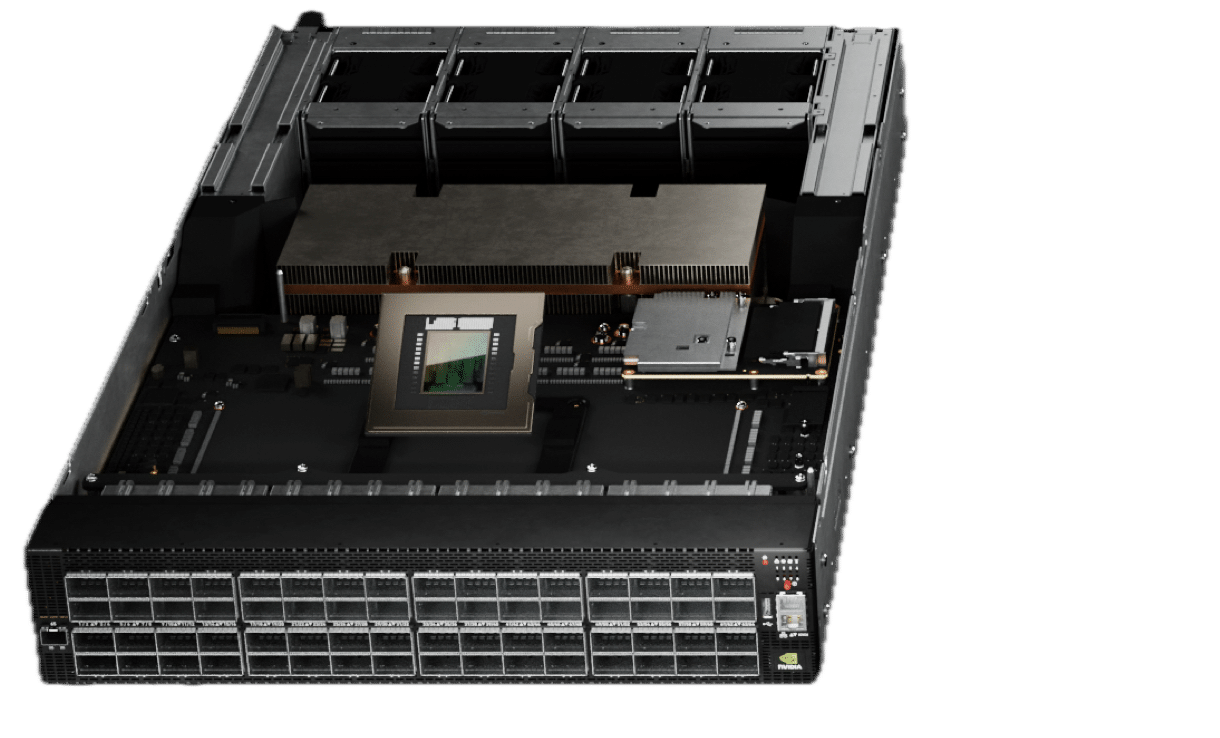

The Israel-1 Supercomputer: Validating Spectrum-X Impact

NVIDIA built the Israel-1 generative AI supercomputer as a test bed to optimize Spectrum-X performance in real-world scenarios. The Israel-1 team conducted extensive benchmarking to evaluate Spectrum-X’s impact on storage network performance. Using the Flexible I/O Tester (FIO) benchmark, they compared a standard RoCE v2 network configuration against one with Spectrum-X’s adaptive routing and congestion control enabled.

The tests spanned configurations ranging from 40 to 800 GPUs, consistently demonstrating superior performance with Spectrum-X. Read bandwidth improvements ranged from 20% to 48%, while write bandwidth saw gains between 9% and 41%. These results closely align with performance enhancements observed in partner ecosystem solutions, further validating the technology’s effectiveness in AI storage fabrics.
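As a rough way to read these percentages, the sketch below converts a bandwidth gain into the corresponding reduction in transfer time. It assumes the transfer is purely bandwidth-bound, which real workloads only approximate; the function name is illustrative.

```python
def transfer_time_reduction(bandwidth_gain_pct):
    """Fractional drop in transfer time for a bandwidth-bound transfer.

    Time scales as 1 / bandwidth, so a gain of g percent shortens the
    transfer by 1 - 1 / (1 + g / 100).
    """
    speedup = 1.0 + bandwidth_gain_pct / 100.0
    return 1.0 - 1.0 / speedup

if __name__ == "__main__":
    reported = [("read, low", 20), ("read, high", 48),
                ("write, low", 9), ("write, high", 41)]
    for label, gain in reported:
        saved = transfer_time_reduction(gain)
        print(f"{label}: +{gain}% bandwidth -> {saved:.1%} less transfer time")
```

Under that assumption, the 48% read gain corresponds to roughly a third less time spent on the same read (about 32%), not a 48% reduction.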

The Role of Storage Networks in AI Performance

Storage network efficiency is critical to AI operations. Model training often spans days, weeks, or even months, necessitating periodic checkpointing to prevent data loss from a system failure. With large-scale AI models reaching terabyte-scale checkpoint states, efficient storage network management ensures seamless training continuity.
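A back-of-the-envelope sketch shows why write bandwidth matters for checkpointing. It assumes synchronous checkpoints that pause training while the state is written. The checkpoint size, baseline bandwidth, and interval are hypothetical numbers chosen for illustration, not measurements from the article.

```python
def checkpoint_write_seconds(checkpoint_bytes, write_bandwidth_bytes_per_s):
    """Time to write one checkpoint if the write is bandwidth-bound."""
    return checkpoint_bytes / write_bandwidth_bytes_per_s

def stall_fraction(checkpoint_bytes, write_bandwidth_bytes_per_s, interval_s):
    """Share of wall-clock time spent paused, assuming synchronous checkpoints."""
    write_s = checkpoint_write_seconds(checkpoint_bytes, write_bandwidth_bytes_per_s)
    return write_s / (interval_s + write_s)

if __name__ == "__main__":
    size = 1e12        # hypothetical 1 TB checkpoint state
    baseline = 50e9    # hypothetical 50 GB/s aggregate write bandwidth
    interval = 1800    # hypothetical: one checkpoint per 30 minutes of training
    for name, bandwidth in [("baseline", baseline), ("+41% write", baseline * 1.41)]:
        seconds = checkpoint_write_seconds(size, bandwidth)
        share = stall_fraction(size, bandwidth, interval)
        print(f"{name}: {seconds:.1f} s per checkpoint, {share:.2%} of time stalled")
```

Asynchronous checkpointing would hide part of this cost, so the figures are best read as an upper bound for the assumed setup.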

RAG-based inference workloads further emphasize the importance of high-performance storage fabrics. By combining an LLM with a dynamic knowledge base, RAG enhances response accuracy without requiring model retraining. Typically stored in large vector databases, these knowledge bases necessitate low-latency storage access to maintain optimal inference performance, particularly in multi-tenant generative AI environments that handle high query volumes.

Applying Adaptive Routing, Congestion Control to Storage

Spectrum-X introduces key Ethernet networking innovations adapted from InfiniBand to improve storage fabric performance:

- Adaptive Routing: Spectrum-X dynamically balances network traffic to prevent elephant flow collisions during checkpointing and data-intensive operations. Spectrum-4 Ethernet switches analyze real-time congestion data, selecting the least congested path for each packet. Unlike legacy Ethernet, where out-of-order packets require retransmission, Spectrum-X utilizes SuperNICs and DPUs to reorder packets at the destination, ensuring seamless operation and higher effective bandwidth utilization.

- Congestion Control: Checkpointing and other AI storage operations frequently result in many-to-one congestion, where multiple clients attempt to write to a single storage node. Spectrum-X mitigates this by regulating data injection rates using hardware-based telemetry, preventing congestion hotspots that could degrade network performance.
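The two mechanisms above can be sketched as a toy model. This is an illustrative simplification under stated assumptions, not Spectrum-X's actual algorithms: queue depth stands in for real-time congestion data, a sequence-number heap stands in for SuperNIC reordering, and proportional scaling stands in for telemetry-driven rate control. All function names are hypothetical.

```python
import heapq

def spray_packets(num_packets, queue_depths):
    """Per-packet adaptive routing: send each packet to the shortest queue.

    Returns the chosen path index for every packet, in sequence order.
    """
    depths = list(queue_depths)
    paths = []
    for _ in range(num_packets):
        path = min(range(len(depths)), key=depths.__getitem__)
        depths[path] += 1  # the packet now occupies a slot in that queue
        paths.append(path)
    return paths

def reorder(arrival_seqs):
    """Destination-side reordering: release packets strictly in sequence order."""
    pending = []   # min-heap of sequence numbers that arrived early
    next_seq = 0
    delivered = []
    for seq in arrival_seqs:
        heapq.heappush(pending, seq)
        while pending and pending[0] == next_seq:
            delivered.append(heapq.heappop(pending))
            next_seq += 1
    return delivered

def throttled_rates(demands_gbps, link_capacity_gbps):
    """Many-to-one congestion control: scale senders so their sum fits the link."""
    total = sum(demands_gbps)
    scale = min(1.0, link_capacity_gbps / total) if total > 0 else 1.0
    return [demand * scale for demand in demands_gbps]

if __name__ == "__main__":
    print(spray_packets(4, [2, 0]))                # paths chosen per packet
    print(reorder([1, 0, 3, 2]))                   # packets released in order
    print(throttled_rates([100, 100, 100, 100], 200))  # four writers, one 200 Gb/s link
```

The design point the sketch captures is that per-packet spraying is only usable because the receiver restores order. Without the reorder step, the out-of-order arrivals would look like loss to a conventional transport.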

Ensuring Resiliency in AI Storage Fabrics

Large-scale AI factories incorporate an extensive network of switches, cables, and transceivers, making resilience a critical factor in maintaining performance. Spectrum-X employs global adaptive routing to quickly reroute traffic during link failures, minimizing disruptions and preserving optimal storage fabric utilization.

Seamless Integration with the NVIDIA AI Stack

In addition to Spectrum-X’s hardware innovations, NVIDIA offers software solutions to accelerate AI storage workflows. These include:

- NVIDIA Air: A cloud-based simulation tool for modeling switches, SuperNICs, and storage, streamlining deployment and operations.

- NVIDIA Cumulus Linux: A network operating system with built-in automation and API support for efficient management at scale.

- NVIDIA DOCA: An SDK for SuperNICs and DPUs, providing enhanced programmability and storage performance.

- NVIDIA NetQ: A real-time network validation tool integrating with switch telemetry for enhanced visibility and diagnostics.

- NVIDIA GPUDirect Storage: A direct data transfer technology optimizing storage-to-GPU memory pathways for improved data throughput.

By integrating Spectrum-X into storage networks, NVIDIA and its partners are redefining AI infrastructure performance. The combination of adaptive networking, congestion control, and software optimization ensures that AI factories can scale efficiently, delivering faster insights and improved operational efficiency.
