Dell unveils the PowerEdge XE8712 at GTC 2025, enhancing AI and HPC capabilities with NVIDIA Grace Blackwell Superchip integration.

At NVIDIA GTC 2025, Dell Technologies unveiled new hardware aimed at accelerating large-scale AI and High-Performance Computing (HPC), headlined by the Dell PowerEdge XE8712. Built for high density, computational performance, and energy efficiency, the XE8712 targets demanding research, AI modeling, and other compute-intensive workloads.

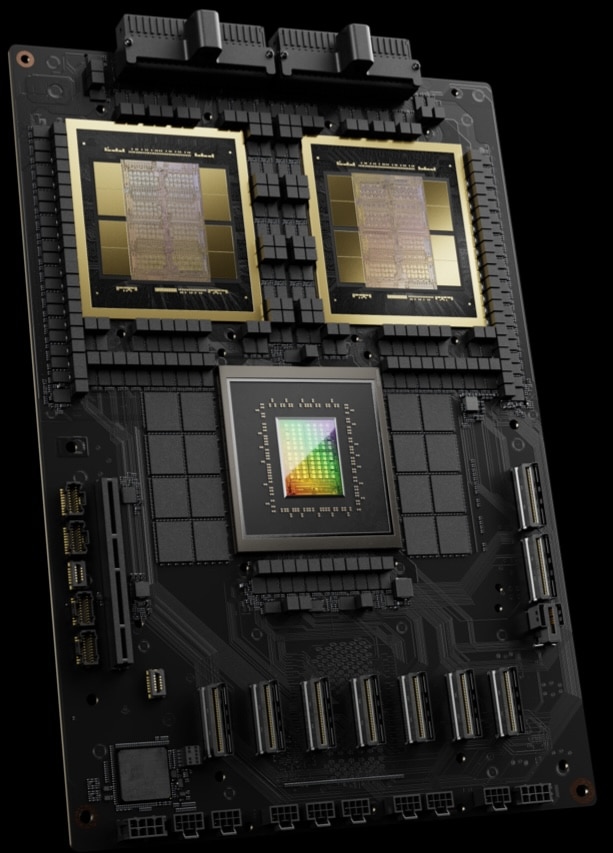

At its core, the Dell PowerEdge XE8712 uses the NVIDIA GB200 NVL4 Grace Blackwell Superchip, which pairs two NVIDIA Grace CPUs with four NVLink-connected NVIDIA B200 GPUs. This high-performance backbone is aimed at workloads such as advanced molecular simulation, training trillion-parameter AI models, and financial market forecasting.

The Versatile Dell PowerEdge XE8712

The XE8712 stands out for its density, cooling, and rack-scale versatility. It supports up to 144 NVIDIA Blackwell GPUs in a single Dell IR7000 factory-integrated rack, one of the highest GPU densities in the industry. Packing that many GPUs into a compact footprint lets organizations reduce data center floor space, along with the associated costs and operational overhead.
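The 144-GPU figure follows from the node count given later in the article (36 nodes per IR7000 rack) and the GB200 NVL4 layout of four GPUs and two Grace CPUs per node. The sketch below just reproduces that arithmetic; it assumes every node in the rack carries one NVL4 board, and the function name `rack_totals` is purely illustrative.

```python
# Rack-level processor counts for XE8712 nodes in a Dell IR7000 rack.
# Assumption: each node holds one GB200 NVL4 board (4 GPUs + 2 Grace CPUs).
NODES_PER_RACK = 36  # from the article's IR7000 description
GPUS_PER_NODE = 4    # GB200 NVL4: four B200 GPUs
CPUS_PER_NODE = 2    # GB200 NVL4: two Grace CPUs


def rack_totals(nodes: int = NODES_PER_RACK) -> dict:
    """Return GPU and CPU counts for a rack populated with `nodes` XE8712s."""
    return {"gpus": nodes * GPUS_PER_NODE, "cpus": nodes * CPUS_PER_NODE}


print(rack_totals())  # {'gpus': 144, 'cpus': 72}
```

A partially populated rack scales linearly, so `rack_totals(18)` would describe a half-filled IR7000.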

Smart Power and Advanced Cooling

Using Direct Liquid Cooling (DLC), the XE8712 supports power densities of up to 264 kW per rack. In Dell's modular IR7000 racks, it draws from disaggregated power shelves and shared power bus bars rated for up to 480 kW in total. This hybrid DLC approach captures and removes the heat generated by intensive compute loads, improving energy efficiency, sustainability, and operational reliability.
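To put the 264 kW figure in per-node terms, here is a rough back-of-the-envelope sketch. It assumes the load is spread evenly across the 36 nodes the IR7000 holds, treats the per-GPU number as an average that also absorbs CPU, memory, NIC, and pump overhead, and assumes the 480 kW bus bar rating applies to a single rack. None of these averages are Dell specifications.

```python
# Average power per node and per GPU slot at the article's 264 kW rack figure.
# Assumptions (not Dell specs): even load across nodes, per-GPU figure includes
# all non-GPU overhead, and the 480 kW rating applies to one rack.
RACK_POWER_KW = 264.0
POWER_SHELF_CAPACITY_KW = 480.0
NODES_PER_RACK = 36
GPUS_PER_NODE = 4


def power_breakdown(rack_kw: float = RACK_POWER_KW, nodes: int = NODES_PER_RACK):
    """Return (kW per node, kW per GPU slot, fraction of bus bar capacity used)."""
    per_node = rack_kw / nodes
    per_gpu = per_node / GPUS_PER_NODE
    utilization = rack_kw / POWER_SHELF_CAPACITY_KW
    return per_node, per_gpu, utilization


node_kw, gpu_kw, util = power_breakdown()
print(f"{node_kw:.2f} kW per node, {gpu_kw:.2f} kW per GPU slot, {util:.0%} of 480 kW")
# 7.33 kW per node, 1.83 kW per GPU slot, 55% of 480 kW
```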

Versatile and Scalable Rack-Scale Design

Inspired by the Open Compute Project's (OCP) Open Rack v3 (ORv3) specification, the IR7000 rack holds up to 36 XE8712 nodes. Dell's design features front-facing I/O for serviceability and quick-disconnect DLC manifolds, simplifying maintenance and future expansion. The modular structure is intended to accommodate upcoming PowerEdge servers and to adapt to the evolving power and cooling needs of AI environments.

But Wait, There’s More!

Dell also announced updates across its PowerEdge server portfolio tailored to AI-intensive use cases, including:

- PowerEdge XE7740 & XE7745 Servers: The new Dell PowerEdge XE7740 and XE7745 servers support up to eight NVIDIA RTX PRO 6000 Blackwell Server Edition GPUs, making them well suited to AI inferencing, model fine-tuning, advanced graphics workloads, and enterprise AI applications.

- Sneak Peek at the NVIDIA Blackwell Ultra Platform: Dell previewed forthcoming servers built on the NVIDIA Blackwell Ultra platform, designed for large-scale AI applications such as large language model (LLM) training. With up to 288GB of HBM3e memory per GPU, these systems are aimed at higher scalability and performance than current Blackwell configurations.

- Networking with NVIDIA ConnectX-8: Dell PowerEdge servers now support NVIDIA ConnectX-8 networking at 800Gb/s Ethernet. The added bandwidth and low latency help keep data center fabrics from becoming the bottleneck under heavy AI workloads.
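For a sense of scale, an 800Gb/s line rate works out to 100 GB/s per port. The sketch below performs that conversion and estimates an idealized transfer time; it ignores protocol and encoding overhead, so real throughput will be lower, and the 1 TB payload in the usage line is a hypothetical example, not a figure from Dell.

```python
# Convert a NIC line rate from gigabits/s to gigabytes/s and estimate an
# idealized transfer time. Protocol overhead is ignored (an assumption),
# so real-world throughput will be somewhat lower.
BITS_PER_BYTE = 8


def line_rate_gbytes(gbits_per_s: float) -> float:
    """Return the raw line rate in GB/s (decimal gigabytes)."""
    return gbits_per_s / BITS_PER_BYTE


def ideal_transfer_seconds(size_gb: float, gbits_per_s: float) -> float:
    """Seconds to move `size_gb` gigabytes at the full line rate."""
    return size_gb / line_rate_gbytes(gbits_per_s)


print(line_rate_gbytes(800))              # 100.0 (GB/s per port)
print(ideal_transfer_seconds(1000, 800))  # 10.0 (s for a hypothetical 1 TB payload)
```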
