Cisco’s Unified Computing System (UCS) is designed with the idea of unifying and simplifying the data center. Customers should be able to deploy servers quickly and with less overall infrastructure, all with unified management through UCS Manager. The Cisco UCS Mini takes the UCS blade server system, houses it in one 6U chassis, the Cisco UCS 5100, and optimizes it for remote and branch offices (ROBO). The Mini offers servers, storage, and 10 Gigabit networking in a very dense form factor.

The Mini supports several Cisco UCS B-Series Blade Servers, so customers can start with just one blade and move up to eight if need be. Each B-Series model serves specific needs: the B200 M4 serves the broadest set of workloads with more performance and versatility, the B200 M3 is aimed at most general workloads, the B420 M3 is aimed at virtualization and database workloads, and the B22 M3 is the more cost-effective Blade Server. Users can install a single blade, fill the chassis with eight of the same type, or mix and match to meet their needs. Each Mini comes with the Cisco UCS 6324 Fabric Interconnect and Cisco UCS Manager. Beyond the B-Series Blade Servers, one model of which we used for our testing, the Mini also supports some of Cisco’s C-Series Rack Servers.

While the Mini helps bring UCS from the data center to ROBO locations, it also has an interesting secondary use case. Once blade servers are no longer needed or have been replaced in the data center, companies can take those same blades and install them in a Mini at their ROBO sites. This can make for a more attractive TCO over the long run, since the investment continues to pay off after its original purpose has been served.

For our configuration we used eight B200 M4 Server Blades, giving us a good overall impression of how the Mini will perform. The blades themselves are also configurable, and each of the ones we used has 2 Intel Xeon E5-2660 v3 CPUs, 256GB of memory (16x 16GB DIMMs), 1x 1TB + 1x 480GB High Endurance SSD with a 12G SAS RAID Controller, and 2x 10Gbit UCS VIC 1340. The MSRP was $120,922, but this price will vary depending on customer configuration.

Cisco UCS Mini Specifications

- Chassis
  - Electrical power specifications: 100 – 120 Volts, 200 – 240 Volts
  - Minimum UCS Manager version: 3.0(1a)
  - Recommended UCS Manager version: 3.0(2c)
- Connectivity
  - Standard: 4 x Small Form Factor Pluggable Plus (SFP+) unified ports for 1 Gbps, 10 Gbps, or 2/4/8 Gbps Fibre Channel
  - Optional: QSFP+
  - 6324 Fabric Interconnect throughput: 500 Gbps
- Server Blades
  - B200 M4
    - Form factor: Half-width blade form factor
    - Processors: Either 1 or 2 Intel Xeon processor E5-2600 v3 product family CPUs
    - Chipset: Intel C610 series
    - Memory: Up to 24 double-data-rate 4 (DDR4) dual in-line memory modules (DIMMs) at 2400 and 2133 MHz speeds
    - Mezzanine adapter slots: 2
    - Hard drives: Two optional, hot-pluggable SAS or SATA hard disk drives (HDDs) or solid-state drives (SSDs)
    - Maximum internal storage: Up to 3.2 TB
  - B200 M3
    - Processors: Either 1 or 2 Intel Xeon processor E5-2600 or E5-2600 v2 product family CPUs
    - Chipset: Intel C600 series
    - Memory: 24 total slots for registered ECC DIMMs for up to 768 GB total memory capacity (B200 M3 configured with 2 CPUs using 32 GB DIMMs)
    - Mezzanine adapter slots: 2
    - Hard drives: Two optional, hot-pluggable SAS or SATA hard disk drives (HDDs) or solid-state drives (SSDs)
    - Maximum internal storage: Up to 1.2 TB
  - B420 M3
    - Form factor: Full-width blade form factor
    - Processors: Two or four Intel Xeon processor E5-4600 or E5-4600 v2 product family CPUs
    - Chipset: Intel C600 series
    - Memory: Up to 384 GB of RAM with 12 DIMM slots
    - Mezzanine adapter slots: 2
    - Hard drives: Two optional, hot-pluggable SAS or SATA hard disk drives (HDDs) or solid-state drives (SSDs)
  - B22 M3
    - Form factor: Half-width blade form factor
    - Processors: Up to two processors from the Intel Xeon E5-2400 and E5-2400 v2 product family
    - Chipset: Intel C600 series
    - Memory: Up to 384 GB of RAM with 12 DIMM slots
    - Mezzanine adapter slots: 2
    - Hard drives: Two optional, hot-pluggable SAS or SATA hard disk drives (HDDs) or solid-state drives (SSDs)
- Cisco UCS 6324 Fabric Interconnect
  - Form factor: I/O module for Cisco UCS 5108 Server Chassis
  - Number of 1- and 10-Gigabit Ethernet, FCoE, or Fibre Channel Enhanced Small Form-Factor Pluggable (SFP+) external ports: 4
  - Number of 40 Gigabit Ethernet or FCoE Enhanced Quad SFP (QSFP) ports: 1
  - Server ports: 16 x 10GBASE-KR lanes
  - Throughput: 500 Gbps
  - Latency: <1 µs
  - Quality-of-service (QoS) hardware queues: 16 (8 each for unicast and multicast)

Design and build

The Cisco UCS Mini chassis is 6U and offers a clean ash-gold appearance. The outer sheet metal casing of the chassis feels like it could survive some sort of environmental disaster. To say this is heavy and sturdy is an understatement. This is true of most dense compute and storage enclosures, which come down to lots of metal parts crammed into one small space.

The eight server blades take up the bulk of the front of the device. Along the bottom of the front are four power supplies. To remove a server blade, one only needs to loosen the thumbscrews and pull the handle out, and the blade slides right out after that. The power supplies come out in a similar fashion: loosen the thumbscrew and then pull the supply out by the blue handle on the front.

Fans, eight in total, dominate the rear of the device. To remove a fan, one simply needs to press down the button above the fan handle and pull the fan out (a side note: the fans do not add a significant amount of weight to the overall device, so removing them will not help lighten the load when attempting to rack the unit). The bottom of the unit has the other end of the power supplies running along it. On the left-hand side and in the middle of the device are the Fabric Interconnects, which can also be removed easily.

Cisco UCS Management Interface

The latest UCS Mini and version 3.1 of UCS Manager make great strides in the user interface of the system, moving from a Java environment to a full HTML5 implementation (save for the KVM launcher). For those who have used UCSM previously and want to continue using the Java environment, it still exists and can still be used. The new HTML5 version has gone to great lengths to keep a similar look and feel to the Java environment, though, and the transition is very simple.

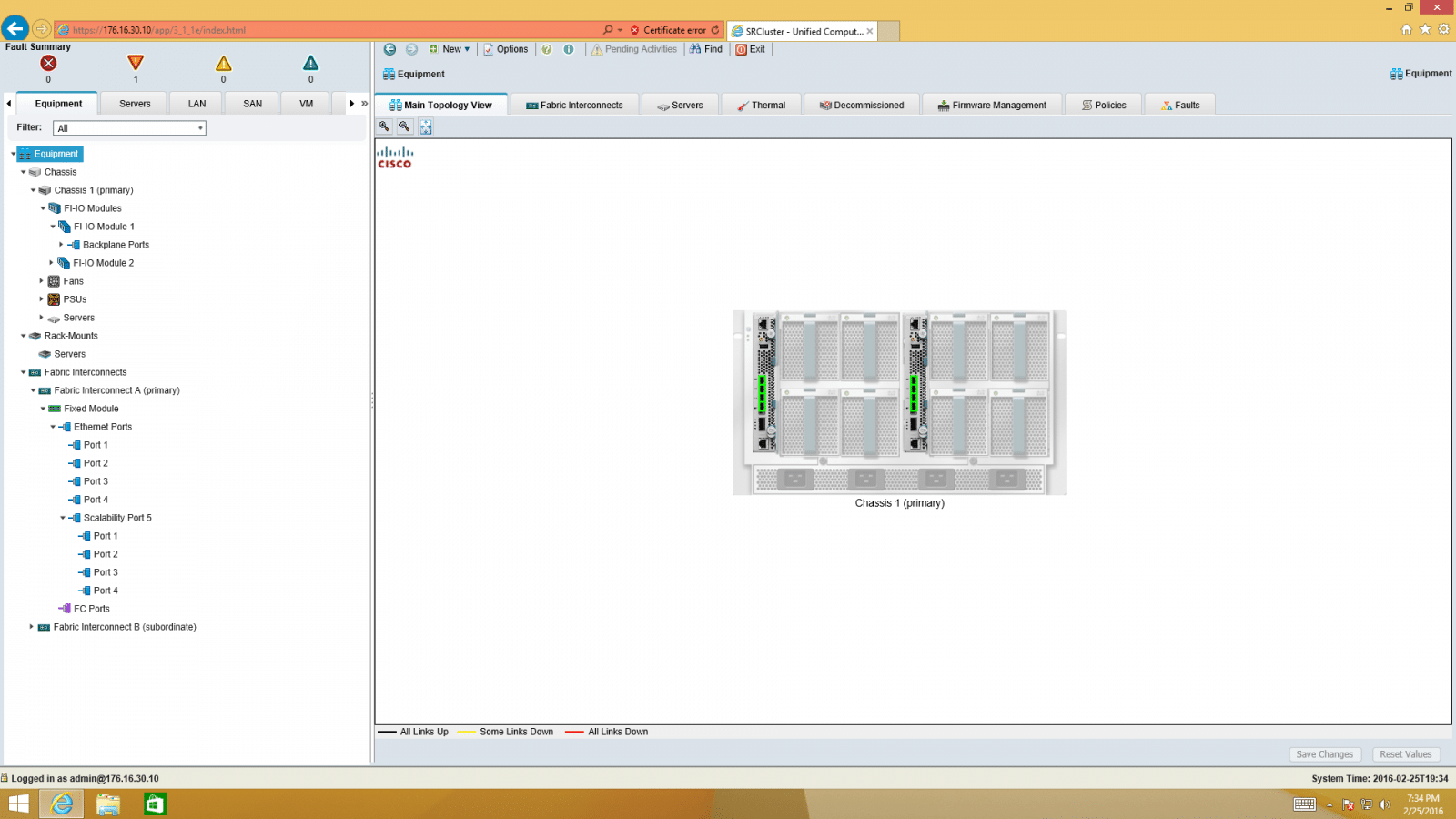

When you first log in to the UCS system, you are brought to a screen that has several tabs in the left-hand pane and several tabs in the right-hand pane. Initially it lands on the Equipment tab in the left-hand pane. This gives a general overview of the entire system and alerts you to any issues it detects in the infrastructure. There are tabs for Fabric Interconnects, Servers, Thermals, Decommissioned Equipment, Firmware Management, Policies, and Faults. This all looks fairly stock for a bladecenter. Once you’ve gone over the infrastructure, there are additional tabs that get into the intricacies of UCS.

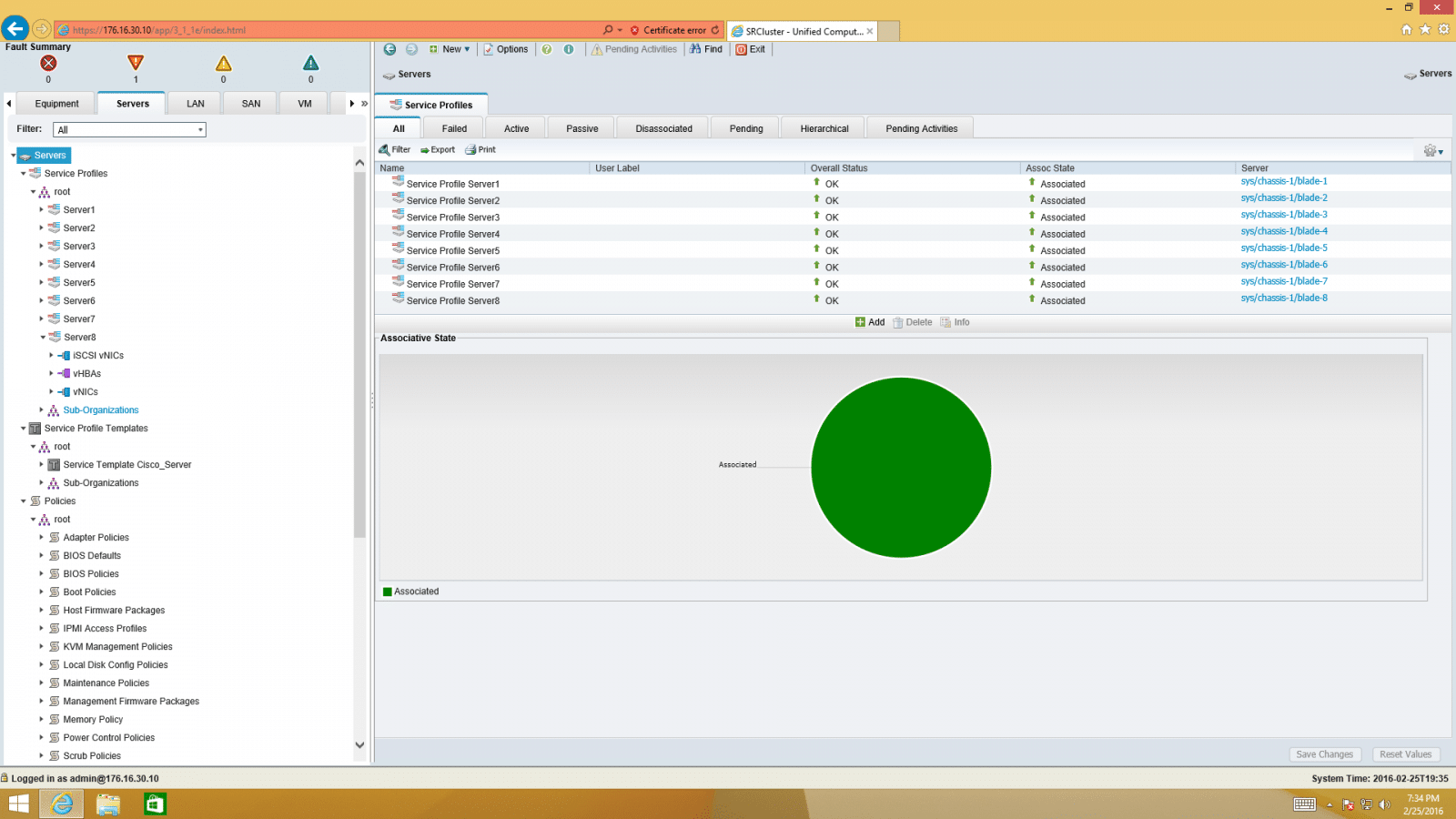

The next tab is the Servers tab. This tab is slightly misleading in that it doesn’t actually give you a view of the physical servers; those are all located in the Equipment tab. The Servers tab is where you build service profiles, policies, and some resource pools. Service profiles are the software definition of the settings for a server. The UUID of a server, the number of NICs it has, the VLANs it is connected to, the number of HBAs it has, the firmware version, the boot order, and the KVM settings are all part of this definition. This definition is decoupled from the actual physical blade and presented as a series of configuration options that can be applied to any blade in the chassis. This allows for great flexibility when replacing a failed server: simply replace the server and re-associate the service profile, and all of those settings are restored on the new piece of hardware. This also allows for easy provisioning with a “clone service profile template” feature. When deploying multiple systems that will have the same connectivity, cloning a service profile will automatically create multiple service profiles that are functionally identical to one another.
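To make the decoupling concrete, here is a minimal sketch of the idea in Python. It is not the UCS Manager API or its object model; `ServiceProfile`, `Chassis`, and every field name and value below are hypothetical stand-ins used only to show a server definition moving from one blade slot to another.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ServiceProfile:
    """Hypothetical stand-in for a service profile: settings only, no hardware."""
    name: str
    uuid: str
    vnic_vlans: Dict[str, List[int]]  # vNIC name -> VLAN IDs it carries
    vhba_count: int
    firmware: str
    boot_order: List[str]


@dataclass
class Chassis:
    """Tracks which profile is associated with which blade slot (1 to 8)."""
    associations: Dict[int, ServiceProfile] = field(default_factory=dict)

    def associate(self, slot: int, profile: ServiceProfile) -> None:
        if not 1 <= slot <= 8:
            raise ValueError("this sketch models eight half-width blade slots")
        # In this sketch a profile is bound to at most one blade at a time.
        for used_slot, bound in self.associations.items():
            if bound is profile and used_slot != slot:
                raise ValueError(
                    f"{profile.name} is already associated with slot {used_slot}"
                )
        self.associations[slot] = profile

    def disassociate(self, slot: int) -> Optional[ServiceProfile]:
        # Returns the profile that was bound to the slot, or None if it was empty.
        return self.associations.pop(slot, None)


# Example values are made up for illustration.
profile = ServiceProfile(
    name="esx-01",
    uuid="00000000-0000-0000-0000-000000000001",
    vnic_vlans={"eth0": [10, 20], "eth1": [30]},
    vhba_count=2,
    firmware="example-version",
    boot_order=["san", "lan"],
)

chassis = Chassis()
chassis.associate(3, profile)

# The blade in slot 3 fails: move the same definition to the blade in slot 5.
moved = chassis.disassociate(3)
chassis.associate(5, moved)
print(chassis.associations[5].name, chassis.associations[5].uuid)
```

The point of the sketch is that nothing in `ServiceProfile` refers to a physical blade, so the identity (UUID, vNICs, boot order) follows the profile rather than the hardware.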

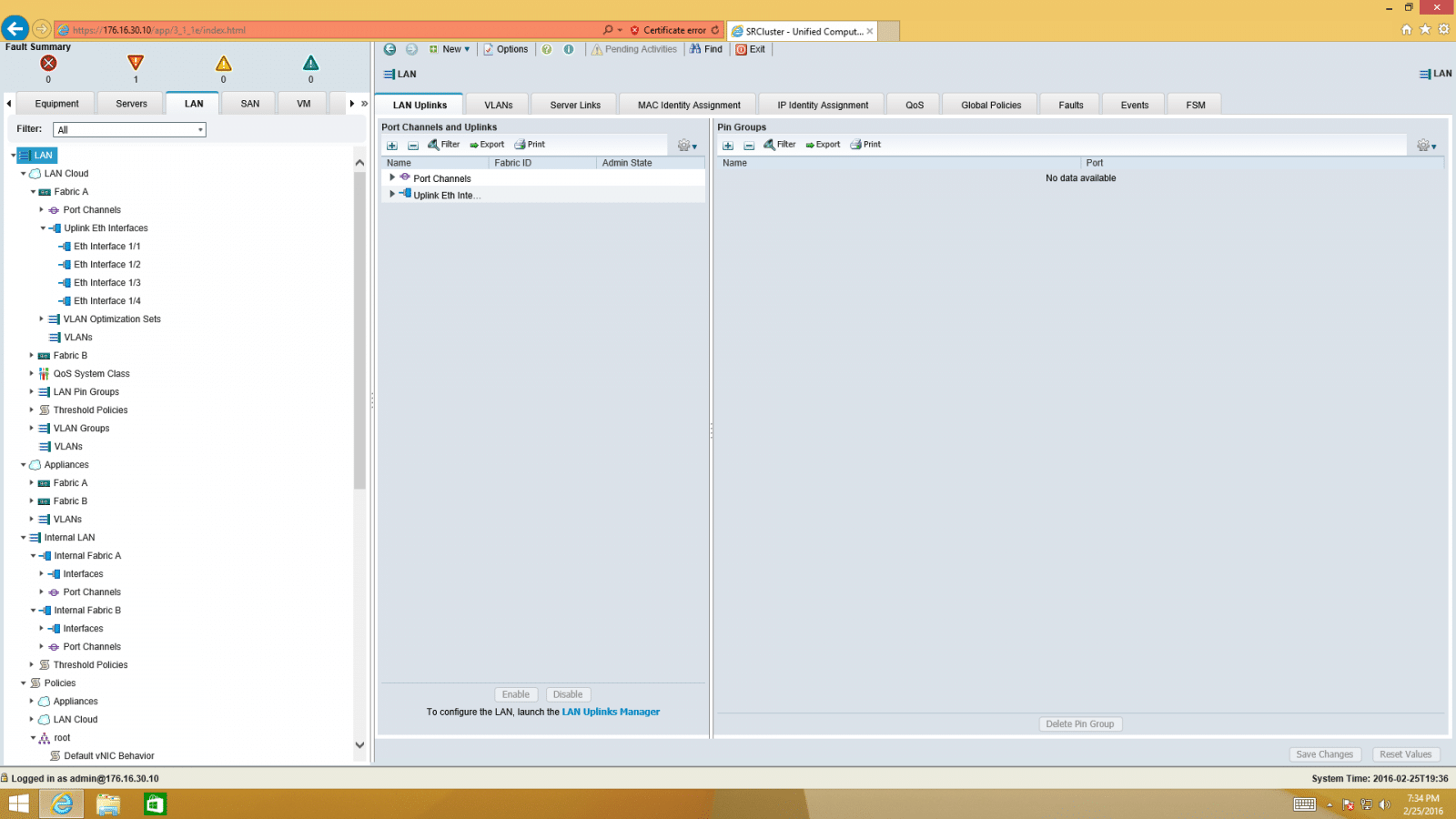

Following the Servers tab is the LAN tab. This tab is where things like VLANs, port channels, QoS, IP pools, and MAC address pools are managed. Managing IP pools and MAC address pools allows you to pre-define what your KVM IP addresses are, what your iSCSI initiator addresses are, and which MAC addresses will be assigned to which adapters. VLAN management allows you to create and modify VLANs and assign ownership of those VLANs. Also in the LAN tab are NetFlow and port monitoring capabilities, allowing for packet captures and diagnostics of any traffic that needs inspection.
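As a rough illustration of what a MAC address pool amounts to, the sketch below hands out consecutive addresses from a starting block. The function name `mac_pool`, the starting address, and the adapter names are assumptions made for the example; this is not how UCS Manager implements or exposes its pools.

```python
from typing import Iterator


def mac_pool(first: str, size: int) -> Iterator[str]:
    """Yield `size` consecutive MAC addresses starting at `first`."""
    start = int(first.replace(":", ""), 16)
    for offset in range(size):
        value = start + offset
        if value > 0xFFFFFFFFFFFF:
            raise ValueError("pool runs past the end of the 48-bit MAC space")
        raw = f"{value:012X}"
        # Re-insert the colons between each pair of hex digits.
        yield ":".join(raw[i:i + 2] for i in range(0, 12, 2))


# Example starting address and adapter names, chosen only for illustration.
pool = mac_pool("00:25:B5:00:00:00", size=4)
assignments = {f"blade{n}-eth0": next(pool) for n in range(1, 5)}

for adapter, mac in assignments.items():
    print(adapter, mac)
```

Pre-defining the block this way means the addresses are known before any blade is installed, which is what lets them be referenced in switch or storage configuration ahead of time.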

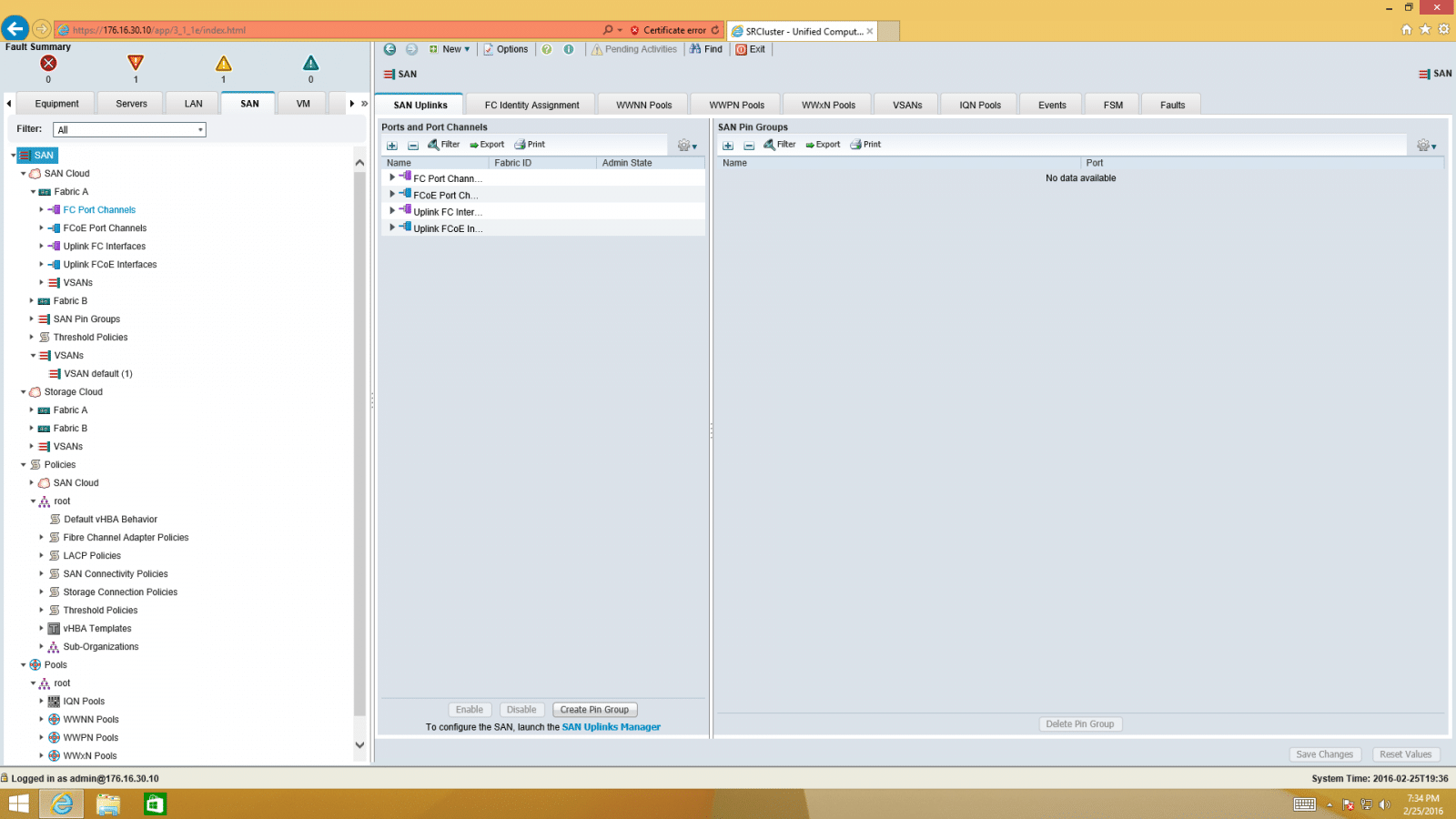

The SAN tab follows the LAN tab and contains all of the information that is germane to SAN configuration. WWN pools, WWPN pools, VSAN information and configuration, adapter queues, and vHBA templates are all things that you will find in the SAN section of UCS Manager. WWN pools and WWPN pools allow you to define the exact WWNs and WWPNs that you want to assign to your HBAs. Depending on which mode UCS is operating in, there will be additional zoning options in this section that turn the UCS infrastructure into a fully operational SAN switch, enabling full FC connectivity in and out to a SAN array.

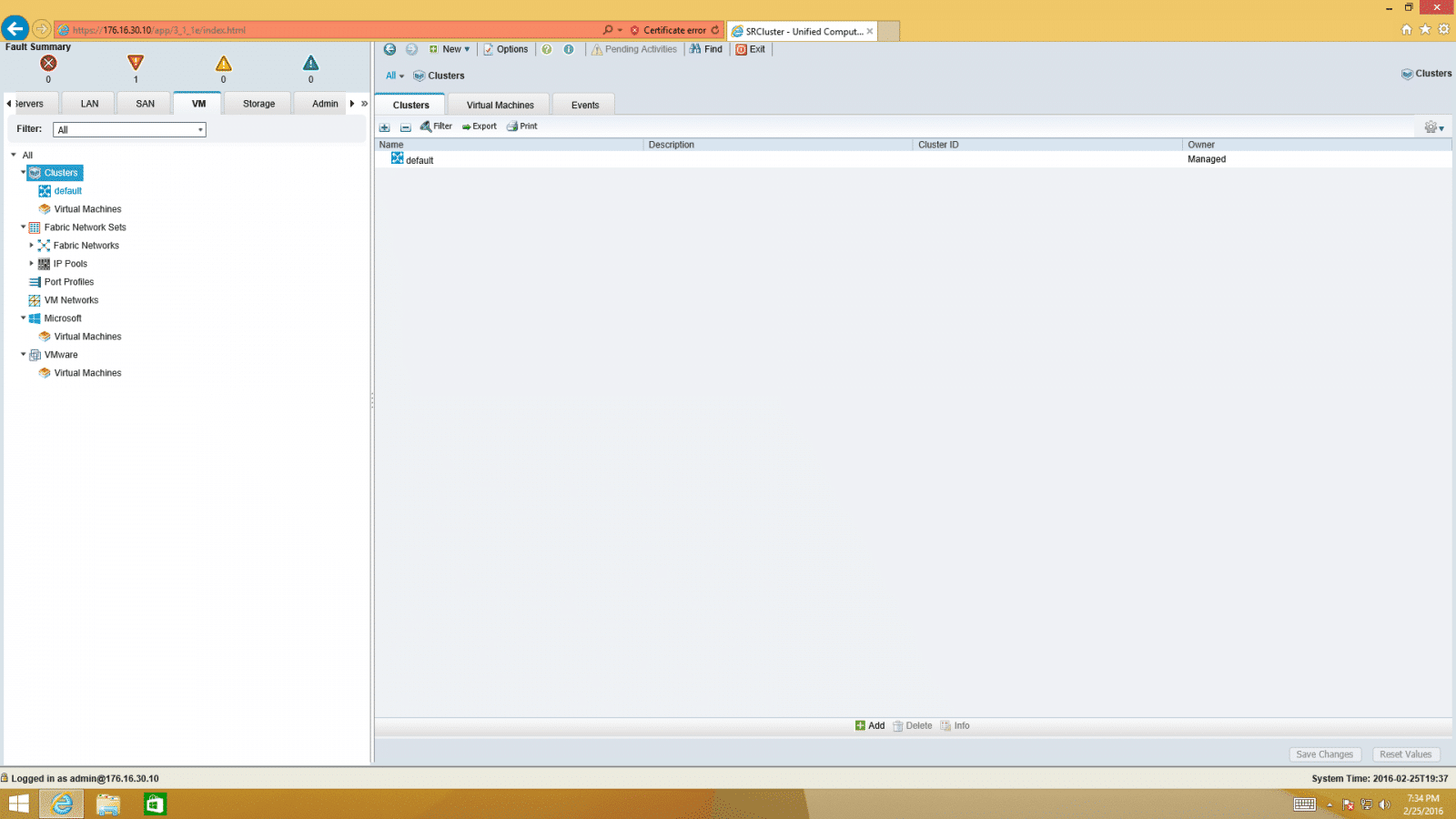

The next tab in UCSM is the VM tab. This tab provides integration with VMware or Hyper-V for additional management and visibility. Specifically, this is for VM-FEX integration, where a virtual adapter is created in the UCS interface and assigned directly to a VM. There are many possible uses for this that would exceed the scope of this brief overview.

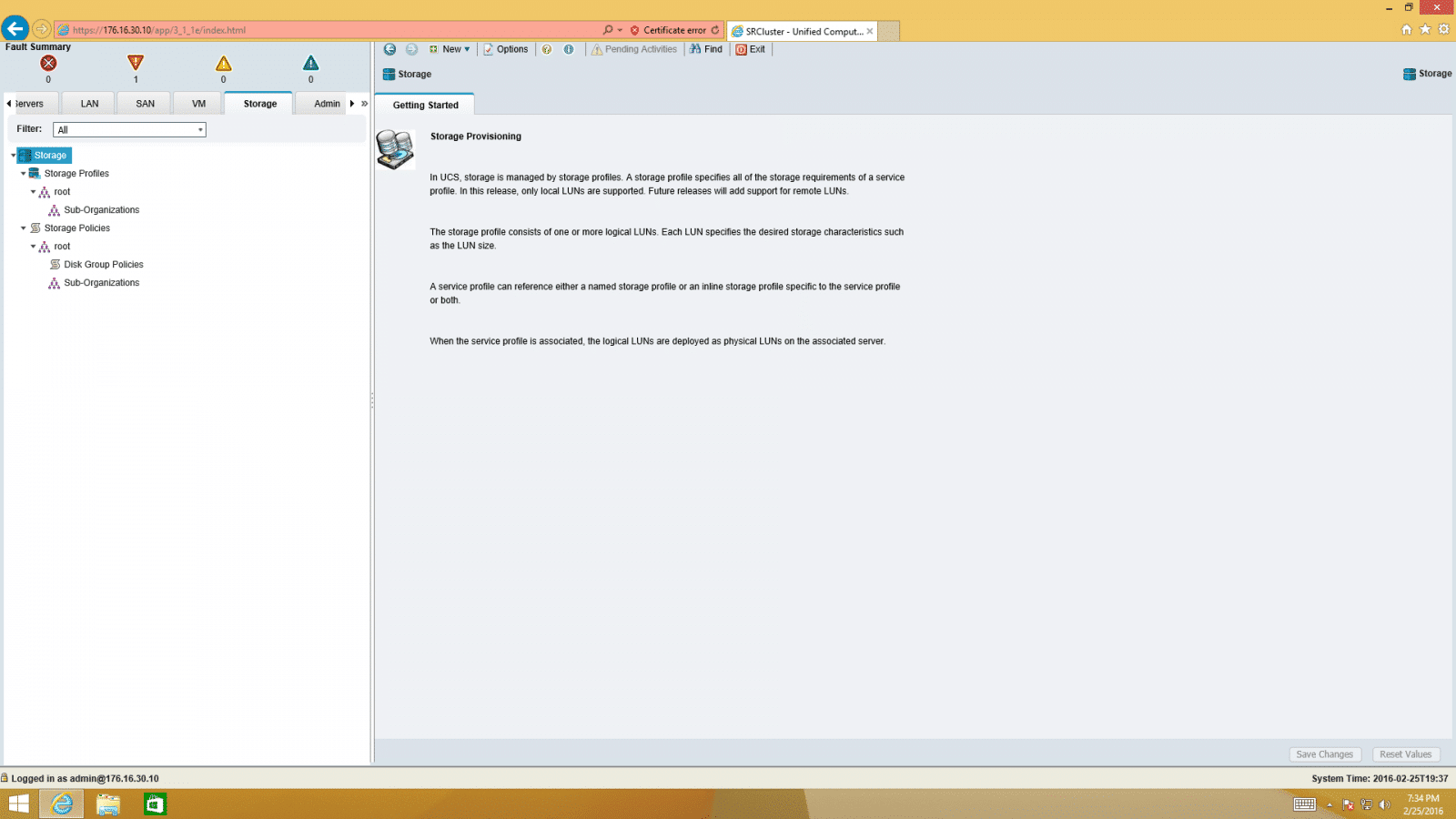

The next tab is the Storage tab. This tab sets local storage policies for blades. This is where you can set a policy for every disk to be mirrored, or striped, or that the disks have to be a minimum of 200GB to be useable. These profiles can be applied as part of a service profile without any external intervention and can be cloned for use on multiple blades.
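The kind of rule described above can be sketched as a small policy check. This is a generic illustration under stated assumptions, not UCS Manager's policy engine: `LocalDiskPolicy` and `usable_capacity_gb` are hypothetical names, and the capacity arithmetic is the textbook one (a mirror yields the size of the smallest member, a stripe yields the smallest member times the number of disks).

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class LocalDiskPolicy:
    """Hypothetical policy: a RAID mode plus a minimum acceptable disk size."""
    mode: str             # "mirror" or "stripe"
    min_disk_gb: int = 0


def usable_capacity_gb(policy: LocalDiskPolicy, disk_sizes_gb: List[int]) -> int:
    """Return the usable capacity, or raise if the disks cannot satisfy the policy."""
    eligible = [size for size in disk_sizes_gb if size >= policy.min_disk_gb]
    if len(eligible) < 2:
        raise ValueError("policy needs at least two disks that meet the minimum size")
    smallest = min(eligible)
    if policy.mode == "mirror":
        return smallest                  # every disk holds a full copy
    if policy.mode == "stripe":
        return smallest * len(eligible)  # data is spread across all disks
    raise ValueError(f"unknown mode: {policy.mode}")


# Disk sizes roughly matching the blades in this review (1TB and 480GB).
disks = [1000, 480]
print(usable_capacity_gb(LocalDiskPolicy("mirror", min_disk_gb=200), disks))
print(usable_capacity_gb(LocalDiskPolicy("stripe", min_disk_gb=200), disks))
```

Because the policy is just data, the same definition can be attached to any number of blades, which mirrors how the profiles are cloned in the text.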

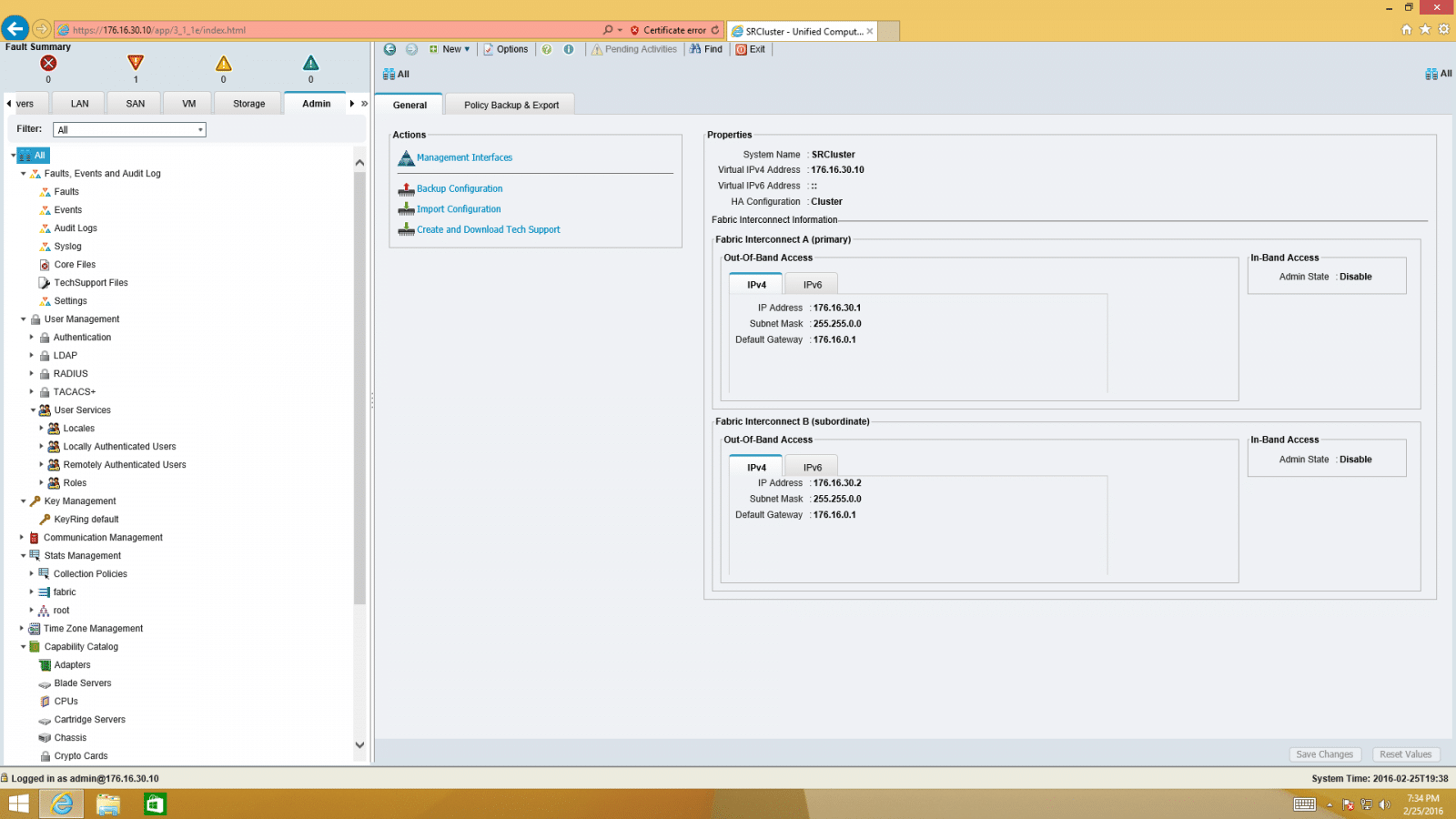

The final tab is the Admin tab, which encompasses a large number of configuration and monitoring sub-sections. From this tab you can view things such as the faults and events going on in your system (power events, link events, failover, capacity issues, configurations, login attempts, etc.). You can configure users and roles (admin, network, storage, read-only, operations, etc.). You can configure RADIUS/TACACS+/LDAP integration to tie all users back to a centralized authentication system. This section also handles call home capabilities, integration into UCS Central, capability catalogs, and license management for the UCS system.

Overall, the UCS Manager interface incorporates SAN, LAN, bladecenter, and KVM features into one large single pane of glass. It can be daunting to navigate some of it due to the sheer depth and breadth of the system's capabilities. The interdependencies of the system can make misconfiguration very easy if users are not careful about what they are doing. When deployed properly and integrated with a central management system like UCS Central, it can make management of the system as part of a larger enterprise environment much simpler and more streamlined.

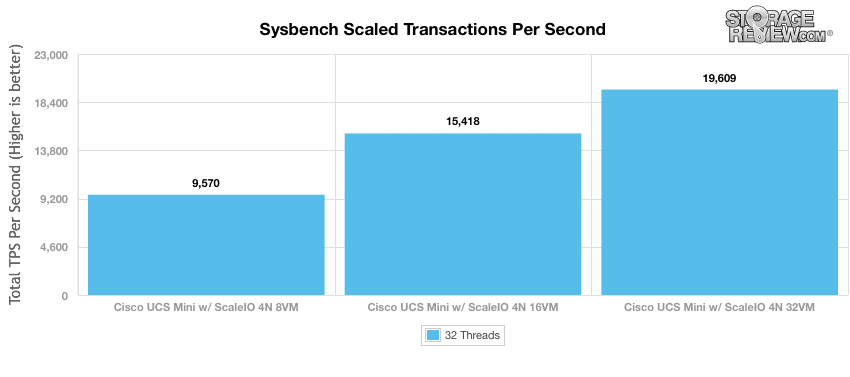

Sysbench Performance

When looking at UCS Mini performance, we decided to pair it with a known entity, the EMC VxRack Node powered by ScaleIO, putting the all-flash array to work in a 2-layer SAN configuration. This testing, however, is a little bit different. In the VxRack Node review we tested Sysbench starting with four compute nodes, then scaled up to eight one server at a time until we hit the limits of what the Node could do. In running the exact same test profile on the UCS Mini, all eight blades were used from the start, which improves the numbers somewhat. We also used fewer steps for this review, but the scalability the combination enables is pretty clear from the results, which run the storage to nearly full capacity.

Each Sysbench VM is configured with three vDisks: one for boot (~92GB), one with the pre-built database (~447GB), and a third for the database under test (270GB). In previous tests we allocated 400GB to the database volume (253GB database size), but to pack additional VMs onto the VxRack Node we shrank that allocation to make more room. From a system resource perspective, we configured each VM with 16 vCPUs and 60GB of DRAM, and leveraged the LSI Logic SAS SCSI controller. The load generation systems are the UCS Mini blades; we ran 1, 2, or 4 VMs on each of the 8 server blades.
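The resource footprint of each test step follows directly from these figures. The short Python sketch below simply does that arithmetic, using the per-VM sizes above and the 256GB of memory per blade from our configuration; the vDisk sizes for boot and the pre-built database are the approximate values quoted, so the storage totals are approximate as well.

```python
BLADES = 8
BLADE_MEMORY_GB = 256   # per blade, from the test configuration
VM_MEMORY_GB = 60
VM_VCPUS = 16
# vDisk sizes per VM in GB (boot and pre-built database are approximate).
VDISKS_GB = {"boot": 92, "prebuilt_db": 447, "db_under_test": 270}


def footprint(vms_per_blade: int) -> dict:
    """Resources allocated across the chassis for a given VM density."""
    total_vms = BLADES * vms_per_blade
    return {
        "total_vms": total_vms,
        "vm_memory_per_blade_gb": vms_per_blade * VM_MEMORY_GB,
        "vcpus_per_blade": vms_per_blade * VM_VCPUS,
        "vdisk_capacity_gb": total_vms * sum(VDISKS_GB.values()),
    }


for vms_per_blade in (1, 2, 4):
    print(vms_per_blade, footprint(vms_per_blade))
```

At four VMs per blade, the VMs are allocated 240GB of each blade's 256GB of memory, which is why the test stops at 32 VMs on the compute side.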

Sysbench Testing Configuration (per VM)

- CentOS 6.3 64-bit

- Storage Footprint: 1TB, 800GB used

- Percona XtraDB 5.5.30-rel30.1

- Database Tables: 100

- Database Size: 10,000,000

- Database Threads: 32

- RAM Buffer: 24GB

- Test Length: 3 hours

- 2 hours preconditioning 32 threads

- 1 hour 32 threads

Our Sysbench test measures average TPS (Transactions Per Second), average latency, as well as average 99th percentile latency at a peak load of 32 threads. Looking at scaled transactions per second, the Mini was able to hit 9,570 TPS using 8VMs. Doubling the VMs to 16 we saw the performance jump to 15,418 TPS. Doubling the VMs once again to 32 we see the performance bump up to 19,609 TPS.
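To put the scaling in per-VM terms, the sketch below divides the reported totals by the VM count and compares each step to the 8-VM baseline. Only the three TPS figures come from our results; the variable names are ours and the "relative" metric is just one simple way to express scaling efficiency.

```python
# Total TPS reported above, keyed by the number of VMs running.
tps_by_vms = {8: 9570, 16: 15418, 32: 19609}

baseline_per_vm = tps_by_vms[8] / 8

per_vm = {vms: tps / vms for vms, tps in tps_by_vms.items()}
relative = {vms: rate / baseline_per_vm for vms, rate in per_vm.items()}

for vms in tps_by_vms:
    print(
        f"{vms:>2} VMs: {tps_by_vms[vms]:>6} TPS total, "
        f"{per_vm[vms]:7.1f} TPS per VM, "
        f"{relative[vms]:.0%} of the 8-VM per-VM rate"
    )
```

By this measure, total throughput roughly doubles between 8 and 32 VMs while each VM delivers about half of what it did at the 8-VM step.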

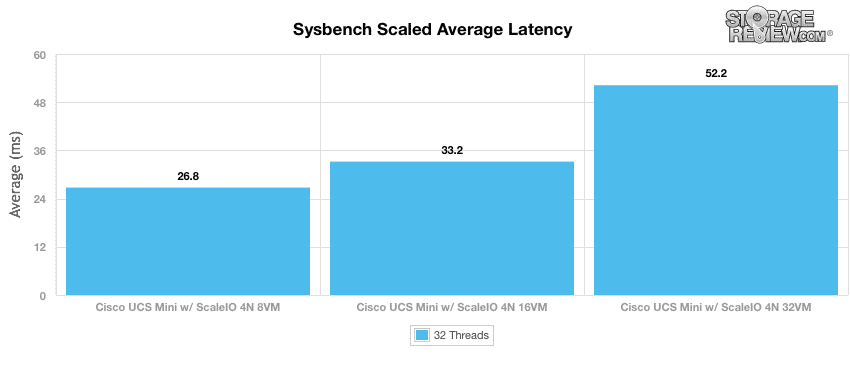

Looking at scaled average latency, the Mini was able to hit 26.8ms with 8VMs. When we doubled the VMs to 16 the latency only went up 6.4ms to 33.2ms. Doubling the VMs once again to 32 the latency only went up 19ms to 52.2ms.

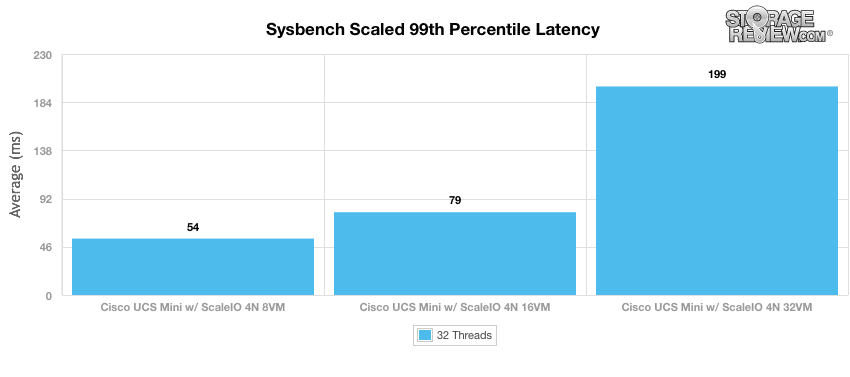

In terms of our worst-case MySQL latency scenario (99th percentile latency), the Mini had a latency as low as 54ms with 8VMs. With twice as many VMs, 16, latency only jumped up to 79ms. But when we doubled the VMs once more to 32 the latency shot up to 199ms.
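The same comparison can be made for latency. This sketch takes the average and 99th percentile figures reported above and computes how much each grows relative to the 8-VM step, along with the ratio of tail latency to average latency; the helper name `growth` is ours.

```python
# Latency in milliseconds, keyed by the number of VMs running.
average_ms = {8: 26.8, 16: 33.2, 32: 52.2}
p99_ms = {8: 54, 16: 79, 32: 199}


def growth(series: dict, baseline: int = 8) -> dict:
    """Each value expressed as a multiple of the baseline step."""
    base = series[baseline]
    return {vms: value / base for vms, value in series.items()}


avg_growth = growth(average_ms)
p99_growth = growth(p99_ms)
tail_ratio = {vms: p99_ms[vms] / average_ms[vms] for vms in average_ms}

for vms in average_ms:
    print(
        f"{vms:>2} VMs: average x{avg_growth[vms]:.2f}, "
        f"99th percentile x{p99_growth[vms]:.2f}, "
        f"p99/average = {tail_ratio[vms]:.2f}"
    )
```

Going from 8 to 32 VMs, average latency stays just under double while the 99th percentile grows to roughly 3.7 times its starting value, so the tail widens much faster than the average.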

Conclusion

The Cisco UCS Mini brings Cisco’s Unified Computing System out of the data center and into ROBO sites. It allows up to 8 blade servers to be installed, mixed and matched, in a single, compact (yet heavy) 6U form factor. All of the servers are controlled through a single point of management, Cisco UCS Manager. The Mini also enables older Cisco blade servers that are retired from the data center to be installed at ROBO sites where the fastest and best server may not be required.

Looking at performance, we ran the Sysbench MySQL application benchmark on the Cisco UCS Mini. For our Sysbench test we ran either 1, 2, or 4 VMs on each server blade, for a total of 8, 16, or 32 VMs in the benchmark. We looked at transactions per second, scaled average latency, and scaled worst-case MySQL latency (99th percentile latency). The Mini had TPS as high as 19,609 with 32VMs. More impressive were the Mini’s scaled latency results, running as low as 26.8ms at 8VMs; when the number of VMs was bumped up to 32, latency only hit 52.2ms, less than double at four times the VMs. Looking at worst-case latency, the Mini fared better with either 8 or 16VMs but jumped significantly at 32VMs.

Pros

- Fits four full-width or eight half-width servers in a single chassis (manages up to 15 total)

- Single point of management for all servers

- Older server blades can be used from the data center at the ROBO site

Cons

- Not the lightest device to install

The Bottom Line

The Cisco UCS Mini gives ROBO sites 8 servers in a single 6U chassis, all managed through Cisco UCS Manager. With the Mini, Cisco is bringing its Unified Computing System out of the data center.
