By Peter Velikin, VP Online Marketing, VeloBit, Inc.

Many IT managers have heard about the benefits of adding SSDs to their existing systems to improve IO speed and application performance: low power, high IO bandwidth, and low latency. They have also heard about the drawbacks, such as high cost, device wear, and disruption to how data is organized. Still, SSDs are here to stay, and the list of vendors and products grows every day. The easiest way to take advantage of SSDs is to deploy them as cache. However, even something that sounds this simple can waste this valuable resource if the data flow to the SSD is not managed well. Enter SSD caching software, which seamlessly manages the data flow to the SSD to maximize the ROI of this expensive technology.

Why cache application data in the first place?

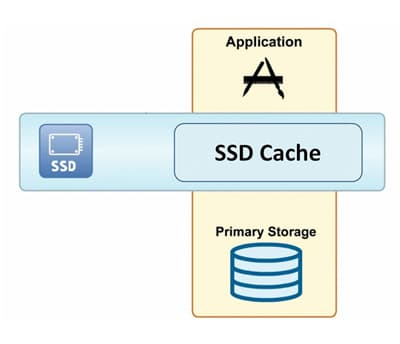

Simply put, caches are used to speed up access to data. A cache is a component placed in front of a primary device to transparently store data so that future requests for that data can be served faster. As data passes through a cache on its way to or from the primary device, some of it is selectively stored in the cache. When an application or process later accesses data stored in the cache (a cache hit), the request is served from the fast cache instead of the slower primary device. The more requests that can be served from cache, the faster the overall system performs.
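To make the hit/miss mechanics concrete, here is a minimal Python sketch of a read-through cache. The class name `ReadThroughCache` and the use of plain dictionaries to stand in for the SSD and the primary device are illustrative assumptions, not any product's design. Unlike a real cache, this sketch has unlimited capacity and keeps every block it reads.

```python
class ReadThroughCache:
    """Hypothetical minimal cache in front of a slower primary store (both modeled as dicts)."""

    def __init__(self, primary):
        self.primary = primary   # stand-in for the slow primary device
        self.cache = {}          # stand-in for the fast cache device (unbounded here)
        self.hits = 0
        self.misses = 0

    def read(self, block):
        if block in self.cache:          # cache hit: served from the fast device
            self.hits += 1
            return self.cache[block]
        self.misses += 1                 # cache miss: fetch from the primary device
        data = self.primary[block]
        self.cache[block] = data         # keep a copy for future requests
        return data

    def hit_rate(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


primary = {n: f"block-{n}" for n in range(100)}
cache = ReadThroughCache(primary)
for block in [1, 2, 1, 3, 1, 2]:
    cache.read(block)
print(cache.hits, cache.misses, cache.hit_rate())  # 3 3 0.5
```

On this six-request trace, the first read of each block misses and every repeat is a hit, so half the requests are served from the cache.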

There is a trade-off between cache cost and performance. Larger caches yield a higher cache hit rate and therefore better performance. Unfortunately, the hardware used for cache is generally more expensive per gigabyte than the hardware used for the primary device, so cache design is a balance between cache size (and therefore cost) and performance.
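A common first-order way to quantify this trade-off is to weight each device's latency by how often it serves a request: average latency = h × t_cache + (1 - h) × t_primary, where h is the hit rate. The sketch below assumes hypothetical latencies of 100 µs for an SSD read and 5 ms for a disk read, and it ignores cache lookup overhead and queuing.

```python
def average_latency_us(hit_rate, cache_latency_us, primary_latency_us):
    """First-order model: hits cost the cache latency, misses cost the primary latency."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return hit_rate * cache_latency_us + (1.0 - hit_rate) * primary_latency_us


# Hypothetical latencies: 100 us for an SSD read, 5,000 us (5 ms) for a disk read.
for h in (0.5, 0.8, 0.9, 0.99):
    print(f"hit rate {h:.2f}: {average_latency_us(h, 100, 5000):.0f} us")
```

Under these assumptions, raising the hit rate from 90% to 99% cuts average latency from about 590 µs to about 149 µs, because misses to the slow device dominate the average. That is why the extra cost of a larger cache can be worth it.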

Why use SSDs as cache?

As mentioned above, SSDs have several features that make them well suited for caching. Above all, they deliver very fast IO, which is the primary goal of caching data in the first place. Using SSDs as primary storage is expensive, and simply installing them in an existing system poses challenges because most applications were not written with SSDs in mind. Data tiering software was supposed to solve problems like this, but it ended up causing more headaches and expense. So, if you are going to invest in SSDs, keep it simple and deploy them as cache.

What is hard about using SSDs as cache?

Well, if you got this far in the article, you can handle the truth: SSDs are not perfect. They have issues rooted in the physics of the flash memory they are built from. First, SSDs have asymmetric read/write performance: read operations are much faster than write operations. Of course, data has to be written to them at some point, but writing must be carefully managed. Second, SSDs wear out. Flash memory cells tolerate only a limited number of write (program/erase) cycles before they fail, so writing to the SSD must be carefully managed. Third, SSDs exhibit a phenomenon called “write amplification”: because flash must be erased in large blocks before it can be rewritten, a single write to the SSD may trigger several additional internal writes as existing data is relocated to make room for the new data. This slows performance and shortens the life of the SSD. Here we go again: writing to the SSD must be carefully managed.
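Write amplification is usually expressed as a factor: data physically written to flash divided by data written by the host. A rough endurance estimate then divides the drive's total write budget (capacity × program/erase cycles) by the daily flash writes. The sketch below assumes ideal wear leveling, and the drive figures (200 GB, 3,000 cycles, 500 GB of host writes per day) are hypothetical.

```python
def write_amplification(nand_gb_written, host_gb_written):
    """Write amplification factor: physical flash writes per logical host write."""
    return nand_gb_written / host_gb_written


def estimated_lifetime_days(capacity_gb, pe_cycles, host_gb_per_day, waf):
    """First-order endurance estimate: total flash write budget / daily flash writes.

    Assumes wear leveling spreads writes evenly across all cells.
    """
    total_write_budget_gb = capacity_gb * pe_cycles
    return total_write_budget_gb / (host_gb_per_day * waf)


# Hypothetical drive: 200 GB, 3,000 program/erase cycles, 500 GB of host writes per day.
waf = write_amplification(nand_gb_written=3_000, host_gb_written=1_000)
print(waf)                                           # 3.0
print(estimated_lifetime_days(200, 3000, 500, 1.0))  # 1200.0
print(estimated_lifetime_days(200, 3000, 500, waf))  # 400.0
```

In this model, a write amplification factor of 3 cuts the drive's estimated life to one third, which is why reducing both host writes and amplification matters.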

How to “carefully manage writing” to the SSD

Deploying SSDs as cache is good. Unlimited writing to SSDs is bad. What can be done about this? Well, Business 101 provided the answer: an SSD caching software industry emerged. Smart people saw the pros of SSDs and then figured out how to minimize the cons by developing software to (you guessed it) carefully manage writing data to SSDs. SSD caching software has three main objectives:

- Transparently manage the SSD cache so the application software does not need to be modified

- Determine what data is a good candidate to store in SSDs and what data should be sent to primary storage

- Minimize writes to the SSD to reduce write amplification and wear
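One way caching software could act on the last two objectives is an admission policy. The sketch below is hypothetical: the class name, the `admit_after` threshold, and the write-around behavior are illustrative assumptions, not VeloBit's or any other vendor's algorithm. Application writes bypass the SSD, and a block is copied to the SSD only after it has missed `admit_after` times, so data that is read once (for example, during a backup scan) never costs a flash write. Because this logic sits below the application's read and write calls, the application itself does not change.

```python
from collections import OrderedDict, defaultdict


class AdmissionControlledCache:
    """Hypothetical SSD cache that limits flash writes with two rules:
    - write-around: application writes go to primary storage, not the SSD;
    - read admission: a block is copied to the SSD only after `admit_after` misses.
    """

    def __init__(self, capacity_blocks, admit_after=2):
        self.capacity = capacity_blocks
        self.admit_after = admit_after
        self.ssd = OrderedDict()             # cached blocks, least recently used first
        self.miss_counts = defaultdict(int)  # misses seen per block not yet admitted
        self.hits = 0
        self.ssd_writes = 0                  # each admission is one write to flash

    def read(self, block):
        if block in self.ssd:
            self.ssd.move_to_end(block)      # mark as most recently used
            self.hits += 1
            return True
        self.miss_counts[block] += 1
        if self.miss_counts[block] >= self.admit_after:
            if len(self.ssd) >= self.capacity:
                self.ssd.popitem(last=False)  # evict the least recently used block
            self.ssd[block] = True
            self.ssd_writes += 1
            del self.miss_counts[block]
        return False

    def write(self, block):
        # Write-around: the data goes to primary storage; drop any stale SSD copy.
        self.ssd.pop(block, None)


def run(admit_after):
    cache = AdmissionControlledCache(capacity_blocks=4, admit_after=admit_after)
    # Hot blocks 1 and 2, then a one-time scan of blocks 10-19, then the hot blocks again.
    trace = [1, 2] * 5 + list(range(10, 20)) + [1, 2] * 5
    for block in trace:
        cache.read(block)
    return cache.hits, cache.ssd_writes


print(run(admit_after=1))  # (16, 14)
print(run(admit_after=2))  # (16, 2)
```

On this 30-read trace both settings serve 16 reads from the SSD, but requiring a second miss cuts SSD writes from 14 to 2 because the one-time scan is never admitted and never evicts the hot blocks.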

Common types of SSD caching

Since an SSD cache’s size is typically only a fraction of the overall data set, not all application data will fit in the cache. A cache is most effective if it holds data that is most likely to be accessed in the future. The goal of cache algorithm design is to somehow “predict” which data will be accessed so that an optimal subset of the data is held in cache. The better the ability to predict future data access, the higher the cache hit rate and the better the application performance.

Three common types of SSD caching algorithms are:

- Temporal locality caching, which stores data based on how recently it was used

- Spatial locality caching, which stores data based on its physical storage location, for example by also caching blocks adjacent to recently accessed ones

- Content locality caching, which stores the data blocks that are most frequently used and referenced
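The first two approaches can be sketched in a few lines of Python: a least-recently-used (LRU) cache for temporal locality, and an LRU cache that also prefetches the next few adjacent blocks on a miss for spatial locality. Block numbers stand in for physical locations, and the class names and the `prefetch` parameter are hypothetical; the capacity should be larger than `prefetch` so a prefetch cannot evict the block just read.

```python
from collections import OrderedDict


class LRUCache:
    """Temporal locality: keep the most recently used blocks, evict the least recent."""

    def __init__(self, capacity_blocks):
        self.capacity = capacity_blocks
        self.blocks = OrderedDict()  # least recently used first
        self.hits = 0
        self.misses = 0

    def _insert(self, block):
        if block in self.blocks:
            self.blocks.move_to_end(block)     # already cached: refresh recency
            return
        if len(self.blocks) >= self.capacity:
            self.blocks.popitem(last=False)    # evict the least recently used block
        self.blocks[block] = True

    def read(self, block):
        if block in self.blocks:
            self.hits += 1
            self.blocks.move_to_end(block)
        else:
            self.misses += 1
            self._insert(block)


class PrefetchingLRUCache(LRUCache):
    """Spatial locality: on a miss, also load the next `prefetch` adjacent blocks."""

    def __init__(self, capacity_blocks, prefetch=3):
        super().__init__(capacity_blocks)
        self.prefetch = prefetch

    def read(self, block):
        was_miss = block not in self.blocks
        super().read(block)
        if was_miss:
            for neighbor in range(block + 1, block + 1 + self.prefetch):
                self._insert(neighbor)


scan = list(range(16))  # sequential read of 16 adjacent blocks
lru, prefetching = LRUCache(8), PrefetchingLRUCache(8, prefetch=3)
for block in scan:
    lru.read(block)
    prefetching.read(block)
print(lru.hits, lru.misses)                  # 0 16
print(prefetching.hits, prefetching.misses)  # 12 4
```

On a sequential scan, plain LRU misses every request, while the prefetching variant misses only every fourth one. Note that every prefetched block is also a write to the SSD, whether or not it is ever read, which is exactly the kind of write that needs careful management.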

Some caching algorithms are generic, while newer ones, such as content locality caching, are designed specifically for SSDs. When selecting an SSD caching software solution, investigate whether the caching algorithm suits the access pattern of the data to be cached (so that you get higher cache hit rates and better performance) and whether the software carefully manages writes to the SSD (so that you get better SSD performance and reliability). And, of course, benchmark each solution: you are deploying SSDs to boost performance, so the better the price/performance, the higher the ROI.
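A simple way to start such a benchmark is to replay a block-access trace through a cache model and record hit rate and SSD writes at several cache sizes. The sketch below uses a synthetic skewed workload (80% of requests going to 20% of the blocks) whose parameters are hypothetical; in practice you would replay a trace captured from your own system, and you would compare the real products rather than this LRU model.

```python
import random
from collections import OrderedDict


def synthetic_trace(n_requests, n_blocks, hot_fraction=0.2, hot_share=0.8, seed=42):
    """Hypothetical skewed workload: `hot_share` of requests go to the first
    `hot_fraction` of blocks; the rest are spread over the remaining blocks."""
    rng = random.Random(seed)
    n_hot = max(1, int(n_blocks * hot_fraction))
    trace = []
    for _ in range(n_requests):
        if rng.random() < hot_share:
            trace.append(rng.randrange(n_hot))
        else:
            trace.append(rng.randrange(n_hot, n_blocks))
    return trace


def replay_lru(trace, capacity_blocks):
    """Replay a block trace through an LRU cache; return (hit rate, SSD writes)."""
    cache = OrderedDict()
    hits = ssd_writes = 0
    for block in trace:
        if block in cache:
            hits += 1
            cache.move_to_end(block)
        else:
            if len(cache) >= capacity_blocks:
                cache.popitem(last=False)   # evict the least recently used block
            cache[block] = True
            ssd_writes += 1                 # every admission writes the block to flash
    return hits / len(trace), ssd_writes


trace = synthetic_trace(n_requests=50_000, n_blocks=1_000)
for capacity in (50, 100, 200, 400):
    hit_rate, writes = replay_lru(trace, capacity)
    print(f"capacity {capacity:4d} blocks: hit rate {hit_rate:.1%}, SSD writes {writes}")
```

Pairing each cache size with its price turns these numbers into the price/performance comparison that determines ROI.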

Peter is Vice President of Online Marketing at VeloBit, Inc., responsible for all marketing. He has 12 years of experience creating new markets and commercializing products in multiple high-tech industries. Prior to VeloBit, he was VP Marketing at Zmags, a SaaS-based digital content platform for e-commerce and mobile devices, where he managed all aspects of marketing, product management, and business development. Before that, Peter was Director of Product and Market Strategy at PTC, and he held roles in product management, business development, and engineering program management at EMC Corporation. Peter has an M.S. in Electrical Engineering from Boston University and an MBA from Harvard Business School.