By George Teixeira, CEO and President, DataCore Software

In 2012, the phrase “big data” is on everyone’s lips, just as virtualization was five years ago. And as with virtualization and cloud computing, a lot of hype is obscuring the more important discussions about IT challenges, the skill sets needed and how to actually build an infrastructure that can support effective big data technologies.

When people waxed poetic about the wonders of virtualization, they failed to recognize the new performance demands and enormous increase in storage capacity required to support server and desktop initiatives. The need for higher performance and continuous availability came at a high price, and clearly, the budget-busting impact subsequently delayed or scuttled many projects.

These problems are now being solved through the adoption of storage virtualization that harnesses industry innovations in performance, drives greater commoditization of expensive equipment, and automates storage management with new technologies like enterprise-wide auto-tiering software.

That same dynamic is again at work, only this time with big data.

Business intelligence firms would have you believe that you simply slap their analytics platforms on top of a Hadoop framework and BINGO – you have a big data application. The reality is, Hadoop production deployments are practically non-existent in large enterprises. There are simply not many people who understand and can take advantage of these new technologies. In addition, the ecosystem of supporting technologies and methodologies is still too immature, which means the industry is going through a painful process of trial and error, just as it did with virtualization five years ago. Again, underestimating storage requirements is emerging as a major barrier to adoption, as the age-old practice of “throwing hardware at the problem” often creates untenable cost and complexity.

To put it bluntly, big data does not simply require big storage – it requires big smart storage. Unfortunately, the industry is threatening to repeat major mistakes of the past!

Before I turn to the technology, let me point out the biggest challenge being faced as we enter the world of big data – we need to develop the people who will drive and implement useful big data systems. A recent Forbes article indicated that our schools have not kept pace with the educational demands brought about by big data. I think the following passage sums up the problem well: “We are facing a huge deficit in people to not only handle big data, but more importantly to have the knowledge and skills to generate value from data — dealing with the non-stop tsunami. How do you aggregate and filter data, how do you present the data, how do you analyze them to gain insights, how do you use the insights to aid decision-making, and then how do you integrate this from an industry point of view into your business process?”

Now, back to the product technologies that will make a difference – especially those related to managing storage for this new paradigm.

As we all know, there has been an explosion in the growth of data, and traditional approaches to scaling storage and processing have begun to reach computational, operational and economic limits. A new tack is needed to intelligently manage and meet the performance and availability needs of rapidly growing data sets. Just a few short years ago, it was practically unheard of for organizations to be talking about scaling beyond several hundred terabytes; now discussions deal with several petabytes. Also in the past, companies chose to archive a large percentage of their content on tape. However, in today’s on-demand, social-media-centric world, that’s no longer feasible. Users now demand instantaneous access and will not tolerate delays to restore data from slow or remote tape vaults.

Instant gratification is the word-of-the-day and performance is critical.

New storage systems must automatically and non-disruptively migrate data from one generation of a system to another to effectively address long-term archiving. Also adding to this problem is the need to distribute storage among multiple data centers, either for disaster recovery or to place content closer to the requesting users in order to keep latency at a minimum and improve response times.

This is especially important for the performance of applications critical to the day-to-day running of a business, such as databases, enterprise resource planning and other transaction-oriented workloads. In addition to the data these resource-heavy applications produce, IT departments must deal with the massive scale of new media such as Facebook and YouTube. Traditional storage systems are not well suited to solve these problems, leaving IT architects with little choice but to attempt to develop solutions on their own or suffer with complex, low-performing systems.

Traditional storage systems struggle in big data and cloud environments because they were designed to be deployed on larger and larger storage arrays, typically in a single location. These high-end proprietary systems have come at a high price in capital and operational costs, as well as in lost agility and vendor lock-in. They provide inadequate mechanisms to create a common, centrally managed resource pool. This leaves an IT architect to solve the problem with a multitude of individual storage systems, usually composed of varied brands, makes and models, each requiring a different approach to replication across sites and some form of custom management.

With this in mind, there are four big issues to address:

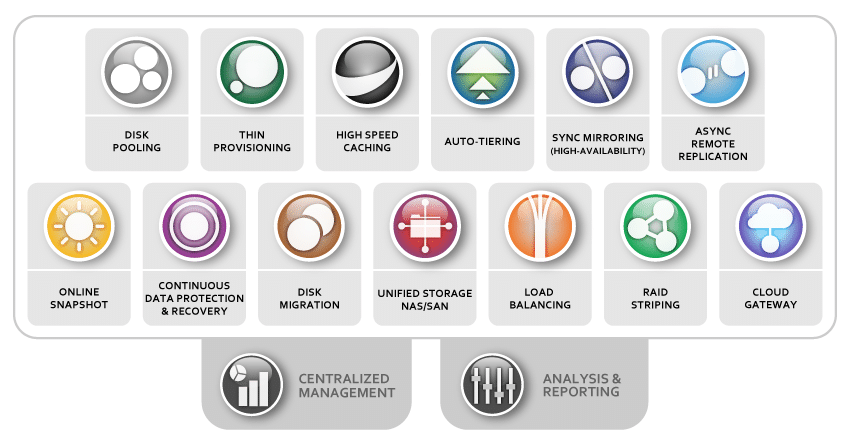

- Virtual Resource Management and Flexibility: It takes smart software to transition the current landscape of monolithic, independent solutions into a virtual storage resource pool that incorporates and harnesses the large variety of storage hardware and devices available today.

- High Capital and Operational Costs: To reduce operational costs, achieving greater productivity through automation and centralized management is essential. Just as importantly, giving companies more ability to commoditize hardware and make it interchangeable is critical to cost-effective storage scalability. No one can afford “big bucks” to throw more expensive “big box” solutions at a big data world. Hypervisors such as VMware and Microsoft Hyper-V have opened up purchasing power for users. Today, the use of a Dell, HP, IBM or Intel server has become largely a matter of company preference. This same approach is required for storage and has driven the need for storage hypervisors.

- Scalability Demands Performance and Continuous Availability: As data storage grows and more applications, users and systems need to be serviced, the requirements for higher performance and availability rise in parallel. It is no longer about how much can be contained in a large box, but about how we harness new technologies to make storage faster and more responsive. Likewise, no matter how reliable any single storage box may be, it does not compare to many systems working together over different locations to ensure the highest level of business continuity, regardless of the underlying hardware.

- Dynamic Versus Static Storage: It is also obvious that we can no longer keep up manually with optimizing enterprise data for the best performance and cost trade-offs. Software automation is now critical. Technologies such as thin provisioning that span multiple storage devices are necessary for greater efficiency and better storage asset utilization. Powerful enterprise-wide capabilities that work across a diversity of storage classes and device types are becoming a must-have. Automated storage tiering is required to migrate data from one class of storage to another. As a result, sophisticated storage hypervisors are critical, regardless of whether the data and I/O traffic are best migrated from high-speed dynamic RAM, solid-state drives, disks or even cloud storage providers.
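To make the idea of automated tiering a little more concrete, here is a minimal sketch in Python. It is not how SANsymphony-V or any other product works. The `Tier` class, the `assign_tiers` function, the tier names and the access counts are all hypothetical, and the policy (rank blocks by recent access count, then fill the fastest tier first) is just one simple assumption.

```python
from dataclasses import dataclass


@dataclass
class Tier:
    """A hypothetical storage class, e.g. RAM cache, SSD or disk."""
    name: str
    capacity_blocks: int  # how many blocks this tier can hold


def assign_tiers(access_counts, tiers):
    """Place the most frequently accessed blocks on the fastest tiers.

    access_counts: dict mapping block id -> recent access count
    tiers: list of Tier objects, ordered fastest first
    Returns a dict mapping block id -> tier name.
    """
    # Hottest blocks first; ties are broken by block id so the result is stable.
    ranked = sorted(access_counts, key=lambda b: (-access_counts[b], b))

    placement = {}
    start = 0
    for tier in tiers:
        # Fill this tier with the next-hottest blocks, up to its capacity.
        for block in ranked[start:start + tier.capacity_blocks]:
            placement[block] = tier.name
        start += tier.capacity_blocks

    if start < len(ranked):
        raise ValueError("not enough capacity for all blocks")
    return placement


# Illustrative data only: five blocks and three tiers, fastest first.
tiers = [Tier("ram", 1), Tier("ssd", 2), Tier("disk", 10)]
counts = {"a": 5, "b": 900, "c": 40, "d": 0, "e": 41}
placement = assign_tiers(counts, tiers)
```

In this toy run the single hottest block lands in RAM, the next two go to solid-state drives and the rest stay on disk. A production system would presumably also weigh cost, migration overhead and availability, none of which this sketch attempts.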

Intensive applications now need a storage system that can start small, yet scale easily to many petabytes. It must be one that serves content rapidly and handles the growing workload of thousands of users each day. It must also be exceedingly easy to use, automate as much as possible and require little specialized expertise to deploy or tune. Perhaps most importantly, it must change the paradigm of storage from being cemented to a single array or location to being a dispersed, yet centrally managed system that can actively store, retrieve and distribute content anywhere it is needed. The good news is that storage hypervisors like DataCore’s SANsymphony-V deliver an easy-to-use, scalable solution for the IT architect faced with large amounts of data running through a modern-day company.

Yes, big data offers big potential. Let’s just make sure we get storage right this time to avoid repeating the big mistakes of the past.

George Teixeira is CEO and president of DataCore Software. The company’s software-as-infrastructure platform solves the big problem stalling virtualization initiatives by eliminating storage-related barriers that make virtualization too difficult and too expensive. You can contact the author at [email protected].