Today WekaIO and Destiny Corporation announced that they have published a reference design capable of delivering 5X improvements in runtime and wall-clock time for long-running SAS analytics jobs. The new solution is aimed not only at performance but is also said to provide good storage economics. It is available both on-premises and on AWS.

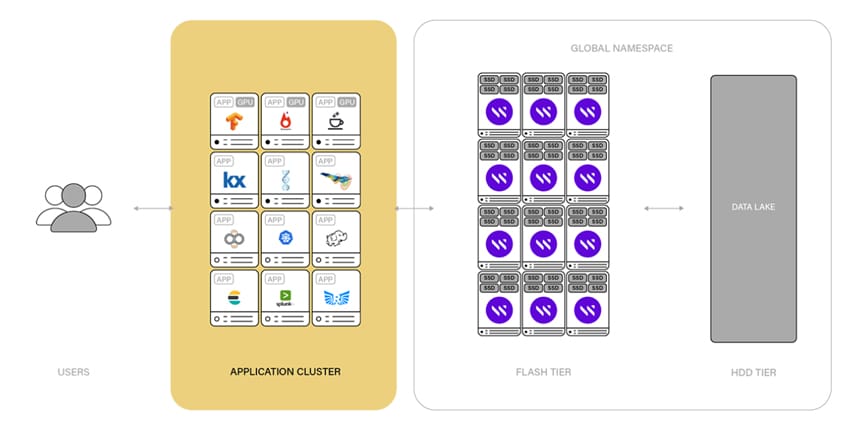

The two companies are a good fit to create this new solution. Weka is all about high-performance, scalable file storage. Destiny is a business and technology consultancy specializing in SAS analytics and is a SAS Institute gold partner. (SAS is an integrated software suite for advanced and predictive analytics, business intelligence, and data management.) The two are working together to tackle a known SAS bottleneck: SAS solutions can take advantage of multi-core CPUs, but they are hamstrung by access to volumes or by storage I/O responsiveness. As a result, SAS Grid and SAS Viya application servers remain vastly underutilized when operating across large data sets, because they spend their time waiting for data to be served from the storage system.

Weka describes WekaFS as the world’s fastest parallel file system. To get the most out of SAS application servers, they would connect over InfiniBand or 100Gb Ethernet to a storage system running WekaFS. This shared storage system needs at least eight storage nodes with local NVMe but can scale to hundreds of nodes. According to the companies, the starting reference design can scale from one SAS client running 32 concurrent sessions to 8 clients running 256 concurrent sessions. WekaFS measured up to 106GB/sec of mixed read/write bandwidth while needing only 320 SAS cores to achieve this performance. The new reference design should maximize utilization of the SAS servers without waiting on storage I/O or wasting expensive compute cycles.
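
To put the quoted figures in perspective, here is a rough back-of-the-envelope calculation in Python. It uses only the numbers stated by the companies; the variable names are ours, and the per-client figure assumes (the announcement does not say so explicitly) that the 106GB/sec peak was measured on the full 8-client configuration.

```python
# Figures quoted in the announcement
PEAK_BANDWIDTH_GB_S = 106   # mixed read/write bandwidth, GB/sec
SAS_CORES = 320             # SAS cores used to reach that bandwidth
MAX_CLIENTS = 8             # SAS clients at the top of the scaling range
MAX_SESSIONS = 256          # concurrent sessions at the top of the range

# Sessions handled by each SAS client at full scale
sessions_per_client = MAX_SESSIONS // MAX_CLIENTS

# Average bandwidth each SAS core would be consuming at the quoted peak
bandwidth_per_core = PEAK_BANDWIDTH_GB_S / SAS_CORES

# Assumption: the peak figure corresponds to the 8-client configuration
bandwidth_per_client = PEAK_BANDWIDTH_GB_S / MAX_CLIENTS

print(f"Sessions per client:  {sessions_per_client}")
print(f"Bandwidth per core:   {bandwidth_per_core:.2f} GB/sec")
print(f"Bandwidth per client: {bandwidth_per_client:.2f} GB/sec")
```

Under those assumptions, each client carries 32 sessions (matching the single-client starting point), and each SAS core is fed roughly a third of a gigabyte per second on average.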
