Synology has a nice line of NAS devices that are great for SOHO and SMBs. The only drawback is that midrange NAS aren’t typically built with maximum performance and network speed in mind. Thanks to a new add-in card, now they don’t have to be. With the introduction of the Synology E10M20-T1 AIC, users can get much higher I/O and bandwidth with one card.

For bandwidth, the Synology E10M20-T1 comes with a 10GbE port, drastically upping the network throughput of a NAS that only has GbE onboard. On top of the enhanced network performance, the card comes with two NVMe SSD slots for M.2 drives (2280 and 22110 form factors). This gives users higher I/O performance and adds two NVMe slots for SSD cache without giving up two drive bays. That way users can load up their NAS with high-capacity HDDs and still have an SSD cache. This card is also particularly useful in some of Synology’s older NAS systems that don’t have onboard SSD slots.

The Synology E10M20-T1 can be picked up today for $250. For those who prefer, we have a video walkthrough of the card as well.

Synology E10M20-T1 Specifications

| General | |
| --- | --- |
| Host Bus Interface | PCIe 3.0 x8 |
| Bracket Height | Low Profile and Full Height |
| Size (Height x Width x Depth) | 71.75 mm x 200.05 mm x 17.70 mm |
| Operating Temperature | 0°C to 40°C (32°F to 104°F) |
| Storage Temperature | -20°C to 60°C (-5°F to 140°F) |
| Relative Humidity | 5% to 95% RH |
| Warranty | 5 Years |

| Storage | |
| --- | --- |
| Storage Interface | PCIe NVMe |
| Supported Form Factor | 22110 / 2280 |
| Connector Type and Quantity | M-key, 2 Slots |

| Network | |
| --- | --- |
| IEEE Specification Compliance | IEEE 802.3an 10Gbps Ethernet; IEEE 802.3bz 2.5Gbps / 5Gbps Ethernet; IEEE 802.3ab Gigabit Ethernet; IEEE 802.3u Fast Ethernet; IEEE 802.3x Flow Control |
| Data Transfer Rates | 10 Gbps |
| Network Operation Mode | Full Duplex |
| Supported Features | 9 KB Jumbo Frame; TCP/UDP/IP Checksum Offloading; Auto-negotiation between 100Mb/s, 1Gb/s, 2.5Gb/s, 5Gb/s, and 10Gb/s |

| Compatibility | |
| --- | --- |
| Applied Models NVMe SSD | SA series: SA3600, SA3400; 20 series: RS820RP+, RS820+; 19 series: DS2419+, DS1819+; 18 series: RS2818RP+, DS3018xs, DS1618+ |

Design and Build

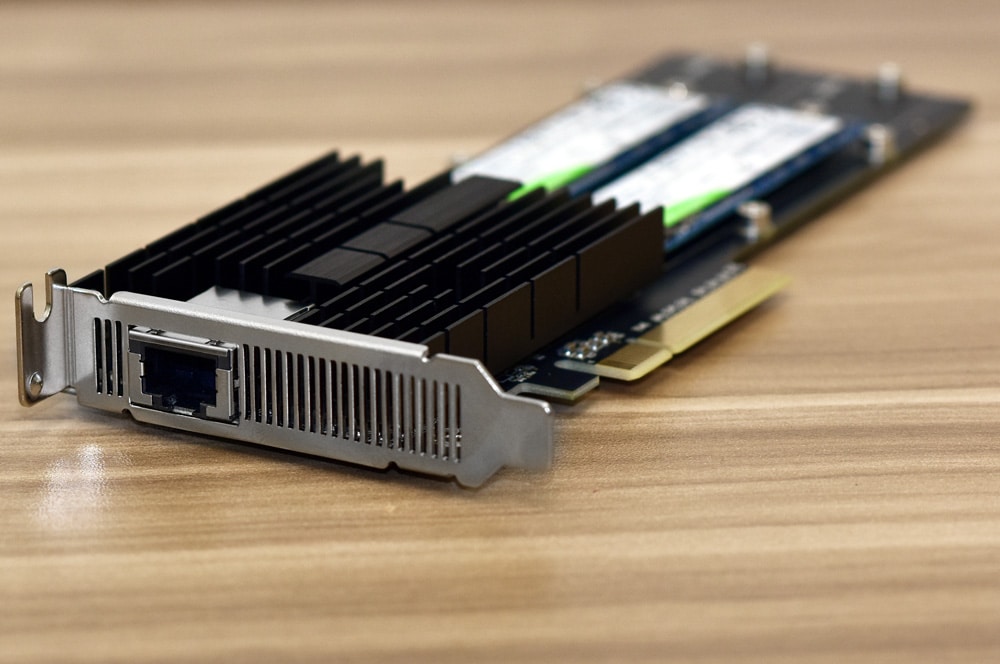

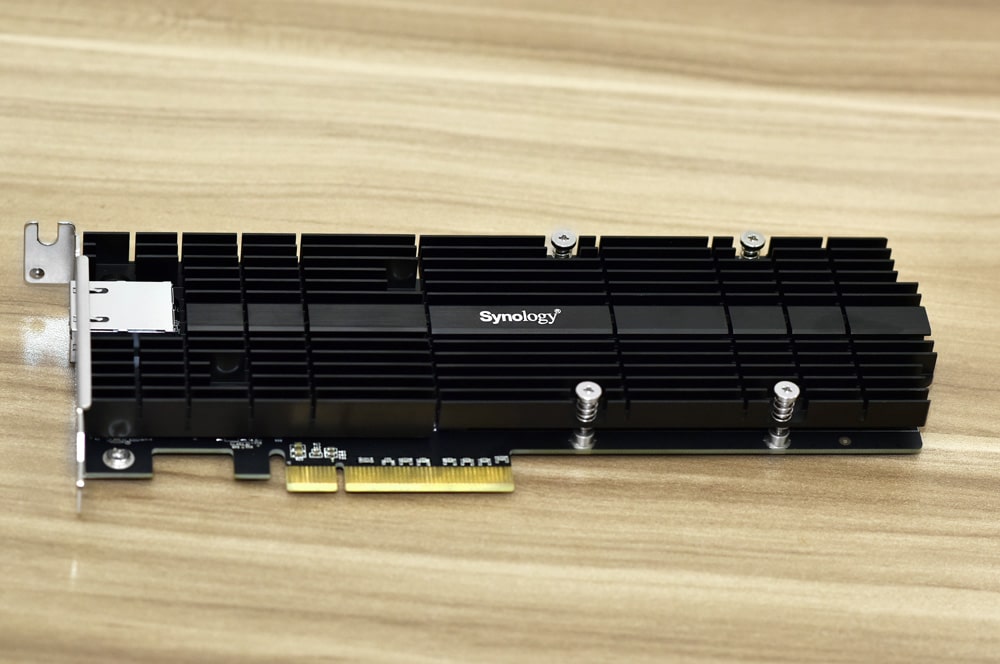

The Synology E10M20-T1 is an HHFL AIC that will fit in select models of Synology NAS. On one side are heatsinks that run the length of the card.

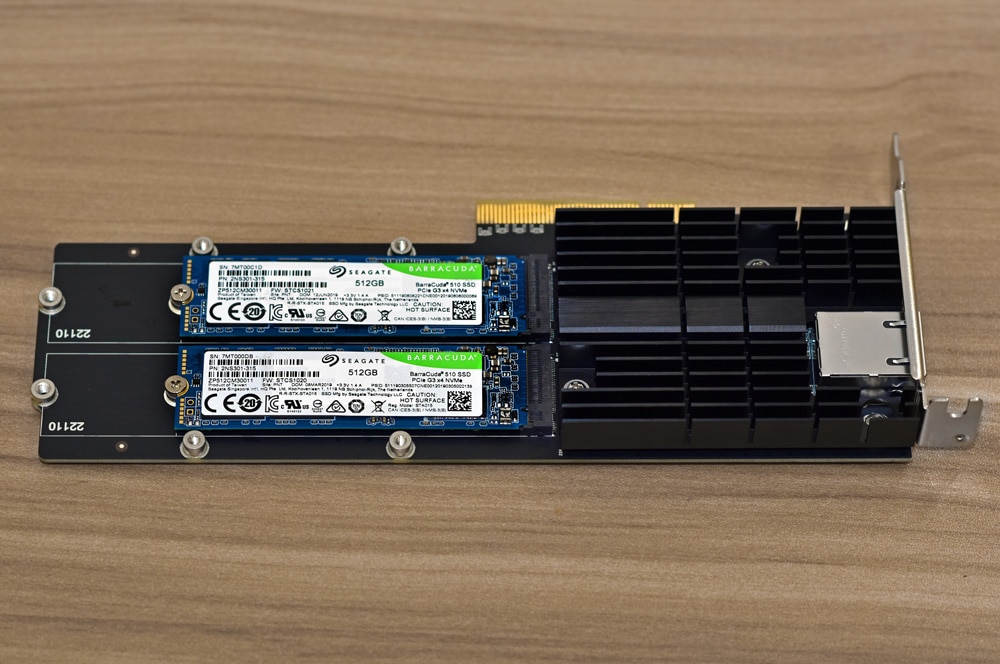

Removing the heatsink with four screws at the rear gives one access to the two M.2 NVMe SSD bays. Overall it is easy to secure drives on the card.

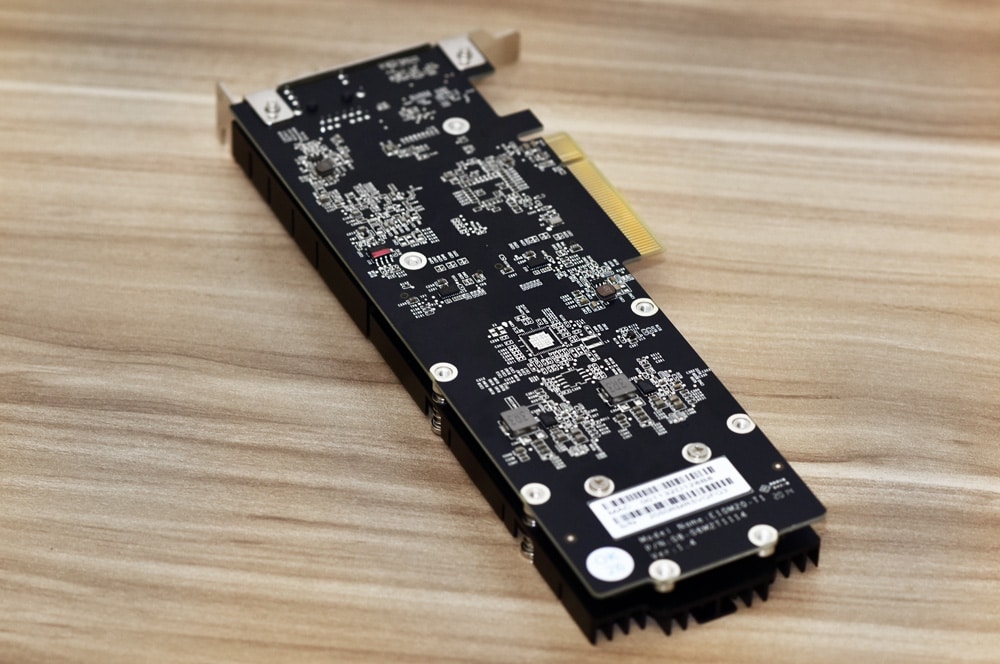

The back of the card is relatively spartan. Synology also includes a full-size bracket in the box should that be required, along with some thermal contact pads for the SSDs.

Performance

To test the Synology E10M20-T1 we inserted it in a Synology DS1819+. We filled the HDD bays with WD Red 14TB HDDs. For the cache, we used Synology’s SNV3400-400G SSDs. We tested the drives in both iSCSI and CIFS configuration in RAID6 with the cache off and on.

Enterprise Synthetic Workload Analysis

Our enterprise hard-drive benchmark process preconditions each drive into steady-state with the same workload the device will be tested with under a heavy load of 16 threads with an outstanding queue of 16 per thread. It is then tested in set intervals in multiple thread/queue depth profiles to show performance under light and heavy usage. Since hard drives reach their rated performance level very quickly, we only graph out the main sections of each test.

Preconditioning and Primary Steady-State Tests:

- Throughput (Read+Write IOPS Aggregate)

- Average Latency (Read+Write Latency Averaged Together)

- Max Latency (Peak Read or Write Latency)

- Latency Standard Deviation (Read+Write Standard Deviation Averaged Together)

Our Enterprise Synthetic Workload Analysis includes four profiles based on real-world tasks. These profiles have been developed to make it easier to compare to our past benchmarks as well as widely-published values such as max 4K read and write speed and 8K 70/30, which is commonly used for enterprise drives.

- 4K

  - 100% Read or 100% Write

  - 100% 4K

- 8K 70/30

  - 70% Read, 30% Write

  - 100% 8K

- 128K (Sequential)

  - 100% Read or 100% Write

  - 100% 128K
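As a rough illustration, the profiles listed above can be written down as plain data. The Python sketch below is not taken from any benchmark tool; the `Profile` class, its field names, and the `outstanding_ios` helper are hypothetical names chosen for this example. It also works out how many I/Os are in flight under the 16-thread, 16-queue load used for preconditioning.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Profile:
    """One workload profile from the list above (hypothetical structure)."""
    name: str
    block_size_kib: int
    # Read percentages exercised in separate runs: (100, 0) means one
    # 100% read run and one 100% write run; (70,) means a single 70/30 mix.
    read_pct_runs: Tuple[int, ...]
    # True only where the list explicitly marks the profile as sequential.
    marked_sequential: bool = False


PROFILES = [
    Profile("4K", 4, (100, 0)),
    Profile("8K 70/30", 8, (70,)),
    Profile("128K", 128, (100, 0), marked_sequential=True),
]


def outstanding_ios(threads: int, queue_depth: int) -> int:
    """Total I/Os in flight when each thread keeps queue_depth requests queued."""
    return threads * queue_depth


if __name__ == "__main__":
    for p in PROFILES:
        mixes = ", ".join(f"{r}% read / {100 - r}% write" for r in p.read_pct_runs)
        print(f"{p.name}: {p.block_size_kib} KiB blocks; runs: {mixes}")
    print("Heavy load:", outstanding_ios(16, 16), "outstanding I/Os")
```

Keeping the read mix as a tuple of separate runs mirrors how the 4K and 128K profiles are reported as distinct read and write results, while 8K 70/30 is a single mixed run.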

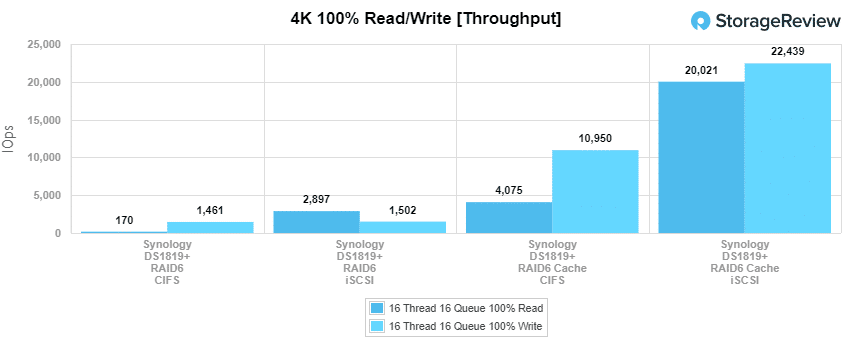

In the first of our enterprise workloads, we measured a long sample of random 4K performance with 100% write and 100% read activity to get our main results. For CIFS we saw 170 IOPS read and 1,461 IOPS write without cache and 4,075 IOPS read and 10,950 IOPS write with the cache active. For iSCSI, we saw 2,897 IOPS read and 1,502 IOPS write without cache; leveraging the cache in the AIC, we saw 20,021 IOPS read and 22,439 IOPS write.

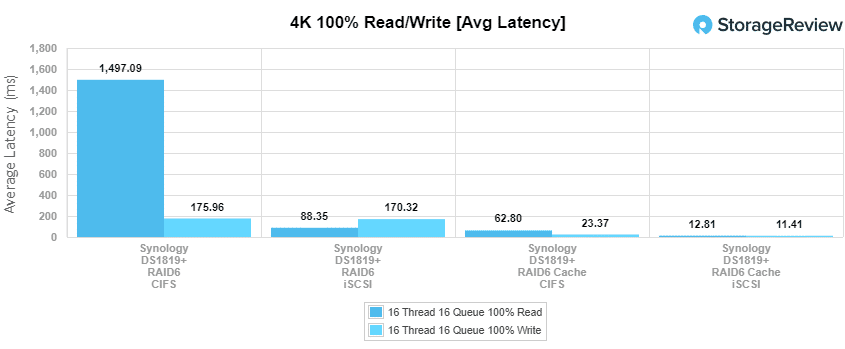

With 4K average latency, CIFS gave us 1,497ms read and 176ms write without the cache, and then turning it on it dropped to 63ms read and 23ms write. iSCSI saw 88ms read and 170ms write, then turning on the cache it dropped to 12.8ms read and 11.4ms write.

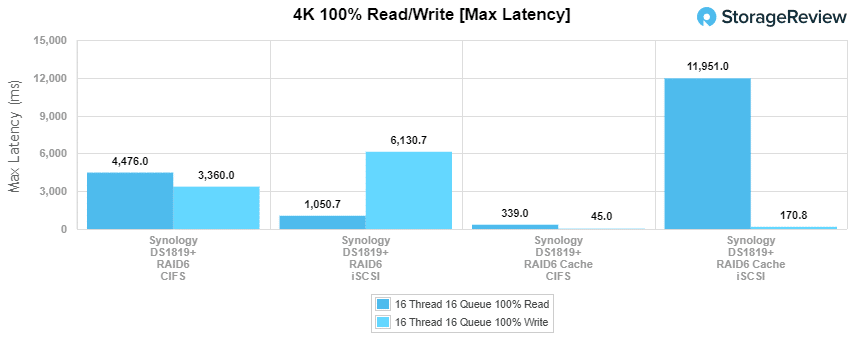

Next up is 4K max latency. Here, CIFS hit 4,476ms read and 3,360ms write without the cache; leveraging the AIC the numbers dropped to 339ms read and 45ms write. iSCSI had 1,051ms read and 6,131ms write without the cache, and with it read latency went way up to 11,951ms but write latency dropped to 171ms.

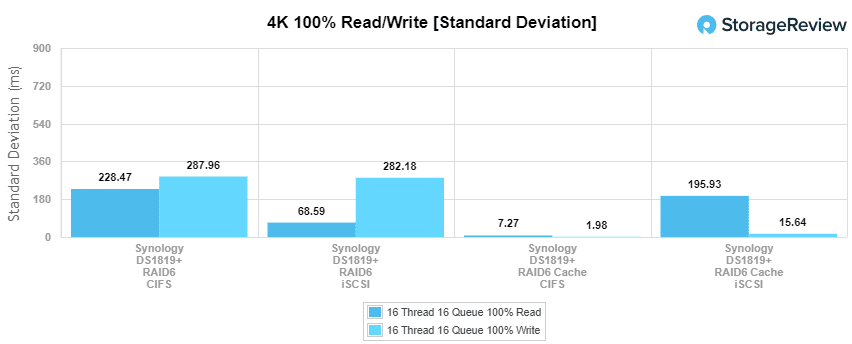

Our last 4K test is the standard deviation. Here, without the cache, CIFS gave us 228ms read and 288ms write; with the cache enabled, latency dropped all the way to 7.3ms read and 2ms write. For iSCSI, we again saw a spike instead of a drop in reads, going from 69ms non-cache to 196ms cached. Writes showed an improvement, going from 282ms to 16ms.

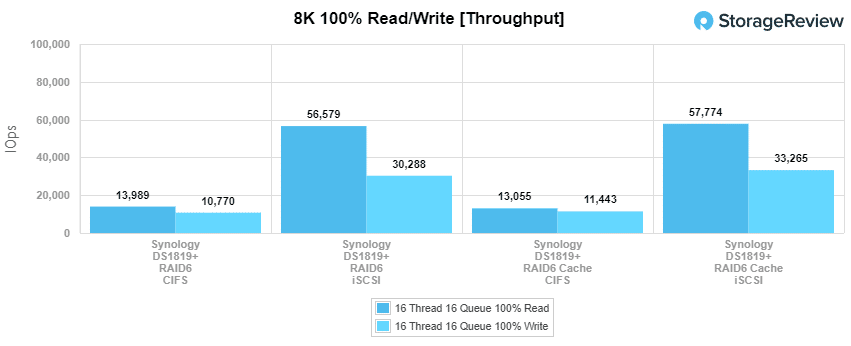

Our next benchmark measures 100% 8K sequential throughput with a 16T16Q load in 100% read and 100% write operations. Here, the CIFS configuration without the cache had 13,989 IOPS read and 10,770 IOPS write; after the cache was enabled the numbers went to 13,055 IOPS read and 11,443 IOPS write. With iSCSI we saw 56,579 IOPS read and 30,288 IOPS write without the cache enabled; with it enabled, we saw the performance go up a bit to 57,774 IOPS read and 33,265 IOPS write.

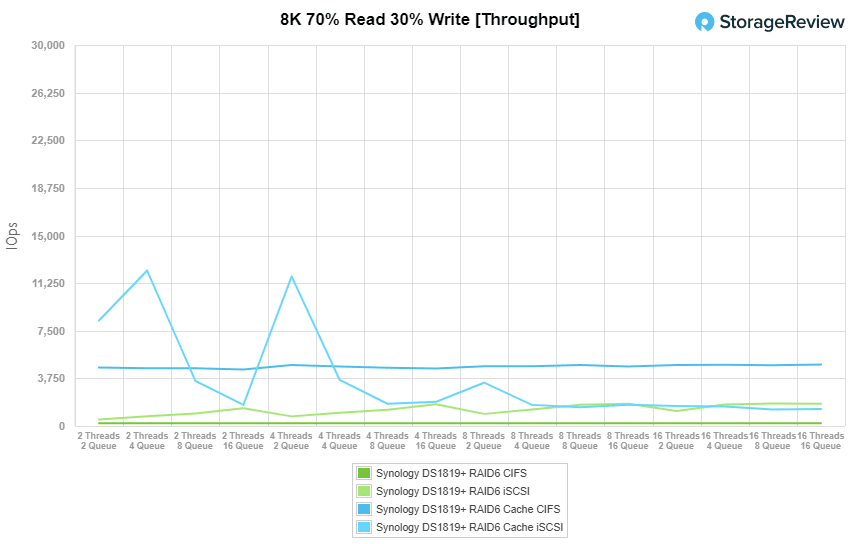

Compared to the fixed 16 thread, 16 queue max workload we performed in the 100% 4K write test, our mixed workload profiles scale the performance across a wide range of thread/queue combinations. In these tests, we span workload intensity from 2 threads and 2 queues up to 16 threads and 16 queues. In the non-cached CIFS, we saw throughput start at 221 IOPS and end at 219 IOPS, pretty steady throughout. With the cache enabled we saw CIFS start at 4,597 IOPS and end at 4,844 IOPS. For iSCSI, the non-cache started at 519 IOPS and ended at 1,751 IOPS. With the cache activated we saw iSCSI start at 8,308 IOPS and end down at 1,340 IOPS.

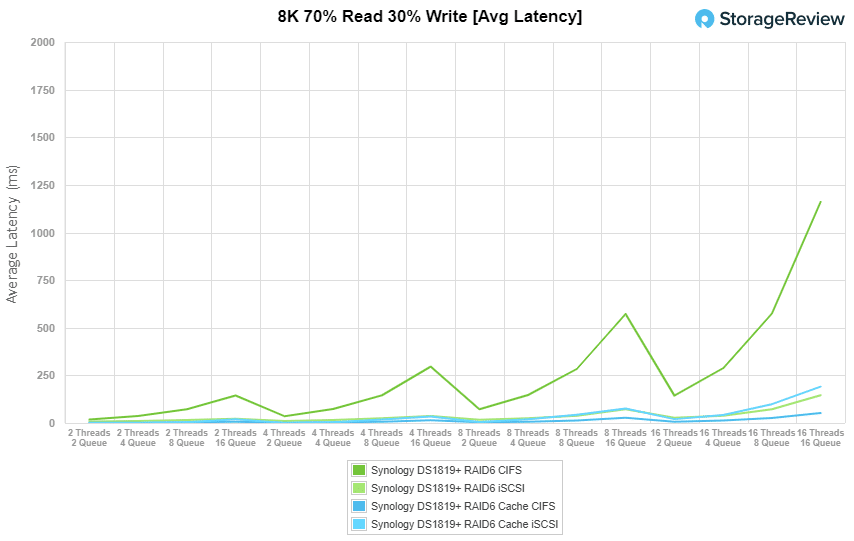

When looking at 8K 70/30 average response times, the CIFS configuration started at 18ms and finished at 1,161ms non-cache, and with the card active dropped down to 860µs at the start and finished at 53ms. For iSCSI, we saw 7.7ms at the start and 146ms at the end without the card; with the card it was 470µs at the start and 191ms at the end.

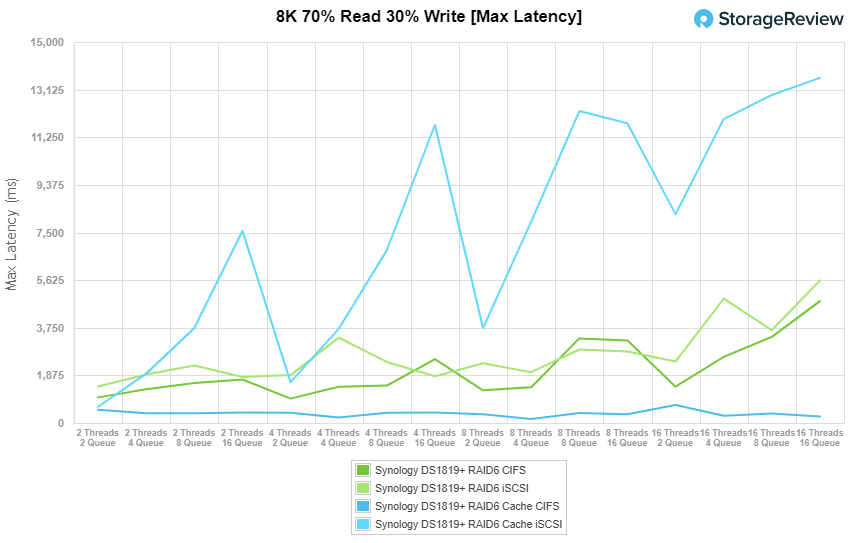

With 8K 70/30 max latency, the CIFS config started around 1,009ms and went up to 4,799ms throughout. With the cache enabled the numbers went from 523ms down to 260ms throughout. With iSCSI, we saw latency go from 1,436ms to 5,614ms without the cache and 640ms to 13,588ms with the cache.

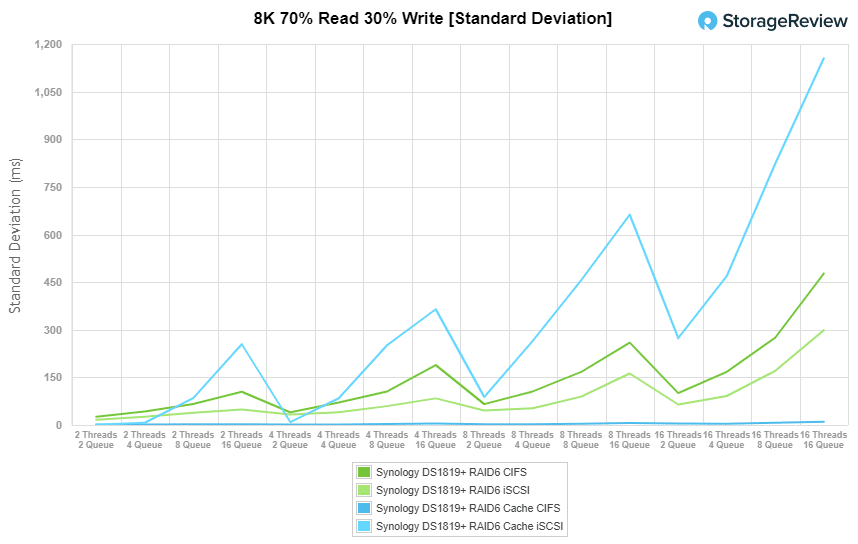

For 8K 70/30 standard deviation, the CIFS configuration started at 26ms and ran to 477ms without the cache; with the card it went from 1.3ms to 10.1ms. For iSCSI, we saw 17ms to 299ms without the cache, and 920µs to 1,155ms with it.

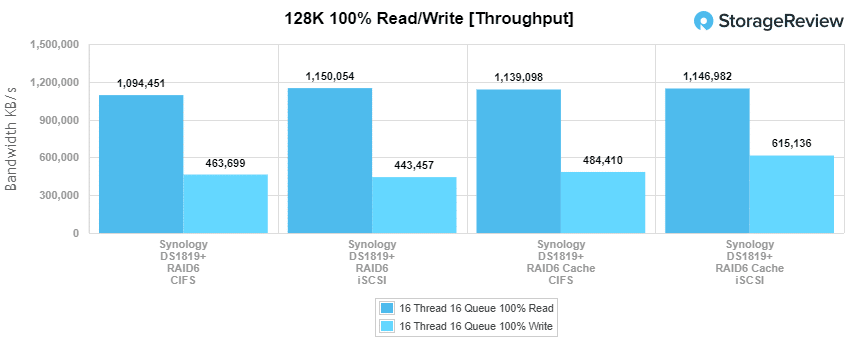

The last Enterprise Synthetic Workload benchmark is our 128K test, which is a large-block sequential test that shows the highest sequential transfer speed for a device. In this workload scenario, CIFS had 1.09GB/s read and 464MB/s write without the cache and 1.14GB/s read and 484MB/s write with it. For iSCSI we saw 1.15GB/s read and 443MB/s write without cache and 1.15GB/s read and 615MB/s write with it.
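To put the sequential read numbers in context, it helps to compare them with the raw line rate of a 10GbE link. The Python sketch below does that arithmetic; it assumes the tests ran over the card's 10GbE port, uses decimal units (1 GB = 10^9 bytes), and ignores Ethernet and protocol overhead, so the real ceiling is somewhat lower than what it computes. The function names are made up for this example.

```python
def line_rate_gb_per_s(link_gbps: float) -> float:
    """Raw link speed in gigabytes per second (8 bits per byte, no overhead)."""
    return link_gbps / 8


def link_utilization(throughput_gb_per_s: float, link_gbps: float) -> float:
    """Fraction of the raw line rate consumed by a measured throughput."""
    return throughput_gb_per_s / line_rate_gb_per_s(link_gbps)


# 128K sequential read results reported above, in GB/s.
READS = {
    "CIFS, no cache": 1.09,
    "CIFS, cache": 1.14,
    "iSCSI, no cache": 1.15,
    "iSCSI, cache": 1.15,
}

if __name__ == "__main__":
    print(f"10GbE raw line rate: {line_rate_gb_per_s(10):.2f} GB/s")
    print(f"1GbE raw line rate:  {line_rate_gb_per_s(1):.3f} GB/s")
    for label, gb_per_s in READS.items():
        print(f"{label}: {link_utilization(gb_per_s, 10):.0%} of 10GbE")
```

Under those assumptions, the sequential reads already sit close to the raw limit of a single 10GbE port, which is one plausible reason the cache has little room to improve them.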

Conclusion

Synology has come up with an easy way to add 10GbE connectivity and NVMe cache to a select number of its NAS devices through the Synology E10M20-T1 AIC. The card fits in nicely with the company’s SSD line and has slots to fit two 2280 or 22110 M.2 drives. This allows users to load the NAS up with high-capacity HDDs and then use the card to boost I/O performance. And, of course, the 10GbE port increases network speed over the onboard GbE ports.

For performance, we once again leveraged the Synology DS1819+ with WD Red 14TB HDDs, only this time we added the E10M20-T1 AIC with two Synology SNV3400-400G SSDs. In most, but not all, cases we saw an improvement in performance. Instead of listing the performance again, let’s instead look at the differences first with CIFS configuration. In 4K CIFS throughput we saw an increase of 3,905 IOPS read and 9,489 IOPS write. In 4K average latency, we saw latency drop 1,434ms in reads and 153ms in writes. In 4K max latency, we saw a drop of 4,137ms read and 3,315ms in write. 4K standard deviation saw a drop of 220.7ms read and 286ms in writes. In 100% 8K, we saw a decrease of 934 IOPS in reads and an increase of 673 IOPS in write. Large block sequential saw a boost of 50MB/s in reads and 20MB/s in writes.

With iSCSI performance, we saw highlights of 4K throughput having an increase of 17,124 IOPS read and 20,937 IOPS write. In 4K average latency, we saw a drop of 75.2ms in reads and 158.6ms in writes. In 4K max latency, we saw an increase of 10,900ms read and a decrease of 5,960ms in write. 4K standard deviation saw a spike in latency again, going up 127ms in reads, and a drop in writes of 266ms. In 100% 8K, we saw an increase of 1,195 IOPS in reads and 2,977 IOPS in write. Large block sequential saw read hold steady at 1.15GB/s with or without cache and a 172MB/s increase in writes.
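For readers who want to reproduce these differences, the short Python sketch below recomputes a few of them from the per-test 4K figures reported in the Performance section. The dictionary layout and the function names are just one way to organize the numbers for illustration; only the values themselves come from our results.

```python
# (no cache, with cache) pairs copied from the 4K results in this review.
RESULTS = {
    ("CIFS", "4K read IOPS"): (170, 4075),
    ("CIFS", "4K write IOPS"): (1461, 10950),
    ("iSCSI", "4K read IOPS"): (2897, 20021),
    ("iSCSI", "4K write IOPS"): (1502, 22439),
    ("CIFS", "4K avg read latency ms"): (1497, 63),
    ("CIFS", "4K avg write latency ms"): (176, 23),
    ("iSCSI", "4K avg read latency ms"): (88, 12.8),
    ("iSCSI", "4K avg write latency ms"): (170, 11.4),
}


def delta(no_cache, with_cache):
    """Change after enabling the cache; positive means the value went up."""
    # Rounded to one decimal so float subtraction noise does not leak out.
    return round(with_cache - no_cache, 1)


def summarize(results):
    """Map each (protocol, metric) key to its cached-minus-uncached delta."""
    return {key: delta(*pair) for key, pair in results.items()}


if __name__ == "__main__":
    for (protocol, metric), change in summarize(RESULTS).items():
        print(f"{protocol:5} {metric:26} {change:+,}")
```

A positive delta is good news for IOPS and bad news for latency, so the sign has to be read alongside the metric name.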

The Synology E10M20-T1 AIC is an easy way to add 10GbE connectivity and an SSD cache to select Synology NAS models. While it didn’t improve all performance across the board, it did see some significant boosts in several of our benchmarks.
