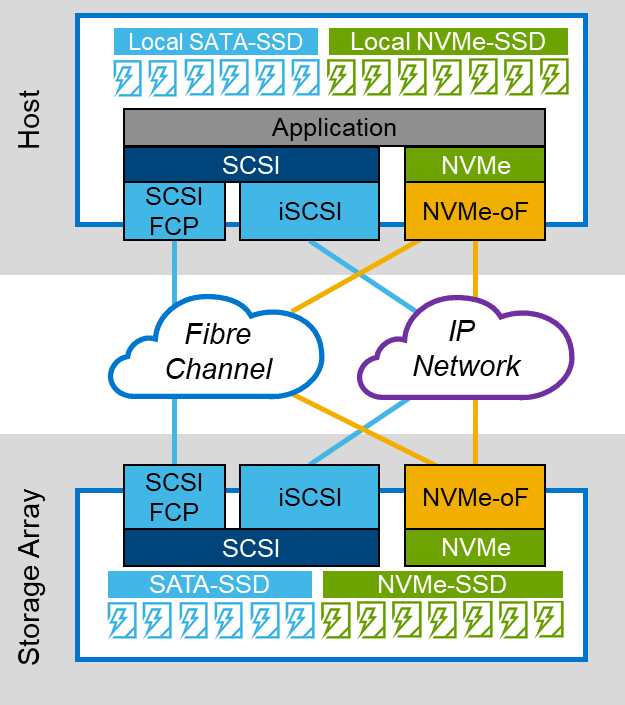

We have been impressed with NVMe drives since we first reviewed them and saw what they were capable of. In the early days, their cost and the lack of PCIe slots in servers to host them limited their adoption as local storage. However, in a recent report, we found that they were only negligibly more expensive than SATA-based flash drives on a cost-per-GB basis. Furthermore, all the major vendors have equipped their latest servers with the slots to handle multiple NVMe drives. But local NVMe storage, just like local SATA-based storage, has limited usefulness in the data center. To be truly useful, NVMe drives need to be aggregated, managed, and made available remotely.

Delivering NVMe remotely has faced some growing pains, the largest being the choice of protocol. Unlike traditional NAS and SAN storage, NVMe has some unique characteristics that need to be preserved when delivering it to the compute side of the house. As with most new technologies, many different standards have emerged to present remote NVMe drives to servers.

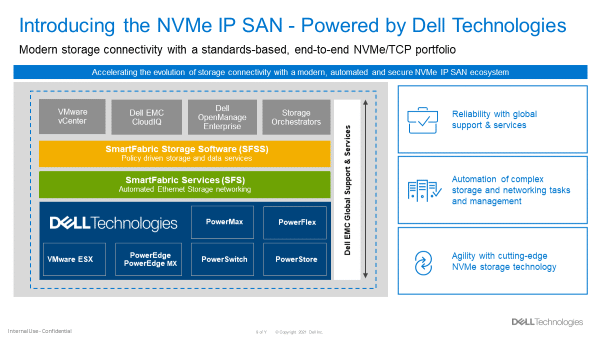

Having several technology standards compete to achieve the same goal is analogous to throwing spaghetti against the wall and seeing what sticks: it is messy, but it works. With this in mind, Dell just announced that it is throwing its considerable weight behind the NVMe/TCP remote protocol. We believe this is the right choice and that it will set the standard that others in the industry will follow. To support this decision, Dell will shortly release a slew of new products based on NVMe/TCP.

What is NVMe/TCP?

NVMe/TCP, as the name implies, delivers NVMe over standard Ethernet using TCP. To be fair, other NVMe remote protocols may be slightly more performant, but they require non-standard equipment not only in the servers but also in the network. We agree with Dell that the ability to deliver NVMe over equipment already in use, using an open standard, outweighs the slight performance gain of some of the other protocols.

Dell’s release announcement included performance testing (which we hope to verify in-house) showing that, compared with iSCSI, NVMe/TCP delivers 2.5 to 3.5 times more IOPS, reduces latency by up to 75%, and cuts CPU load per IO in half. Usually, we are highly skeptical of performance claims showing gains of this size, but our past experience with NVMe technology places these numbers in the realm of possibility.
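To put those ratios in concrete terms, here is a minimal sketch that applies them to an iSCSI baseline. The baseline figures are hypothetical placeholders chosen only for illustration, not measurements from Dell or from our lab, and the function name is our own.

```python
def project_nvme_tcp(iscsi_iops, iscsi_latency_us, iscsi_cpu_us_per_io):
    """Apply the ratios from Dell's announcement to an iSCSI baseline."""
    return {
        # "2.5 to 3.5 times more IOPS"
        "iops_low": iscsi_iops * 2.5,
        "iops_high": iscsi_iops * 3.5,
        # "reduces latency by up to 75%" (best case)
        "latency_best_us": iscsi_latency_us * (1 - 0.75),
        # "cuts CPU load per IO in half"
        "cpu_us_per_io": iscsi_cpu_us_per_io * 0.5,
    }


# Hypothetical iSCSI baseline, for illustration only.
projection = project_nvme_tcp(
    iscsi_iops=100_000,
    iscsi_latency_us=400.0,
    iscsi_cpu_us_per_io=20.0,
)

for name, value in projection.items():
    print(f"{name}: {value:,.1f}")
```

With that made-up baseline, the claims would translate to 250,000 to 350,000 IOPS, a best-case latency of 100 µs, and 10 µs of CPU time per IO. Note that the latency figure is an upper bound on the improvement, so real results could fall anywhere short of it.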

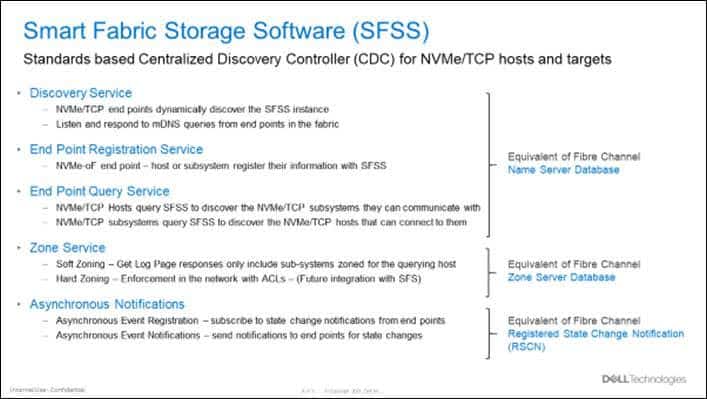

Dell identified some issues with using NVMe/TCP in the data center and came up with corresponding solutions. One of the major issues is that NVMe/TCP relies on a Direct Discovery model, in which each host has to be pointed at the storage interfaces it should connect to, a task that becomes harder to manage as the environment grows. To mitigate this problem, Dell has developed a Centralized Discovery Controller (CDC) called SmartFabric Storage Software (SFSS) that automates the discovery of remote NVMe.

Dell’s NVMe/TCP Support

Dell has announced that on November 18th, 2021, they will release the following products to support their NVMe/TCP initiative:

- SmartFabric Storage Software (SFSS) – Automates storage connectivity for your NVMe IP SAN. It allows host and storage interfaces to register with a Centralized Discovery Controller, enables storage administrators to create and activate zoning configurations and then automatically notifies hosts of new storage resources. Hosts will then automatically connect to these storage resources.

- PowerStore: NVMe/TCP protocol and SFSS integration – We have enabled our market-leading Dell EMC PowerStore storage array to use the NVMe/TCP protocol and it will support both the Direct Discovery and Centralized Discovery management models. PowerStore integration with SFSS is initially accomplished via the Pull Registration technique.

- VMware ESXi 7.0u3: NVMe/TCP protocol and SFSS integration – We have partnered with VMware to add support for the NVMe/TCP protocol as well as the ability for each ESX server interface to explicitly register discovery information with SFSS via the Push Registration technique. We have also updated our OMNI plugin to support the configuration of SFSS from within vCenter.

- PowerEdge: ESXi 7.0u3 using NVMe/TCP has been qualified.

- PowerSwitch and SmartFabric Services (SFS): While our NVMe IP SAN solution will run over traditional fabric switches, Dell EMC PowerSwitch and SmartFabric Services (SFS) can be used to automate the configuration of the switches that make up your NVMe IP SAN. In future releases, SFSS will integrate with SFS to create hardware-enforced zones.

In the future, Dell expects to add support for NVMe/TCP to both its PowerMax and PowerFlex product lines.

Dell SFSS is of particular interest, as it combines several sub-services to give an IP network functionality equivalent to that of a Fibre Channel (FC) network.
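The register, zone, and notify flow that Dell describes for SFSS can be sketched as a toy model. This is only an illustration of the centralized discovery idea under our own assumptions: the class, its methods, and the simplified NQN and address strings are all hypothetical and do not represent the SFSS API or the NVMe discovery protocol itself.

```python
class ToyDiscoveryController:
    """Hypothetical, in-memory stand-in for a Centralized Discovery Controller."""

    def __init__(self):
        self.hosts = {}       # host name -> storage addresses it has been told about
        self.subsystems = {}  # subsystem name -> address of its storage interface
        self.zones = {}       # zone name -> set of member names

    def register_host(self, host_nqn):
        # A host interface registers itself once with the controller.
        self.hosts.setdefault(host_nqn, [])

    def register_subsystem(self, subsys_nqn, address):
        # A storage interface registers itself once with the controller.
        self.subsystems[subsys_nqn] = address

    def activate_zone(self, name, members):
        # The storage administrator decides which endpoints may see each other.
        members = set(members)
        unknown = members - set(self.hosts) - set(self.subsystems)
        if unknown:
            raise ValueError(f"unregistered members: {sorted(unknown)}")
        self.zones[name] = members
        # Hosts in the zone are notified of the storage resources in the zone.
        for host in sorted(members & set(self.hosts)):
            for subsys in sorted(members & set(self.subsystems)):
                address = self.subsystems[subsys]
                if address not in self.hosts[host]:
                    self.hosts[host].append(address)


# Placeholder names and a documentation-range IP address, for illustration only.
cdc = ToyDiscoveryController()
cdc.register_host("nqn.host-a")
cdc.register_host("nqn.host-b")
cdc.register_subsystem("nqn.array-1", "192.0.2.10:4420")
cdc.activate_zone("zone-1", ["nqn.host-a", "nqn.array-1"])

print(cdc.hosts)
```

In this sketch, only the zoned host learns about the array, while the second host learns nothing until an administrator adds it to a zone. That access-control role is roughly what zoning provides on an FC fabric, which is the comparison Dell is drawing.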

As mentioned above, we see Dell’s support as a huge win for the adoption and standardization of NVMe/TCP in the data center. We hope to get some equipment into our lab for a hands-on evaluation of these new products.

You can find out more information about Dell Technologies NVMe/TCP solutions here.
