Since its inception, Marvell has focused on infrastructure networking, building products that meet the service levels demanded by a wide variety of applications and workloads. IT architects need intelligent technology that provides the flexibility and scalability to support SSDs, flash, sizable databases, and virtualization. Marvell firmly believes Fibre Channel (FC) is the go-to technology for storage transport, and it offers an FC migration strategy to NVMe-oF via FC (FC-NVMe), with native support in VMware vSphere and ESXi. This technology is available today, but many customers are not aware of it.

NVMe-oF Primer

Marvell believes FC will remain the gold standard for storage networks, primarily because of FC's history of reliability and innovation, and it is enhancing the value of FC by bringing NVMe-oF technology to Fibre Channel for the enterprise. Marvell has kept innovation at the fore across a deep product portfolio that supports EBOF, FC-NVMe, DPUs, SSD controllers, and NVMe-oF. Given that lineup and its interest in networking technologies, Marvell invited us into its lab to do performance testing focused on FC-NVMe, NVMe/TCP, and NVMe-RoCEv2 within a VMware ESXi environment.

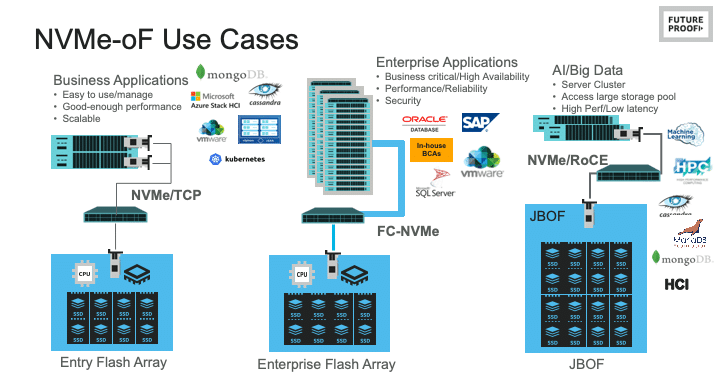

As vendors continue to roll out storage based on the NVMe protocol, enterprises are fully embracing the technology. NVMe flash arrays are deployed in datacenters worldwide, many using Fibre Channel as the preferred transport because of its proven reliability, performance, and security. NVMe-oF has grown in popularity, and NVM Express rolled the standard into the NVMe 2.0 specification announced in 2021.

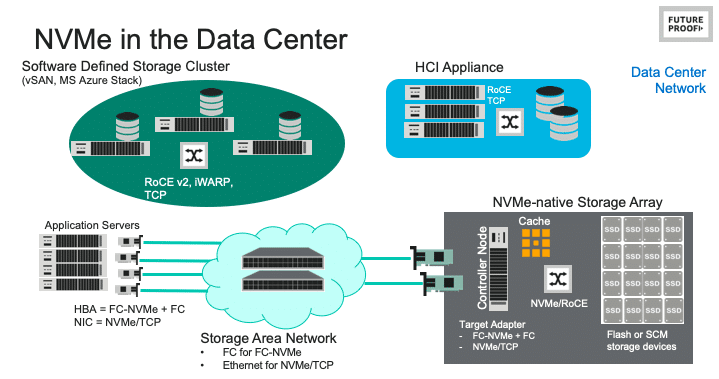

As NVMe-oF standards have matured, vendors have incorporated the technology into storage and transport hardware, easing deployment concerns. Software-defined and HCI appliances will use NVMe over Ethernet protocols, since they typically connect to Ethernet networks. In the SAN, organizations using Fibre Channel today will transition to FC-NVMe, while those using iSCSI will likely transition to NVMe/TCP.

For decades, FC has been the go-to technology for mission- and business-critical environments because its design meets the performance and latency demands of block storage workloads. FC helps meet the tight SLAs of mission- and business-critical workloads, which are extremely sensitive to the performance and availability of the underlying storage infrastructure. As these workloads have grown in size, they have strained infrastructure scalability, and access to storage systems is one of the primary pressure points. NVMe over Fibre Channel provides numerous performance, latency, and reliability benefits. Sustaining predictable storage performance as NVMe is adopted requires seamless integration and compatibility with existing Fibre Channel infrastructure.

Other options for building out an IT infrastructure fabric include Ethernet and InfiniBand. However, Fibre Channel's inherent capabilities best address next-generation infrastructure performance and scalability demands for NVMe. Fibre Channel fabrics have been well established since the ANSI standard was published in 1994 and have continued to evolve, which supports the case that Fibre Channel is still the premier network technology for storage. In addition, an FC fabric can carry NVMe and SCSI traffic simultaneously on the same network.

As organizations transition to more demanding workloads such as AI/ML, NVMe over FC provides predictable network performance and low latency. Vendors have been shipping switches that concurrently support FC-NVMe, SCSI/FC, and native SAN fabrics. Integrating network components that support FC-NVMe is simple and typically requires no additional hardware: if the current implementation is an FC SAN operating at 16GFC or above, NVMe commands are encapsulated in FC. Beyond possibly changing or adding NVMe target disks, the only other step might be a firmware or driver update for the HBA or host OS.
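To make the point about NVMe and SCSI sharing one fabric concrete, here is a minimal Python sketch. The frame model, class, and function names are hypothetical simplifications; the only protocol detail it relies on is that the TYPE field in the FC frame header identifies the FC-4 protocol inside, with 0x08 for FCP (SCSI) and 0x28 for FC-NVMe, while switches forward frames by destination ID regardless of TYPE.

```python
from dataclasses import dataclass

# FC-4 TYPE values carried in the FC frame header's TYPE field.
FC4_TYPE_FCP = 0x08   # SCSI commands carried by FCP
FC4_TYPE_NVME = 0x28  # NVMe commands carried by FC-NVMe


@dataclass(frozen=True)
class FcFrame:
    """Hypothetical, heavily simplified frame: only the fields this sketch uses."""
    d_id: int        # 24-bit destination port ID; switches forward on this
    fc4_type: int    # TYPE field: tells the receiving port which FC-4 protocol is inside
    payload: bytes   # the encapsulated SCSI or NVMe information unit


def classify(frame: FcFrame) -> str:
    """Name the upper-layer protocol a frame carries, based only on its TYPE field."""
    if frame.fc4_type == FC4_TYPE_FCP:
        return "SCSI (FCP)"
    if frame.fc4_type == FC4_TYPE_NVME:
        return "NVMe (FC-NVMe)"
    return "other"


def count_by_protocol(frames) -> dict:
    """Tally mixed traffic on one fabric; forwarding itself never looks at TYPE."""
    counts = {}
    for frame in frames:
        key = classify(frame)
        counts[key] = counts.get(key, 0) + 1
    return counts


# Hypothetical mixed traffic from one host port to two targets on the same fabric.
traffic = [
    FcFrame(0x020200, FC4_TYPE_FCP, b"scsi read"),
    FcFrame(0x020300, FC4_TYPE_NVME, b"nvme read"),
    FcFrame(0x020300, FC4_TYPE_NVME, b"nvme write"),
]
print(count_by_protocol(traffic))  # {'SCSI (FCP)': 1, 'NVMe (FC-NVMe)': 2}
```

Because the protocol distinction lives in a header field rather than in the fabric design, the same zoned SAN can carry both kinds of traffic side by side.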

VMware and FC-NVMe

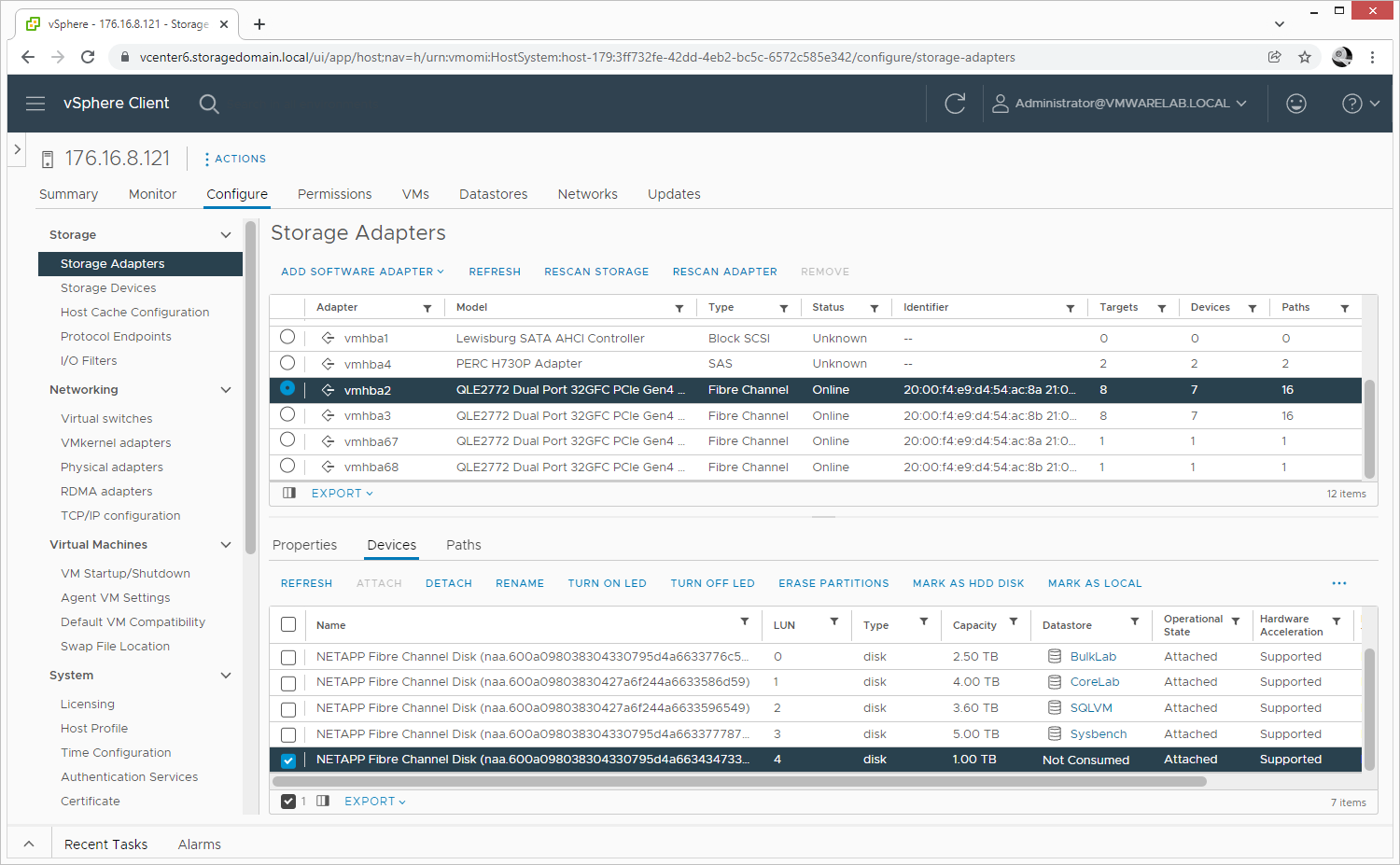

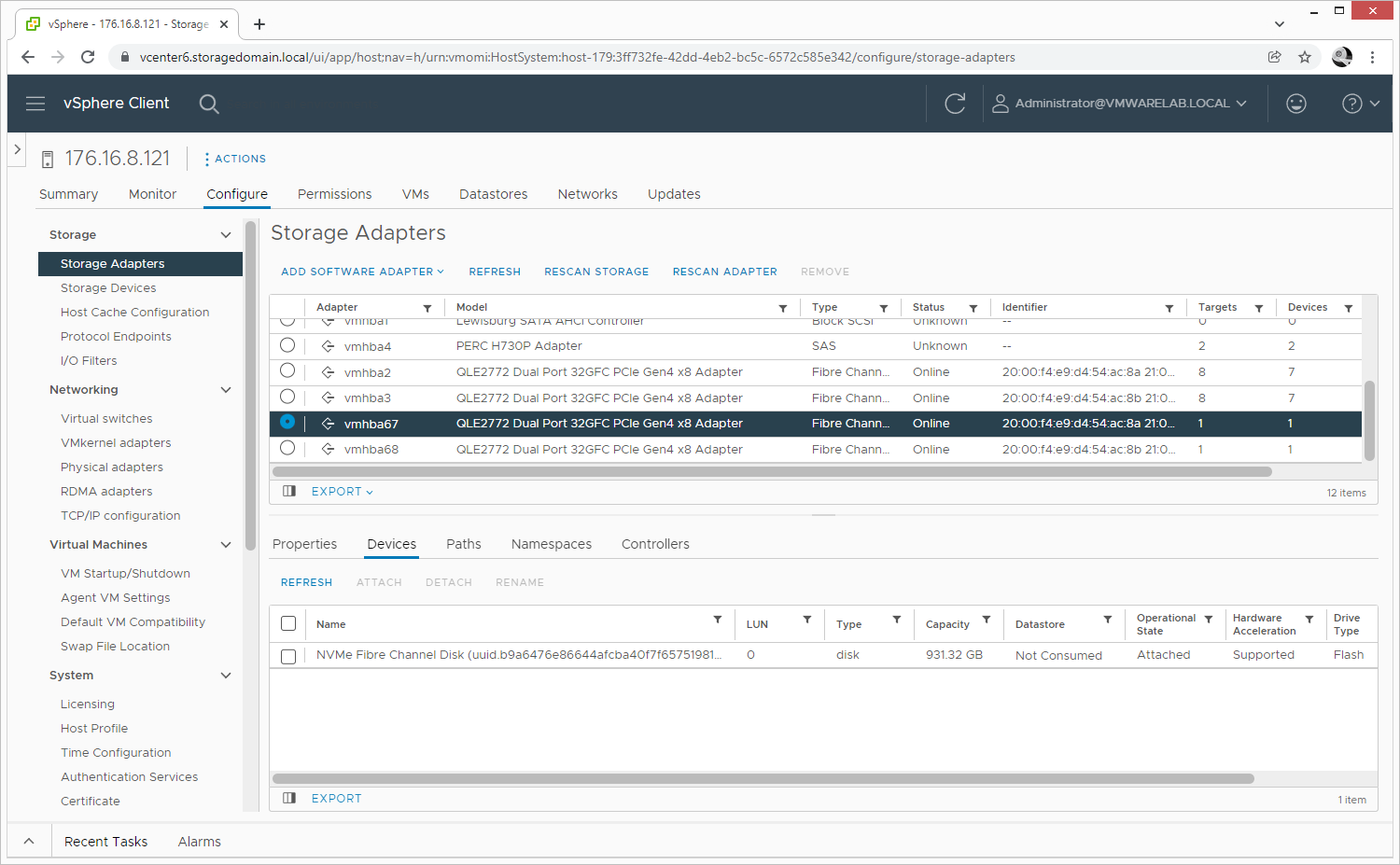

VMware ESXi 7.0 and later supports NVMe over Fibre Channel (FC-NVMe) and NVMe over RDMA over Converged Ethernet (NVMe-RoCE). NVMe-RDMA and NVMe-RoCE are essentially the same here, and the terms are sometimes used interchangeably. VMware more recently released ESXi 7.0 U3 with support for NVMe/TCP. For workloads running in a VMware vSphere or ESXi environment, integrating FC-NVMe is straightforward and looks similar to a traditional FC implementation. The following screenshots illustrate the similarities between configuring HBAs for FC and for FC-NVMe.
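The version dependencies above can be captured in a small lookup. This is a minimal Python sketch that encodes only the releases named in this article (ESXi 7.0 for FC-NVMe and NVMe-RoCE, 7.0 U3 for NVMe/TCP); the function names and the "7.0 U3" string format are assumptions, and VMware's compatibility documentation remains the authority for specific builds.

```python
# Minimum ESXi release per NVMe-oF transport, as stated in this article.
# Releases are (major, minor, update) tuples, so "7.0 U3" becomes (7, 0, 3).
MIN_RELEASE = {
    "FC-NVMe": (7, 0, 0),
    "NVMe-RoCE": (7, 0, 0),
    "NVMe/TCP": (7, 0, 3),
}


def parse_release(text: str) -> tuple:
    """Parse release strings such as '7.0' or '7.0 U3' into (major, minor, update)."""
    parts = text.upper().split()
    major, minor = (int(x) for x in parts[0].split("."))
    update = int(parts[1].lstrip("U")) if len(parts) > 1 else 0
    return (major, minor, update)


def supported_transports(release: str) -> list:
    """Transports whose minimum release is at or below the given release."""
    current = parse_release(release)
    return [name for name, minimum in MIN_RELEASE.items() if current >= minimum]


print(supported_transports("7.0 U2"))  # ['FC-NVMe', 'NVMe-RoCE']
print(supported_transports("7.0 U3"))  # ['FC-NVMe', 'NVMe-RoCE', 'NVMe/TCP']
```

Comparing tuples rather than raw strings avoids ordering mistakes such as treating "U10" as earlier than "U3".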

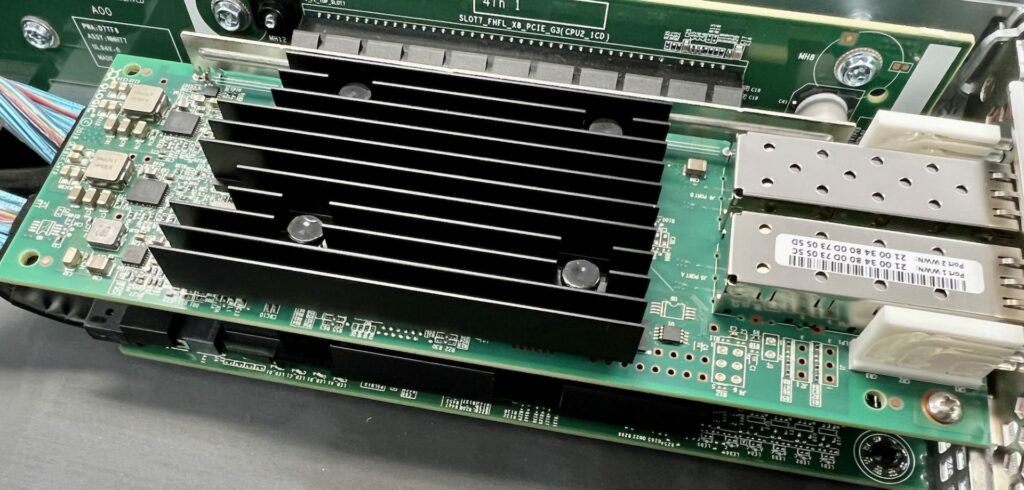

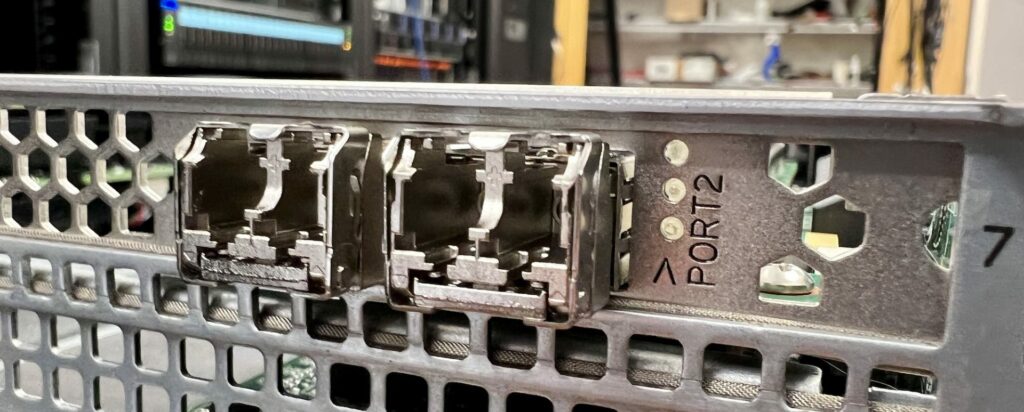

Using Marvell QLogic QLE2772 dual-port 32Gb FC adapters and a NetApp AFF A250 to present traditional FC LUNs and NVMe namespaces, we can show just how seamless it is for an end user to provision each storage type. With the storage zoned appropriately to a VMware host on the Fibre Channel fabric, the same workflow creates a datastore on either type.

Behind the scenes, the dual-port card presents as four devices: two for traditional FC storage and two for NVMe. In our screenshot, vmhba2 and vmhba3 are standard FC devices, and vmhba67 and vmhba68 are for NVMe. These devices appear automatically in VMware ESXi 7 with the built-in drivers and current firmware, with no special installation required. The VMware documentation recommends accepting the defaults during installation. In our first view, the traditional Fibre Channel device is selected, showing a 1TB NetApp Fibre Channel Disk that is “Not Consumed.”

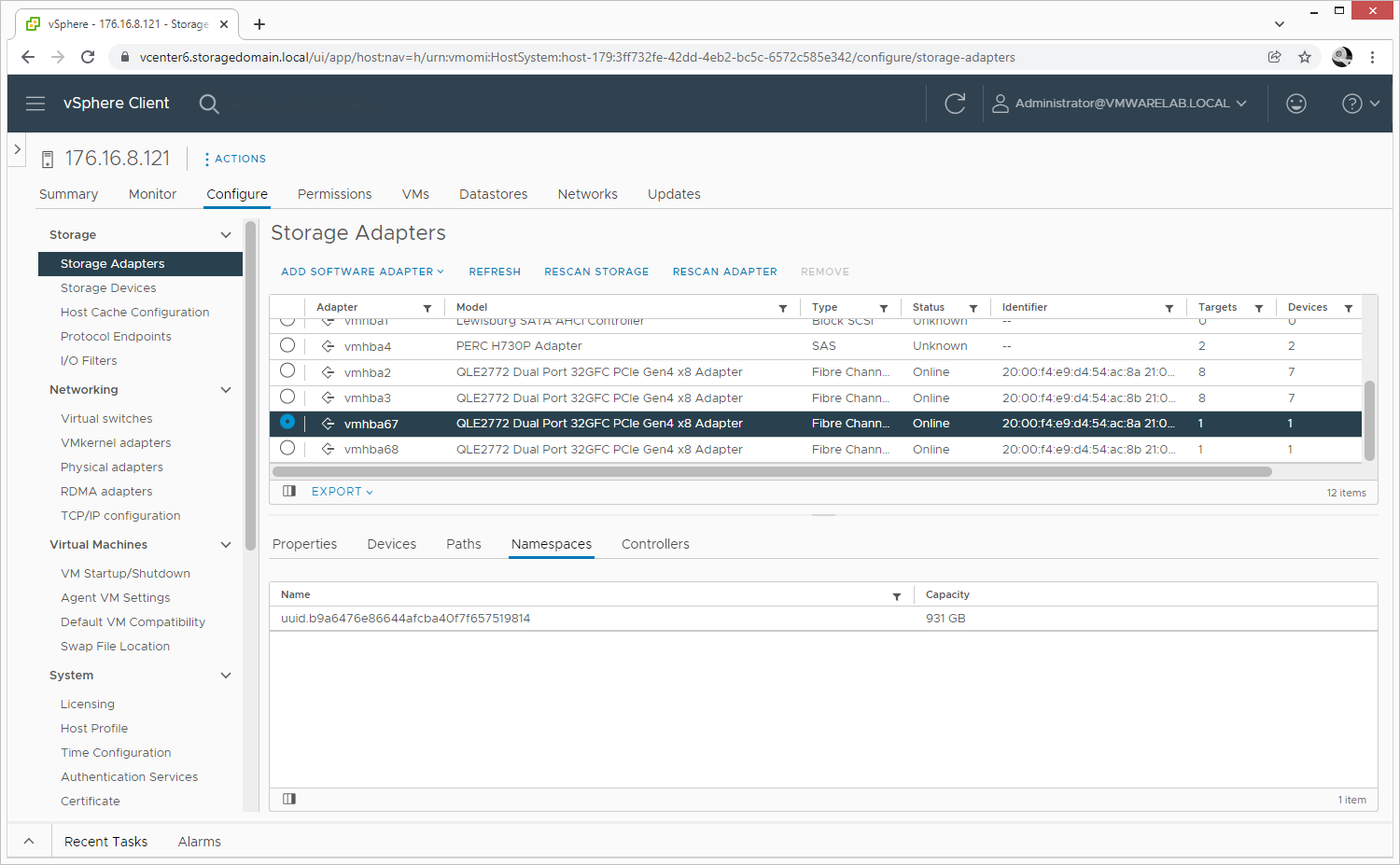

Switching the view to the Marvell QLogic NVMe view, we see another 1TB NVMe Fibre Channel Disk, also not consumed. The vSphere output includes options for Namespaces and Controllers to define how the system accesses the LUN.

The NVMe Namespace view would typically show multiple devices; however, we provisioned a single 1TB device for our test.

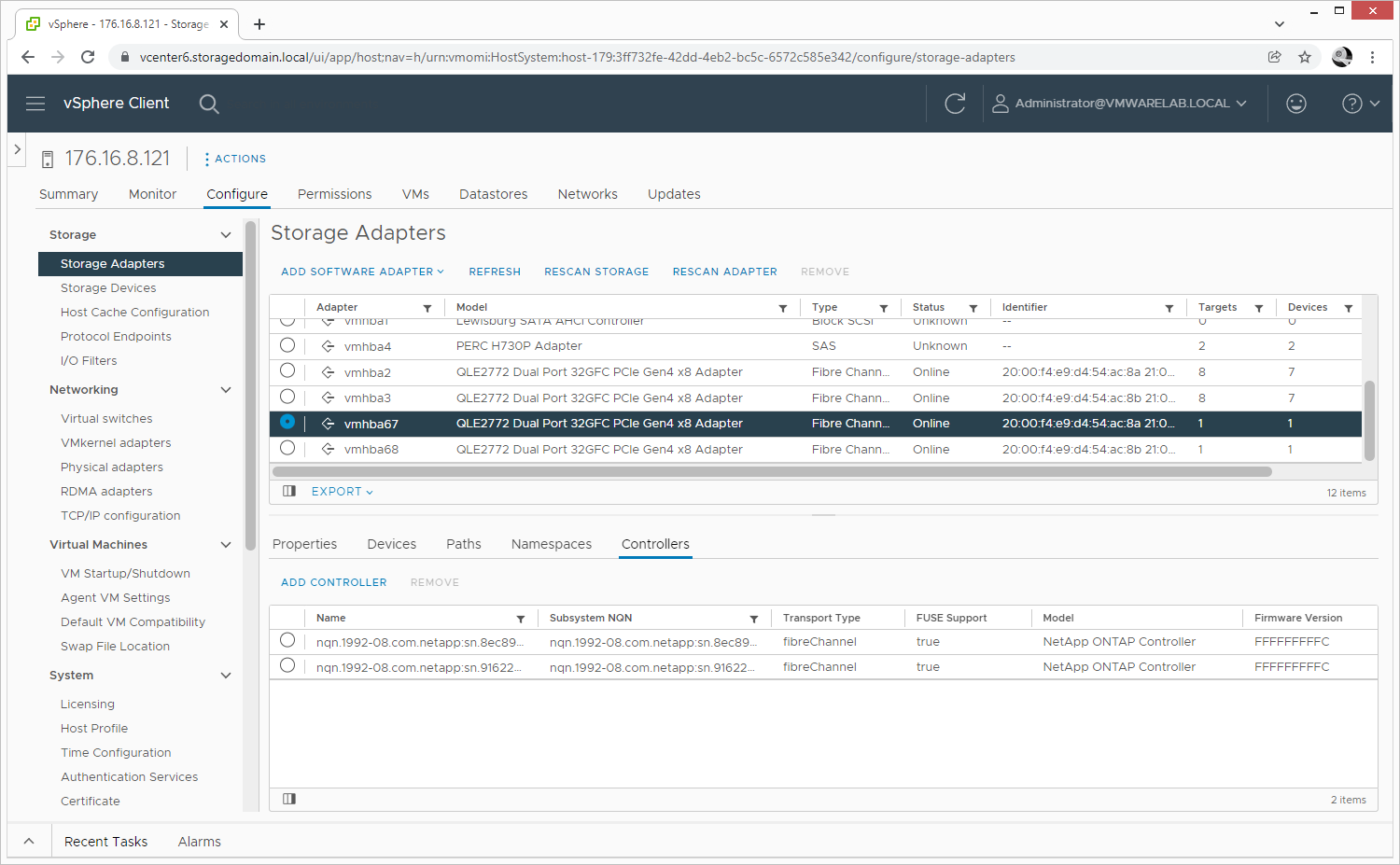

The Controllers view displays the NetApp ONTAP NVMe controllers that present the devices to the mapped hosts. The “Add Controller” option lets you add a controller manually, although VMware ESXi 7 discovers controllers automatically when the host is appropriately zoned to a given FC WWN.
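Automatic controller discovery depends on the host initiator port and the storage target port sharing a zone. The minimal Python sketch below models only that membership check; the zone names and WWPNs are hypothetical, and it does not represent any switch vendor's CLI or zoning database.

```python
import re


def normalize_wwpn(wwpn: str) -> str:
    """Return a WWPN as eight lowercase, colon-separated byte pairs.

    Accepts colon-separated, bare hex, or 0x-prefixed forms.
    """
    text = wwpn.strip().lower()
    if text.startswith("0x"):
        text = text[2:]
    digits = re.sub(r"[^0-9a-f]", "", text)  # drop separators such as ':' or '-'
    if len(digits) != 16:
        raise ValueError(f"not a 64-bit WWPN: {wwpn!r}")
    return ":".join(digits[i:i + 2] for i in range(0, 16, 2))


def zones_linking(zones: dict, initiator: str, target: str) -> list:
    """Names of zones that contain both the host initiator and the storage target port."""
    pair = {normalize_wwpn(initiator), normalize_wwpn(target)}
    return [name for name, members in zones.items()
            if pair <= {normalize_wwpn(m) for m in members}]


# Hypothetical zone set and WWPNs, for illustration only.
zones = {
    "esx01_nvme_port0": ["10:00:00:00:00:00:00:01", "20:00:00:00:00:00:00:0a"],
    "esx02_nvme_port0": ["10:00:00:00:00:00:00:02", "20:00:00:00:00:00:00:0a"],
}
print(zones_linking(zones, "1000000000000001", "0x200000000000000A"))  # ['esx01_nvme_port0']
```

An empty result is a quick hint that a controller missing from the Controllers view is a zoning issue rather than a host-side one.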

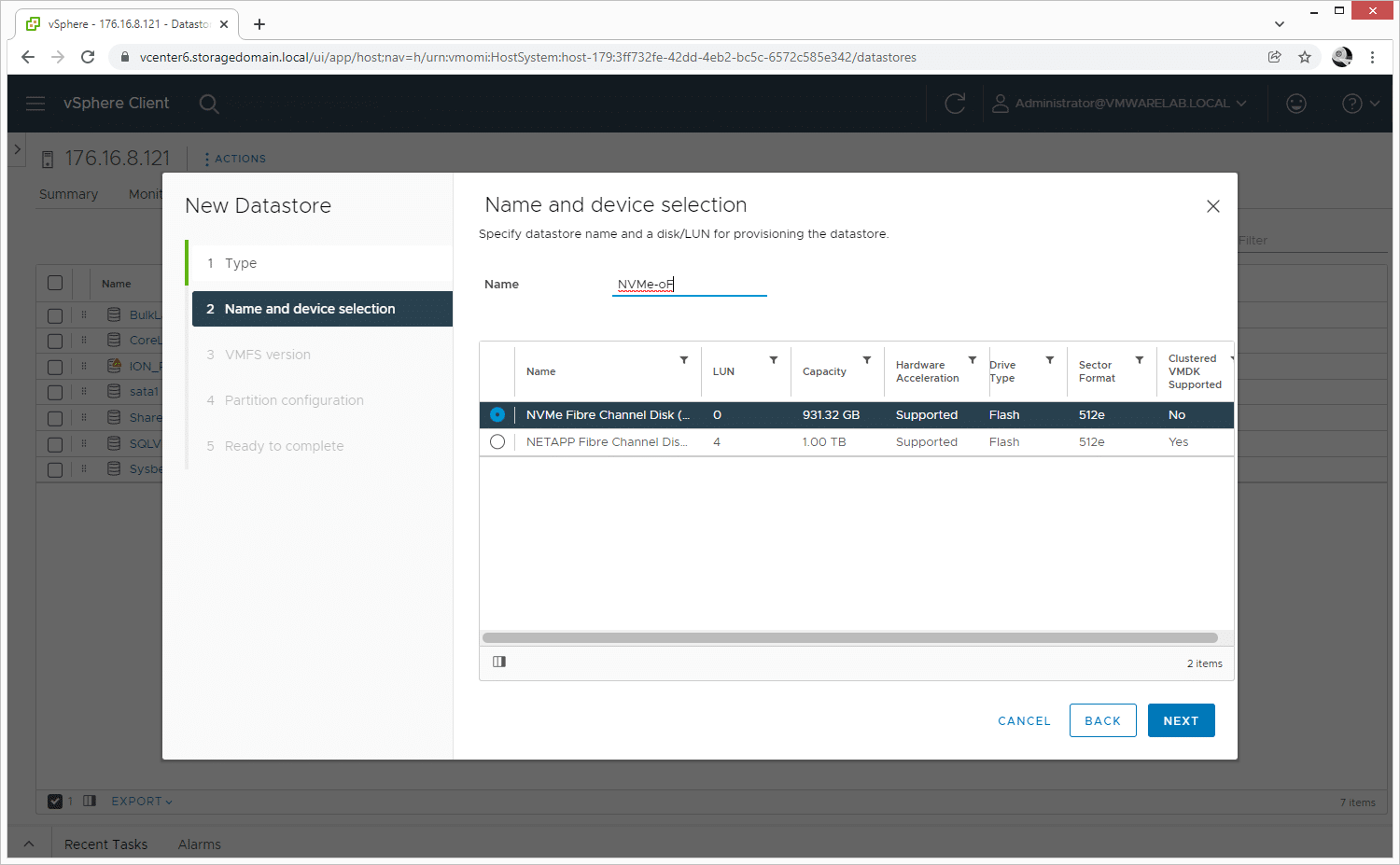

The next step is configuring storage. ESXi provides a “New Datastore” tool that makes it easy to add one to the system. Both FC and FC-NVMe storage types are available as options in our host setup, illustrating how painless the process is for an end user to configure. For our test, we first select the NVMe device, give the datastore an appropriate name, and move to the next step.

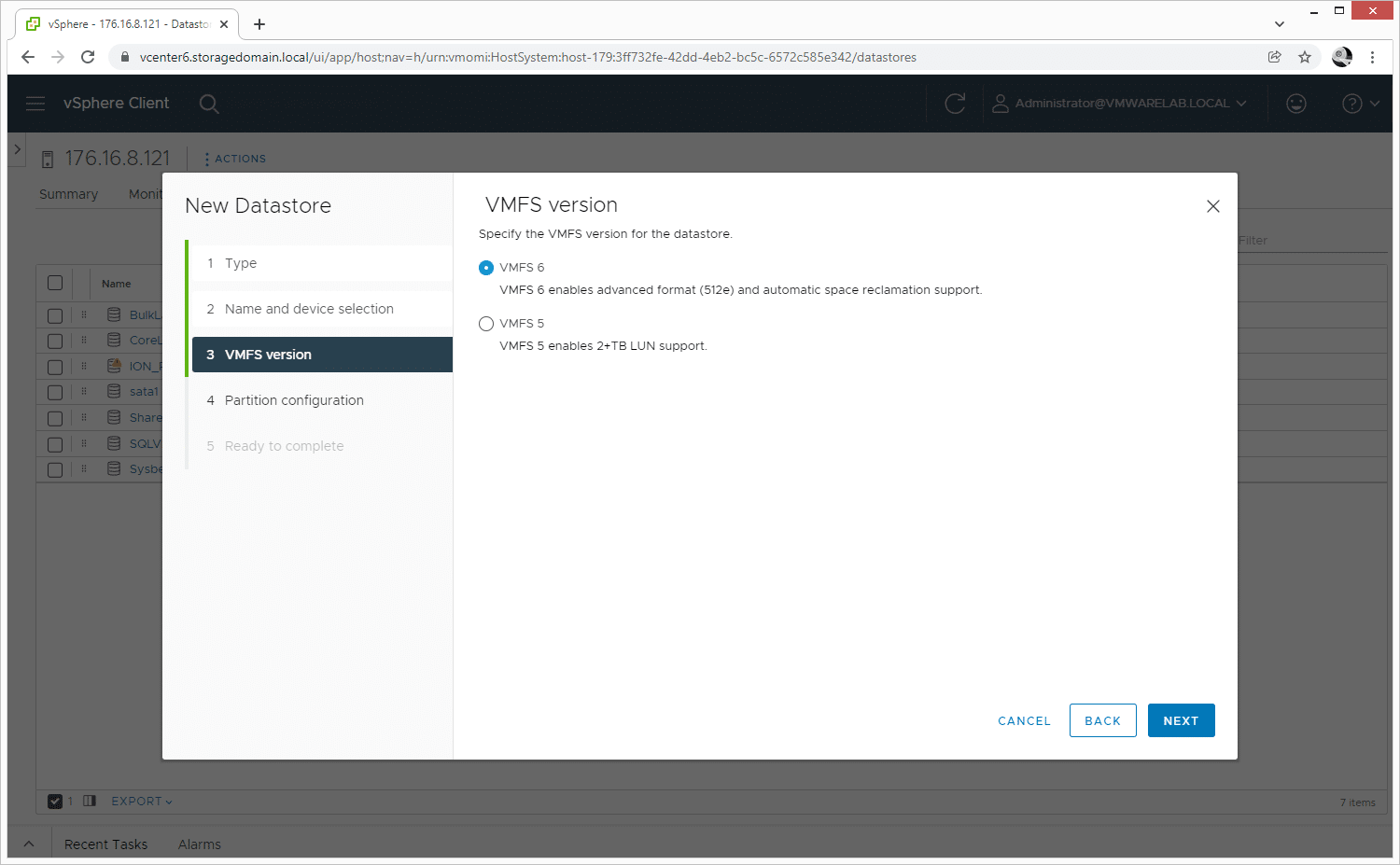

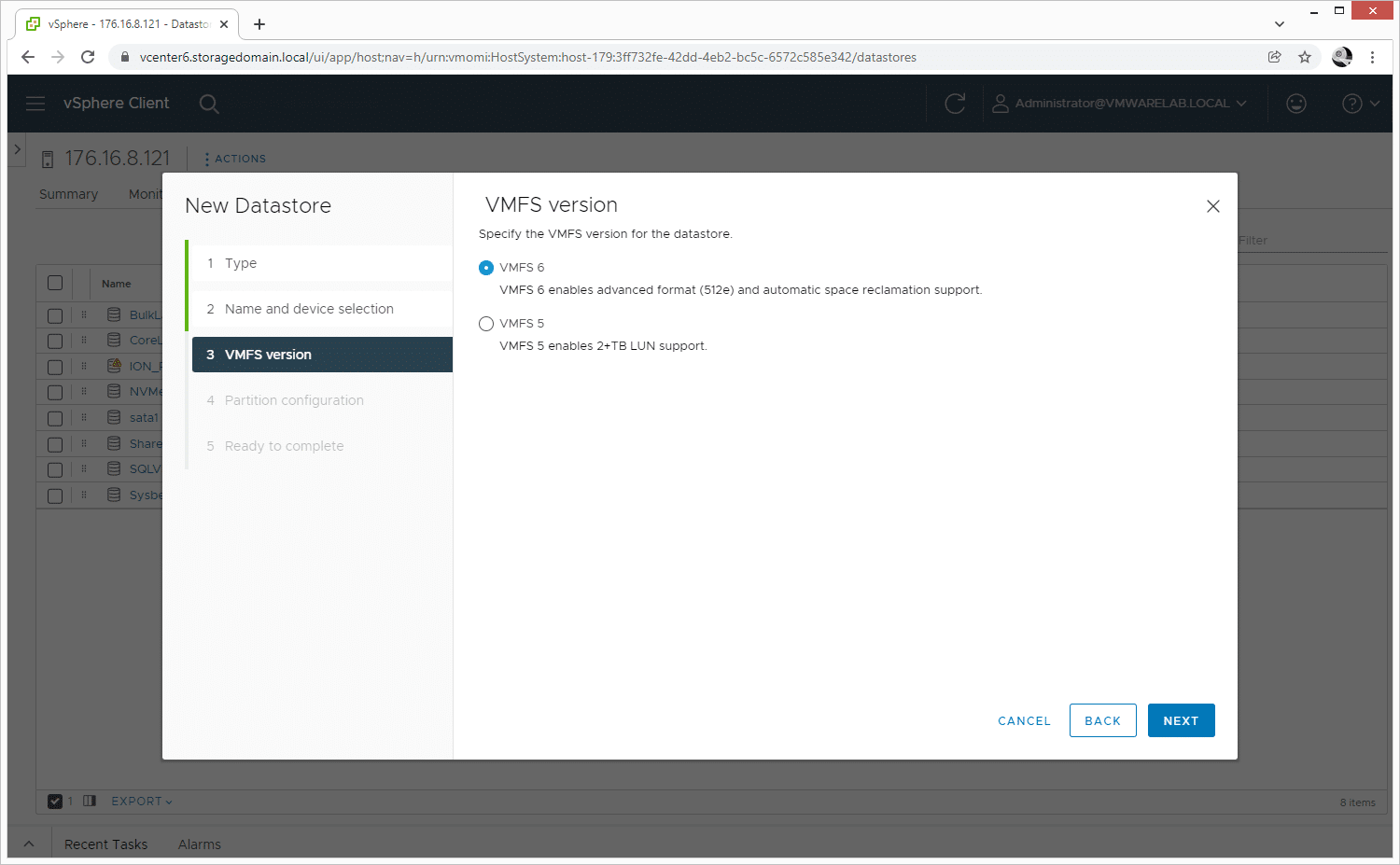

The NVMe datastore follows the same VMFS selection process, where the user can choose between VMFS 6 and the legacy VMFS 5.

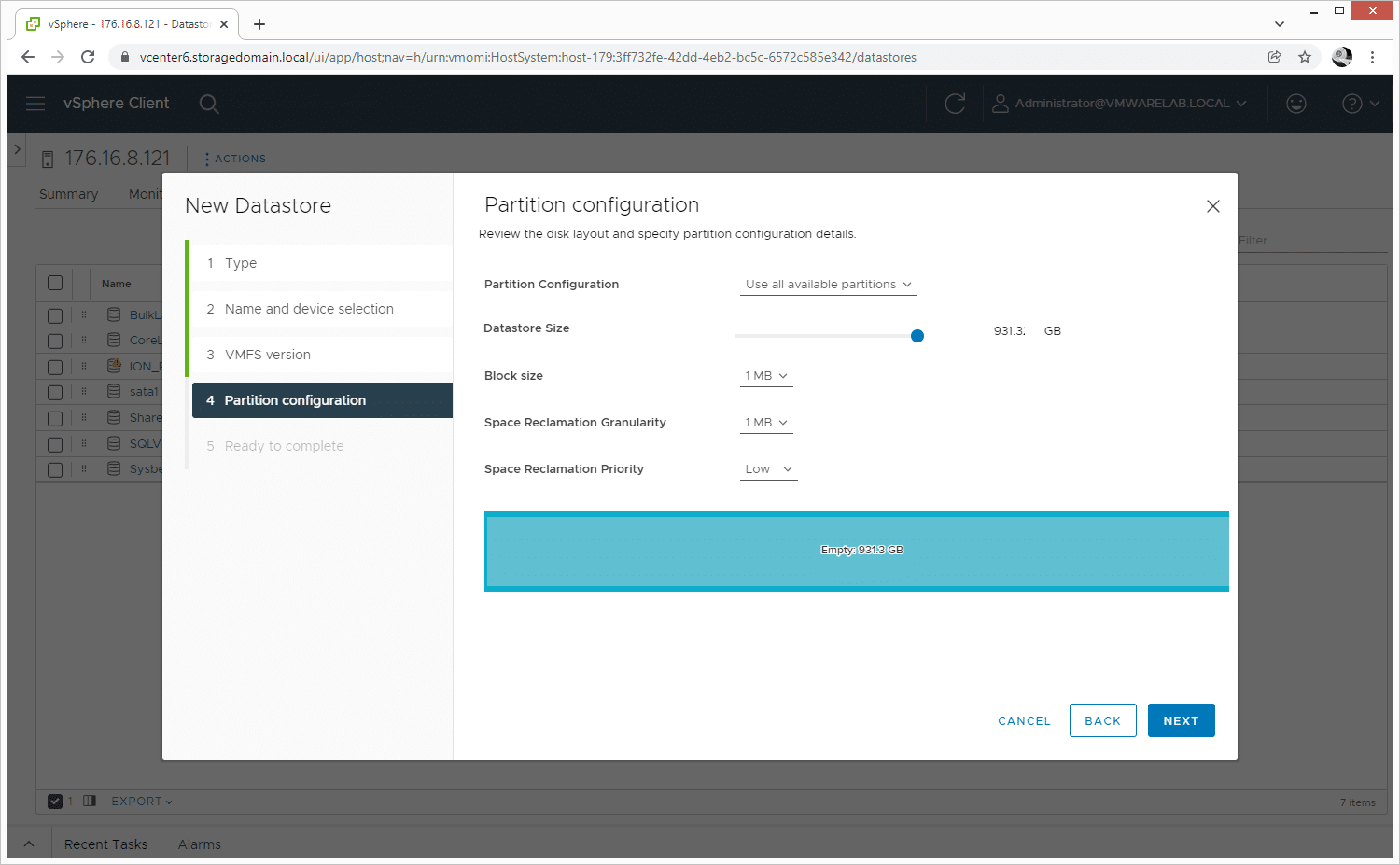

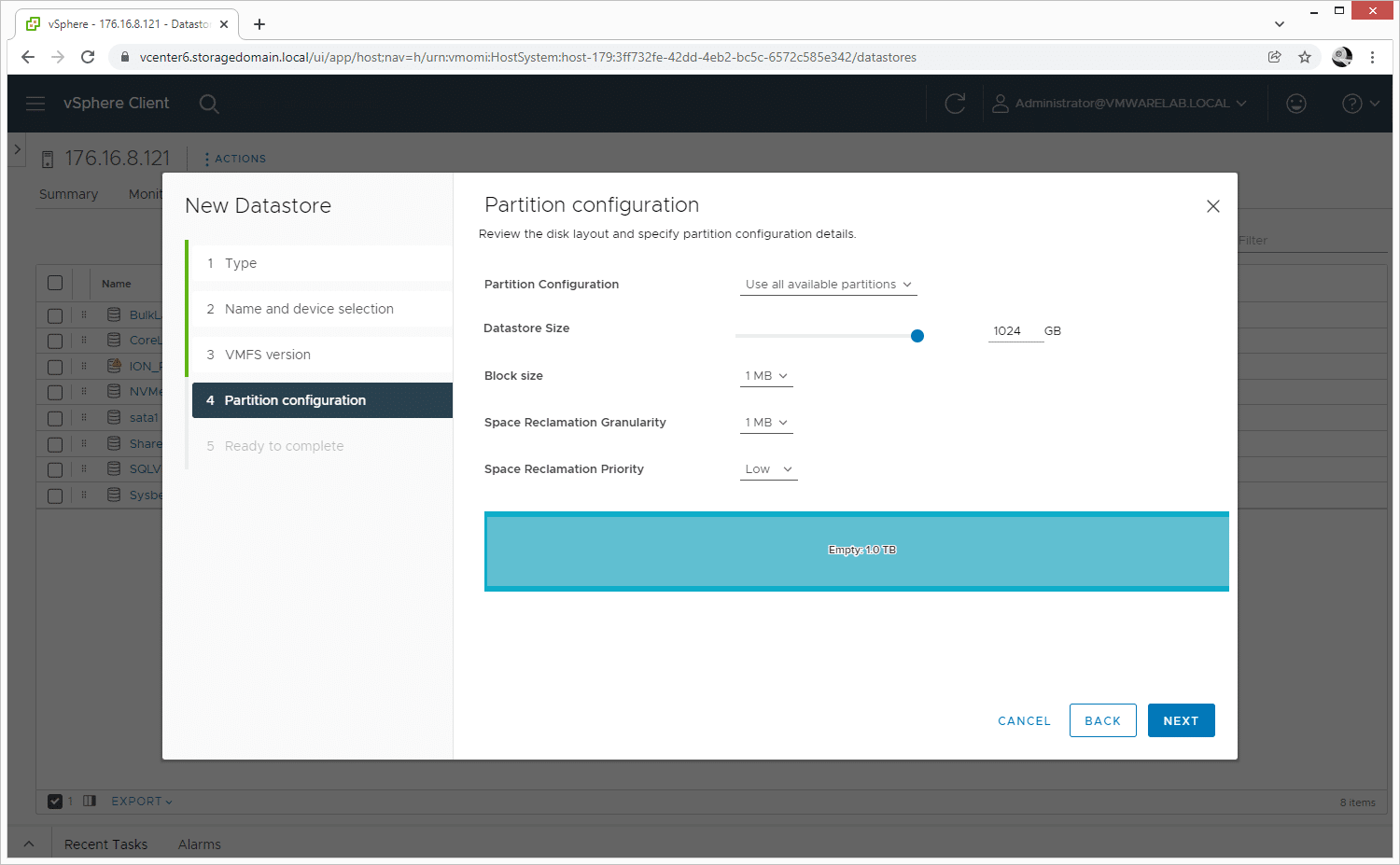

Next, the disk is partitioned for the datastore, using the entire available space on the device.

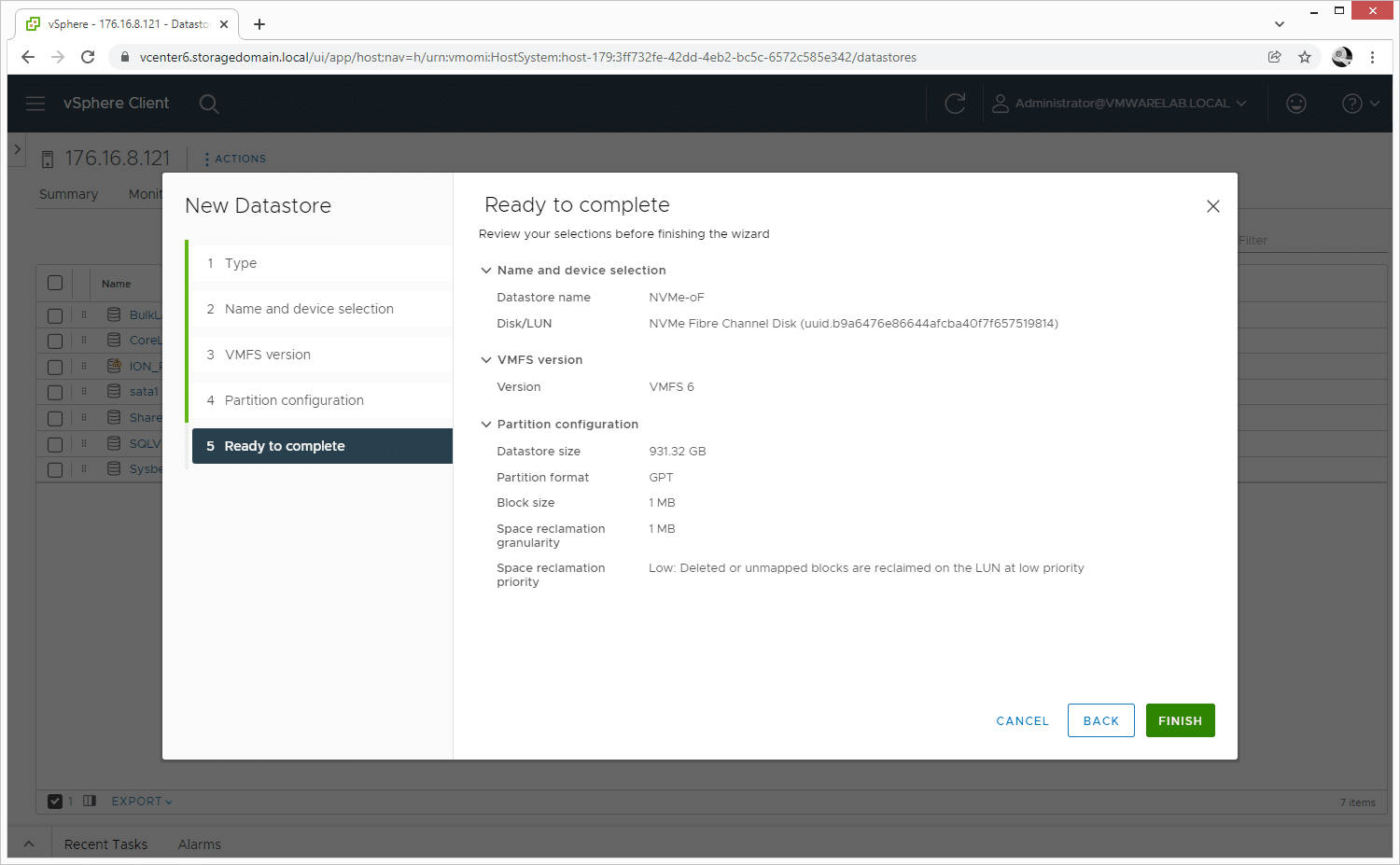

After those few steps, it’s time to create the new FC-NVMe datastore. Throughout the process, ESXi displays key information to help ensure the configuration is correct.

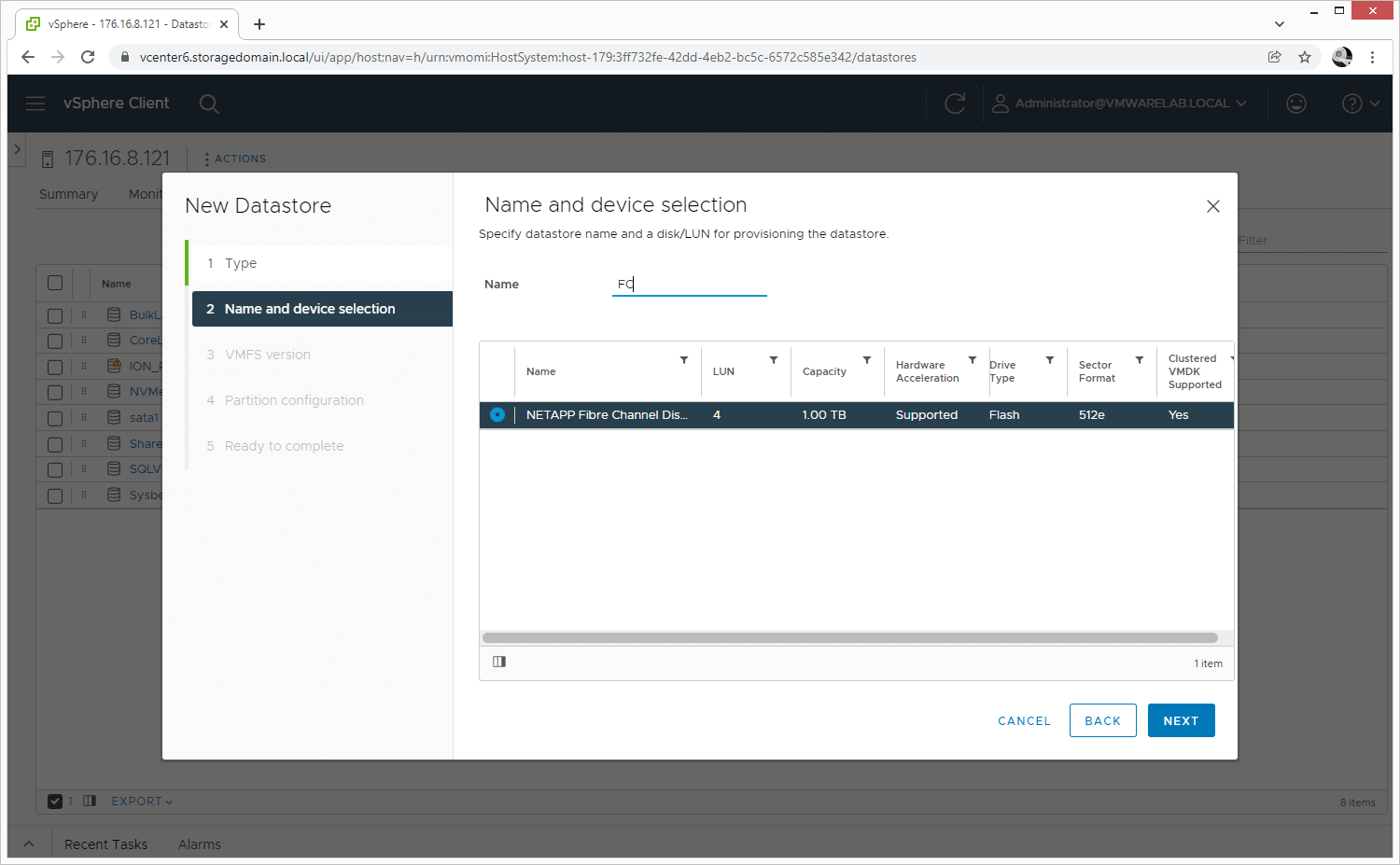

The steps to create FC storage are the same as the FC-NVMe steps outlined above. First, select the available FC device.

Then choose the VMFS version.

With the VMFS version selected, the next step is to partition the datastore, again using all available space on the device.

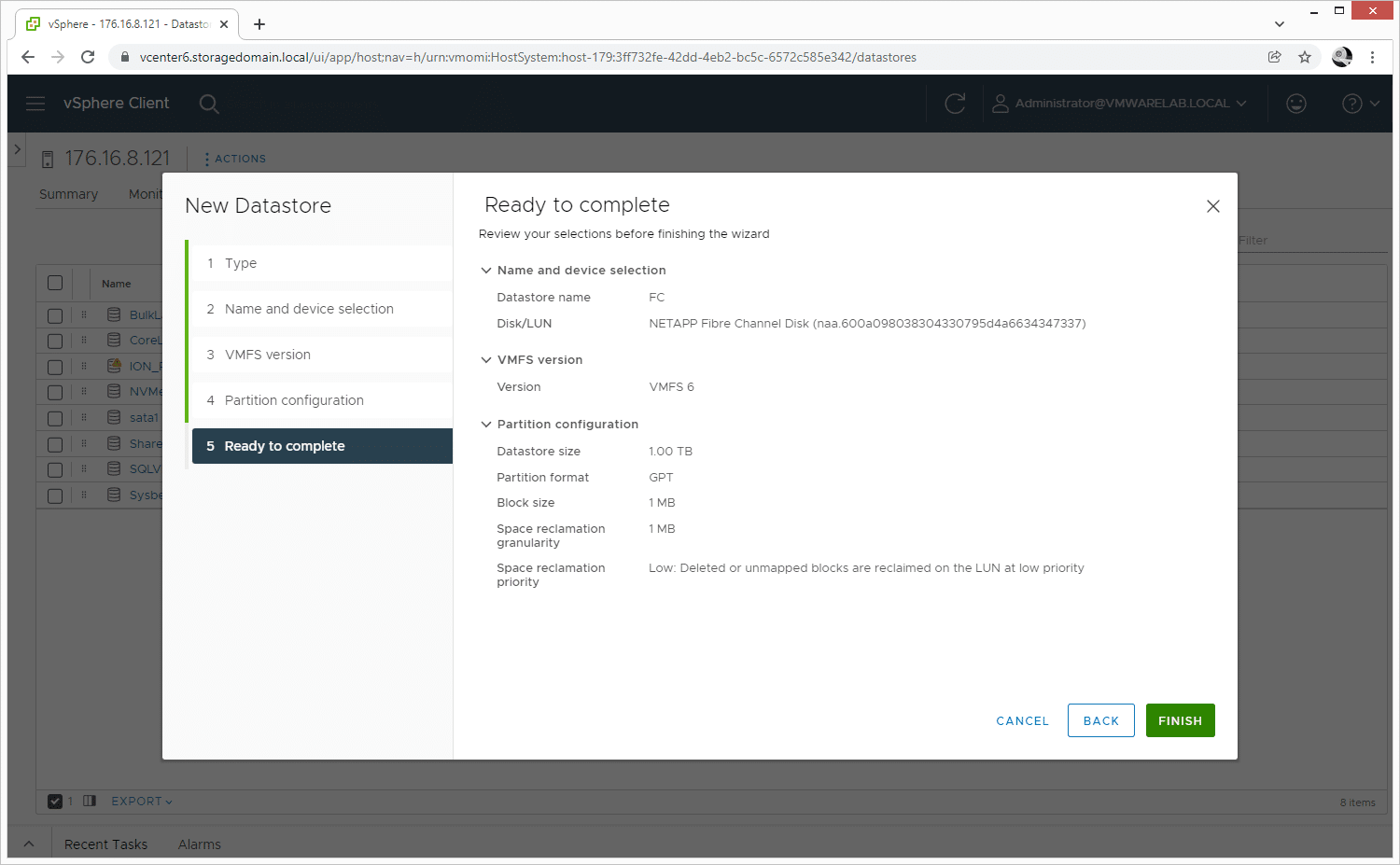

The output on the summary screen displays the NetApp Fibre Channel device instead of the NVMe Fibre Channel device, but the workflow to get to this point is the same for both.

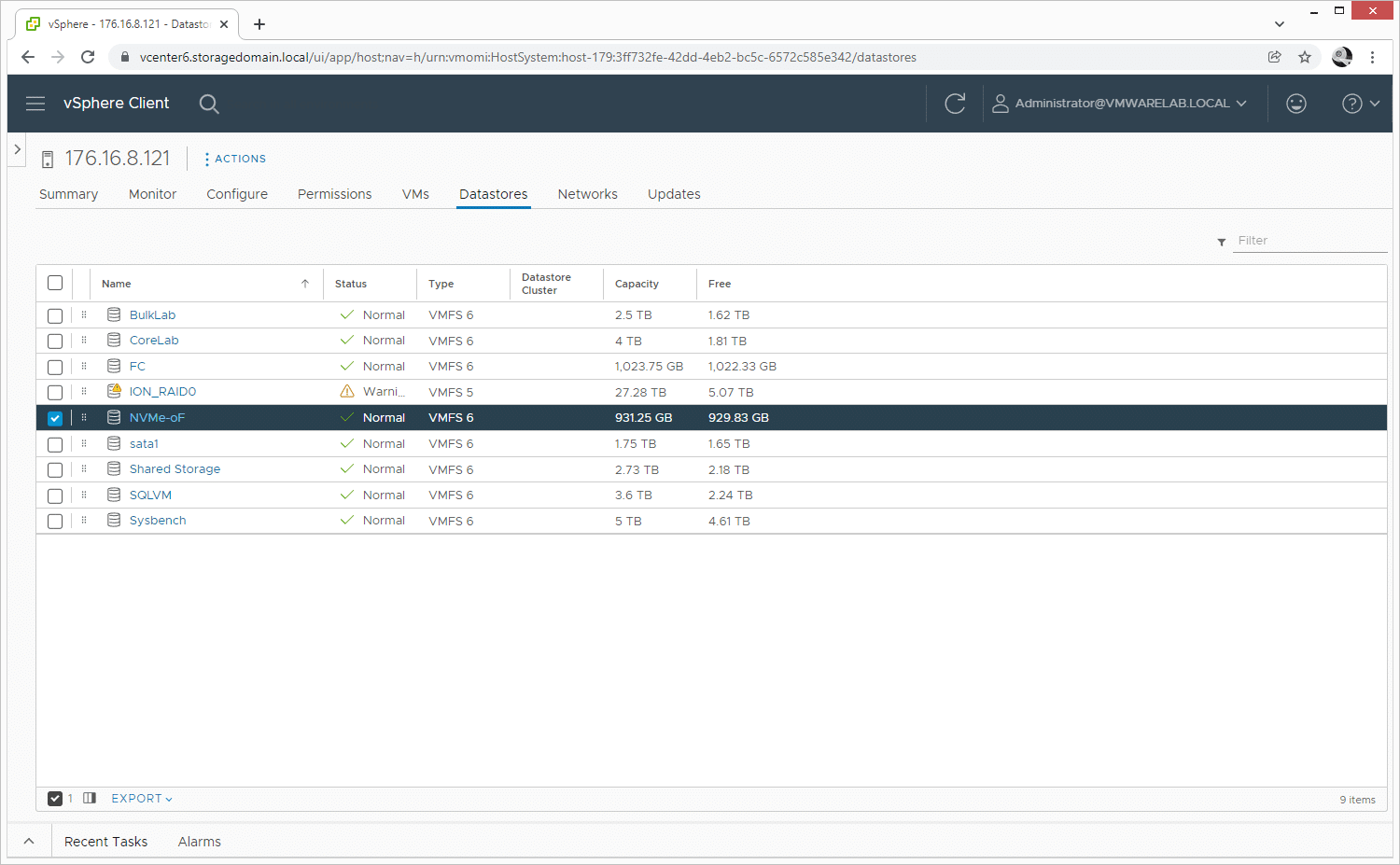

The new datastores we created are displayed in the Datastore list for the host and are ready to use for VM storage.
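For readers who prefer automation, the wizard steps above map onto three vSphere API calls on the `HostDatastoreSystem` managed object: `QueryAvailableDisksForVmfs`, `QueryVmfsDatastoreCreateOptions`, and `CreateVmfsDatastore`. The sketch below is written for pyVmomi-style objects but does not import pyVmomi, and it was not part of our tested workflow. The function name and parameters are hypothetical, and it assumes the NVMe namespace is listed as an eligible disk just as it is in the wizard, and that the first create option on a blank device is the whole-disk layout.

```python
def create_vmfs_datastore(datastore_system, device_name, volume_name, vmfs_major_version=6):
    """Script the "New Datastore" wizard steps for a single device.

    datastore_system: a vSphere HostDatastoreSystem managed object, e.g.
    host.configManager.datastoreSystem when using pyVmomi. Connecting to the
    host or vCenter is out of scope here.
    """
    # Wizard step 1: select the device from the disks eligible for VMFS.
    disks = datastore_system.QueryAvailableDisksForVmfs()
    disk = next((d for d in disks if d.canonicalName == device_name), None)
    if disk is None:
        raise LookupError(f"{device_name} is not available for a new VMFS datastore")

    # Wizard step 2: choose the VMFS version (6, or 5 for legacy hosts).
    options = datastore_system.QueryVmfsDatastoreCreateOptions(
        devicePath=disk.devicePath, vmfsMajorVersion=vmfs_major_version)
    if not options:
        raise RuntimeError(f"no VMFS create options returned for {device_name}")

    # Wizard step 3: partition layout. Assumption: on a blank device the first
    # option uses all available space; check options[0].info before relying
    # on this for a disk that already has partitions.
    spec = options[0].spec
    spec.vmfs.volumeName = volume_name

    # Wizard step 4: finish, which formats the device and creates the datastore.
    return datastore_system.CreateVmfsDatastore(spec=spec)
```

Since the FC and FC-NVMe flows are identical, calling this once with the FC LUN's canonical name and once with the NVMe namespace's would mirror the two datastores created above.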

FC-NVMe Performance

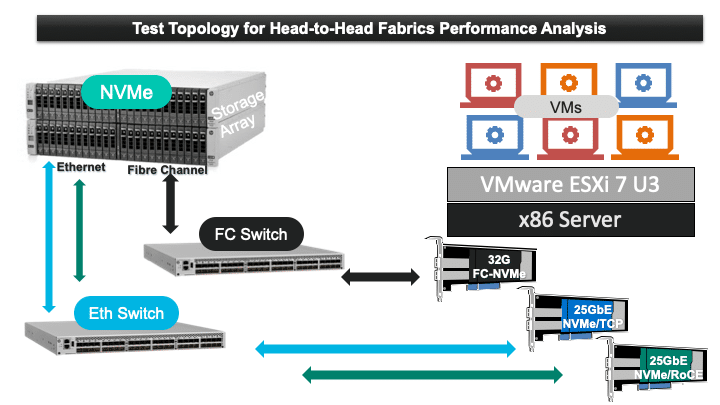

To compare overall performance across FC-NVMe, NVMe-RoCE, and NVMe/TCP, Marvell measured results for light, medium, and heavy workloads. Results were fairly consistent across all three workloads, with NVMe/TCP lagging behind FC-NVMe and NVMe-RoCE. The test layout was configured as shown below.

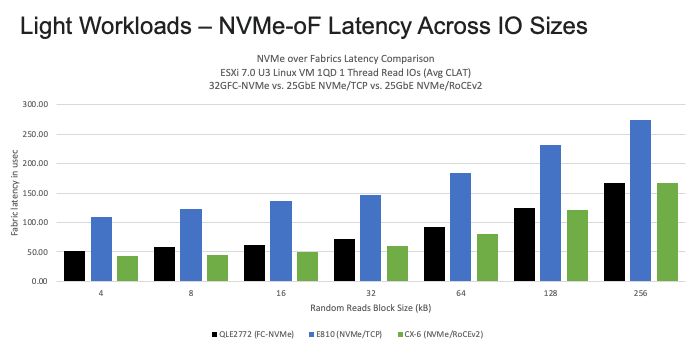

Latency analysis under light workloads highlighted the latency inherent in TCP. Across random block sizes, NVMe-RoCE performed best, with FC-NVMe a close second. With simulated 8K reads, NVMe/TCP had almost twice the fabric latency of FC-NVMe.

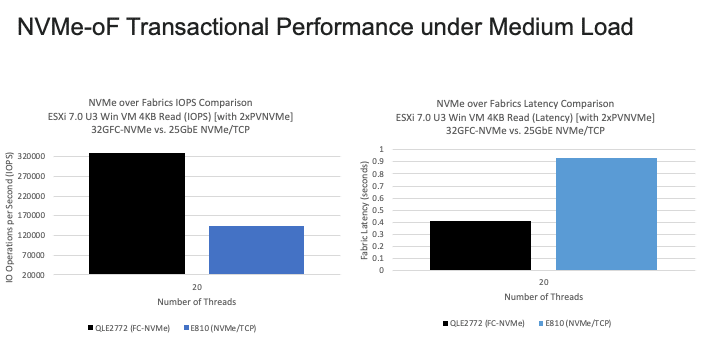

In the medium-workload comparison of FC-NVMe and NVMe/TCP, FC-NVMe was the top performer. The results were impressive, with FC-NVMe delivering about 127 percent more transactions than NVMe/TCP. Latency results reflected FC-NVMe's advantage, at 56 percent lower latency than NVMe/TCP.
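Percentage claims like these are easy to misread, so here is the arithmetic as a short Python sketch. "127 percent more" means about 2.27 times the transactions, and "56 percent lower" means 0.44 times the latency. The two figures also agree with each other under Little's Law if both fabrics ran with the same number of outstanding I/Os; that is an assumption, since the test's queue depth is not stated here.

```python
def ratio_from_percent_more(pct_more: float) -> float:
    """'X percent more' means new = old * (1 + X/100)."""
    return 1 + pct_more / 100


def ratio_from_percent_lower(pct_lower: float) -> float:
    """'X percent lower' means new = old * (1 - X/100)."""
    return 1 - pct_lower / 100


# Medium-workload figures reported above (FC-NVMe relative to NVMe/TCP).
throughput_ratio = ratio_from_percent_more(127)  # about 2.27x the transactions
latency_ratio = ratio_from_percent_lower(56)     # about 0.44x the latency

# Little's Law: outstanding I/Os = throughput * latency. With the same number
# of outstanding I/Os on both fabrics (assumed), throughput scales as 1 / latency.
implied_throughput_ratio = 1 / latency_ratio

print(f"reported {throughput_ratio:.2f}x, implied by latency {implied_throughput_ratio:.2f}x")
```

Both ratios come out at roughly 2.27x, which suggests the throughput gain in this workload tracks the latency reduction.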

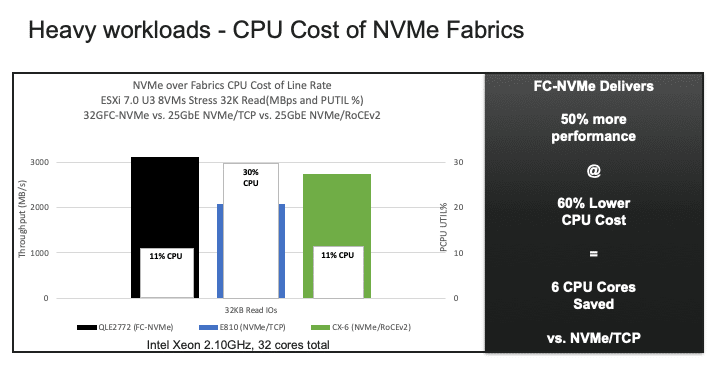

In a heavy-workload simulation designed to measure behavior under stress, FC-NVMe performed consistently better than the Ethernet-based fabrics. FC-NVMe delivered 50 percent higher bandwidth than NVMe/TCP and, more importantly, required significantly fewer CPU cycles, freeing up the VMware ESXi server to host more VMs.
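One common way to quantify the CPU point is cycles per I/O, which normalizes host CPU cost across fabrics. This Python sketch assumes you have host CPU utilization, core count, clock rate, and IOPS from your own measurements; the function names are hypothetical, and the inputs in the example are illustrative values, not results from Marvell's testing.

```python
def cycles_per_io(cpu_util_pct: float, cores: int, clock_ghz: float, iops: float) -> float:
    """Estimate host CPU cycles consumed per I/O.

    Cycles consumed per second = utilization fraction * cores * clock rate;
    dividing by I/Os per second gives the average cost of one I/O.
    """
    if iops <= 0:
        raise ValueError("iops must be positive")
    cycles_per_second = (cpu_util_pct / 100) * cores * clock_ghz * 1e9
    return cycles_per_second / iops


def cores_freed(baseline_cpi: float, candidate_cpi: float, iops: float, clock_ghz: float) -> float:
    """Cores' worth of CPU saved by the candidate transport at the same IOPS."""
    saved_cycles_per_second = (baseline_cpi - candidate_cpi) * iops
    return saved_cycles_per_second / (clock_ghz * 1e9)


# Illustrative inputs only; not measurements from this report.
baseline = cycles_per_io(cpu_util_pct=20, cores=32, clock_ghz=2.5, iops=400_000)
candidate = cycles_per_io(cpu_util_pct=10, cores=32, clock_ghz=2.5, iops=400_000)
print(round(baseline), round(candidate), round(cores_freed(baseline, candidate, 400_000, 2.5), 2))
```

Cores freed at a given I/O load are cores available for VMs, which is the practical meaning of "freeing up the ESXi server" above.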

Final Thoughts

The screenshots above illustrate how simple it is to configure both FC and NVMe-oF within a virtualized environment. Most users are not fully aware of how easy it is to implement both FC and FC-NVMe on ESXi systems. VMware makes it simple to configure and administer storage with the trusted benefits of FC, without introducing new complexity.

Although we used NetApp hardware in our lab to highlight the ease of connectivity, a wide range of other vendors support FC-NVMe. Marvell has compiled a list of these vendors and posted it on its website.

For more information on the Marvell FC-NVMe adapters, visit the Marvell website. You can also visit the NVM Express site to check out the latest spec and see what’s next for NVMe.

This report is sponsored by Marvell. All views and opinions expressed in this report are based on our unbiased view of the product(s) under consideration.

Engage with StorageReview

Newsletter | YouTube | Podcast iTunes/Spotify | Instagram | Twitter | Facebook | RSS Feed