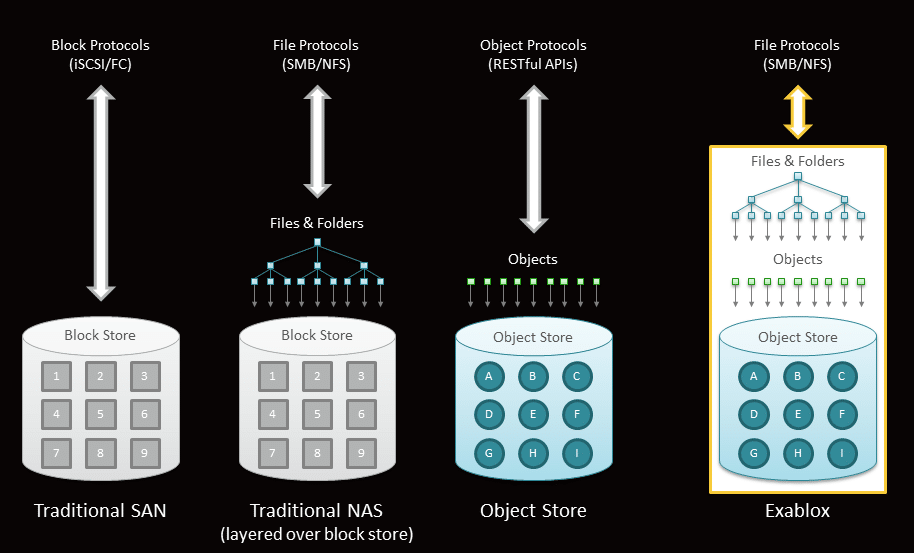

Exablox’s OneBlox embodies a distinctive approach to streamlining storage for unstructured information. OneBlox offers a range of enterprise scale-out NAS functionality including a global filesystem that can span multiple 2U OneBlox nodes as a OneBlox ring with up to 336TB that incorporates global inline deduplication and continuous data protection. OneBlox also allows administrators to ‘bring their own drives’ by populating the appliance with a combination of SATA and SAS drives and capacities.

Organizations can begin with a single OneBlox as an independent 2U storage appliance with eight 3.5-inch drive bays that can handle HDDs up to 6TB in size for a total of 48TB of raw capacity per node. For larger capacity requirements, OneBlox uses a scale-out ring architecture, where the ring consists of one or more OneBlox nodes that scale by adding new drives or new systems at any time. This architecture enables the global file system to scale from a few TBs to hundreds of TBs without requiring application reconfiguration.

Exablox has created its own object-store that is tightly integrated with a distributed file system delivering inline deduplication, continuous data protection, remote replication, and seamless scalability to SMB and NFS-based applications. Like many popular object-stores, OneBlox provides RAID-less protection against multiple drive failures or multiple node failures by creating three copies of every object for redundancy. Each OneBlox accelerates its object metadata access with a built-in SSD in keeping with the decentralized structure of OneBlox rings.

Exablox has designed an architecture that emphasizes usability and a robust feature set rather than high-performance. The result is an unstructured storage solution that is notably simpler to deploy and manage than other storage solutions.

These strengths make OneBlox an appealing solution for understaffed IT organizations with capacity-focused backup and/or archive applications. This approach minimizes the effort that is often required for managing disk pools and capacity requirements with other solutions. OneBlox offers SMB/CIFS and NFS connectivity and is certified for compatibility with backup and recovery offerings from Symantec, Veeam, CommVault, and Unitrends.

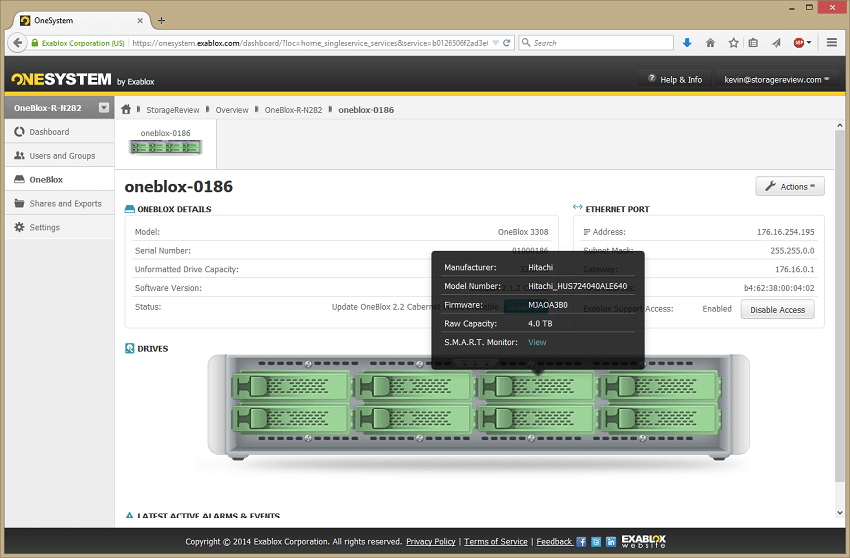

OneBlox supports any SATA or SAS drive from 1TB to 6TB, with support for 8TB drives expected later this year. Because of its object-based filesystem, each drive is accessed independently, meaning that each drive can be accessed at its full data transfer rate rather than being throttled to the lowest rate in the pool. The OneBlox midplane is 6Gb/s, however, so 12Gb/s drives are supported but operate at a 6Gb/s transfer rate.

Exablox ships the OneBlox bare with a suggested price of $9,995.

Exablox OneBlox Specifications

- Power: 100-120 / 200-240 VAC

- Thermal Rating: 500 BTU/hr, 150W (typical)

- Weight (empty, no disks): 23 lb. (10 kg)

- Space Requirements: 18.75″ w x 3.7″ h x 15″ d (unit can fit in 19″ rack or on table top)

- 2 Rack Units (RU)

- Hard Drive Bays: 8 drive slots, hot swappable

- Hard Drive Types:

  - 3.5″ SATA (up to 6Gb/sec)

  - 3.5″ SAS (up to 12Gb/sec)

- Max. Raw Capacity: 48 TB (8 disks rated at 6 TB each)

- Operating Acoustic Noise: 56.5 dBA sound pressure (LpA) @ 22°C and at sea level

- Cooling: Adjusted fan cooled

- File Service Protocols: SMB (1.0, 2.0, 2.1, 3.0)

- Onboard GbE Ports: 4

- Onboard USB Ports: 2

- LCD Screen: 3″ TFT

- LED Lights: 3 lights on front panel for system status, 8 drive status lights

Build and Design

The OneBlox design reflects its priorities as a simple and streamlined solution for unstructured data storage. The front of the 2U OneBlox chassis features eight hot swap disk bays, along with a 3-inch LCD screen and LED diagnostic lights. The LCD screen is the most comprehensive front display we’ve seen to date, featuring setup and system information.

Loading HDDs into the chassis is easy with its tray-less and tool-less design. After opening a slot, the drive slides in and the bay door closes behind it. Removing a drive involves opening the door and pulling the door extension. This ejects the drive, which can then be removed.

The rear of the chassis incorporates four gigabit Ethernet ports along with one USB port. There is also a single power supply with physical on/off switch. In a scale-out deployment the network ports are used for both client and node-to-node traffic.

Management

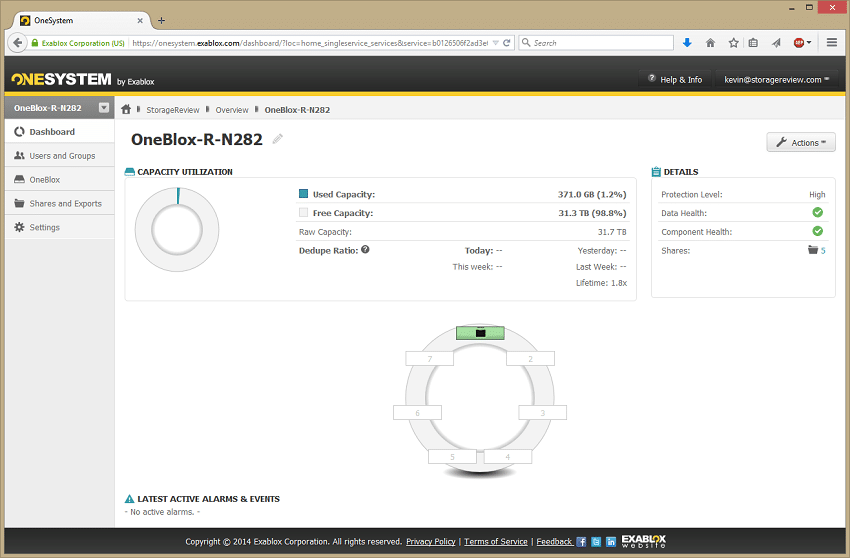

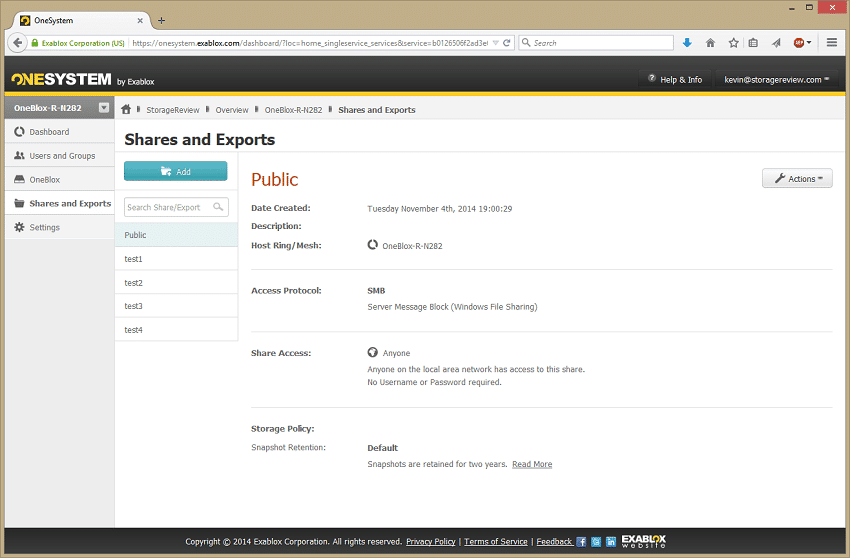

The OneBlox makes use of Exablox’s OneSystem, a cloud-based management platform that is accessible from any browser with HTTPS connectivity regardless of geographic location. OneSystem continuously and proactively monitors the health of every OneBlox. Additionally, organizations can manage and view distributed networks of OneBlox as a single entity or as discrete elements. When a OneBlox node is brought online, its LCD display provides the pairing code for the administrator to access the node via OneSystem.

The OneBlox also features optional AES 256 encryption, which allows administrators to ensure that all data is encrypted before being written to the physical disk. With encryption enabled, failed or stolen drives are unreadable.

The OneBlox continuous data protection feature creates an unlimited number of snapshots within the constraints of its available capacity, meaning that previous versions of any stored file can be quickly recovered using Mac Finder and Windows Explorer.

OneBlox rings can be joined to an Active Directory domain to integrate user accounts. Organizations that do not leverage Active Directory can set up user accounts through OneSystem, offering permission-based access to the global filesystem.

Up to seven OneBlox appliances can be added to a cluster – a “ring” in Exablox terminology – resulting in a capacity of 336TB when outfitted with 6TB HDDs. The OneBlox architecture is built for data redundancy at three levels: across multiple drives within a OneBlox node, across multiple nodes within a OneBlox ring, and across multiple rings within a OneBlox mesh. Using OneSystem, a simple drag and drop connects two rings into a mesh with mirroring and remote replication functionality that transmits only deduplicated information across the WAN to a secondary site.
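The capacity figures quoted above follow from simple arithmetic, sketched below. The raw numbers come straight from the specifications in this review; the "protected" figure is only a rough assumption that divides raw capacity by the three object copies and ignores metadata overhead and any gains from deduplication.

```python
# Capacity arithmetic for a OneBlox node and ring, using figures from the review.
BAYS_PER_NODE = 8        # eight 3.5-inch bays per 2U node
MAX_DRIVE_TB = 6         # largest supported drive at time of review
MAX_NODES_PER_RING = 7   # up to seven appliances per ring
OBJECT_COPIES = 3        # three copies of every object for redundancy


def node_raw_tb(drive_tb=MAX_DRIVE_TB, bays=BAYS_PER_NODE):
    """Raw capacity of one node in TB."""
    return drive_tb * bays


def ring_raw_tb(nodes=MAX_NODES_PER_RING, drive_tb=MAX_DRIVE_TB):
    """Raw capacity of a ring in TB."""
    return nodes * node_raw_tb(drive_tb)


def ring_protected_tb(nodes=MAX_NODES_PER_RING, drive_tb=MAX_DRIVE_TB):
    """Rough pre-dedupe capacity: raw divided by copy count (an assumption,
    not a vendor-published usable-capacity figure)."""
    return ring_raw_tb(nodes, drive_tb) / OBJECT_COPIES


if __name__ == "__main__":
    print("Node raw TB:", node_raw_tb())
    print("Ring raw TB:", ring_raw_tb())
    print("Ring TB after three copies (estimate):", ring_protected_tb())
```

Under these assumptions, a full ring's 336TB of raw space works out to roughly 112TB of unique data before deduplication is taken into account.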

While OneBlox does not currently provide primary storage for VMware or Hyper-V datastores, it can be used as a disk-based backup/recovery target for VMDK and VHD files. OneBlox is certified with backup and recovery solutions from Veeam, Backup Exec, Unitrends, and CommVault.

Application Performance

A key selling point for the OneBlox over a traditional NAS is the addition of data reduction capabilities that look for duplicate or compressible data and compact it to store more on the device than its raw capacity alone would allow. As such, OneBlox is often deployed as a backup target.

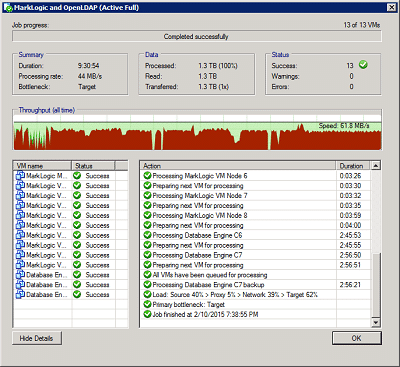

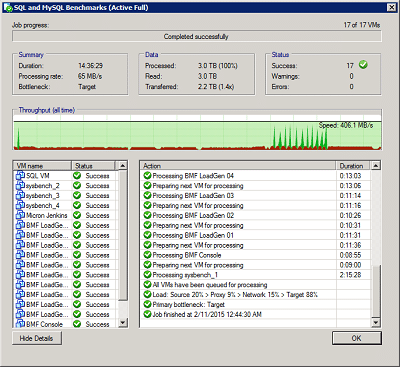

We created a few scenarios to measure deduplication capabilities, backing up similar VMs to the OneBlox with Veeam v8 and looking at the time required to back up and restore those VMs. We’re no strangers to Veeam in our test lab, leveraging it for our own production VMs as well as using it in prior reviews of backup appliances. One of the advantages of using Veeam with a device capable of deduplication is that the data Veeam sends out can be readily deduplicated with its dedupe-friendly inline compression. In the case of Exablox, however, inline compression and dedupe from Veeam must be disabled per the company’s best practices. To that end we backed up many of our Sysbench, MarkLogic and Windows SQL Server VMs, some of which are clones of one another and so offer best-case data reduction metrics. These were set up as two jobs that ran side by side to the OneBlox appliance.

Our first backup job transferred 1.3TB of data over an 8-hour period, while the second transferred 2.2TB of data in about 14 hours and 36 minutes. The following morning, the OneBlox management console displayed a lifetime dedupe ratio of 2.3:1 across these VMs. While the OneBlox was able to reduce the data footprint to some extent, it did not reach the ratios often associated with dedicated dedupe appliances, and the backup window was excessively long without being able to leverage Veeam’s inline compression and dedupe functionality.

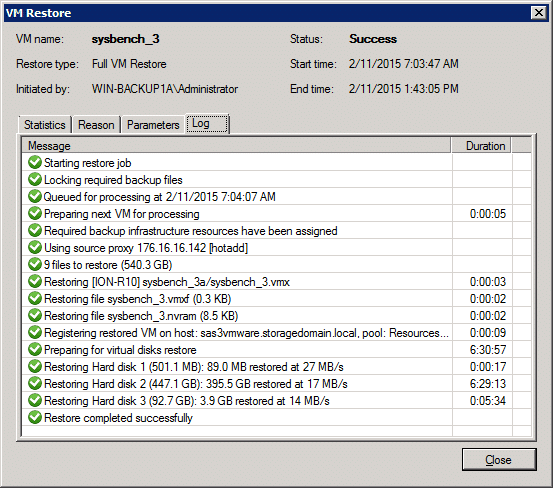
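To put the backup windows in perspective, the job sizes and durations can be converted into average transfer rates. The sketch below assumes decimal units (1TB = 1,000,000MB) and treats each job's rate as constant over its run; neither assumption is stated in the job logs, so the results are rough averages only.

```python
# Average transfer rate implied by a backup job's size and duration.
# Assumes decimal units (1 TB = 1,000,000 MB); this is an assumption.

def avg_mb_per_s(terabytes, hours, minutes=0):
    """Return the average rate in MB/s for a job of the given size and length."""
    seconds = hours * 3600 + minutes * 60
    return terabytes * 1_000_000 / seconds


if __name__ == "__main__":
    job1 = avg_mb_per_s(1.3, 8)        # first job: 1.3TB in 8 hours
    job2 = avg_mb_per_s(2.2, 14, 36)   # second job: 2.2TB in 14h 36m
    print(f"Job 1 average: {job1:.1f} MB/s")
    print(f"Job 2 average: {job2:.1f} MB/s")
```

Both jobs land in the low-to-mid 40MB/s range on average, well under what a single gigabit link can carry.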

The next area we focused on was restore performance for one of the Sysbench VMs (603GB) we had backed up to the unit. The restore target was an all-flash iSCSI LUN, so primary storage was not the limiting factor. This replicates what a small business might go through if it used the Exablox OneBlox as a backup target and had to recover a production server from the appliance. The restore took 6 hours and 30 minutes, with a restore speed of 14-27MB/s across the different disks being restored. As one might imagine, this process would consume most of a business day if the recovery started in the morning.

By comparison, a WD Sentinel DS6100 running Windows Server 2012 R2 in our lab, with four disks in a Storage Spaces pool leveraging deduplication (performing the backup and restore under its own power), was able to far exceed the OneBlox’s backup performance at a much lower price point. Restoring the same Sysbench VM on that system from its storage pool of deduplicated data took 1 hour and 40 minutes with an average speed between 70-82MB/s. In the same scenario, the business mentioned above would be up and running before lunchtime. So while the OneBlox can be used as a backup target, its usage in that area could dramatically impact an IT department when data needs to be pulled from it, and jobs need to be chunked so that backups complete within the allotted backup window.
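The difference between the two restore times is easier to appreciate on a clock. The sketch below uses the two measured durations; the 9:00 start time and the calendar date are hypothetical values chosen purely for illustration.

```python
# Compare the two measured restore durations and show when each would finish.
from datetime import datetime, timedelta

ONEBLOX_RESTORE = timedelta(hours=6, minutes=30)    # measured on the OneBlox
SENTINEL_RESTORE = timedelta(hours=1, minutes=40)   # measured on the WD Sentinel DS6100


def finish_time(start, duration):
    """Return the wall-clock finish time as HH:MM."""
    return (start + duration).strftime("%H:%M")


if __name__ == "__main__":
    # Hypothetical start of the recovery; the date itself is arbitrary.
    start = datetime(2015, 1, 1, 9, 0)
    ratio = ONEBLOX_RESTORE / SENTINEL_RESTORE
    print(f"OneBlox restore takes {ratio:.1f}x as long")
    print("OneBlox finishes at", finish_time(start, ONEBLOX_RESTORE))
    print("Sentinel finishes at", finish_time(start, SENTINEL_RESTORE))
```

With a 9:00 start, the Sentinel restore completes mid-morning while the OneBlox restore runs into the middle of the afternoon.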

While the Exablox OneBlox is by no means sold as a performance-oriented storage platform, this test illustrates the trade-off Exablox has made with its object-based storage solution. Exablox has sacrificed single-node performance in return for a global namespace, scale-out clustering, RAID-less protection, and inline deduplication. However, for IT organizations that need to prioritize scalable capacity over performance, OneBlox has a deep feature set.

Enterprise Synthetic Workload Analysis

We publish an inventory of our lab environment, an overview of the lab’s networking capabilities, and other details about our testing protocols so that administrators and those responsible for equipment acquisition can fairly gauge the conditions under which we have achieved the published results. To maintain our independence, none of our reviews are paid for or managed by the manufacturer of equipment we are testing.

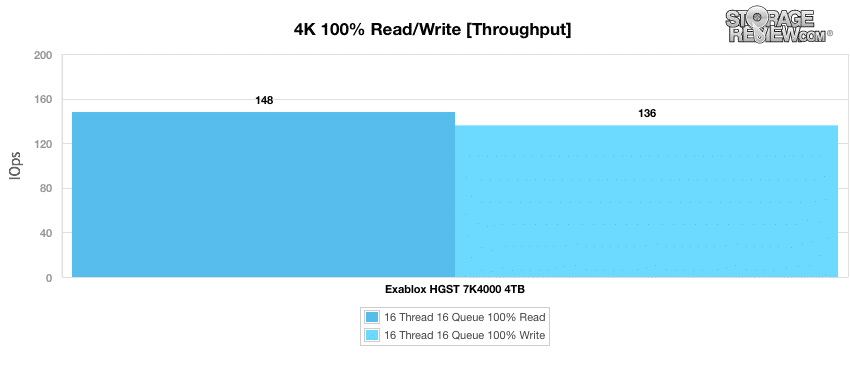

Our OneBlox node has been configured with 4TB HGST 7K4000 enterprise HDDs.

Prior to initiating each of the fio synthetic benchmarks, our lab preconditions the device into steady-state under a heavy load of 16 threads with an outstanding queue of 16 per thread. Then the storage is tested in set intervals with multiple thread/queue depth profiles to show performance under light and heavy usage.

Preconditioning and Primary Steady-State Tests:

- Throughput (Read+Write IOPS Aggregated)

- Average Latency (Read+Write Latency Averaged Together)

- Max Latency (Peak Read or Write Latency)

- Latency Standard Deviation (Read+Write Standard Deviation Averaged Together)

This synthetic analysis incorporates four profiles which are widely used in manufacturer specifications and benchmarks:

- 4k random – 100% Read and 100% Write

- 8k sequential – 100% Read and 100% Write

- 8k random – 70% Read/30% Write

- 128k sequential – 100% Read and 100% Write
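For readers who want to approximate these profiles on their own hardware, the sketch below builds fio command lines for each one. It is not our actual job file: the target path, file size, runtime, and I/O engine are all hypothetical placeholders, and only the block sizes, access patterns, read/write mix, and the 16-thread, 16-queue load come from the description above. The script only prints the commands; it does not run fio.

```python
# Build (but do not run) fio command lines approximating the four profiles.
# Target path, size, runtime and ioengine are illustrative assumptions.

# (name, fio rw mode, block size, read percentage for mixed workloads)
PROFILES = [
    ("4k-random-read", "randread", "4k", None),
    ("4k-random-write", "randwrite", "4k", None),
    ("8k-seq-read", "read", "8k", None),
    ("8k-seq-write", "write", "8k", None),
    ("8k-random-70-30", "randrw", "8k", 70),
    ("128k-seq-read", "read", "128k", None),
    ("128k-seq-write", "write", "128k", None),
]


def fio_args(name, rw, bs, rwmixread=None, threads=16, iodepth=16,
             target="/mnt/oneblox/fio.dat", size="10g", runtime_s=60):
    """Return an argument list for one fio run (hypothetical target and runtime)."""
    args = [
        "fio", f"--name={name}", f"--filename={target}", f"--size={size}",
        f"--rw={rw}", f"--bs={bs}",
        f"--numjobs={threads}", f"--iodepth={iodepth}",
        "--ioengine=libaio", "--direct=1",
        "--time_based", f"--runtime={runtime_s}", "--group_reporting",
    ]
    if rwmixread is not None:
        args.append(f"--rwmixread={rwmixread}")
    return args


if __name__ == "__main__":
    for profile in PROFILES:
        print(" ".join(fio_args(*profile)))
```

Sweeping `threads` and `iodepth` over smaller values would approximate the lighter-load points in the charts.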

With a 4k synthetic workload, the OneBlox reached a throughput of 148 IOPS for read operations and 136 IOPS for write operations.

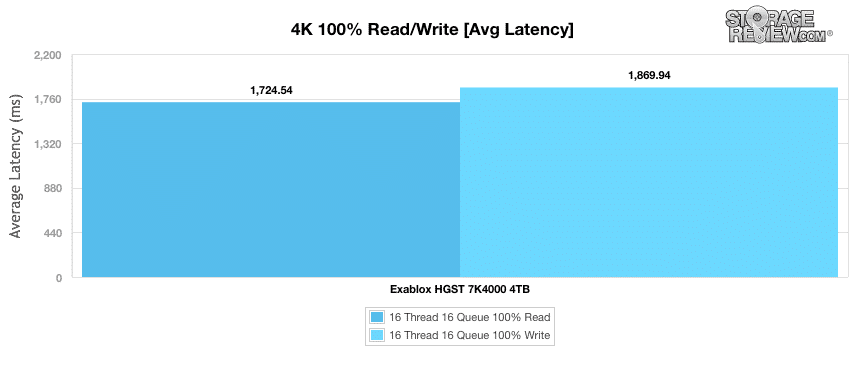

The average latency calculations for the 4k benchmark placed this OneBlox configuration at 1,725ms for read operations and 1,870ms for write operations.

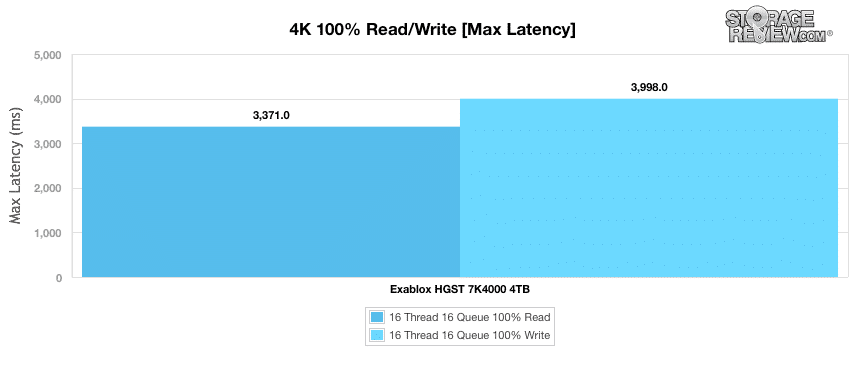
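The 4k throughput and latency figures are two views of the same measurement, linked by Little's Law: throughput is approximately the number of outstanding I/Os divided by average latency. The sketch below assumes the 4k results were recorded at the 16-thread, 16-queue load used for preconditioning, which gives 256 outstanding I/Os; that load level is an assumption here.

```python
# Little's Law check: IOPS ~= outstanding I/Os / average latency (in seconds).
# Assumes the 4k figures were taken at 16 threads x 16 queue depth.

def expected_iops(threads, queue_depth, avg_latency_ms):
    """Estimate IOPS from concurrency and average latency."""
    outstanding = threads * queue_depth
    return outstanding / (avg_latency_ms / 1000.0)


if __name__ == "__main__":
    read_iops = expected_iops(16, 16, 1725)   # measured average read latency
    write_iops = expected_iops(16, 16, 1870)  # measured average write latency
    print(f"Expected 4k read IOPS:  {read_iops:.1f} (measured: 148)")
    print(f"Expected 4k write IOPS: {write_iops:.1f} (measured: 136)")
```

Under that assumption the estimates line up closely with the measured 148 and 136 IOPS, which suggests the multi-second latencies are a consequence of deep queues in front of a slow device rather than a separate problem.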

The highest latencies recorded during the 4k benchmark were 3,371ms for read operations and 3,998ms for write operations.

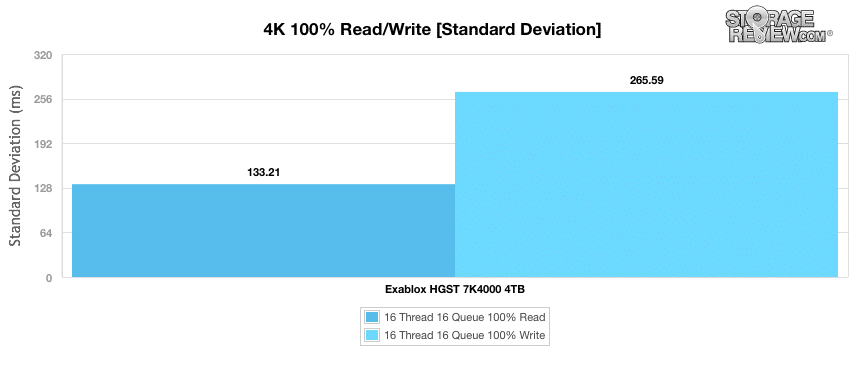

As expected, standard deviation calculations reflect a greater consistency among the measured latencies during the 4k read operations than during write operations.

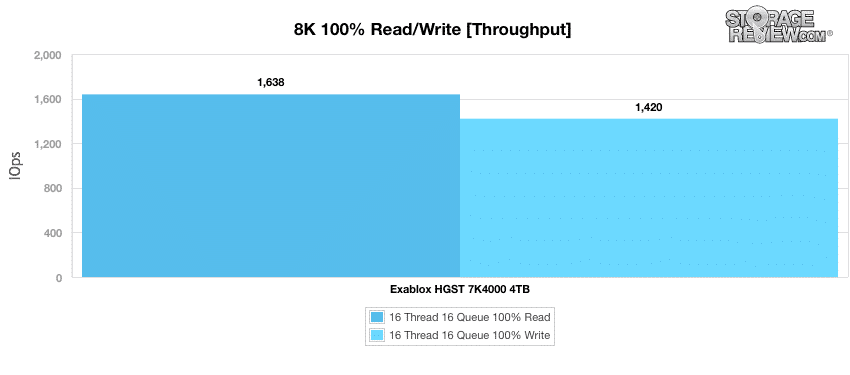

Our next benchmark uses a sequential workload composed of 100% read operations and then 100% write operations with an 8k transfer size. The OneBlox reached 1,638 IOPS for read operations and 1,420 IOPS for write operations.

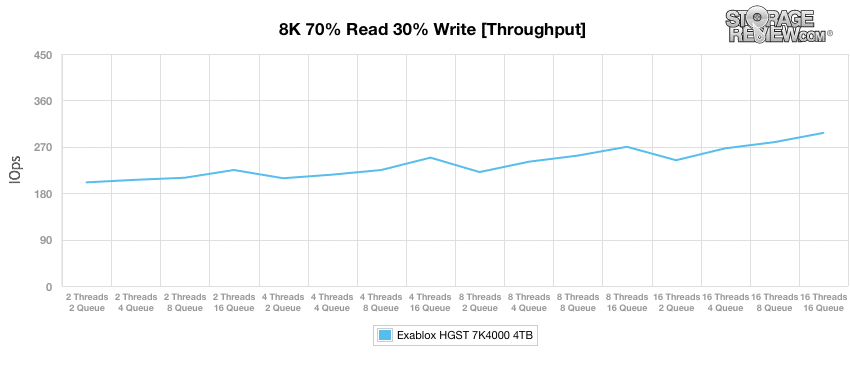

Our next series of workloads is composed of a mixture of 8k read (70%) and write (30%) operations up to 16 threads and a queue depth of 16. The OneBlox system maintained a predictable level of performance throughout the 8k 70/30 testing protocol, generally performing between 200 IOPS and 300 IOPS depending on thread count and queue depth.

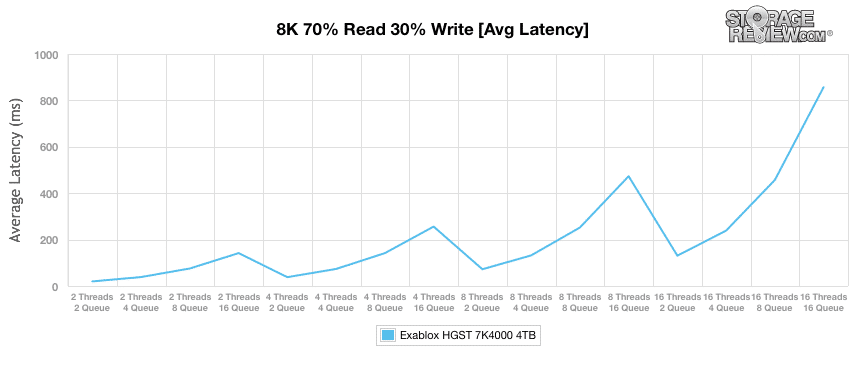

Average latency results during the 8k 70/30 benchmark likewise reveal no notable bottlenecks or stress points for the OneBlox.

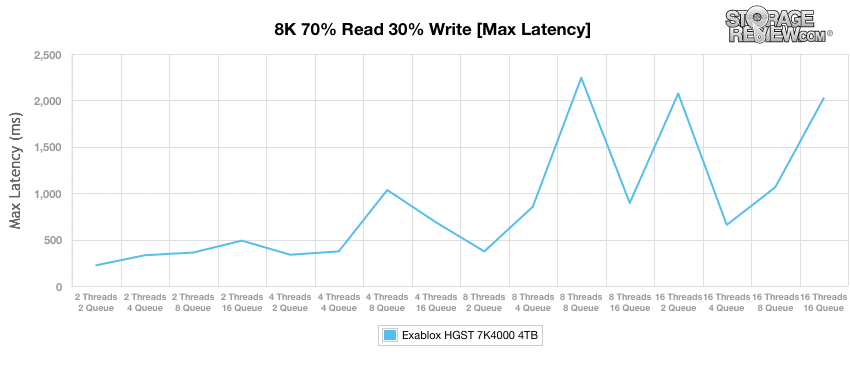

The highest latency measured during the 8k 70/30 benchmark occurred with 8 threads and a queue that was 8 deep, although this latency performance was not frequent enough with that workload to be notable in the average latency calculations for the 8k 70/30 benchmark. The OneBlox also experienced a peak in maximum latency results with 16 threads and a queue depth of 2 that was likewise not significant enough to affect average latency.

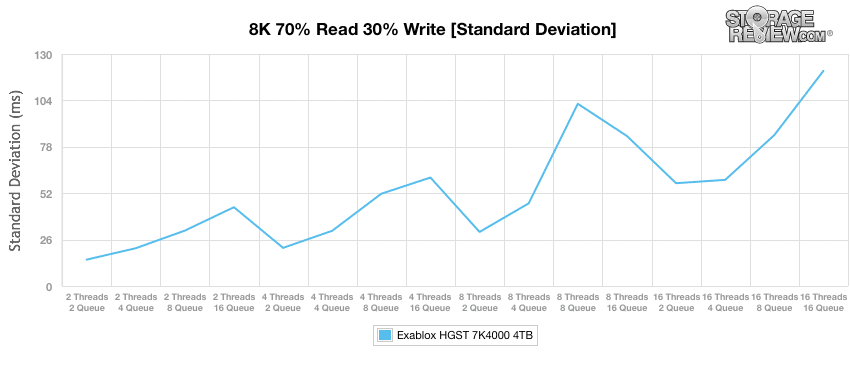

Standard deviation calculations for the 8k 70/30 benchmark also show a larger degree of variation in latencies for the 8 thread and 8 queue workload.

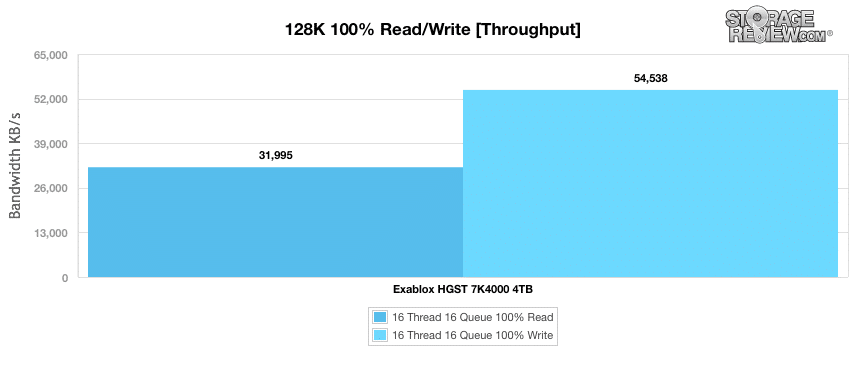

Our final synthetic benchmark made use of sequential 128k transfers and a workload of 100% read and 100% write operations. In this scenario, the OneBlox reached a throughput of 31.9MB/s for read operations and 54.5MB/s for write operations.
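To compare these results against the appliance's network ports, the sketch below converts IOPS into bandwidth and expresses a transfer rate as a fraction of a single gigabit link. It assumes a theoretical 125MB/s for 1GbE with no protocol overhead and decimal megabytes, so the real-world ceiling is somewhat lower than shown.

```python
# Convert benchmark results to bandwidth and compare with one 1GbE port.
# GBE_MB_PER_S is the theoretical line rate, ignoring protocol overhead.
GBE_MB_PER_S = 1000 / 8  # 1,000 Mb/s expressed in MB/s


def iops_to_mb_per_s(iops, block_bytes):
    """Bandwidth in decimal MB/s for a given IOPS figure and block size."""
    return iops * block_bytes / 1_000_000


def link_fraction(mb_per_s, link_mb_per_s=GBE_MB_PER_S):
    """Fraction of a link's theoretical capacity that a transfer rate uses."""
    return mb_per_s / link_mb_per_s


if __name__ == "__main__":
    print(f"8k sequential read:  {iops_to_mb_per_s(1638, 8 * 1024):.1f} MB/s")
    print(f"8k sequential write: {iops_to_mb_per_s(1420, 8 * 1024):.1f} MB/s")
    print(f"128k read uses  {link_fraction(31.9):.0%} of one 1GbE port")
    print(f"128k write uses {link_fraction(54.5):.0%} of one 1GbE port")
```

Even the best case here, the 128k sequential write, uses well under half of one of the four onboard gigabit ports.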

Conclusion

Exablox has charted its own course with the development of the OneBlox. Rather than going head-to-head against other enterprise storage architectures which attempt to compete in terms of performance as well as functionality, scalability, and ease-of-use, Exablox has identified its core market and focused its engineering resources there. The result is a modular storage system with an effortless scaling mechanism built on a bedrock of data protection that does not require extensive staff resources to deploy or maintain. Exablox’s core emphasis is taking this unique object store and layering NAS application accessibility on top. A deep feature set comes first with the OneBlox; performance was much further down the list of development priorities.

The low priority given to performance shows through; the Exablox OneBlox leaves much to be desired on that front. In our synthetic tests with one appliance fully populated with 4TB 7K HDDs, we could not saturate even one 1Gb port, let alone four, with its large-block sequential read or write transfer speeds. In that test we measured just under 32MB/s read and 54.5MB/s write. These numbers are actually better than when we first had the system in our lab last year, which at least shows some iterative growth. To put that in perspective though, a standard 8-bay NAS (albeit without deduplication) populated with the same HDDs in RAID10 with software encryption enabled measured 329MB/s read and 64MB/s write. When we leveraged the OneBlox in our lab as a backup target, which is a primary use case for OneBlox, we saw only modest deduplication capabilities across many cloned VMs as well as very slow backup and restore performance.

Customers cross-shopping the Exablox OneBlox against traditional NAS for a large file share, or those looking for an affordable backup target with deduplication capabilities, will have a hard choice to make. The OneBlox is priced much higher than competitive NAS platforms that can perform dramatically better, although competitive single NAS systems generally don’t scale out or offer deduplication or RAID-less protection against drive and node failure in a cluster. Compared to a Windows Microserver costing half as much that can both run backup software locally and leverage deduplication, the OneBlox fell short in backup and restore performance.

Buyers have a tough decision to make when looking at OneBlox because of its two very distinct facets. The OneBlox is extremely easy to use and scale out while offering an interesting architecture. It’s not a performance machine by any stretch, though, and the company readily points out that it makes no performance claims in its marketing materials. The problem is, there has to be a minimum usable performance level for OneBlox to be seriously considered by most businesses. If the numbers we captured meet the need, then OneBlox is going to be a great option for those with no/limited tech resources. Unfortunately, we’re guessing that most organizations need more and will find the transfer speeds are too slow to make use of its large potential storage capacity.

Pros

- Entry-priced object-store that presents itself as a NAS to applications

- Offers deduplication and continuous data protection

- ‘Bring Your Own Drive’ functionality to mix and match HDDs

- Easy to administer and to scale within the appliance, within a ring, and within a OneBlox mesh

Cons

- Slow read and write performance

- Long backup and recovery windows when used as a backup target

- Support for virtualized environments only as a disk target for backup

The Bottom Line

The Exablox OneBlox is easy to deploy, manage, and scale as part of a solution that helps ensure backup and replication services, but falls short when performance is key to the buying decision.