The iXsystems Titan iX-316J is a 16-bay 3.5″ JBOD storage expansion shelf. The JBOD has become a permanent fixture of the Storage Review lab, enabling us to connect SATA or SAS drives directly to a host compute system through an LSI 9207-8e SAS HBA. The iX-316J can serve a variety of use cases, from holding up to 64TB of high-capacity SATA drives to housing faster 2.5″ 10K and 15K drives, should the user choose to go that route. In this review we look at three different sets of hard drives, illustrating the performance-versus-capacity trade-offs of modern enterprise hard drives.

The concept of a storage shelf, or JBOD, is one of the most basic in storage architecture. The chassis essentially houses the drives and connects to a host machine via a SAS cable and an HBA in the host. This arrangement remains popular where enterprise users want to keep storage local to the host but have outgrown the available drive bays, or have other unique requirements that don't call for a full-on SAN with its own storage controllers. Looking forward, we'll be pairing these same hard drive configurations with caching solutions to show how flash and software can benefit large arrays of hard drives in an enterprise environment. The use cases for JBODs continue to expand with new technology and more powerful compute on the host side.

iXsystems Titan iX-316J Specifications

- Form Factor: 3U Storage Chassis supporting up to 16 Hard Drives

- Dimensions: 17.2″W x 5.2″H x 25.5″D

- Fans: 6 x 40×56, 4-pin PWM Fan Assembly

- Mounting Rails: Rail set, quick/quick

- Hard Drive Bays: 16 x 3.5” Hot-Swap SAS/SATA – SATA drives require interposer add-on cards

- RAID support: Supported through head unit RAID controller

- SAS2 compliance

- 6Gb/s support

- SAS Connectivity: Compatible with any SAS, SAS2.0, or SAS3.0 Host Bus Adapter

- 2 x SFF-8088 Connectors

- Power Supply: Redundant 720W high-efficiency power supply with PMBus

Build and Design

The iXsystems Titan iX-316J is a 3U rack-mount enclosure with 16 front-accessible 3.5″ drive bays. It offers an active-active HA SAS interface to connect to two hosts, as well as an expansion port to string together multiple JBOD units. It natively supports both 3.5″ and 2.5″ drives through universal drive caddies. While both SAS and SATA drives are supported, SATA drives must use an adapter to give them dual-ported capabilities. The front of the Titan 316J is equipped with a power switch, as well as interface lights that show when connections are active and the unit is online. This particular chassis was designed with both JBOD and storage server duties in mind, so some of its lights are not connected in our configuration.

The back of the iXsystems Titan iX-316J is very basic, since the unit doesn’t contain any of the compute interfaces you might find on a server. The only connections on this unit are for the two power supplies and four SAS ports. The main link to the internal expander is a 4-channel SFF-8088 connection, giving the unit a peak transfer speed of about 2,400MB/s. One port on each side is dedicated to the server hosting the shelf, while the other two are for connecting the 316J to another JBOD shelf.
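The 2,400MB/s figure follows from SAS2 link arithmetic: four lanes at 6Gb/s each, with 8b/10b encoding leaving 8 of every 10 line bits for data, works out to 600MB/s per lane. A minimal sketch of that calculation, assuming decimal megabytes and ignoring frame and protocol overhead (so sustained throughput will land somewhat lower); the constant and function names are illustrative:

```python
# Rough payload bandwidth of one x4 SAS 6Gb/s (SAS2) wide port.
# Assumptions: 8b/10b line encoding (10 line bits carry 8 data bits),
# decimal megabytes, and no accounting for SAS frame/protocol overhead.

LANES_PER_SFF8088 = 4          # an SFF-8088 connector carries a 4-lane wide port
LINE_RATE_GBPS = 6.0           # SAS2 line rate per lane, gigabits per second
ENCODING_EFFICIENCY = 8 / 10   # 8b/10b encoding


def lane_payload_mb_per_s(line_rate_gbps: float) -> float:
    """Payload bandwidth of a single lane in MB/s (10**6 bytes)."""
    data_bits_per_s = line_rate_gbps * 1e9 * ENCODING_EFFICIENCY
    return data_bits_per_s / 8 / 1e6


def port_payload_mb_per_s(lanes: int, line_rate_gbps: float) -> float:
    """Aggregate payload bandwidth of a wide port in MB/s."""
    return lanes * lane_payload_mb_per_s(line_rate_gbps)


if __name__ == "__main__":
    per_lane = lane_payload_mb_per_s(LINE_RATE_GBPS)
    per_port = port_payload_mb_per_s(LANES_PER_SFF8088, LINE_RATE_GBPS)
    print(f"per lane: {per_lane:.0f} MB/s")  # per lane: 600 MB/s
    print(f"per port: {per_port:.0f} MB/s")  # per port: 2400 MB/s
```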

Since the unit and backplane are geared towards HA environments, iXsystems supplied us with LSI SATA-to-SAS adapters that we used when testing the JBOD with SATA hard drives. The drive caddies were designed with these adapters in mind, so installation was a breeze.

For quick and easy rack installation, iXsystems includes a sliding rail kit with the Titan iX-316J JBOD. Installation took just a few minutes, as the rails snapped into position in our rack without the use of tools. Once the rails are in place, you extend the receiving rails, install the mounting rails on the sides of the chassis, and slide the unit into place.

Testing Background and Comparables

The iXsystems Titan 316J JBOD supports both 3.5″ and 2.5″ SAS and SATA hard drives. For this review we used large-capacity 4TB 7,200RPM SATA drives with SAS adapters, as well as 2.5″ 10K and 15K SAS drives.

Hard drives used in this review:

- Toshiba MK01GRRB (147GB, 15,000RPM, 6.0Gb/s SAS)

- Toshiba MBF2600RC (600GB, 10,000RPM, 6.0Gb/s SAS)

- Hitachi Ultrastar 7K4000 (4TB, 7,200RPM, 6.0Gb/s SATA)

All enterprise storage devices are benchmarked on our next-generation enterprise testing platform based on a Lenovo ThinkServer RD630. The ThinkServer RD630 is configured with:

- 2 x Intel Xeon E5-2620 (2.0GHz, 15MB Cache)

- Windows Server 2008 R2 SP1 64-Bit, Windows Server 2012 64-Bit, and CentOS 6.3 64-Bit

- Intel C602 Chipset

- Memory – 16GB (2 x 8GB) 1333MHz DDR3 Registered RDIMMs

- LSI 9207 SAS/SATA 6.0Gb/s HBA

Enterprise Synthetic Workload Analysis

Our enterprise storage benchmark process begins with an analysis of the way the drive performs during a thorough preconditioning phase. Each of the comparable hard drive arrays is set up in RAID10, allowed to fully sync, and then tested under loads ranging from a heavy 16 threads with an outstanding queue of 16 per thread down to a light 2 threads with an outstanding queue of 2 per thread.
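Results throughout this review are quoted by effective queue depth (EQD), which is simply the thread count multiplied by the outstanding queue per thread, so 16T/16Q corresponds to an EQD of 256. A small sketch of the sweep, assuming threads and queue depth step through powers of two between the stated 2T/2Q and 16T/16Q endpoints (the intermediate steps are an assumption, not stated in the text):

```python
from itertools import product

# Assumption: power-of-two steps between the stated 2T/2Q and 16T/16Q endpoints.
THREAD_COUNTS = (2, 4, 8, 16)
QUEUE_DEPTHS = (2, 4, 8, 16)


def effective_queue_depth(threads: int, queue_per_thread: int) -> int:
    """Total outstanding I/Os presented to the array."""
    return threads * queue_per_thread


def sweep():
    """Yield (threads, queue per thread, EQD) for every combination in the sweep."""
    for t, q in product(THREAD_COUNTS, QUEUE_DEPTHS):
        yield t, q, effective_queue_depth(t, q)


if __name__ == "__main__":
    for t, q, eqd in sweep():
        print(f"{t}T/{q}Q -> EQD {eqd}")
    print(sorted({eqd for _, _, eqd in sweep()}))  # [4, 8, 16, 32, 64, 128, 256]
```

Several thread/queue combinations share the same EQD (2T/16Q and 16T/2Q are both EQD 32, for example), which is why charts plotted by EQD can show more than one point at a given depth.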

Performance attributes we measure in our random workloads:

- Throughput (Read+Write IOPS Aggregate)

- Average Latency (Read+Write Latency Averaged Together)

- Max Latency (Peak Read or Write Latency)

- Latency Standard Deviation (Read+Write Standard Deviation Averaged Together)

Our Enterprise Synthetic Workload Analysis includes six profiles, some based on real-world tasks. These profiles have been developed to make it easier to compare against our past benchmarks as well as widely published values such as maximum 4K read and write speed and 8K 70/30, which is commonly used for enterprise drives. We also included two legacy mixed workloads, the traditional File Server and Webserver, each offering a wide mix of transfer sizes.

- Sequential

  - 8K

    - 100% Read or 100% Write

    - 100% 8K

  - 128K

    - 100% Read or 100% Write

    - 100% 128K

- Random

  - 4K

    - 100% Read or 100% Write

    - 100% 4K

  - 8K 70/30

    - 70% Read, 30% Write

    - 100% 8K

  - File Server

    - 80% Read, 20% Write

    - 10% 512b, 5% 1k, 5% 2k, 60% 4k, 2% 8k, 4% 16k, 4% 32k, 10% 64k

  - Webserver

    - 100% Read

    - 22% 512b, 15% 1k, 8% 2k, 23% 4k, 15% 8k, 2% 16k, 6% 32k, 7% 64k, 1% 128k, 1% 512k
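Most operations in the two mixed profiles are small, but the occasional large transfers carry much of the data. As a quick illustration, the weighted mean transfer size of each mix can be computed directly from the percentages listed above. This sketch assumes binary sizes (1k = 1,024 bytes) and uses an illustrative `mean_transfer_bytes` helper:

```python
# Transfer-size mixes from the File Server and Webserver profiles above.
# Keys are sizes in bytes (assuming 1k = 1,024 bytes); values are percentages.
FILE_SERVER = {512: 10, 1024: 5, 2048: 5, 4096: 60, 8192: 2,
               16384: 4, 32768: 4, 65536: 10}
WEBSERVER = {512: 22, 1024: 15, 2048: 8, 4096: 23, 8192: 15,
             16384: 2, 32768: 6, 65536: 7, 131072: 1, 524288: 1}


def mean_transfer_bytes(mix: dict[int, int]) -> float:
    """Weighted mean transfer size in bytes; rejects mixes that don't sum to 100%."""
    total_pct = sum(mix.values())
    if total_pct != 100:
        raise ValueError(f"mix sums to {total_pct}%, expected 100%")
    return sum(size * pct for size, pct in mix.items()) / 100


if __name__ == "__main__":
    for name, mix in (("File Server", FILE_SERVER), ("Webserver", WEBSERVER)):
        avg = mean_transfer_bytes(mix)
        print(f"{name}: {avg:,.2f} bytes (~{avg / 1024:.1f} KiB)")
```

Under that assumption the means come out to roughly 11KB for File Server and 16KB for Webserver, even though transfers of 4K or smaller account for 80% and 68% of operations, respectively.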

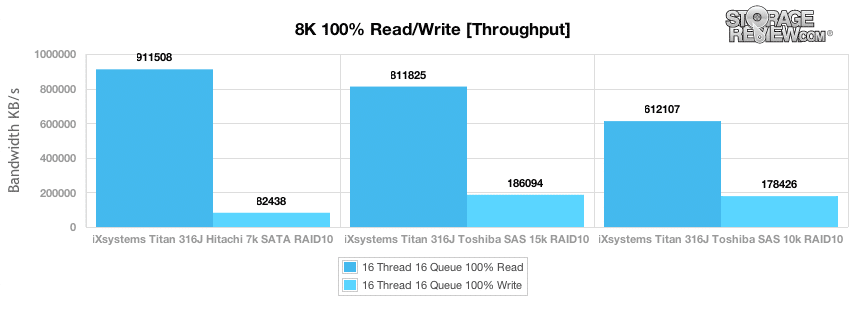

The first test we look at when measuring the performance of the iXsystems Titan iX-316J is 8K sequential read and write. In this test, where both spindle speed and areal density come into play, the 7,200RPM Hitachi Ultrastar 7K4000 RAID10 offered the fastest 8K read speed at 911MB/s, while the Toshiba 15K RAID10 measured 811MB/s and the Toshiba 10K RAID10 measured 612MB/s. In 8K sequential writes, the 15K RAID10 measured 186MB/s, the 10K RAID10 178MB/s, and the 7.2K RAID10 82MB/s.
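Throughput and I/O rate are linked by the block size (throughput = IOPS × block size), so the 8K sequential results can be restated as operations per second. A minimal sketch, assuming MB means 10^6 bytes and 8K means 8,192 bytes, since the review doesn't state which unit convention its charts use:

```python
# Convert sequential throughput into an equivalent I/O rate.
# Assumptions: MB = 10**6 bytes, 8K = 8,192 bytes.
BLOCK_8K = 8 * 1024

# 8K sequential read results from this review, in MB/s.
SEQ_8K_READ_MB_S = {
    "7.2K SATA RAID10": 911,
    "15K SAS RAID10": 811,
    "10K SAS RAID10": 612,
}


def throughput_to_iops(mb_per_s: float, block_bytes: int) -> float:
    """Operations per second needed to move mb_per_s at a fixed block size."""
    return mb_per_s * 1e6 / block_bytes


if __name__ == "__main__":
    for array, mbps in SEQ_8K_READ_MB_S.items():
        print(f"{array}: ~{throughput_to_iops(mbps, BLOCK_8K):,.0f} IOPS")
```

With these assumptions the 8K sequential reads work out to roughly 75,000 to 111,000 operations per second, far above the few thousand IOPS the same arrays deliver in the random tests.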

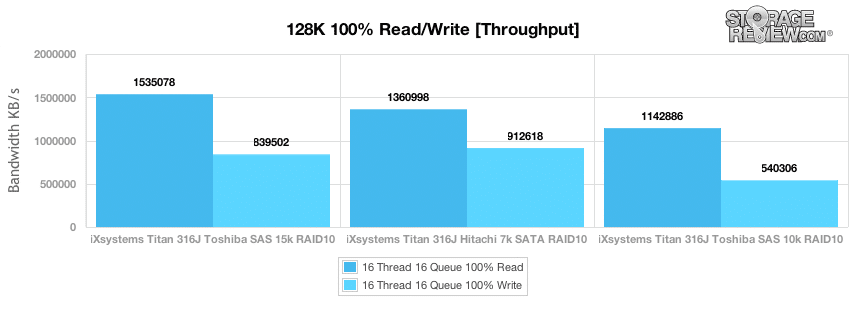

Our next sequential test measured large-block (128K) transfer speeds. Here the 15K SAS array measured 1,535MB/s read and 839MB/s write, the 7.2K SATA array measured 1,361MB/s read and 912MB/s write, and the 10K SAS array came in last with 1,142MB/s read and 540MB/s write.

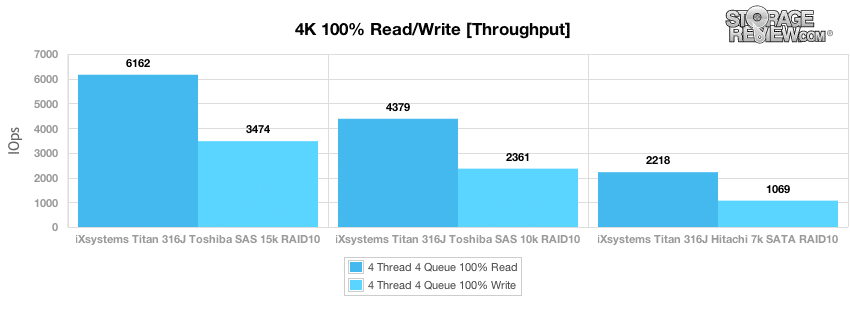

For the remaining benchmarks in this review, we switch from sequential to fully random tests. From the iX-316J we measured 6,162 IOPS 4K read and 3,474 IOPS 4K write with 15K SAS drives, 4,379 IOPS 4K read and 2,361 IOPS 4K write with 10K SAS drives, and 2,218 IOPS 4K read and 1,069 IOPS 4K write with 7.2K SATA drives.

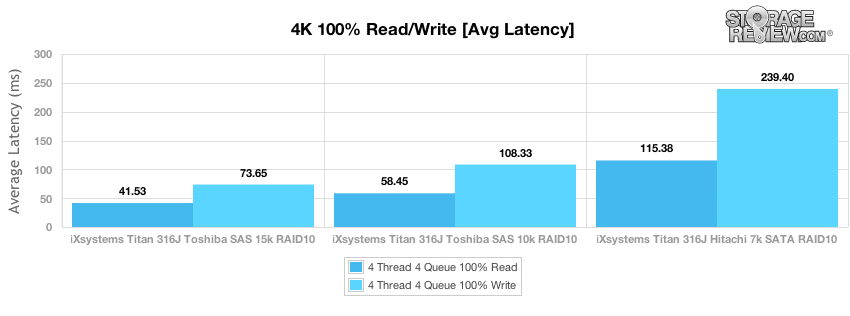

Comparing average latency in our 100% 4K random test at an effective queue depth of 256, the iXsystems Titan iX-316J equipped with 15K SAS drives posted response times of 41ms read and 73ms write. With larger bulk-storage 7.2K SATA drives installed, read latency increased to 115ms and write latency rose to 239ms.
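These averages line up with Little's Law, which says that for a benchmark holding a fixed number of I/Os in flight, average latency is roughly the effective queue depth divided by the I/O rate. The sketch below checks the reported figures against that relationship; it is a sanity check on the published numbers, not a description of how the latency was measured:

```python
# Little's Law for a closed-loop benchmark: outstanding I/Os = IOPS x latency,
# so average latency is roughly effective queue depth / IOPS.

EQD = 256  # 16 threads x 16 outstanding I/Os per thread

# (IOPS, reported average latency in ms) for the 100% 4K random tests.
MEASURED = {
    "15K SAS 4K read": (6162, 41),
    "15K SAS 4K write": (3474, 73),
    "7.2K SATA 4K read": (2218, 115),
    "7.2K SATA 4K write": (1069, 239),
}


def littles_law_latency_ms(eqd: int, iops: float) -> float:
    """Estimated average latency in milliseconds for a given EQD and I/O rate."""
    return eqd / iops * 1000


if __name__ == "__main__":
    for test, (iops, reported_ms) in MEASURED.items():
        est = littles_law_latency_ms(EQD, iops)
        print(f"{test}: estimated {est:.1f} ms, reported {reported_ms} ms")
```

Each estimate lands within a millisecond of the reported value (41.5ms versus 41ms for 15K reads, for example). That is expected: with the queue depth held fixed, average latency scales inversely with the I/O rate the array can sustain.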

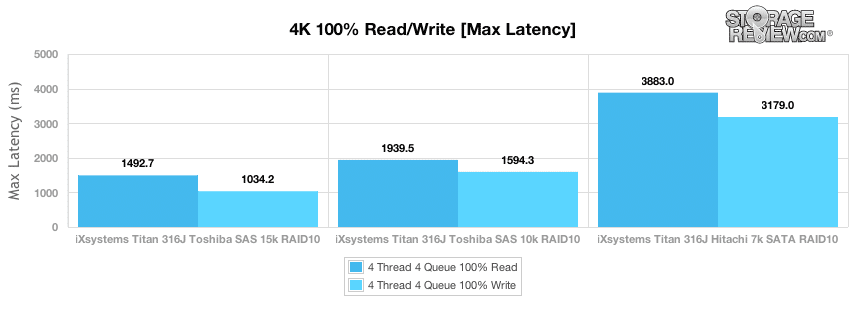

Comparing max latency, the 10K and 15K arrays offered the lowest peak response times, with the 7.2K array having the highest.

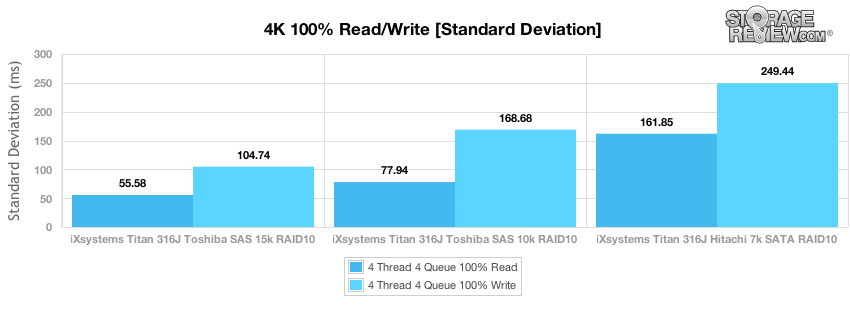

Comparing latency consistency in our iX-316J, the 15K SAS array offered the lowest read and write latency standard deviation. Standard deviation rose in steady steps moving down to 10K and then 7.2K spindle speeds, which shows the value of understanding the workload and picking the drives that best fit its requirements.

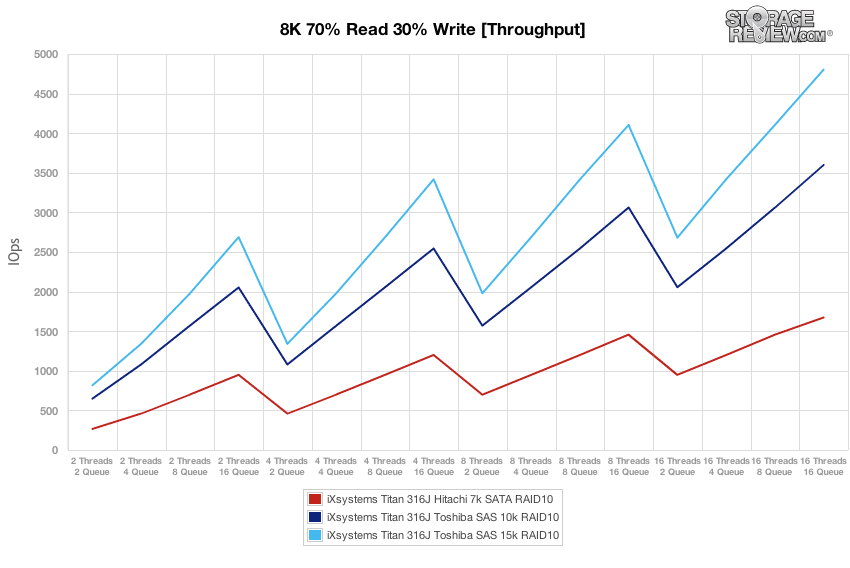

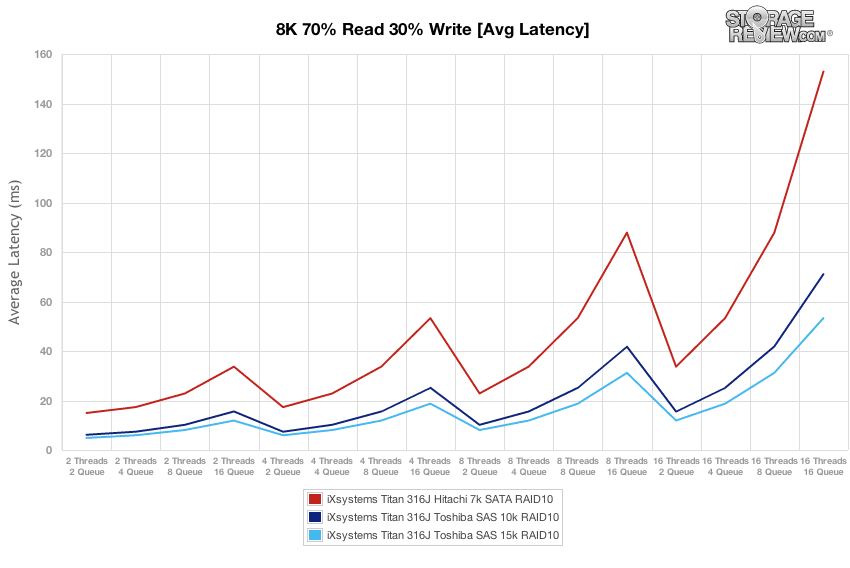

Switching to our 8K 70/30 profile with a workload scaling from 2T/2Q to 16T/16Q, we measured a peak I/O rate of 4,803 IOPS from the 15K SAS RAID10 array, 3,600 IOPS from the 10K array, and 1,673 IOPS from the 7.2K array.

If the requirement is keeping latency below 20ms, the sweet spot for both the 10K and 15K SAS arrays was at an effective queue depth of 32 or below. At that setting, max throughput measured 2,686 IOPS from the 15K SAS array and 2,055 IOPS from the 10K SAS array. Holding the 7.2K SATA array to the same requirement put its sweet spot at an effective queue depth of 8 or lower, with a peak throughput of 460 IOPS.
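Finding that sweet spot amounts to scanning the queue-depth sweep for the highest I/O rate whose latency stays under the cap. A minimal sketch of that selection using a hypothetical `sweet_spot` helper; it includes only the two 15K SAS data points stated in this section, and their latencies are Little's Law estimates (EQD divided by IOPS) rather than measured values:

```python
from typing import NamedTuple, Optional


class Point(NamedTuple):
    """One operating point of a queue-depth sweep."""
    eqd: int
    iops: float
    latency_ms: float


def sweet_spot(points: list[Point], max_latency_ms: float) -> Optional[Point]:
    """Return the point with the most IOPS whose latency is below the cap, or None."""
    eligible = [p for p in points if p.latency_ms < max_latency_ms]
    return max(eligible, key=lambda p: p.iops, default=None)


def estimated_point(eqd: int, iops: float) -> Point:
    """Build a point, estimating average latency via Little's Law (EQD / IOPS)."""
    return Point(eqd, iops, eqd / iops * 1000)


# 15K SAS RAID10, 8K 70/30: IOPS at EQD 32 and EQD 256 are from the review;
# latencies are estimates (about 11.9ms and 53.3ms), not measurements.
SAS_15K = [estimated_point(32, 2686), estimated_point(256, 4803)]

if __name__ == "__main__":
    print(sweet_spot(SAS_15K, max_latency_ms=20))
```

With a 20ms cap the helper selects the EQD 32 point, matching the sweet spot described above; a real analysis would feed it the full measured sweep instead of estimated latencies.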

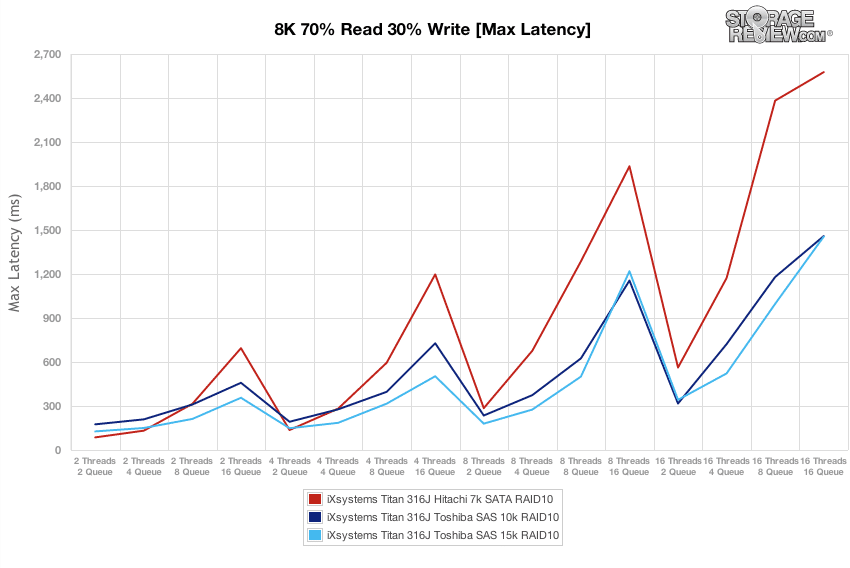

For each spindle speed, keeping the effective queue depth below 32 kept max response times the lowest, with the largest impact seen on the 7.2K array.

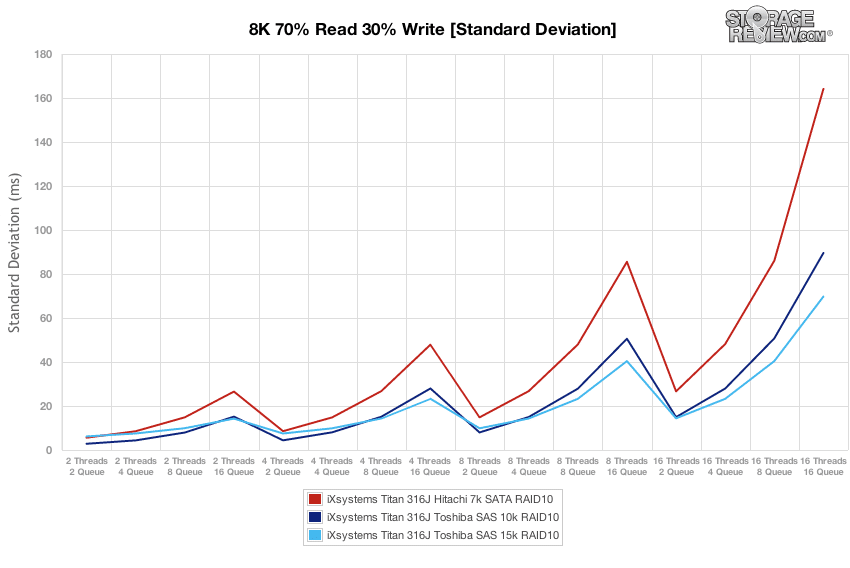

In terms of latency consistency, both the 10K and 15K SAS drives offered similar performance at lower queue depths, with the edge given to the 15K SAS drives at the highest effective queue depths.

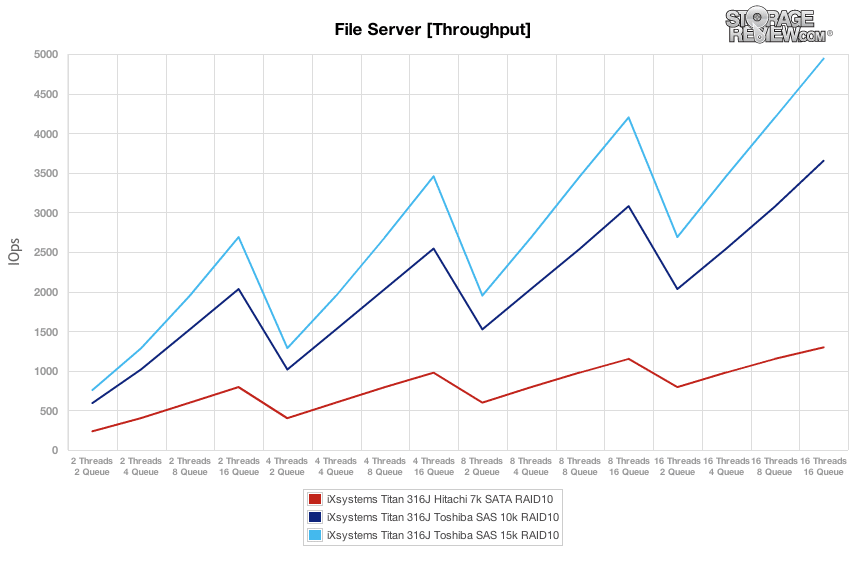

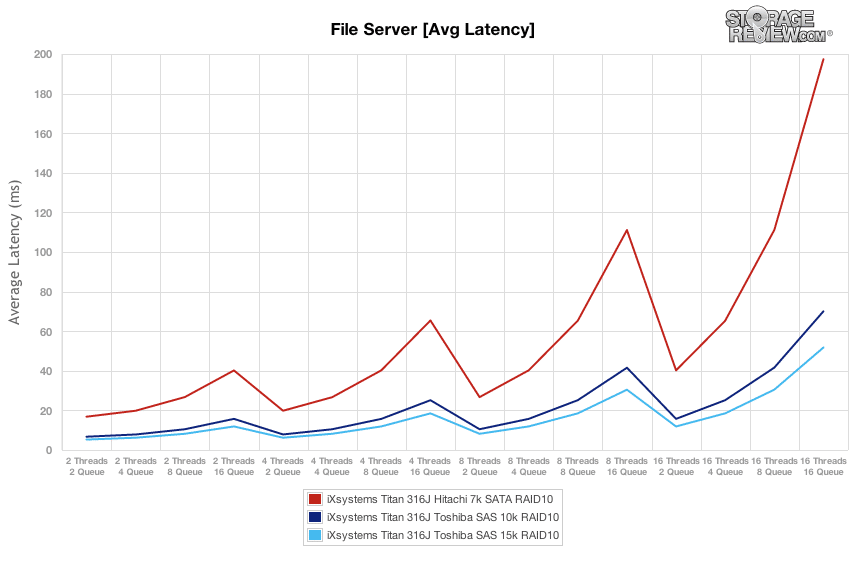

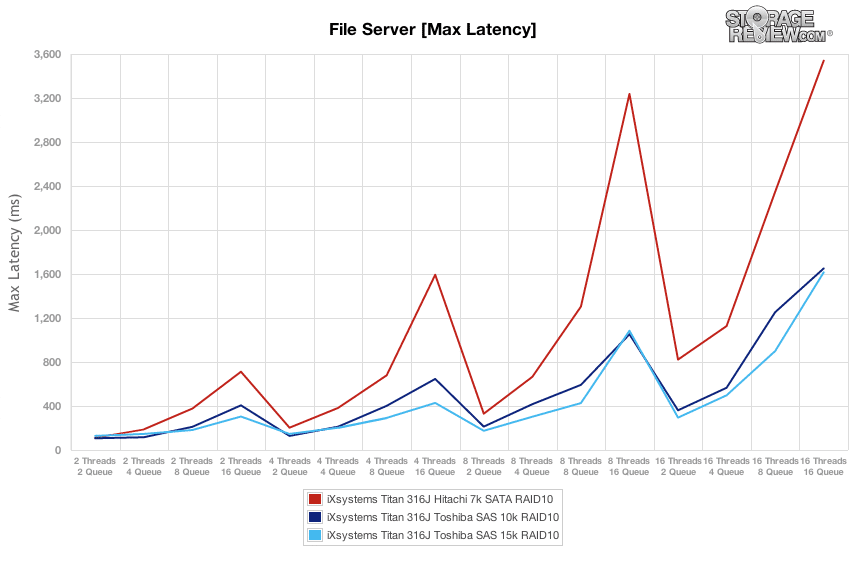

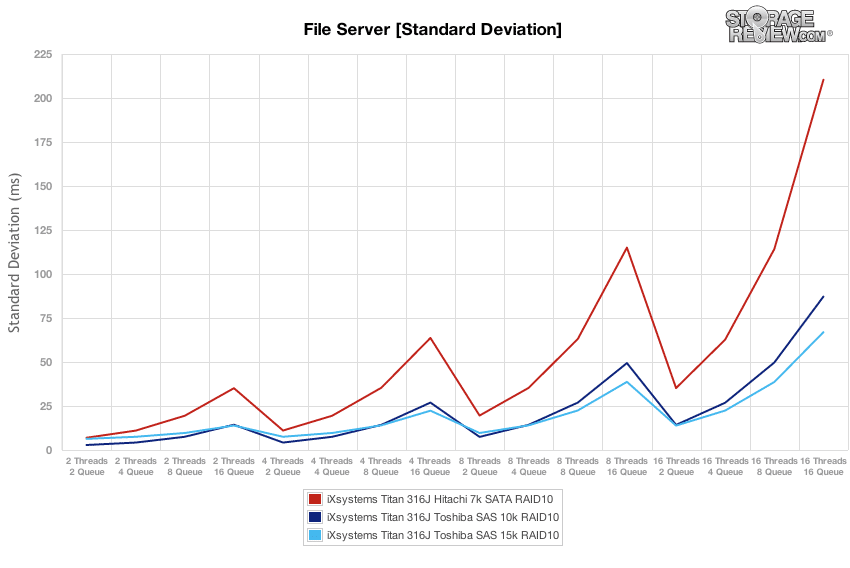

Switching to our File Server workload, the impact of spindle speed in our 16-bay iX-316J became more apparent. At a peak effective queue depth of 256, the 15K SAS array measured 4,943 IOPS and the 10K SAS array 3,652 IOPS, while the 7.2K array offered just 1,296 IOPS at its highest.

Comparing average latency across drive types in our 16-bay JBOD, the 10K and 15K SAS drives performed best in our File Server workload, with the 7.2K array showing higher latency. In terms of performance versus latency, the SAS arrays delivered their best throughput without getting bogged down by high latency at effective queue depths below 32 for the 10K array and 64 for the 15K array.

Comparing peak response times, both SAS arrays kept latency below 500ms at effective queue depths of 64 and below. With the 7.2K SATA array, loads above an effective queue depth of 32 caused peak response times to spike dramatically.

Comparing latency standard deviation in our File Server profile, we found similar performance from our 10K and 15K arrays, with the 15K array holding the edge at the highest effective queue depths. In this transfer profile, the slower 7.2K hard drives had a tougher time keeping latency consistent once the load exceeded an effective queue depth of 32.

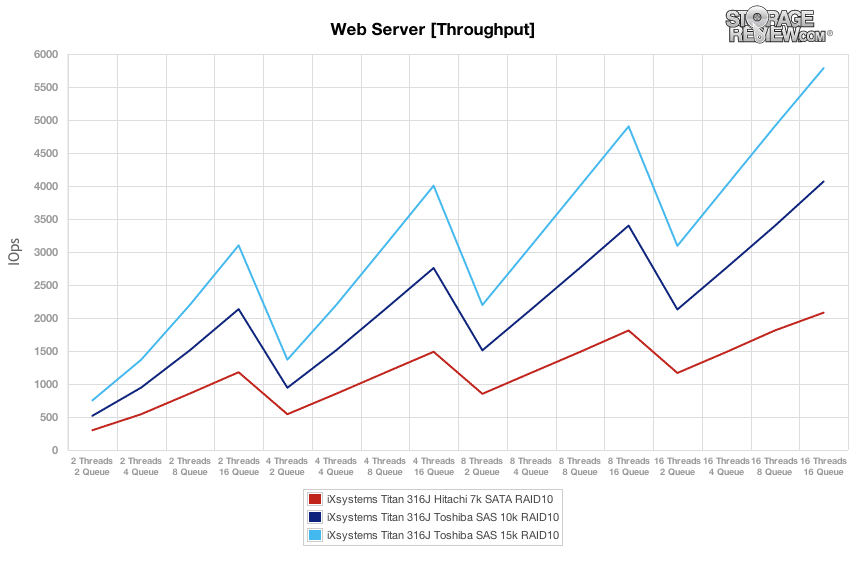

Our last profile, covering simulated Web Server activity, is completely read-only. In this setting the 7,200RPM hard drives kept up better than they had in previous tests with write activity mixed in. At our highest thread and queue count, we measured a peak I/O rate of 5,786 IOPS from our 15K SAS array, 4,068 IOPS from our 10K SAS array, and 2,081 IOPS from the 7.2K SATA array.

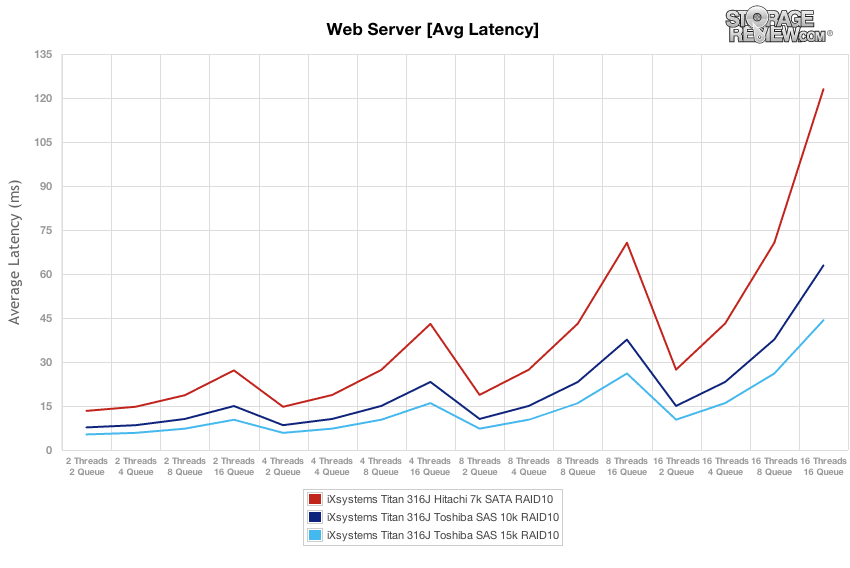

The 10K and 15K SAS arrays inside our iXsystems Titan iX-316J kept average latency in check at effective queue depths below 64, while the 7.2K array had a lower limit of 32 before average latency increased significantly.

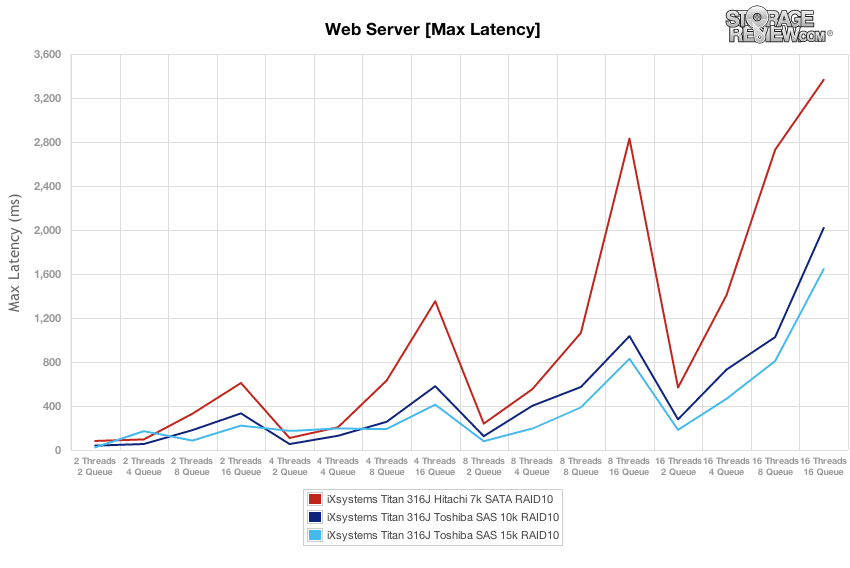

Max latency in our Web Server test showed results similar to average latency: peak response times stayed at a minimum at effective queue depths below 64 for the 10K and 15K SAS arrays and below 32 for the 7.2K SATA array.

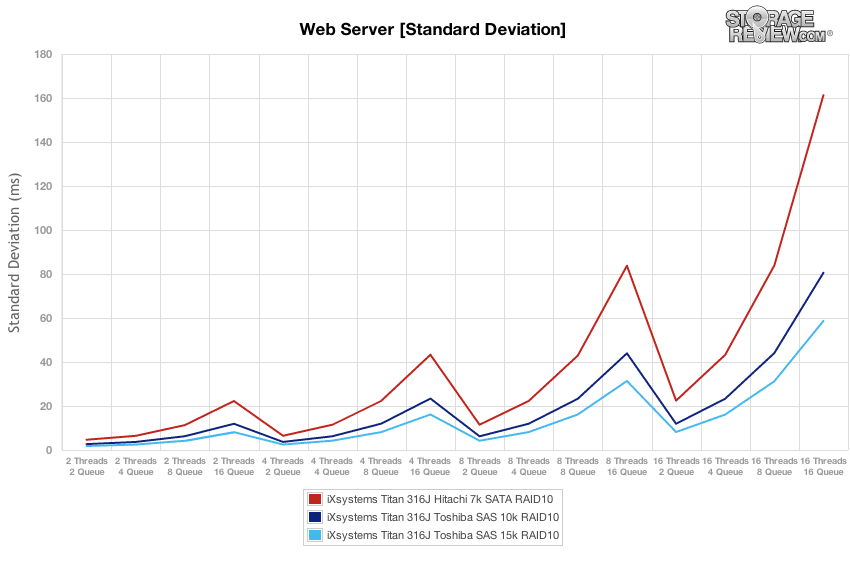

With no write activity, the 15K SAS array had the best latency standard deviation across the entire range of thread and queue levels, followed by the 10K and then the 7.2K array. The same sweet spots held here as well, with the best consistency below EQD 64 for the faster spindles and below EQD 32 for the 7.2K array.

Conclusion

There are many times when a headless JBOD makes perfect sense for growing storage needs. The iXsystems Titan iX-316J provides an easy-to-configure 3U chassis that, with 4TB hard drives, supports a total raw capacity of 64TB. As we have shown, it also adapts easily to 2.5″ drives, though in that case you give up density compared to iXsystems' 2U 24-bay SFF options. For compatibility, the Titan iX-316J connects to both HBAs and RAID cards through an industry-standard SFF-8088 connection. The only downside, which applies only if you install SSDs in this shelf, is that a single 4-channel SAS connection is limited to 2,400MB/s over SAS 6.0Gb/s. That limit won’t hold back platter drives, but flash drives that peak at 500MB/s+ each would require more miniSAS connections to reach their full potential.
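A back-of-the-envelope check makes the point concrete: sixteen SSDs at 500MB/s each could move about 8,000MB/s, more than three times what a single x4 link carries, while the fastest hard drive result in this review (1,535MB/s, 128K sequential read on the 15K array) fits within one link. The helper names below are illustrative, and the per-link and per-drive figures are the peak values quoted above, so real-world numbers will fall somewhat short:

```python
import math

# Hypothetical sizing check for a fully populated 16-bay shelf.
LINK_MB_PER_S = 2400  # one x4 SAS 6Gb/s link, per the review
BAYS = 16


def links_needed(per_drive_mb_per_s: float, drives: int = BAYS) -> int:
    """x4 links required so the links are not the bottleneck."""
    return math.ceil(drives * per_drive_mb_per_s / LINK_MB_PER_S)


def drives_to_saturate(per_drive_mb_per_s: float) -> int:
    """Drives streaming at full speed needed to fill one link."""
    return math.ceil(LINK_MB_PER_S / per_drive_mb_per_s)


if __name__ == "__main__":
    print(links_needed(500))         # 4
    print(drives_to_saturate(500))   # 5
    print(links_needed(1535 / 16))   # 1 (best 16-drive HDD result in this review)
```

By this estimate, about five SSDs streaming at full speed would already fill a single link, and a shelf full of them would need four.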

The 10K and 15K hard drive arrays offered the greatest throughput and lowest latency in our random-access mixed workloads. In sequential workloads, the 7.2K RAID10 array offered the greatest 8K read speed and 128K write speed. Enterprise buyers choosing the best hard drive for a particular application need to weigh capacity requirements against performance needs and then factor in cost. The 7.2K drives offer the best capacity per dollar but can’t match the I/O performance of the faster 10K and 15K drives. For needs such as backups or bulk storage, where random access isn’t as important, the 7.2K hard drives become more appealing. In either case, though, the iXsystems Titan iX-316J worked quite well regardless of drive size or interface.
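The capacity side of that trade-off is easy to quantify. A rough sketch, assuming RAID10 usable capacity is half of raw (mirrored pairs, ignoring formatting overhead) and using the drive sizes and 8K 70/30 peak results from this review, shows the 15K array delivering far more I/O per usable terabyte while the 7.2K array offers about 27 times the usable capacity:

```python
# Raw vs. RAID10 usable capacity and I/O density for each tested array.
# Assumption: RAID10 usable capacity = half of raw; decimal TB.
DRIVES = 16
ARRAYS = {
    # name: (capacity per drive in GB, 8K 70/30 peak IOPS from the review)
    "15K SAS": (147, 4803),
    "10K SAS": (600, 3600),
    "7.2K SATA": (4000, 1673),
}


def usable_tb_raid10(drive_gb: float, drives: int = DRIVES) -> float:
    """Usable capacity in TB when drives are mirrored in pairs."""
    return drives * drive_gb / 2 / 1000


def iops_per_tb(drive_gb: float, peak_iops: float) -> float:
    """Peak IOPS divided by usable RAID10 capacity."""
    return peak_iops / usable_tb_raid10(drive_gb)


if __name__ == "__main__":
    for name, (gb, iops) in ARRAYS.items():
        print(f"{name}: raw {DRIVES * gb / 1000:.2f} TB, "
              f"usable {usable_tb_raid10(gb):.2f} TB, "
              f"{iops_per_tb(gb, iops):,.0f} IOPS per usable TB")
```

Under these assumptions the 15K array works out to roughly 4,084 IOPS per usable TB against about 52 for the 7.2K array, while the 7.2K array provides 32TB usable versus 1.176TB.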

Pros

- Easy deployment into HA infrastructure

- Works with both SAS and SATA hard drives

- Compatible with anything that supports JBOD over SFF-8088

- Includes dual power supplies

Cons

- Not enough throughput support for SSDs in certain scenarios

Bottom Line

The iXsystems Titan iX-316J is a simple-to-deploy headless storage system with a wide variety of enterprise use cases. Direct attached storage doesn’t have to be complex, but it does need to work reliably, which the iX-316J did across three sets of SATA and SAS hard drives.
