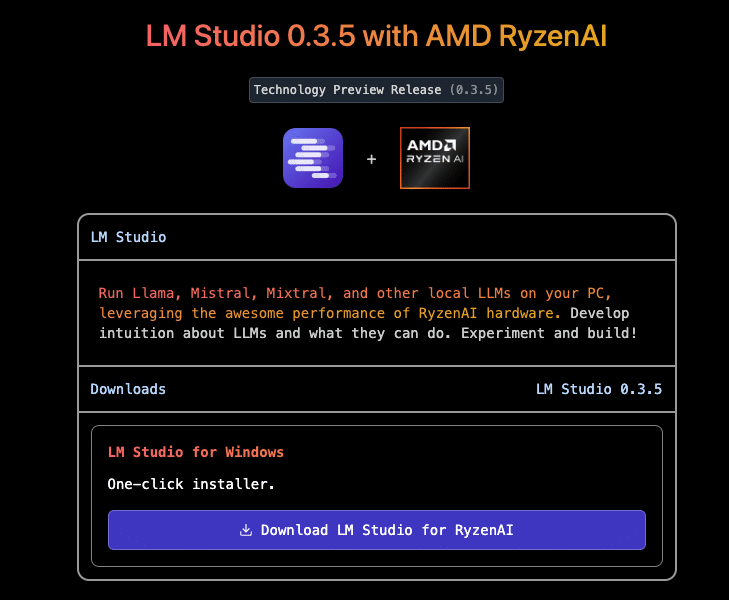

Recent advances in large language models (LLMs), together with accessible tools like LM Studio, let users deploy sophisticated AI models quickly and easily. With AMD's latest processors, users can tap into LM Studio's high-performance AI features without any coding expertise or technical knowledge.

LM Studio is built on llama.cpp, a framework designed for fast LLM deployment. llama.cpp has no external dependencies and runs efficiently on CPUs alone, with GPU acceleration available as an option. On x86 CPUs, it uses AVX2 instructions to speed up inference on modern processors.
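To check whether a machine's CPU advertises AVX2, one can read the feature flags the operating system reports. The sketch below assumes an x86 Linux system, where `/proc/cpuinfo` lists CPU features on a `flags` line; the helper names `cpu_flags_from_cpuinfo` and `has_avx2` are illustrative, not part of LM Studio or llama.cpp, and other operating systems expose this information differently.

```python
import platform
from pathlib import Path


def cpu_flags_from_cpuinfo(cpuinfo_text: str) -> set[str]:
    """Return the CPU feature flags found in Linux /proc/cpuinfo text.

    On x86 Linux, each logical CPU block has a line such as
    "flags\t\t: fpu vme ... avx2 ...". Only the first such line is read.
    """
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()  # no flags line (e.g. non-x86 or non-Linux text)


def has_avx2(cpuinfo_text: str) -> bool:
    """True if the reported flags include AVX2."""
    return "avx2" in cpu_flags_from_cpuinfo(cpuinfo_text)


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if platform.system() == "Linux" and cpuinfo.exists():
        print("AVX2 supported:", has_avx2(cpuinfo.read_text()))
    else:
        print("This sketch only reads /proc/cpuinfo on Linux.")
```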

AMD Ryzen AI, built into the Ryzen 9 HX 375 processor, boosts LLM performance in applications such as LM Studio, particularly on x86 laptops. LLM inference depends heavily on memory speed, yet the AMD processor still came out ahead: although the AMD laptop's RAM ran at 7500 MT/s versus 8533 MT/s on the Intel laptop, the Ryzen 9 HX 375 was up to 27% faster in tokens per second, a measure of how quickly the model generates text (a token is roughly a word or word fragment).
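Tokens per second is simply the number of generated tokens divided by the generation time, and the comparisons in this article are relative changes between two such numbers. A minimal sketch, using hypothetical helper names, that also puts the quoted memory speeds in perspective:

```python
def tokens_per_second(num_tokens: int, elapsed_s: float) -> float:
    """Generation throughput: tokens produced divided by wall-clock seconds."""
    if elapsed_s <= 0:
        raise ValueError("elapsed_s must be positive")
    return num_tokens / elapsed_s


def percent_change(new: float, baseline: float) -> float:
    """Relative change of `new` versus `baseline`, in percent."""
    return (new / baseline - 1.0) * 100.0


# Memory transfer rates quoted in the article (MT/s).
amd_mts, intel_mts = 7500, 8533
print(f"AMD memory rate vs Intel: {percent_change(amd_mts, intel_mts):.1f}%")  # -12.1%
```

In other words, the AMD laptop's memory transfer rate was about 12% lower, and the up to 27% throughput lead was achieved despite that gap.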

With Meta Llama 3.2 1b Instruct (4-bit quantization), the AMD Ryzen 9 HX 375 reached a peak of 50.7 tokens per second. On larger models, it delivered up to 3.5x faster time to first token than the competition. Time to first token is a latency metric: the delay between submitting a prompt and receiving the first token of the response.
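Both metrics can be measured from any streaming token source. This sketch assumes a generic iterable that yields tokens as they arrive (for instance, a streaming response from a local model server); `measure_stream` and `fake_stream` are hypothetical names, and tools such as LM Studio may compute their reported statistics differently. It records time to first token (TTFT) as the wait until the first token and decode throughput over the tokens that follow.

```python
import time
from collections.abc import Callable, Iterable, Iterator


def measure_stream(tokens: Iterable[str],
                   clock: Callable[[], float] = time.perf_counter) -> dict:
    """Measure TTFT and decode throughput for a stream of tokens."""
    start = clock()
    first = None
    count = 0
    for _ in tokens:
        count += 1
        if first is None:
            first = clock()  # latency until the first token arrives
    end = clock()
    if first is None:
        return {"ttft_s": None, "tokens": 0, "decode_tps": None}
    decode_time = end - first
    # The first token's wait is counted as TTFT, so only the remaining
    # (count - 1) tokens are attributed to the decode phase.
    decode_tps = (count - 1) / decode_time if decode_time > 0 else None
    return {"ttft_s": first - start, "tokens": count, "decode_tps": decode_tps}


def fake_stream(n: int, delay_s: float = 0.01) -> Iterator[str]:
    """Hypothetical stand-in for a model: yields n tokens with a fixed delay."""
    for i in range(n):
        time.sleep(delay_s)
        yield f"tok{i}"


if __name__ == "__main__":
    print(measure_stream(fake_stream(20)))
```

Injecting `clock` keeps the function testable; in real use the default `time.perf_counter` is a monotonic, high-resolution timer suited to latency measurements.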

AMD Ryzen AI processors contain three accelerators, each suited to different workloads. The NPU, based on the XDNA 2 architecture, is the most efficient choice for persistent AI tasks; the CPU offers the broadest compatibility across AI tools; and the iGPU handles many on-demand AI operations, offering flexibility for real-time applications.

LM Studio's build of llama.cpp uses the Vulkan API for cross-platform GPU acceleration. Offloading work to the iGPU this way improved Meta Llama 3.2 1b Instruct performance by 31%, while larger models such as Mistral Nemo 2407 12b Instruct gained 5.1%. On the competitor's processor, GPU offloading produced no significant gains for most models, so those results were excluded to keep the comparison fair.

AMD Ryzen AI 300 Series processors also support Variable Graphics Memory (VGM), which extends the iGPU's dedicated memory allocation to as much as 75% of system RAM. Enabling VGM raised Meta Llama 3.2 1b Instruct performance by a further 22%, for a total gain of 60% over CPU-only processing. Larger models benefited as well, running up to 17% faster than with CPU-only processing.
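The 60% total follows from compounding the two improvements rather than adding them: a 31% gain followed by a 22% gain gives 1.31 × 1.22 ≈ 1.60. This sketch assumes the 22% VGM figure is measured on top of the Vulkan-offloaded result; the helper names are hypothetical, and the 32 GB system RAM value is purely illustrative, used only to show the 75% VGM ceiling.

```python
def compound_speedup(*gains_percent: float) -> float:
    """Combine successive relative gains (in percent) multiplicatively."""
    factor = 1.0
    for g in gains_percent:
        factor *= 1.0 + g / 100.0
    return (factor - 1.0) * 100.0


def vgm_max_allocation_gb(system_ram_gb: float, fraction: float = 0.75) -> float:
    """Upper bound on iGPU memory with VGM, using the 'up to 75%' figure."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    return system_ram_gb * fraction


# Vulkan offload (+31%) followed by VGM (+22%) on Llama 3.2 1b Instruct.
print(f"Combined gain: {compound_speedup(31, 22):.1f}%")  # 59.8%, i.e. about 60%
# A hypothetical laptop with 32 GB of RAM.
print(f"VGM ceiling on 32 GB: {vgm_max_allocation_gb(32):.0f} GB")  # 24 GB
```

Simply adding the two percentages would give 53%, which understates the combined effect.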

Because the competitor's laptop did not benefit from Vulkan-based GPU offloading in LM Studio, its performance was instead compared using Intel's AI Playground to keep the comparison fair. With comparable quantization, the AMD Ryzen 9 HX 375 was 8.7% faster in Phi 3.1 and 13% faster in Mistral 7b Instruct 0.3.

AMD's goal is to make AI more accessible by enabling powerful, consumer-friendly LLM tools like LM Studio. With features such as Variable Graphics Memory, AMD Ryzen AI processors are positioned to deliver leading AI experiences on x86 laptops and to let users run state-of-the-art models as soon as they are released.
