During her Computex 2024 keynote, AMD Chair and CEO Dr. Lisa Su unveiled significant advancements in the AMD Instinct accelerator family. The company announced an expanded multiyear roadmap for the accelerators, promising annual improvements in AI performance and memory capabilities for AI and data center workloads.

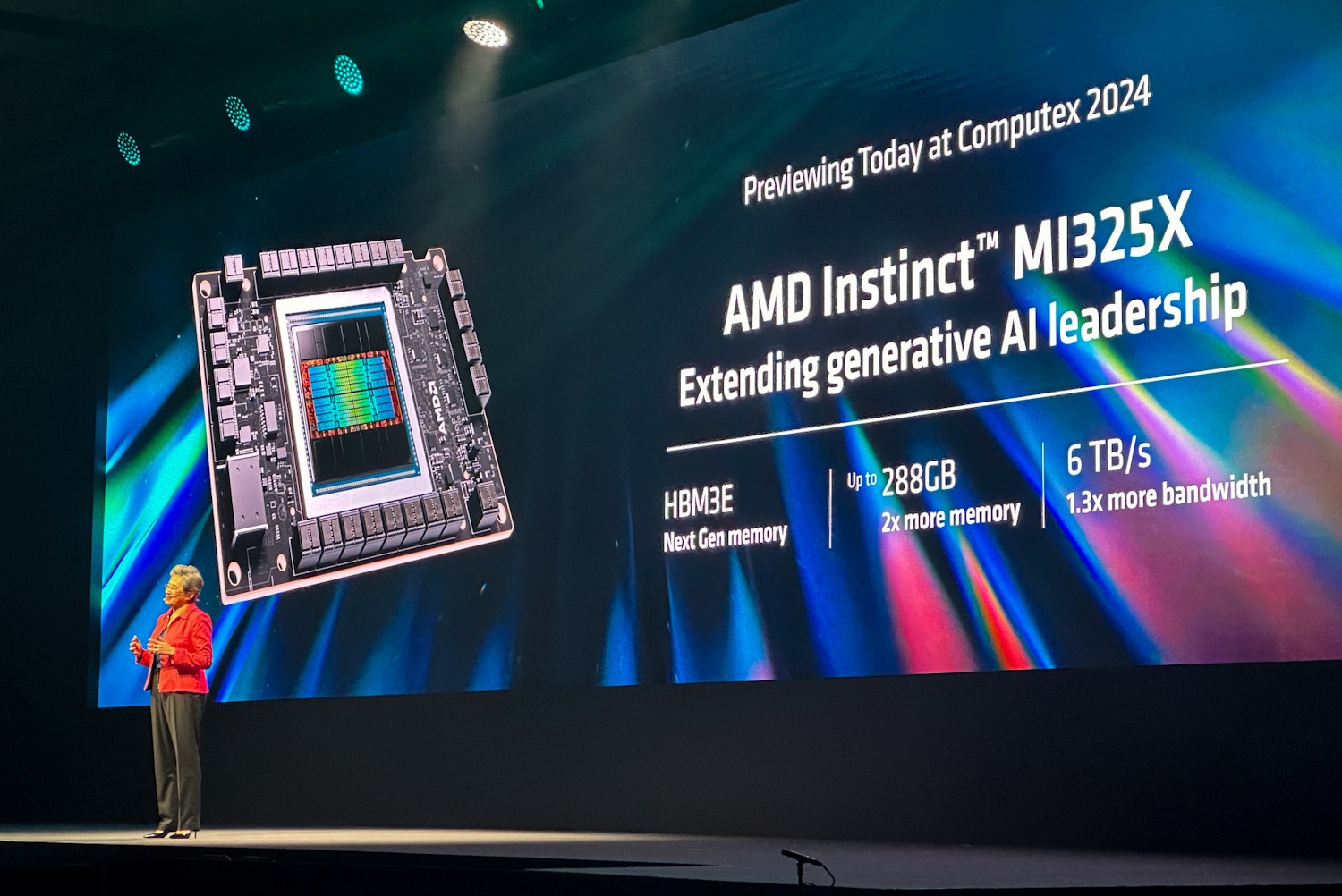

Introducing the AMD Instinct MI325X Accelerator

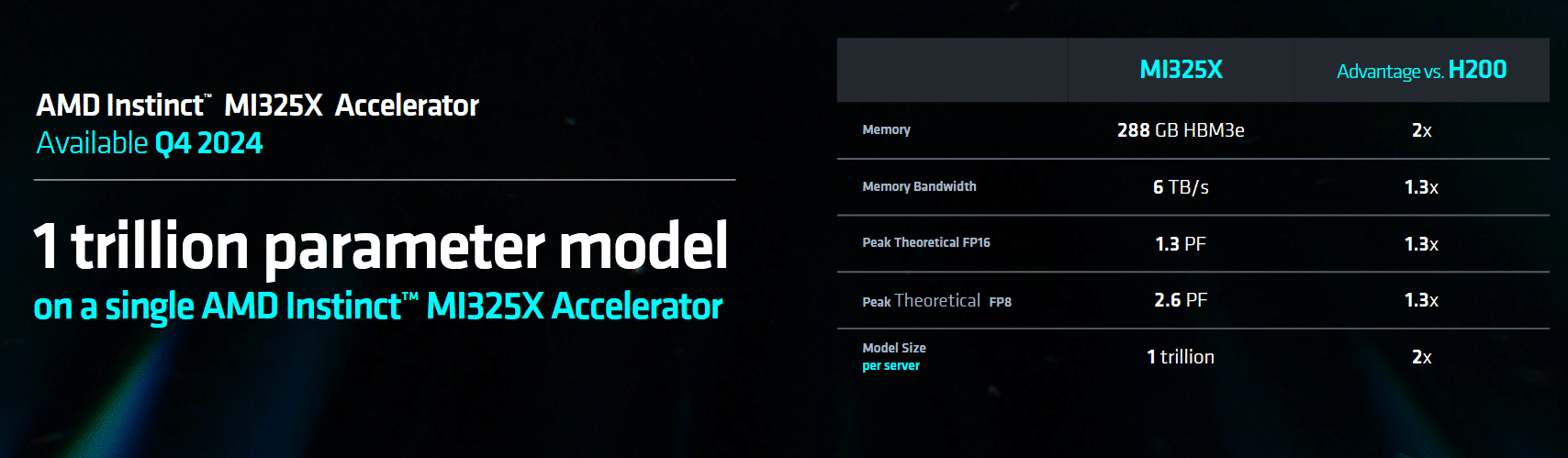

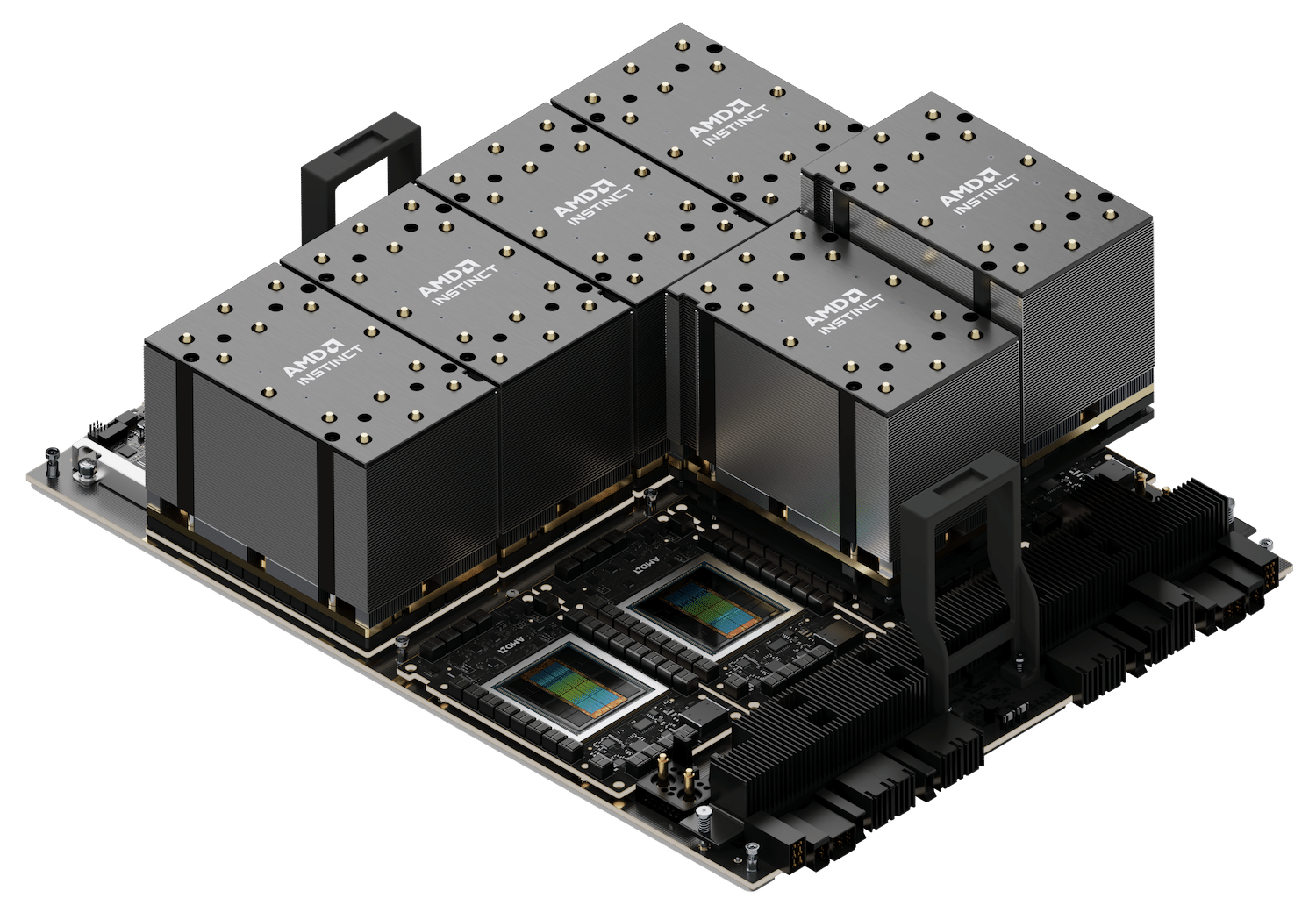

The updated roadmap begins with the new AMD Instinct MI325X accelerator, set to be available in Q4 2024. This accelerator features 288GB of HBM3E memory and a memory bandwidth of 6 terabytes per second. It uses the Universal Baseboard server design, ensuring compatibility with the AMD Instinct MI300 series.

According to AMD, the MI325X leads the industry in memory capacity and bandwidth, at 2x and 1.3x the competition's figures, respectively. It also offers 1.3x better compute performance than competing accelerators, which positions it for demanding AI workloads.
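To put the headline memory figures in perspective, the arithmetic below is a rough sketch, not an AMD benchmark. It uses the 288GB and 6 terabytes per second figures quoted above and assumes decimal units (1 TB = 1000 GB). The function names and the 140GB example model size (roughly a 70-billion-parameter model stored at 16 bits per weight) are illustrative assumptions, as is the simplification that generating one token requires reading every weight from memory once.

```python
# Rough sketch: what 288 GB at 6 TB/s implies for memory-bound work.
# Capacity and bandwidth come from the announcement; everything else
# (function names, the example model size) is an illustrative assumption.

MI325X_CAPACITY_GB = 288.0       # HBM3E capacity quoted in the article
MI325X_BANDWIDTH_GB_S = 6000.0   # 6 TB/s, assuming 1 TB = 1000 GB


def full_sweep_ms(capacity_gb: float, bandwidth_gb_s: float) -> float:
    """Milliseconds needed to read the whole memory once at peak bandwidth."""
    return capacity_gb / bandwidth_gb_s * 1000.0


def decode_ceiling_tokens_per_s(weights_gb: float, bandwidth_gb_s: float) -> float:
    """Upper bound on single-stream tokens/s if every token reads all weights once.

    Ignores compute limits, KV-cache traffic, and achievable (as opposed
    to peak) bandwidth, so real single-stream throughput will be lower.
    """
    return bandwidth_gb_s / weights_gb


if __name__ == "__main__":
    sweep = full_sweep_ms(MI325X_CAPACITY_GB, MI325X_BANDWIDTH_GB_S)
    print(f"One full pass over memory: about {sweep:.0f} ms")

    example_weights_gb = 140.0  # assumed: ~70B parameters at 2 bytes each
    ceiling = decode_ceiling_tokens_per_s(example_weights_gb, MI325X_BANDWIDTH_GB_S)
    print(f"Bandwidth-bound ceiling for {example_weights_gb:.0f} GB of weights: "
          f"about {ceiling:.0f} tokens/s")
```

The ceiling is a theoretical limit under the stated assumptions; it is useful mainly for seeing why memory bandwidth, and not only compute, matters for token generation.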

Future Generations: MI350 and MI400 Series

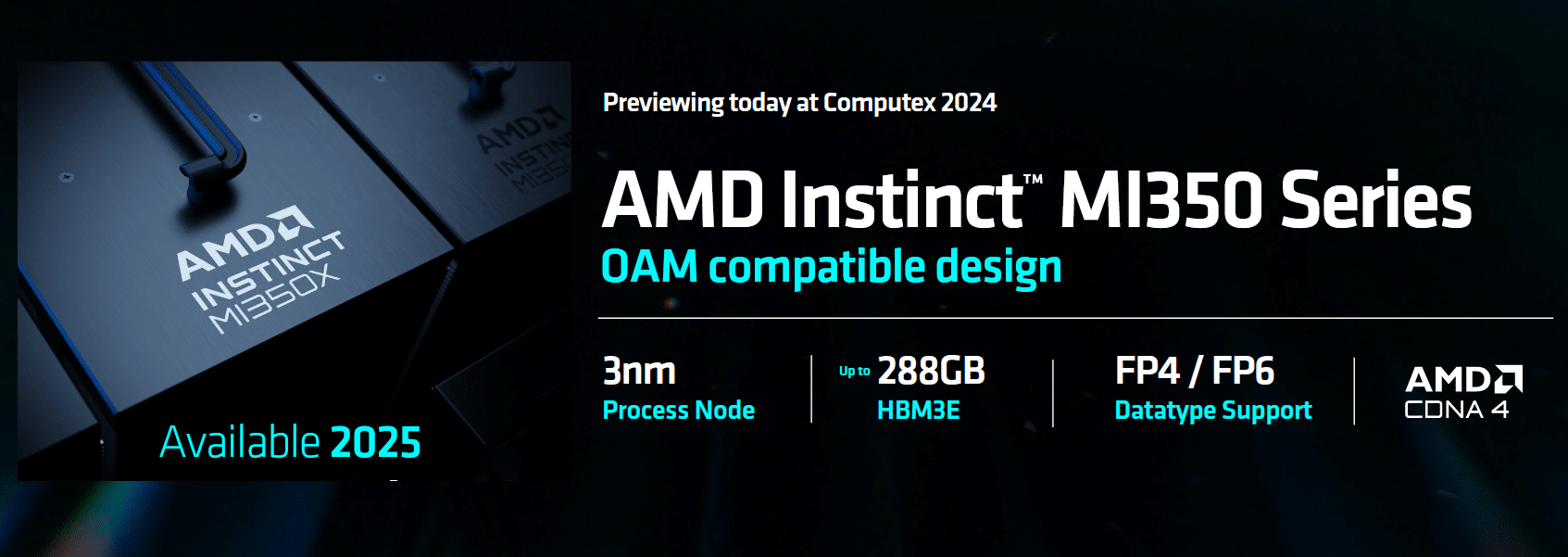

Following the MI325X, the AMD Instinct MI350 series, powered by the new AMD CDNA 4 architecture, is expected to launch in 2025. AMD projects up to a 35x increase in AI inference performance compared to the AMD Instinct MI300 series. The MI350X accelerator will retain the Universal Baseboard design, be built on 3nm process technology, support the FP4 and FP6 AI datatypes, and feature up to 288GB of HBM3E memory.
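The value of narrower datatypes is easiest to see as arithmetic: halving the bits per weight halves the memory a model's weights occupy. The sketch below is illustrative only. The 70-billion-parameter model size and the helper names are assumptions, and the calculation ignores KV cache, activations, and any scaling metadata a low-precision format may carry.

```python
# Illustrative sketch: weight memory versus datatype width.
# The 288 GB capacity is from the article; the 70B model size and the
# helper names are assumptions chosen for the example.

HBM_CAPACITY_GB = 288.0


def weight_footprint_gb(num_params: float, bits_per_param: int) -> float:
    """Gigabytes (10^9 bytes) needed to store the weights alone."""
    return num_params * bits_per_param / 8 / 1e9


def max_params_billions(capacity_gb: float, bits_per_param: int) -> float:
    """Largest parameter count (in billions) whose weights fit in capacity_gb."""
    return capacity_gb * 8 / bits_per_param


if __name__ == "__main__":
    num_params = 70e9  # assumed example model size
    for name, bits in [("FP16", 16), ("FP8", 8), ("FP6", 6), ("FP4", 4)]:
        footprint = weight_footprint_gb(num_params, bits)
        limit = max_params_billions(HBM_CAPACITY_GB, bits)
        print(f"{name}: {footprint:6.1f} GB for 70B weights, "
              f"up to ~{limit:.0f}B parameters in {HBM_CAPACITY_GB:.0f} GB")
```

Under these assumptions, the weights of a 70-billion-parameter model shrink from 140GB at 16 bits to 35GB at 4 bits, which leaves far more of the 288GB for longer contexts or larger batches.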

Looking further ahead, the AMD Instinct MI400 series, powered by the AMD CDNA “Next” architecture, is slated for release in 2026. This series will introduce the latest features and capabilities designed to enhance performance and efficiency for large-scale AI training and inference tasks.

Adoption and Impact of AMD Instinct MI300X Accelerators

The AMD Instinct MI300X accelerators have seen strong adoption from major partners and customers, including Microsoft Azure, Meta, Dell Technologies, HPE, Lenovo, and others. Brad McCredie, corporate vice president of Data Center Accelerated Compute at AMD, highlighted the performance and value proposition of the MI300X accelerators, noting their significant role in driving AI innovation. He stated, “With our updated annual cadence of products, we are relentless in our pace of innovation, providing the leadership capabilities and performance the AI industry and our customers expect to drive the next evolution of data center AI training and inference.”

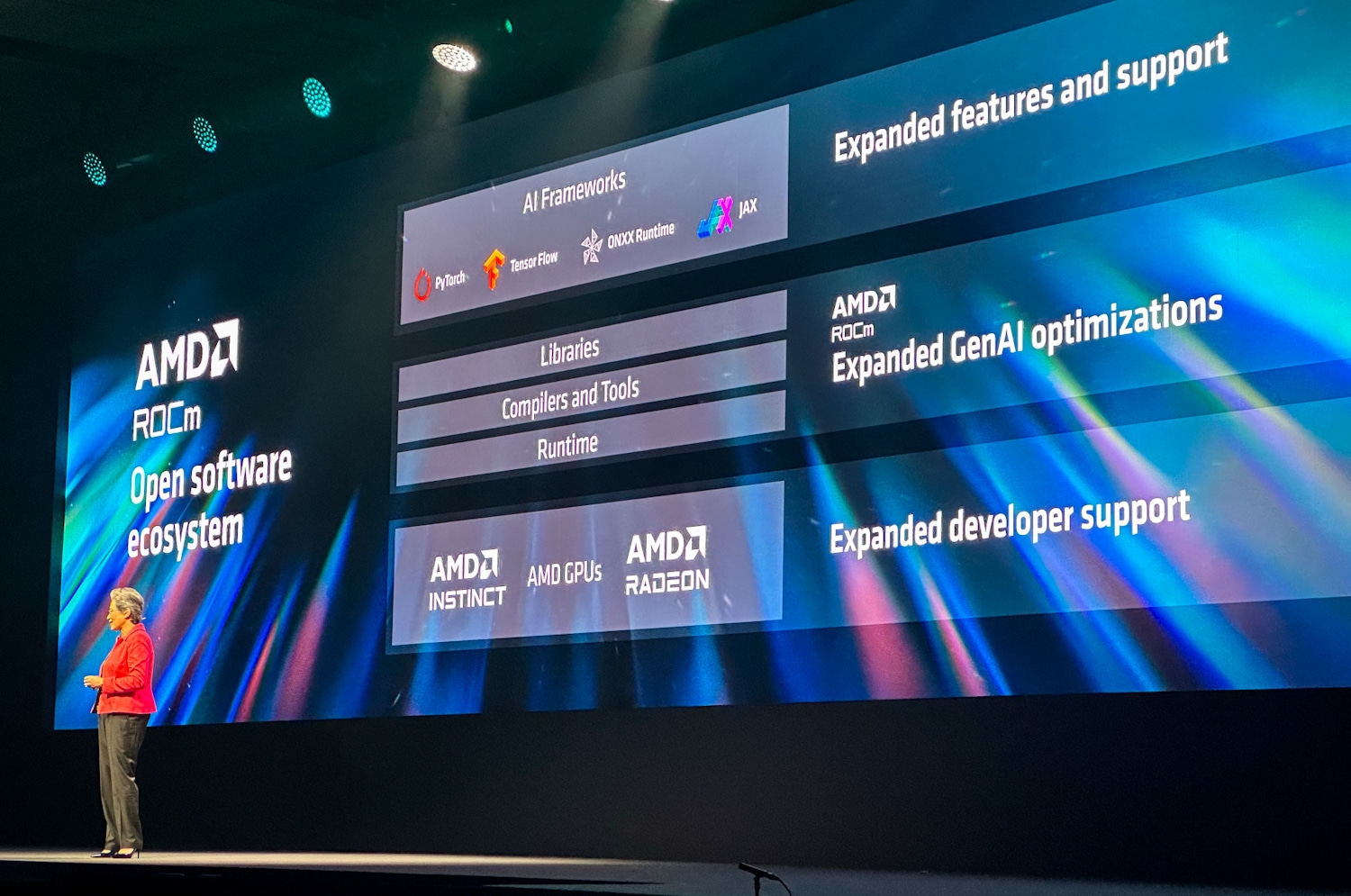

Advancements in AMD AI Software Ecosystem

The AMD ROCm 6 open software stack continues to mature, enabling AMD Instinct MI300X accelerators to deliver impressive performance for popular large language models (LLMs). On servers using eight AMD Instinct MI300X accelerators and ROCm 6 running Meta Llama-3 70B, customers can achieve 1.3x better inference performance and token generation compared to the competition.

Additionally, a single AMD Instinct MI300X accelerator with ROCm 6 can outperform competitors by 1.2x in inference performance and token generation throughput on Mistral-7B.

AMD’s collaboration with Hugging Face, the largest repository for AI models, further underscores the ecosystem’s robustness. Hugging Face tests 700,000 of its most popular models nightly to confirm that they work on AMD Instinct MI300X accelerators. AMD is also continuing its upstream work with popular AI frameworks such as PyTorch, TensorFlow, and JAX to ensure broad compatibility and improved performance.

Key AMD Roadmap Highlights

During the keynote, AMD unveiled an updated annual cadence for the AMD Instinct accelerator roadmap to meet the growing demand for AI compute. The yearly releases are intended to keep AMD Instinct accelerators driving the development of next-generation frontier AI models.

- AMD Instinct MI325X Accelerator: Launching in Q4 2024, this accelerator will feature 288GB of HBM3E memory and 6 terabytes per second of memory bandwidth, figures AMD describes as industry-leading. It offers 1.3x better compute performance than the competition.

- AMD Instinct MI350 Series: Expected in 2025, the MI350X accelerator will be based on the AMD CDNA 4 architecture and use advanced 3nm process technology. It will support FP4 and FP6 AI datatypes and include up to 288GB of HBM3E memory.

- AMD Instinct MI400 Series: Scheduled for 2026, this series will leverage the AMD CDNA “Next” architecture, introducing new features and capabilities to enhance AI training and inference performance.

Widespread Industry Adoption

The demand for AMD Instinct MI300X accelerators continues to grow, with numerous partners and customers leveraging these accelerators for their demanding AI workloads.

- Microsoft Azure: Using MI300X accelerators for Azure OpenAI services and the new Azure ND MI300X v5 virtual machines.

- Dell Technologies: Integrating MI300X accelerators in the PowerEdge XE9680 for enterprise AI workloads.

- Supermicro: Providing multiple solutions incorporating AMD Instinct accelerators.

- Lenovo: Powering Hybrid AI innovation with the ThinkSystem SR685a V3.

- HPE: Using MI300X accelerators to enhance AI workloads in the HPE Cray XD675.

AMD’s introduction of the expanded Instinct accelerator roadmap at Computex 2024 marks a significant milestone in the company’s AI and data center strategy. With a commitment to annual product updates, enhanced performance, and broad industry adoption, AMD Instinct accelerators are set to drive the next generation of AI innovation.
