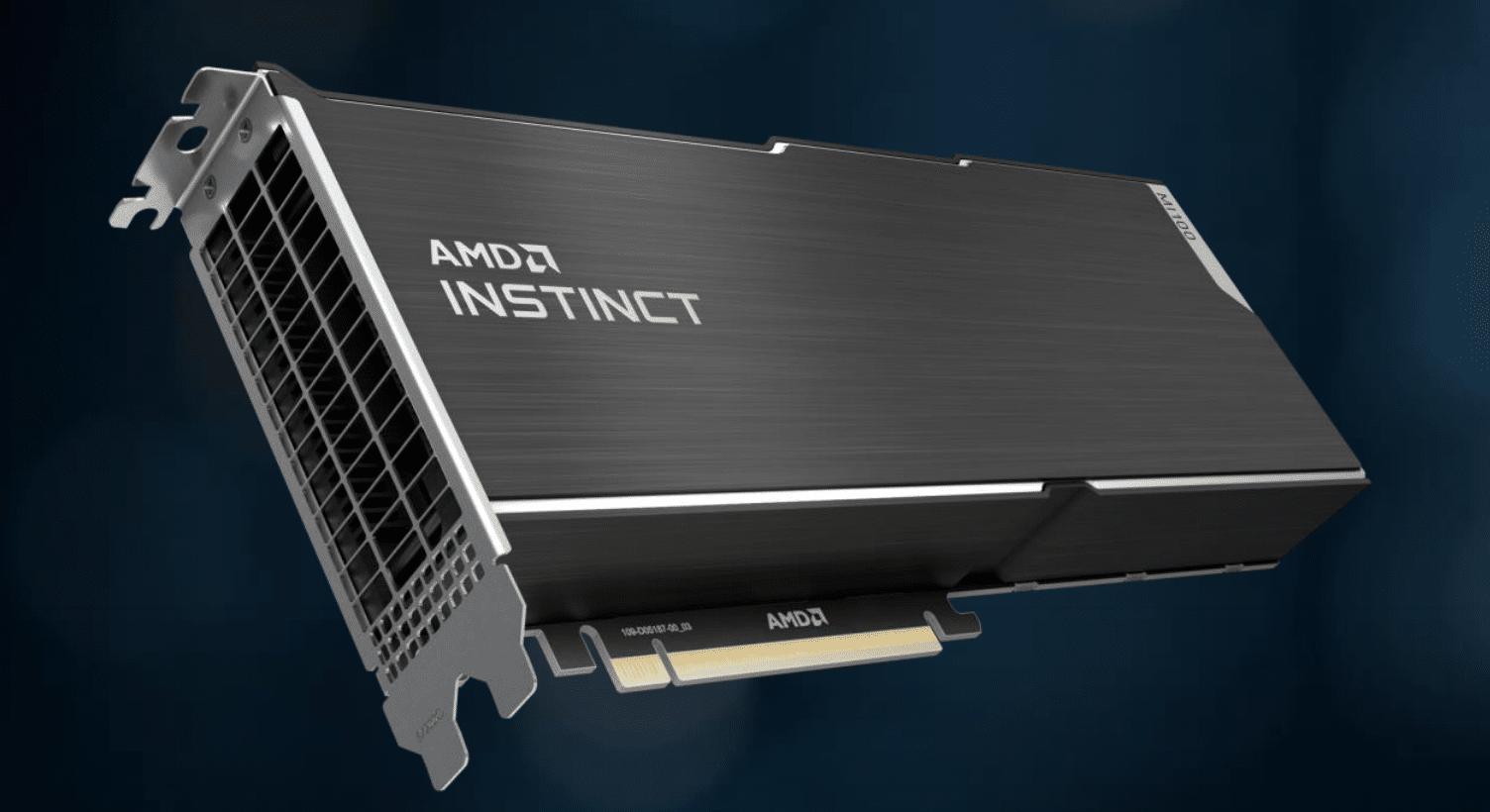

Today at SC20, AMD launched what it is boldly proclaiming as the world’s fastest HPC accelerator for scientific research: the AMD Instinct MI100. The claim may hold up, as AMD says this is the first x86 server GPU to surpass the 10 teraflops (FP64) performance barrier. The company is combining the new GPUs with its 2nd generation AMD EPYC CPUs and the ROCm 4.0 open software platform to hit impressive new numbers and to help researchers propel new discoveries ahead of the exascale era.

It is nice to see some competition in the high-end GPU space, which has been dominated by a single company for several years. AMD is entering it with the AMD Instinct MI100, built on its new AMD CDNA architecture and paired with its popular and high-performing AMD EPYC processors. AMD rates the MI100 at up to 11.5 TFLOPS of peak FP64 performance for HPC and up to 46.1 TFLOPS of peak FP32 Matrix performance for AI and machine learning workloads. The company also claims that its new AMD Matrix Core technology delivers a nearly 7x boost in theoretical peak FP16 floating-point performance for AI training workloads compared to AMD’s prior-generation accelerators.

On top of the GPU news, the company is rolling out software for exascale computing with the latest release of its AMD ROCm developer software. ROCm consists of compilers, programming APIs and libraries that help exascale developers create high-performance applications. The latest version, ROCm 4.0, is optimized to deliver high performance on MI100-based systems for frameworks such as PyTorch and TensorFlow.

AMD Instinct MI100 Specifications

| Specification | AMD Instinct MI100 |
|---|---|
| Compute Units | 120 |
| Stream Processors | 7680 |
| FP64 TFLOPS (Peak) | Up to 11.5 |
| FP32 TFLOPS (Peak) | Up to 23.1 |
| FP32 Matrix TFLOPS (Peak) | Up to 46.1 |
| FP16/FP16 Matrix TFLOPS (Peak) | Up to 184.6 |
| INT4/INT8 TOPS (Peak) | Up to 184.6 |
| bFloat16 TFLOPS (Peak) | Up to 92.3 |
| HBM2 ECC Memory | 32GB |
| Memory Bandwidth | Up to 1.23 TB/s |
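The peak figures in the table can be cross-checked with simple arithmetic. The Python sketch below assumes a per-clock, per-compute-unit throughput for each data type (64 FLOPs for FP64, 128 for FP32, 256 for FP32 Matrix, 512 for bFloat16 and 1,024 for FP16 Matrix, counting a fused multiply-add as two operations). These rates are not stated in the article and are used only for illustration. If they hold, every row of the table should imply roughly the same engine clock.

```python
"""Sanity-check the MI100 peak figures from the spec table.

ASSUMPTION (not from the article): the per-clock, per-compute-unit
throughput for each data type, counting a fused multiply-add as 2 FLOPs.
"""

COMPUTE_UNITS = 120
STREAM_PROCESSORS = 7680

# Peak figures copied from the spec table (TFLOPS).
TABLE_PEAK_TFLOPS = {
    "FP64": 11.5,
    "FP32": 23.1,
    "FP32 Matrix": 46.1,
    "FP16 Matrix": 184.6,
    "bFloat16": 92.3,
}

# ASSUMPTION: FLOPs per clock per compute unit (illustrative values).
FLOPS_PER_CLOCK_PER_CU = {
    "FP64": 64,
    "FP32": 128,
    "FP32 Matrix": 256,
    "FP16 Matrix": 1024,
    "bFloat16": 512,
}


def implied_clock_ghz(peak_tflops: float, flops_per_clock_per_cu: int,
                      compute_units: int = COMPUTE_UNITS) -> float:
    """Engine clock (GHz) needed to reach `peak_tflops` at the assumed rate."""
    flops_per_clock = compute_units * flops_per_clock_per_cu
    return peak_tflops * 1e12 / flops_per_clock / 1e9


def implied_clocks() -> dict:
    """Map each data type in the table to the clock it implies."""
    return {
        name: implied_clock_ghz(TABLE_PEAK_TFLOPS[name], FLOPS_PER_CLOCK_PER_CU[name])
        for name in TABLE_PEAK_TFLOPS
    }


if __name__ == "__main__":
    print(f"Stream processors per CU: {STREAM_PROCESSORS // COMPUTE_UNITS}")  # 64
    for name, ghz in implied_clocks().items():
        print(f"{name:12s} implies a clock of ~{ghz:.3f} GHz")
```

Under these assumptions each data type implies a clock of about 1.50 GHz, which suggests the table's figures all scale from a single engine clock; the 7,680 stream processors also work out to 64 per compute unit.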

Key capabilities and features of the AMD Instinct MI100 accelerator include:

- All-New AMD CDNA Architecture – Engineered to power AMD GPUs for the exascale era and at the heart of the MI100 accelerator, the AMD CDNA architecture offers exceptional performance and power efficiency.

- Leading FP64 and FP32 Performance for HPC Workloads – Delivers industry-leading 11.5 TFLOPS peak FP64 performance and 23.1 TFLOPS peak FP32 performance, enabling scientists and researchers across the globe to accelerate discoveries in fields including life sciences, energy, finance, academia, government, defense and more.

- All-New Matrix Core Technology for HPC and AI – Supercharged performance for a full range of single and mixed precision matrix operations, such as FP32, FP16, bFloat16, Int8 and Int4, engineered to boost the convergence of HPC and AI.

- 2nd Gen AMD Infinity Fabric Technology – Instinct MI100 provides ~2x the peer-to-peer (P2P) peak I/O bandwidth over PCIe 4.0 with up to 340 GB/s of aggregate bandwidth per card with three AMD Infinity Fabric Links. In a server, MI100 GPUs can be configured with up to two fully-connected quad GPU hives, each providing up to 552 GB/s of P2P I/O bandwidth for fast data sharing.

- Ultra-Fast HBM2 Memory – Features 32GB of high-bandwidth HBM2 memory at a clock rate of 1.2 GHz, delivering an ultra-high 1.23 TB/s of memory bandwidth to support large data sets and help eliminate bottlenecks in moving data in and out of memory.

- Support for Industry’s Latest PCIe Gen 4.0 – Designed with PCIe Gen 4.0 support, providing up to 64GB/s of peak theoretical transport data bandwidth from CPU to GPU.
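The bandwidth figures in the list above can be reproduced with back-of-the-envelope arithmetic. The Python sketch below relies on assumptions the article does not state: a 4,096-bit HBM2 interface (four 1,024-bit stacks) with double-data-rate transfers, a PCIe 4.0 x16 link at 16 GT/s per lane, and a fully connected quad hive in which the 552 GB/s figure is spread evenly across all six GPU-to-GPU links. The function and constant names are illustrative only.

```python
"""Back-of-the-envelope checks for the MI100 bandwidth figures.

Values not taken from the article are marked ASSUMPTION.
"""
from math import comb


def hbm2_bandwidth_tb_s(clock_ghz: float, bus_width_bits: int) -> float:
    """HBM2 is double data rate: two transfers per clock on every pin."""
    bits_per_second = clock_ghz * 1e9 * 2 * bus_width_bits
    return bits_per_second / 8 / 1e12


def pcie_bandwidth_gb_s(gt_per_s: float, lanes: int, bidirectional: bool = True,
                        encoding_efficiency: float = 1.0) -> float:
    """Each transfer moves one bit per lane; divide by 8 to get bytes."""
    per_direction = gt_per_s * lanes * encoding_efficiency / 8
    return per_direction * (2 if bidirectional else 1)


def links_in_full_mesh(gpus: int) -> int:
    """A fully connected hive needs one link per pair of GPUs."""
    return comb(gpus, 2)


HBM_CLOCK_GHZ = 1.2          # from the article
HBM_BUS_BITS = 4096          # ASSUMPTION: four 1024-bit HBM2 stacks
PCIE4_GT_S = 16.0            # PCIe 4.0 per-lane transfer rate
PCIE_LANES = 16              # ASSUMPTION: x16 link
HIVE_GPUS = 4                # from the article ("quad GPU hives")
HIVE_P2P_GB_S = 552          # from the article
CARD_AGGREGATE_GB_S = 340    # from the article
LINKS_PER_CARD = 3           # from the article

if __name__ == "__main__":
    print(f"HBM2: {hbm2_bandwidth_tb_s(HBM_CLOCK_GHZ, HBM_BUS_BITS):.2f} TB/s")
    raw = pcie_bandwidth_gb_s(PCIE4_GT_S, PCIE_LANES)
    eff = pcie_bandwidth_gb_s(PCIE4_GT_S, PCIE_LANES, encoding_efficiency=128 / 130)
    print(f"PCIe 4.0 x16: {raw:.0f} GB/s raw, {eff:.1f} GB/s after 128b/130b encoding")
    links = links_in_full_mesh(HIVE_GPUS)
    per_link = HIVE_P2P_GB_S / links  # ASSUMPTION: bandwidth split evenly per link
    fabric = per_link * LINKS_PER_CARD
    print(f"Quad hive: {links} links, ~{per_link:.0f} GB/s per link")
    print(f"Per card: {fabric:.0f} GB/s fabric + "
          f"{CARD_AGGREGATE_GB_S - fabric:.0f} GB/s remainder")
```

Under these assumptions the HBM2 result rounds to the quoted 1.23 TB/s, and the 64 GB/s left over from the 340 GB/s per-card aggregate matches the PCIe 4.0 figure. That suggests the aggregate counts the PCIe link alongside the three Infinity Fabric links, although the article does not say so explicitly.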

Availability

The AMD Instinct MI100 accelerators are expected by the end of the year in systems from OEM and ODM partners in the enterprise markets, including Dell, Supermicro, GIGABYTE, and HPE.
