The AMD Instinct MI300 series takes a new approach to accelerator architecture for AI. It combines chiplet design, 3D packaging, and large pools of HBM3 memory to push forward both high-performance computing (HPC) and artificial intelligence. With this design, the MI300 series aims to redefine what is possible in computational power and efficiency.

MI300 Family Overview

The MI300 family has two members: the MI300X accelerator and the MI300A APU. There is a lot of ground to cover, and the differences between the two are subtle but important.

| Specification | MI300X | MI300A |
|---|---|---|
| Construction | 12 chiplets as a single device • Four IOD and eight XCD • Infinity Fabric AP and 3D packaging | 13 chiplets as a single APU • Four IOD, three CCD, and six XCD • Infinity Fabric AP and 3D packaging |
| CPU Cores | None | Three 8-core/16-thread x86 CCDs (24 cores total) |
| CPU Cache (L3) | N/A (no CPU; GPU L1 & L2 only) | 32 MB L3 per CCD, shared by its eight cores |
| HBM3 Capacity | 192 GB | 128 GB |
| Infinity Cache | • 256 MB at 17 TB/s peak BW • XCD bandwidth amplification • HBM power reduction • Multi-XCD cache coherence | • 256 MB at 17 TB/s peak BW • XCD bandwidth amplification • HBM power reduction • Multi-XCD and CCD cache coherence • Prefetcher for CPU memory latency |
| Unified Architecture | N/A | Unified HBM and Infinity Cache • CCD and XCD data sharing • Reduced data movement • Simplified programming |
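The table can also be read as a small data model. The Python sketch below encodes each package's chiplet mix and derives the totals that follow from it. It assumes 38 active compute units per XCD (the figure given in the CDNA 3 section of this article) and AMD's published 192 GB HBM3 capacity for the MI300X; the `Package` class and its field names are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass

# Assumption: 38 active CDNA 3 compute units per XCD, as quoted for MI300.
CUS_PER_XCD = 38
CORES_PER_CCD = 8     # eight Zen 4 cores per CCD
THREADS_PER_CORE = 2  # simultaneous multithreading (SMT)


@dataclass(frozen=True)
class Package:
    """Hypothetical model of one MI300 package, built from the table above."""
    name: str
    iods: int
    xcds: int
    ccds: int
    hbm_gb: int

    @property
    def chiplets(self) -> int:
        # IODs, XCDs, and CCDs all count toward the chiplet total.
        return self.iods + self.xcds + self.ccds

    @property
    def compute_units(self) -> int:
        return self.xcds * CUS_PER_XCD

    @property
    def cpu_cores(self) -> int:
        return self.ccds * CORES_PER_CCD

    @property
    def cpu_threads(self) -> int:
        return self.cpu_cores * THREADS_PER_CORE


MI300X = Package("MI300X", iods=4, xcds=8, ccds=0, hbm_gb=192)
MI300A = Package("MI300A", iods=4, xcds=6, ccds=3, hbm_gb=128)

for p in (MI300X, MI300A):
    print(f"{p.name}: {p.chiplets} chiplets, {p.compute_units} CUs, "
          f"{p.cpu_cores} cores/{p.cpu_threads} threads, {p.hbm_gb} GB HBM3")
# MI300X: 12 chiplets, 304 CUs, 0 cores/0 threads, 192 GB HBM3
# MI300A: 13 chiplets, 228 CUs, 24 cores/48 threads, 128 GB HBM3
```

The derived counts show the trade-off directly: the MI300A gives up two XCDs (76 compute units) to make room for three CPU dies.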

Zen 4 CPU Complex Die (CCD) and Enhancements

At the heart of the MI300A APU lies the ‘Zen 4’ CPU Complex Die (CCD), which contains eight multithreaded AMD ‘Zen 4’ x86 cores. Each core has 1 MB of private L2 cache, and the eight cores share 32 MB of L3 cache. The cores support simultaneous multithreading (SMT) and incorporate essential ISA updates, including BFLOAT16, VNNI, and AVX-512, with AVX-512 executed on a 256-bit data path. The memory system provides 48-bit virtual and 48-bit physical addressing, giving the CPU a very large address space.
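Because the MI300A carries three of these CCDs, the per-core and per-CCD figures multiply out to package-level totals. A minimal sketch, assuming the three-CCD count from the family table and taking the 48-bit addressing figure as stated above:

```python
# Assumptions: three CCDs per MI300A, eight cores per CCD, 1 MB private L2
# per core, and 32 MB of L3 shared within each CCD (figures from the text).
CCDS = 3
CORES_PER_CCD = 8
L2_PER_CORE_MB = 1
L3_PER_CCD_MB = 32
ADDRESS_BITS = 48  # 48-bit virtual and 48-bit physical addressing

total_cores = CCDS * CORES_PER_CCD
total_l2_mb = total_cores * L2_PER_CORE_MB
# Aggregate L3 across the package; a given core directly uses only the
# 32 MB L3 of its own CCD.
total_l3_mb = CCDS * L3_PER_CCD_MB
# A 48-bit byte address covers 2**48 bytes; 2**40 bytes is one TiB.
addressable_tib = 2 ** ADDRESS_BITS // 2 ** 40

print(f"{total_cores} cores, {total_l2_mb} MB L2, {total_l3_mb} MB L3")
print(f"{ADDRESS_BITS}-bit addressing spans {addressable_tib} TiB")
# 24 cores, 24 MB L2, 96 MB L3
# 48-bit addressing spans 256 TiB
```

The 256 TiB address space is far larger than the package's 128 GB of HBM3, so addressability is not a practical limit for a single MI300A.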

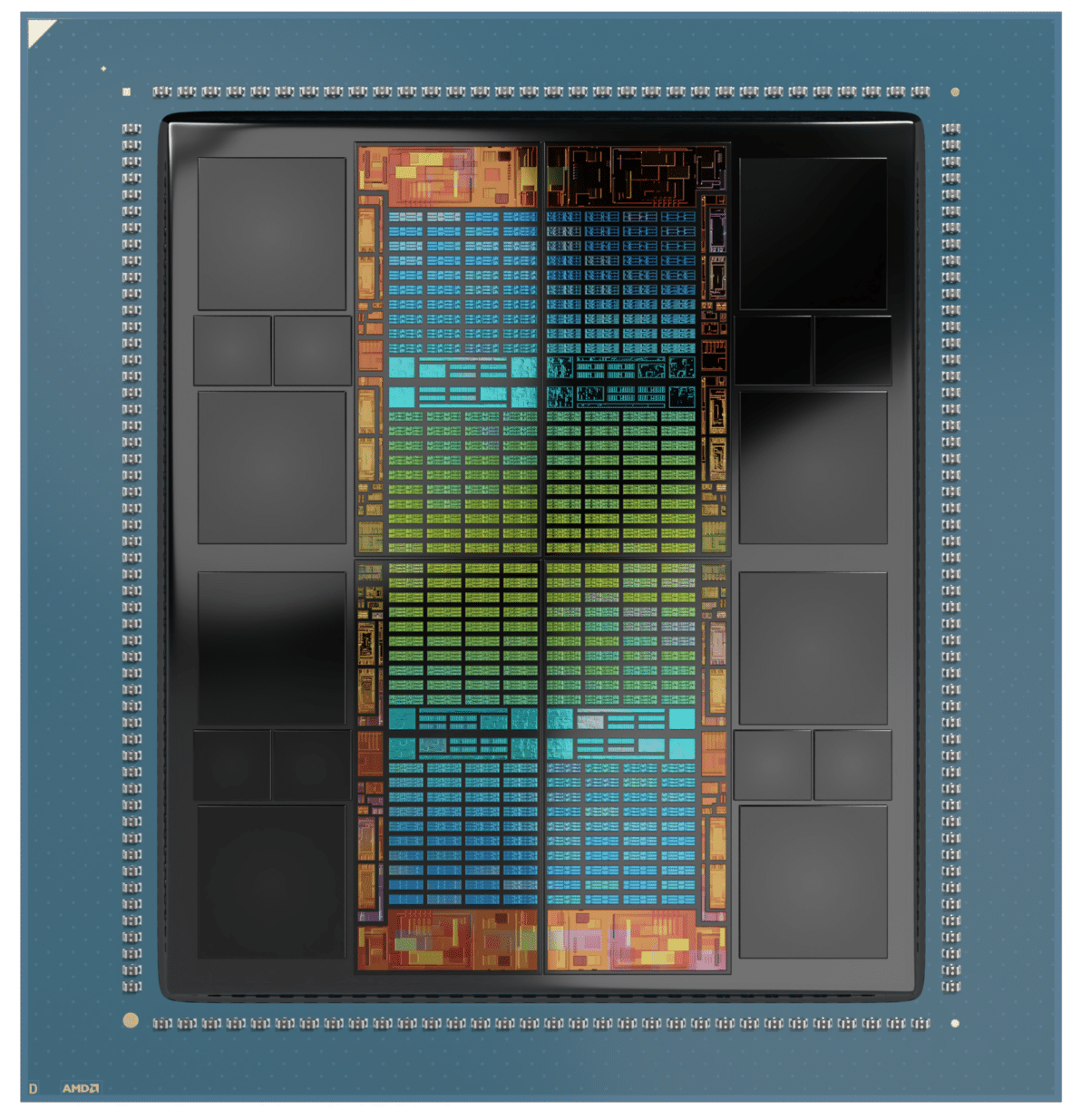

CDNA 3 Compute Unit and Memory System

The CDNA 3 compute unit brings notable enhancements to the MI300 series. Each Accelerator Complex Die (XCD) houses 38 CDNA 3 compute units backed by a 4 MB shared L2 cache, with an L1 cache optimized to deliver more bytes per FLOP. The compute units support numerical formats including TF32 and FP8, and their FP8 support complies with the Open Compute Project (OCP) FP8 standard. The memory system, built around AMD’s Infinity Cache and Infinity Fabric interface, is designed to maximize data-sharing efficiency and reduce latency.
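To make the FP8 formats concrete, the sketch below decodes one byte in the two encodings described by the OCP 8-bit floating point specification: E4M3 (4 exponent bits, bias 7, no infinities, a single NaN pattern per sign, maximum 448) and E5M2 (5 exponent bits, bias 15, IEEE-style infinities and NaNs, maximum 57344). It illustrates the bit layout only; it is not a model of how MI300 hardware converts or rounds values, and the function names are hypothetical.

```python
import math


def decode_fp8(byte: int, exp_bits: int, man_bits: int, bias: int,
               ieee_specials: bool) -> float:
    """Decode one 8-bit float laid out as sign | exponent | mantissa.

    ieee_specials=True  -> E5M2 style: all-ones exponent encodes Inf/NaN.
    ieee_specials=False -> E4M3 style: no Inf; only S.1111.111 is NaN.
    """
    if not 0 <= byte <= 0xFF:
        raise ValueError("expected a value in 0..255")
    sign = -1.0 if byte & 0x80 else 1.0
    exp = (byte >> man_bits) & ((1 << exp_bits) - 1)
    man = byte & ((1 << man_bits) - 1)
    exp_max = (1 << exp_bits) - 1

    if ieee_specials and exp == exp_max:
        return sign * math.inf if man == 0 else math.nan
    if not ieee_specials and exp == exp_max and man == (1 << man_bits) - 1:
        return math.nan
    if exp == 0:
        # Subnormal: no implicit leading 1, exponent fixed at 1 - bias.
        return sign * (man / (1 << man_bits)) * 2.0 ** (1 - bias)
    # Normal: implicit leading 1.
    return sign * (1 + man / (1 << man_bits)) * 2.0 ** (exp - bias)


def e4m3(byte: int) -> float:
    return decode_fp8(byte, exp_bits=4, man_bits=3, bias=7, ieee_specials=False)


def e5m2(byte: int) -> float:
    return decode_fp8(byte, exp_bits=5, man_bits=2, bias=15, ieee_specials=True)


print(e4m3(0x7E), e5m2(0x7B))  # 448.0 57344.0 (largest finite values)
```

The two encodings trade precision for range: E4M3 keeps an extra mantissa bit and is typically preferred for weights and activations, while E5M2's wider exponent suits gradients.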

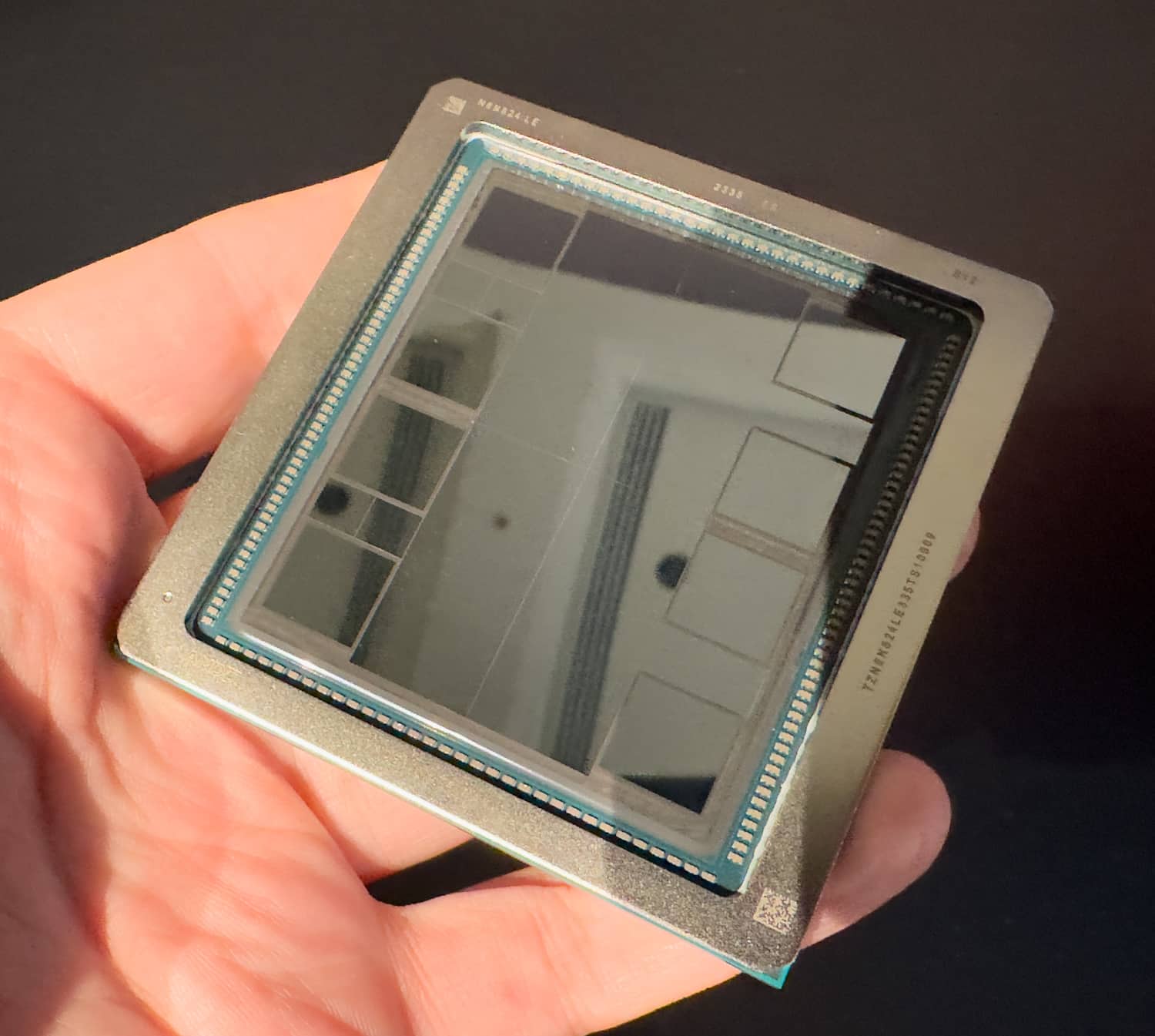

3.5D Hybrid Bond Packaging: A Leap in Integration

A key highlight of the MI300 family is its 3.5D hybrid bond packaging, which significantly increases the amount of compute and HBM (High Bandwidth Memory) that fits in a single package. This packaging method provides dense, power-efficient chiplet interconnects that improve overall system-level efficiency. The MI300 series also uses a modular construction approach, which allows flexible configurations and scalability.

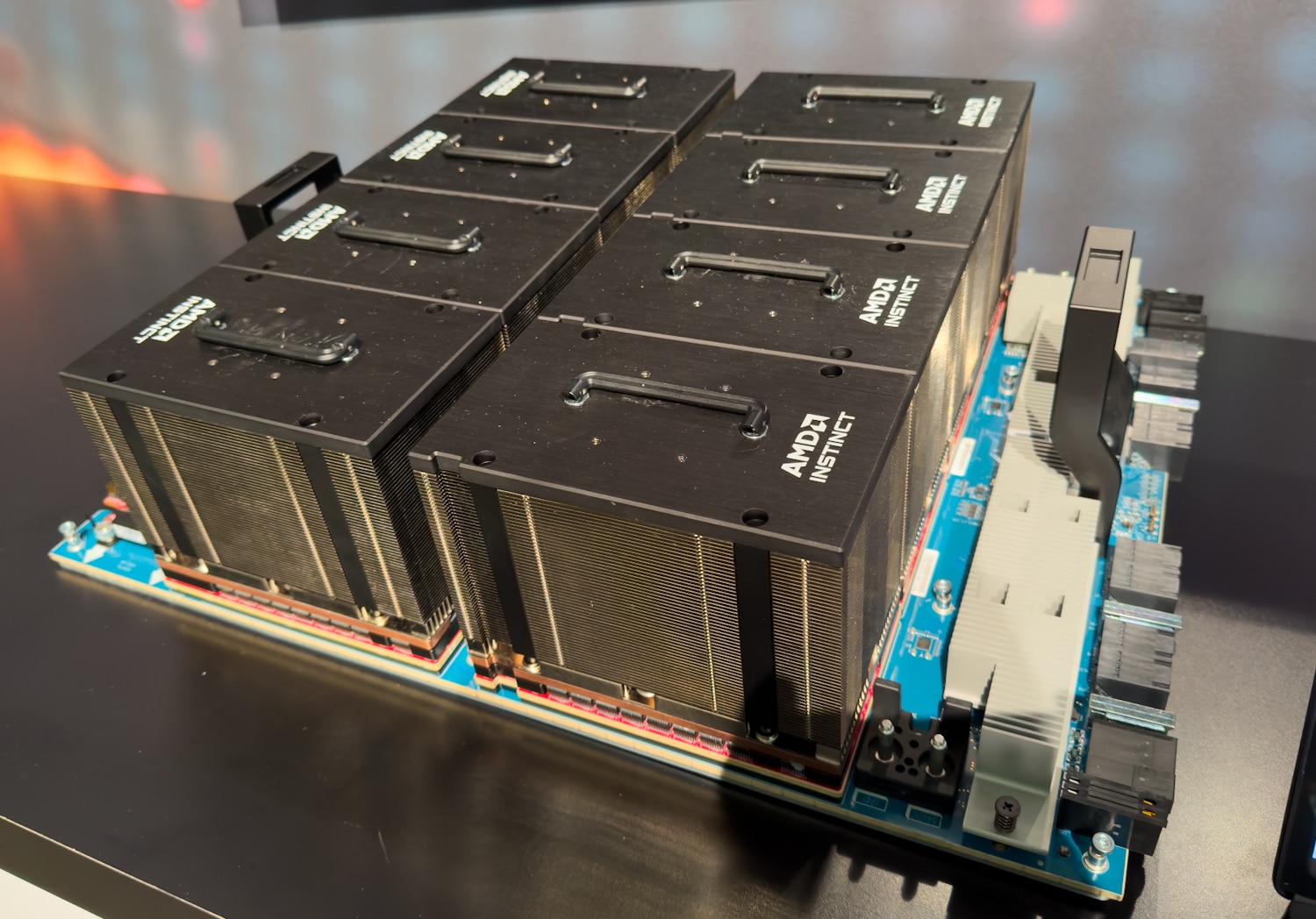

Advanced Power Management and Thermal Design

The MI300 family’s power management system is tailored to intensive computational workloads, with a design focus on power efficiency and heat extraction. Its thermal architecture supports thermal design power (TDP) levels above 550 W, so the parts can sustain performance under demanding conditions. The power delivery system is designed to accommodate the different stacked dies and their orientations while maintaining precise alignment and efficiency.

AMD MI300 Performance

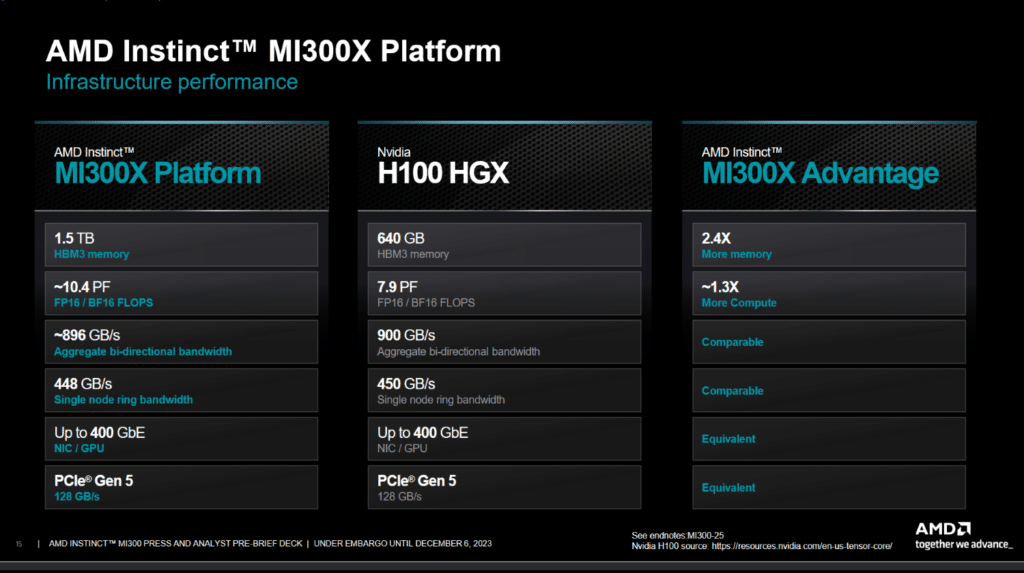

AMD has brought an interesting product to market with its Instinct MI300X Platform and is positioning it as a strong competitor to NVIDIA’s hard-to-find H100 HGX. The MI300X Platform comes with 1.5TB of HBM3 memory, far exceeding the 640GB of the H100 HGX, and every developer will be happy to have that extra capacity. In raw computational power, AMD takes the lead with around 10.4 petaFLOPS of FP16/BF16 performance, approximately 1.3 times that of the H100 HGX, which means higher peak throughput for complex calculations.
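The headline ratios can be checked with simple arithmetic. The sketch below uses only the figures quoted above plus one assumption: that both platforms are eight-GPU baseboards. The H100 HGX compute figure is not quoted directly, so it is worked backward from the stated 1.3x ratio and is only approximate.

```python
# Figures quoted in the text; eight GPUs per platform is an assumption
# about the baseboard configurations being compared.
GPUS_PER_PLATFORM = 8
MI300X_HBM_PER_GPU_GB = 192
H100_HGX_HBM_GB = 640
MI300X_PLATFORM_PFLOPS = 10.4  # FP16/BF16, as quoted
SPEEDUP_VS_H100_HGX = 1.3      # "approximately 1.3 times", as quoted

mi300x_hbm_gb = GPUS_PER_PLATFORM * MI300X_HBM_PER_GPU_GB  # quoted as "1.5TB"
capacity_ratio = mi300x_hbm_gb / H100_HGX_HBM_GB
h100_hbm_per_gpu_gb = H100_HGX_HBM_GB / GPUS_PER_PLATFORM
# Working backward from a rounded ratio gives only an approximate figure.
implied_h100_hgx_pflops = MI300X_PLATFORM_PFLOPS / SPEEDUP_VS_H100_HGX
mi300x_pflops_per_gpu = MI300X_PLATFORM_PFLOPS / GPUS_PER_PLATFORM

print(f"MI300X platform HBM3: {mi300x_hbm_gb} GB ({capacity_ratio:.1f}x the H100 HGX)")
print(f"H100 HBM per GPU: {h100_hbm_per_gpu_gb:.0f} GB")
print(f"Implied H100 HGX FP16/BF16: ~{implied_h100_hgx_pflops:.1f} PFLOPS")
print(f"MI300X FP16/BF16 per GPU: ~{mi300x_pflops_per_gpu:.2f} PFLOPS")
```

The memory gap (2.4x) is considerably wider than the compute gap (about 1.3x), which is why capacity is the MI300X platform's most distinctive advantage.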

On the other key specs, the two platforms are close to parity: they match in aggregate bidirectional bandwidth, both offer network interfaces of up to 400 GbE, and both provide PCIe Gen 5 interfaces at 128 GB/s. The contest between AMD and NVIDIA reflects the rapid pace of innovation in the HPC/AI sector, as manufacturers keep releasing new technology to meet growing demand for more memory and faster compute.
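The 128 GB/s PCIe figure is easiest to interpret as a raw, both-directions number. A minimal sketch, assuming a PCIe Gen 5 x16 link (32 GT/s per lane with 128b/130b encoding) and treating 400 GbE at its nominal line rate; the assumption about how the figure was counted is ours, not the vendors'.

```python
# Assumptions: the quoted 128 GB/s is a PCIe Gen 5 x16 link counted in both
# directions at the raw signaling rate; 400 GbE is taken at nominal line rate.
PCIE5_GT_PER_LANE = 32           # gigatransfers per second per lane
LANES = 16
ENCODING_EFFICIENCY = 128 / 130  # PCIe Gen 3 and later use 128b/130b encoding

raw_gbytes_per_dir = PCIE5_GT_PER_LANE * LANES / 8  # GB/s in one direction
raw_bidir = 2 * raw_gbytes_per_dir                   # GB/s, both directions
effective_bidir = raw_bidir * ENCODING_EFFICIENCY    # after encoding overhead
ethernet_400g_gbytes = 400 / 8                       # GB/s in one direction

print(f"PCIe Gen 5 x16: {raw_gbytes_per_dir:.0f} GB/s per direction, "
      f"{raw_bidir:.0f} GB/s raw bidirectional, "
      f"~{effective_bidir:.1f} GB/s after encoding")
print(f"400 GbE: {ethernet_400g_gbytes:.0f} GB/s per direction")
```

Under these assumptions, a single 400 GbE port (50 GB/s per direction) fits comfortably within one PCIe Gen 5 x16 link (64 GB/s raw per direction).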

Closing Thoughts

The AMD Instinct MI300 series, with its modular chiplet architecture, powerful CPU and GPU cores, and innovative packaging and power management, has much to offer in high-performance computing and artificial intelligence. Its design reflects a well-thought-out balance of power, performance, and efficiency and sets a high bar for future accelerators.

As the computing world eagerly awaits the full deployment of the MI300 series, it will be interesting to see whether AMD can keep pace with supply and technological advancements while continuing to drive innovation in HPC and AI. With the MI300 series, AMD offers a compelling solution that competes directly with NVIDIA’s H100 HGX.
