Arista Networks has announced a groundbreaking technology demonstration to create AI Data Centers that align compute and network domains into a single, managed AI entity.

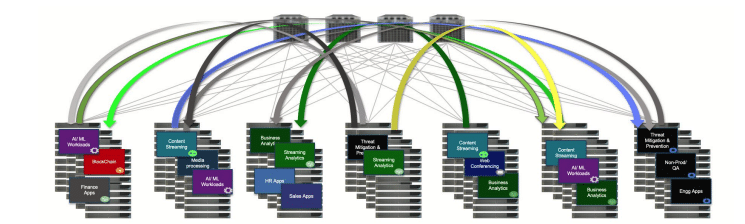

The initiative, undertaken in collaboration with NVIDIA, aims to build optimized generative AI networks that lower job completion times by letting customers configure, manage, and monitor AI clusters uniformly across critical components, including networks, NICs, and servers.

Unified Management for AI Clusters

As AI clusters and large language models (LLMs) continue to grow, so do the complexity and the number of components involved. These components include GPUs, NICs, switches, optics, and cables, which must work together as one coherent network. Uniform controls keep the AI servers that host the NICs and GPUs in sync with the AI network switches across every tier. Without this alignment, configurations can drift apart, particularly between NICs and the network switches, causing network issues that are difficult to diagnose and that can severely lengthen job completion times.

Coordinated Congestion Management

Large AI clusters require synchronized congestion management to prevent packet drops and under-utilization of GPUs. Coordinated, simultaneous management and monitoring are also necessary to optimize compute and network resources. At the core of Arista’s solution is an EOS-based agent enabling communication between the network and the host, coordinating configurations to optimize AI clusters.

Remote AI Agent for Enhanced Control

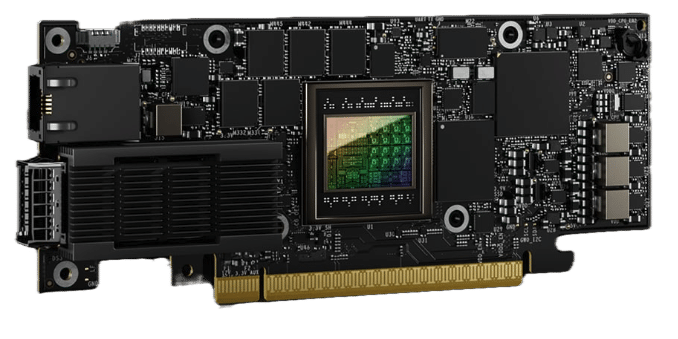

Arista EOS, running on Arista switches, can be extended to directly attached NICs and servers through a remote AI agent, providing a single point of control and visibility across an AI Data Center. The remote AI agent, either hosted on an NVIDIA BlueField-3 SuperNIC or running on the server and collecting telemetry from the SuperNIC, allows EOS on the network switch to configure and monitor the server's networking and to debug network issues on the server. This keeps network configuration and Quality of Service (QoS) consistent end to end, so that AI clusters can be managed and optimized as a cohesive solution.
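To make the idea of NIC-to-switch consistency concrete, here is a minimal Python sketch of the kind of check such an agent could run on each host-to-switch link. It is not Arista's implementation: `QosSettings` and `find_mismatches` are hypothetical names, and the compared fields (PFC-enabled priorities, ECN, and a DSCP-to-priority map) are assumed examples of common lossless-Ethernet QoS settings. The article does not say which settings the agent actually compares.

```python
from dataclasses import dataclass, field


@dataclass
class QosSettings:
    """Hypothetical snapshot of QoS settings on one end of a host-to-switch link."""
    pfc_priorities: frozenset[int]   # priorities with Priority Flow Control enabled
    ecn_enabled: bool                # whether ECN marking/response is turned on
    dscp_to_priority: dict[int, int] = field(default_factory=dict)


def find_mismatches(nic: QosSettings, switch_port: QosSettings) -> list[str]:
    """Return human-readable differences between a NIC and its switch port."""
    problems = []
    if nic.pfc_priorities != switch_port.pfc_priorities:
        problems.append(
            f"PFC priorities differ: NIC {sorted(nic.pfc_priorities)} "
            f"vs switch {sorted(switch_port.pfc_priorities)}"
        )
    if nic.ecn_enabled != switch_port.ecn_enabled:
        problems.append("ECN enabled on only one side of the link")
    # Check every DSCP value that either side maps, so one-sided entries are caught too.
    for dscp in sorted(set(nic.dscp_to_priority) | set(switch_port.dscp_to_priority)):
        a = nic.dscp_to_priority.get(dscp)
        b = switch_port.dscp_to_priority.get(dscp)
        if a != b:
            problems.append(f"DSCP {dscp} maps to priority {a} on NIC but {b} on switch")
    return problems


if __name__ == "__main__":
    nic = QosSettings(frozenset({3}), True, {26: 3})
    port = QosSettings(frozenset({3, 4}), True, {26: 4})
    for issue in find_mismatches(nic, port):
        print(issue)
```

A switch-side agent that can read both halves of this comparison can report a misalignment before it shows up as a stalled training job, rather than leaving operators to infer it from symptoms.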

John McCool, Chief Platform Officer for Arista Networks, stated, “Arista aims to improve the efficiency of communication between the discovered network and GPU topology to enhance job completion times through coordinated orchestration, configuration, validation, and monitoring of NVIDIA accelerated compute, NVIDIA SuperNICs, and Arista network infrastructure.”

This demonstration shows how an Arista EOS-based remote AI agent allows an integrated AI cluster to be managed as a single solution. By extending EOS capabilities to servers and SuperNICs via remote AI agents, Arista can continuously track and report performance issues or failures between hosts and networks, so that problems can be isolated quickly and their impact minimized. EOS-based network switches maintain an accurate, up-to-date view of the network topology, and extending EOS to SuperNICs and servers with the remote AI agent enables coordinated, end-to-end QoS optimization across all elements in the AI Data Center, ultimately reducing job completion times.
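The fault-isolation idea can be illustrated by comparing counters reported from both ends of the same link. The sketch below is hypothetical: `LinkSample`, `suspect_links`, the counter names, and the loss threshold are illustrative assumptions, not the agent's actual telemetry schema. It assumes each sample holds per-interval deltas rather than cumulative totals, and that the switch's receive counter counts only good frames.

```python
from dataclasses import dataclass


@dataclass
class LinkSample:
    """Hypothetical per-interval counter deltas from both ends of one link."""
    link: str                  # illustrative label, e.g. "node07:nic0 <-> leaf2:Et12"
    nic_tx_packets: int        # packets the NIC reports sending toward the switch
    switch_rx_packets: int     # good packets the switch port reports receiving
    switch_rx_fcs_errors: int  # frames the switch discarded for bad checksums


def suspect_links(samples: list[LinkSample],
                  loss_ratio_threshold: float = 1e-6) -> list[tuple[str, str]]:
    """Return (link, reason) pairs for links whose two ends disagree."""
    flagged = []
    for s in samples:
        if s.nic_tx_packets == 0:
            continue  # nothing sent this interval, nothing to compare
        lost = s.nic_tx_packets - s.switch_rx_packets
        ratio = lost / s.nic_tx_packets
        if s.switch_rx_fcs_errors > 0:
            # Corrupted frames at ingress usually point at the NIC, optic, or cable.
            flagged.append((s.link, f"{s.switch_rx_fcs_errors} FCS errors at switch ingress"))
        elif ratio > loss_ratio_threshold:
            flagged.append((s.link, f"{lost} packets unaccounted for ({ratio:.2e})"))
    return flagged
```

Because the sent and received counts come from two different devices, a disagreement in this sketch narrows the problem to one specific host-to-switch link instead of the whole fabric, which is the kind of localization a host-to-network agent makes possible.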

Next Up For Arista Networks

Arista Networks will showcase the AI agent technology at the Arista IPO 10th-anniversary celebration at the NYSE on June 5th, with customer trials expected to commence in the second half of 2024. This demonstration represents a significant step towards achieving a multi-vendor, interoperable ecosystem that enables seamless control and coordination between AI networking and AI compute infrastructure, addressing the growing demands of AI and LLM workloads.
