Ayar Labs and NVIDIA are collaborating on developing scale-out architectures enabled by high-bandwidth, low-latency, and ultra-low-power optical-based interconnect for future NVIDIA products. Focused on integrating Ayar Labs’ technology, the two companies hope to accelerate the development and adoption of optical I/O technology to support the growth of AI/ML applications and data volumes.

Meeting Performance and Power Requirements with Optical I/O

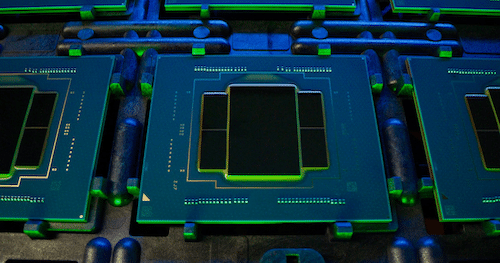

Optical I/O is positioned to change the performance and power trajectories of system design by enabling compute, memory, and networking ASICs to communicate with higher bandwidth, lower latency, and less power, over greater distances, than existing electrical I/O solutions. Optical I/O technology will enable emerging heterogeneous compute systems, disaggregated/pooled designs, and unified memory architectures to drive future data center innovation.

Ayar Labs CEO Charles Wuischpard believes current copper-based, compute-to-compute interconnects limit the state-of-the-art AI/ML training architectures needed to build future scale-out systems. According to Wuischpard, Ayar Labs’ work with NVIDIA will provide the foundation for next-generation solutions based on optical I/O, enabling the next leap in AI capabilities to address complex problems.

Delivering the ‘Next Million-X’ Speedup for AI with Optical Interconnect

Rob Ober, NVIDIA’s Chief Platform Architect for Data Center Products, commented that NVIDIA-accelerated computing has delivered a “million-X speedup in AI over the past decade.” The next million-X, he believes, will require advanced technologies like optical I/O to meet the bandwidth, power, and scale requirements of future AI/ML workloads.

AI model sizes continue to grow: NVIDIA believes that by 2023, models will have 100 trillion or more connections, a 600X increase from 2021, exceeding the technical capabilities of existing platforms. Traditional electrical-based interconnects will reach their bandwidth limits, resulting in lower application performance, higher latency, and increased power consumption. The collaboration between Ayar Labs and NVIDIA will focus on new interconnect solutions and system architectures based on optical I/O to address the scale, performance, and power demands of next-generation AI.

Please visit Ayar Labs to learn more.
