In the current computing market, there is growing competition among companies to develop machines that are AI-capable. The challenge isn’t who can build the biggest, baddest machine; it’s who can build the most heat-efficient machine.

When servers run AI workloads, they generate far more heat than typical servers running standard business processes and services. As a result, engineers designing the systems that house these servers must factor in more powerful cooling and heat-exchange components.

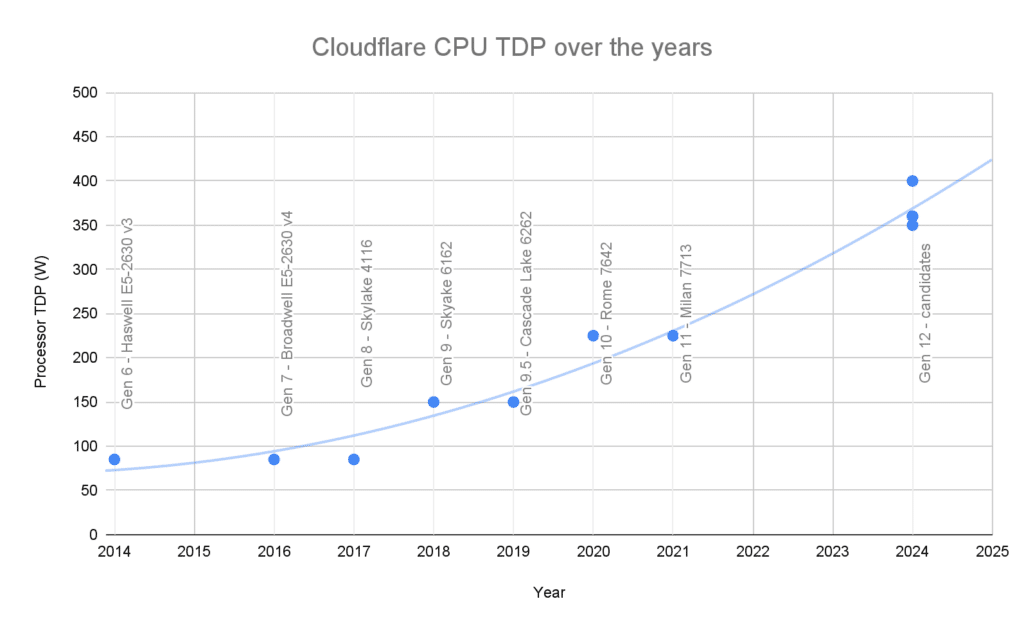

There is a relationship between the power a CPU socket draws and the heat a system is designed to dissipate under full load. That’s referred to as Thermal Design Power, or TDP. Companies use TDP as a guide to ensure they are using the most powerful chips in their servers while also designing the most physically efficient boxes and boards for density in a large data center environment. The larger the box, the more heat it can dissipate. However, larger boxes reduce server density in a data center. It’s a delicate balance.
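To make the density side of this trade-off concrete, here is a minimal sketch that counts how many servers fit in one cabinet when both rack space and the power budget are limits. The 42U rack height, the 40 kW cabinet budget, the 800 W per-server draw, and the `servers_per_rack` helper are all hypothetical values chosen for illustration, not figures from Cloudflare or any vendor.

```python
# Hypothetical sketch: how chassis height trades off against rack density.
# All figures (rack height, cabinet power budget, per-server power) are
# illustrative assumptions, not values from Cloudflare or any vendor.

RACK_UNITS = 42        # assumed usable rack height, in rack units (U)
RACK_POWER_W = 40_000  # assumed power budget for the cabinet, in watts


def servers_per_rack(chassis_u: int, server_power_w: int) -> int:
    """Return how many servers fit, limited by both space and power."""
    by_space = RACK_UNITS // chassis_u
    by_power = RACK_POWER_W // server_power_w
    return min(by_space, by_power)


if __name__ == "__main__":
    # A compact 1U box versus a taller 2U box with more room for cooling.
    for chassis_u in (1, 2):
        n = servers_per_rack(chassis_u, server_power_w=800)
        print(f"{chassis_u}U chassis: {n} servers per rack")
```

Under these assumptions, doubling the chassis height from 1U to 2U halves the count from 42 to 21 servers per rack, even though the power budget alone would allow 50.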

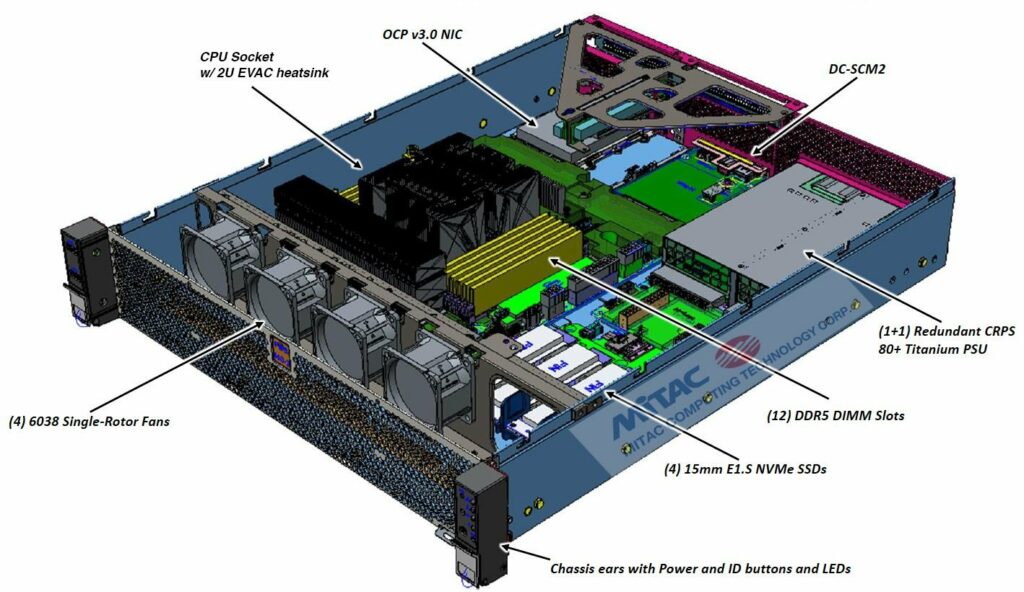

Cloudflare appears to have hit the upper limit of this relationship with its latest generation of compute servers. While planning the design of its 12th-generation server, the company found that it was no longer feasible to cram the latest AMD EPYC x86 processor and all its accouterments into the same compact chassis, especially the eight required 40 mm dual-rotor fans. Running its Workers AI program at that power level would keep those fans at a 100 percent duty cycle, full time.

Potential Future Avenues

Although you can increase the physical size of a server to accommodate larger fans and cooling fins for CPUs to dissipate heat, this approach becomes inefficient quickly.

Imagine you have a 40-kW cabinet deployed in a data center. In an air-cooled system, up to 30 percent of the energy fed into that cabinet could go to powering the server fans, leaving only about 28 kW for compute. In contrast, the same cabinet supported by a liquid cooling system could dedicate as much as 39 kW of its power to compute workloads. In short, liquid cooling lets you do more with the same power.
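The cabinet arithmetic above can be checked with a short sketch. The 40 kW budget, the 30 percent fan share, and the 39 kW liquid-cooled figure come from the text; the `compute_capacity_kw` helper is hypothetical, and treating all non-fan power as compute capacity is a simplifying assumption.

```python
# Sketch of the 40 kW cabinet arithmetic. The 40 kW budget, the 30 percent
# fan share, and the 39 kW liquid-cooled figure come from the text; treating
# everything not spent on fans as compute capacity is a simplifying assumption.

CABINET_KW = 40.0


def compute_capacity_kw(cabinet_kw: float, cooling_fraction: float) -> float:
    """Return the power left for compute after cooling takes its share."""
    return cabinet_kw * (1.0 - cooling_fraction)


air_cooled = compute_capacity_kw(CABINET_KW, 0.30)  # 30 percent to fans -> 28 kW
liquid_cooled = 39.0                                 # liquid-cooled figure from the text
implied_overhead = 1.0 - liquid_cooled / CABINET_KW  # share not left for compute

print(f"Air-cooled compute:    {air_cooled:.1f} kW")
print(f"Liquid-cooled compute: {liquid_cooled:.1f} kW")
print(f"Implied liquid-cooling overhead: {implied_overhead:.1%}")
print(f"Extra compute per cabinet: {liquid_cooled - air_cooled:.1f} kW")
```

With these inputs, the liquid-cooled cabinet delivers about 11 kW more compute, roughly 39 percent more than the air-cooled case, and the 39 kW figure implies that only about 2.5 percent of the budget goes to cooling overhead.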

Additionally, because water’s specific heat capacity is roughly four times that of air (per unit of mass), these new liquid-cooled servers can support higher-bin, faster processors with lower power requirements and greater reliability. By replacing or supplementing conventional air cooling with higher-efficiency liquid cooling, operators can improve the overall operational efficiency of the data center.
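The "roughly four times" comparison follows from the heat equation Q = m · c · ΔT. The sketch below uses approximate room-temperature textbook values for specific heat and density (these constants are assumptions, not figures from the article) to compare water and air per kilogram and per cubic metre; the `heat_absorbed_j` helper is hypothetical.

```python
# Sketch comparing how much heat water and air absorb for the same
# temperature rise, using Q = m * c * dT. Specific heats and densities
# are approximate room-temperature textbook values (assumptions).

C_WATER = 4186.0   # J/(kg*K), approximate specific heat of liquid water
C_AIR = 1005.0     # J/(kg*K), approximate specific heat of air at constant pressure
RHO_WATER = 997.0  # kg/m^3, approximate density of water near 25 degrees C
RHO_AIR = 1.2      # kg/m^3, approximate density of air near sea level


def heat_absorbed_j(mass_kg: float, specific_heat: float, delta_t_k: float) -> float:
    """Heat absorbed by a mass of coolant for a given temperature rise."""
    return mass_kg * specific_heat * delta_t_k


# Same mass (1 kg) and same 10 K temperature rise for both coolants.
per_kg_ratio = heat_absorbed_j(1.0, C_WATER, 10.0) / heat_absorbed_j(1.0, C_AIR, 10.0)
# Same volume: multiply specific heat by density for each coolant.
per_m3_ratio = (RHO_WATER * C_WATER) / (RHO_AIR * C_AIR)

print(f"Per kilogram: water absorbs ~{per_kg_ratio:.1f}x the heat of air")
print(f"Per cubic metre: water absorbs ~{per_m3_ratio:,.0f}x the heat of air")
```

Per kilogram, the ratio comes out near 4.2, matching the article's figure; per unit volume, the gap is in the thousands because water is so much denser than air.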

Dell has demonstrated a 50 to 60 percent improvement in cooling and energy efficiency with liquid-cooling technology in its data centers. If Cloudflare were to stop enlarging its physical boxes and embrace the liquid cooling revolution, it would join the ranks of system designers who have successfully condensed storage and compute capacity while lowering the total cost of ownership and operation.