At the Open Compute Project Summit today, Facebook is announcing Lightning, its flexible NVMe JBOF (just a bunch of flash). Facebook is introducing Lightning as a flash building block, or flash sled. Lightning will allow data centers to scale out flash capacity across multiple applications while tuning the compute-to-storage ratio. Facebook is contributing this new JBOF to the Open Compute Project.

Facebook has been a heavy user of flash for some time now, deploying it for cache, database applications, and boot drives. While flash delivers the performance the company is looking for, it has a few drawbacks, such as not scaling effectively. Flash density doubles roughly every year and a half, which makes efficient scaling an issue. Facebook has been looking for ways to minimize the number of hardware building blocks while maximizing the total amount of flash available for given applications. Facebook saw disaggregating the hardware and software components as a possible way to improve its operational efficiency. Enter its JBOF, Lightning.

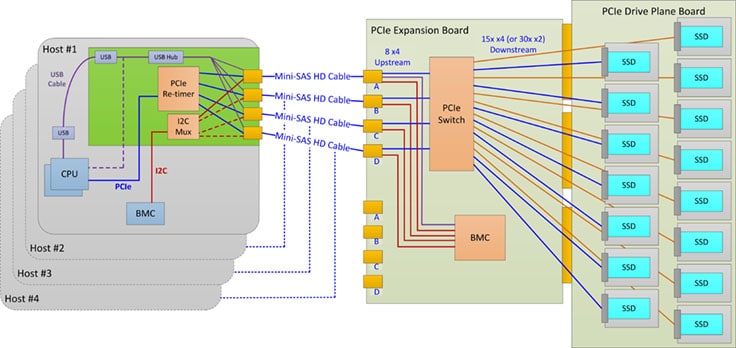

Lightning provides a PCIe gen 3 connection from end to end (CPU to SSD). In order to provide a faster time to market, maintain a common look, enable modularity in PCIe switch solutions, and enable flexibility in SSD form factors, Facebook is leveraging the existing Open Vault (Knox) SAS JBOD infrastructure. Implementing the new JBOF required a specific topology (pictured above) and several new boards:

- PCIe retimer card. This x16 PCIe gen 3 card is installed in the Leopard server, which is used as a head node for the JBOF. It is a simple, low-cost, low-power design to retransmit the PCIe signals over external mini-SAS HD (SFF-8644) cables of at least 2 meters.

- PCIe Expansion Board (PEB). The PCIe switch, the Lightning BMC, and all the switch support circuitry are located on this board. One PEB is installed per tray of SSDs and replaces the SAS Expander Board (SEB) used in Knox. This allows us to use a common switch board for both trays and also allows us to easily design new or different versions (with next generation switches, for example) without modifying the rest of the infrastructure. Each PEB features up to 32x lanes of PCIe as the uplink(s) to the retimer in the head node and 60x lanes of PCIe to the SSDs. The PEB can be hot-swapped if it fails without affecting the other tray in the system.

- PCIe Drive Plane Board (PDPB). The PDPB contains the 15x SFF-8639 (U.2) SSD connectors and support circuitry. Each SSD connector, or slot, is connected to 4x lanes of PCIe, PCIe clocks, PCIe reset, and additional side-band signals from the PCIe switch or switches on the PEB. Each SSD slot can also be bifurcated into 2x x2 ports, which allows us to double the number of SSDs per tray from 15x to 30x (from 30x per system to 60x per system) without requiring an additional layer of PCIe switching.
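The lane and drive counts quoted above can be checked with a little arithmetic. The following is a minimal sketch, not anything Facebook has published: the `TrayLayout` class and its property names are hypothetical, and the two-trays-per-system figure is inferred from the "30x per system" number.

```python
from dataclasses import dataclass

# Figures taken from the board descriptions above.
SLOTS_PER_TRAY = 15      # U.2 (SFF-8639) connectors on the PDPB
LANES_PER_SLOT = 4       # PCIe lanes wired to each slot
TRAYS_PER_SYSTEM = 2     # inferred: 30 SSDs per system at 15 per tray
MAX_UPLINK_LANES = 32    # "up to 32x lanes" from the PEB to the head node


@dataclass(frozen=True)
class TrayLayout:
    """Hypothetical helper: ports_per_slot is 1 (x4) or 2 (bifurcated 2 x2)."""
    ports_per_slot: int

    def __post_init__(self):
        if self.ports_per_slot not in (1, 2):
            raise ValueError("a slot is either one x4 port or two x2 ports")

    @property
    def lanes_per_ssd(self) -> int:
        return LANES_PER_SLOT // self.ports_per_slot

    @property
    def ssds_per_tray(self) -> int:
        return SLOTS_PER_TRAY * self.ports_per_slot

    @property
    def ssds_per_system(self) -> int:
        return self.ssds_per_tray * TRAYS_PER_SYSTEM

    @property
    def downstream_lanes(self) -> int:
        # Lanes the PCIe switch must provide toward the SSDs.
        return self.ssds_per_tray * self.lanes_per_ssd


for layout in (TrayLayout(1), TrayLayout(2)):
    print(layout.ssds_per_tray, layout.lanes_per_ssd,
          layout.downstream_lanes, layout.ssds_per_system)
```

Both layouts consume the same 60 downstream lanes, which is consistent with bifurcation doubling the drive count without an extra layer of switching. With at most 32 uplink lanes, the downstream side is oversubscribed by at least 60/32, or roughly 1.9 to 1.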

Benefits include:

- Lightning can support a variety of SSD form factors, including 2.5", M.2, and 3.5" SSDs.

- Lightning will support surprise hot-add and surprise hot-removal of SSDs to make field replacements as simple and transparent as SAS JBODs.

- Lightning will use an ASPEED AST2400 BMC chip running OpenBMC.

- Lightning will support multiple switch configurations, which allows us to support different SSD configurations (for example, 15x x4 SSDs vs. 30x x2 SSDs) or different head node to SSD mappings without changing HW in any way.

- Lightning will be capable of supporting up to four head nodes. By supporting multiple head nodes per tray, we can adjust the compute-to-storage ratio as needed simply by changing the switch configuration.
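To illustrate how a switch configuration could change the compute-to-storage ratio, here is a rough sketch that divides a tray's SSDs among one to four head nodes. The `assign_ssds` function and the even, contiguous split are assumptions made for illustration; the article does not describe the actual switch configuration format or mapping policy.

```python
MAX_HEAD_NODES = 4  # "up to four head nodes", per the list above


def assign_ssds(num_ssds: int, num_head_nodes: int) -> dict[int, list[int]]:
    """Hypothetical mapping: split SSD indices into contiguous, near-equal groups."""
    if not 1 <= num_head_nodes <= MAX_HEAD_NODES:
        raise ValueError("Lightning supports between 1 and 4 head nodes")
    if num_ssds < 0:
        raise ValueError("num_ssds must be non-negative")

    base, extra = divmod(num_ssds, num_head_nodes)
    mapping: dict[int, list[int]] = {}
    start = 0
    for node in range(num_head_nodes):
        # The first `extra` nodes take one additional SSD each.
        count = base + (1 if node < extra else 0)
        mapping[node] = list(range(start, start + count))
        start += count
    return mapping


# One tray of 15 x4 SSDs shared by 1, 2, or 4 head nodes.
for nodes in (1, 2, 4):
    sizes = [len(ssds) for ssds in assign_ssds(15, nodes).values()]
    print(nodes, "head node(s):", sizes)
```

Under this assumed policy, moving from one head node to four changes the ratio from 15 SSDs per server to 3 or 4 SSDs per server with no hardware change, which is the kind of adjustment the list above describes.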

While an all-NVMe design sounds like a wonderful idea, there are some concerns that need to be addressed before it can be deployed in data centers everywhere. NVMe drives can't be hot-plugged or hot-added in the same fashion SAS drives can, because PCIe hot-plugging is currently complicated. PCIe also lacks an in-band enclosure and chassis management scheme like the one SAS has, which makes management tricky. Part of the layout and the new boards deals with retaining signal integrity. Rather than using external PCIe cables, Facebook chose mini-SAS HD cables (SFF-8644). The cables carry a full complement of PCIe side-band signals and a USB connection for out-of-band management. Facebook is also dealing with NVMe power consumption: a 2.5" NVMe SSD can use as much as 25W, and Lightning limits power to 14W per slot.
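The per-slot power cap translates into a simple tray-level ceiling. The sketch below uses only the 14W and 15-slot figures from the article; the function names are hypothetical, and how Lightning actually handles a drive that wants more than the cap (throttling, rejection, or something else) is not stated.

```python
SLOTS_PER_TRAY = 15        # U.2 slots on one drive plane board
SLOT_POWER_CAP_W = 14.0    # Lightning's per-slot limit


def tray_ssd_power_ceiling_w(slots: int = SLOTS_PER_TRAY,
                             cap_w: float = SLOT_POWER_CAP_W) -> float:
    """Upper bound on SSD power for one tray if every slot draws its full cap."""
    return slots * cap_w


def fits_slot(drive_max_w: float, cap_w: float = SLOT_POWER_CAP_W) -> bool:
    """True if a drive's maximum draw stays within the per-slot cap."""
    return drive_max_w <= cap_w


print(tray_ssd_power_ceiling_w())  # SSD power ceiling for one tray, in watts
print(fits_slot(25.0))             # a 25W-class 2.5" NVMe drive vs. the cap
```

At 14W per slot, the SSDs in one 15-slot tray can draw at most 210W combined, whereas fifteen uncapped 25W drives could reach 375W.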

Lightning is designed to be a flexible, scalable solution for flash. It supports multiple SSD form factors and multiple head nodes while using a power level that makes sense for its targeted IOPS/TB. Adding this solution to OCP should bolster the NVMe ecosystem and accelerate the adoption of NVMe SSDs.
