Google Gemma 3 and AMD Instella AI Models elevate multimodal and enterprise AI capabilities, reshaping AI performance standards.

Google and AMD have announced significant developments in artificial intelligence. Google introduced Gemma 3, the latest generation of its open-weight AI model series. At the same time, AMD announced integration with the Open Platform for Enterprise AI (OPEA) and launched its Instella language models.

Google’s Gemma 3: Multimodal AI Efficiency on Minimal Hardware

The March 12 release of Gemma 3 builds upon the success of Gemma 2. The new model introduces multimodal capabilities, multilingual support, and enhanced efficiency, enabling advanced AI performance even on limited hardware.

Gemma 3 is available in four sizes: 1B, 4B, 12B, and 27B parameters. Each variant comes in both base (pre-trained) and instruction-tuned versions. The larger models (4B, 12B, and 27B) offer multimodal functionality, seamlessly processing text, images, and short videos. Google’s SigLIP vision encoder converts visual inputs into tokens interpretable by the language model, enabling Gemma 3 to answer image-based questions, identify objects, and read embedded text.

Gemma 3 also expands its context window significantly, supporting up to 128,000 tokens (32,000 for the 1B model) compared with Gemma 2’s 8,192 tokens. This allows the model to handle far more information within a single prompt. Additionally, Gemma 3 supports over 140 languages, enhancing global accessibility.
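To make the context-window figure concrete, here is a minimal Python sketch of a budget check: does a prompt, plus the tokens reserved for the reply, fit inside a 128,000-token window? The function names and the idea of reserving output tokens are illustrative assumptions, not part of any Gemma API, and real token counts depend on the model's tokenizer.

```python
# Context window quoted in the article for Gemma 3 (in tokens).
GEMMA3_CONTEXT_TOKENS = 128_000


def fits_in_context(prompt_tokens: int, max_new_tokens: int,
                    window: int = GEMMA3_CONTEXT_TOKENS) -> bool:
    """Return True if the prompt plus the reserved output fits in the window."""
    if prompt_tokens < 0 or max_new_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return prompt_tokens + max_new_tokens <= window


def remaining_tokens(prompt_tokens: int,
                     window: int = GEMMA3_CONTEXT_TOKENS) -> int:
    """Tokens left for generation after the prompt (never negative)."""
    return max(window - prompt_tokens, 0)


if __name__ == "__main__":
    # Hypothetical request: a 100,000-token document and an 8,000-token reply.
    print(fits_in_context(100_000, 8_000))
    print(remaining_tokens(100_000))
```

A check like this is typically run before sending a request, so that an oversized prompt can be trimmed or split rather than rejected by the model server.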

LMSYS Chatbot Arena Rankings

Gemma 3 has quickly emerged as a top-performing AI model on LMSYS Chatbot Arena, a benchmark evaluating large language models based on human preferences. Gemma-3-27B achieved an Elo score of 1338, ranking ninth globally. This places it ahead of notable competitors such as DeepSeek-V3 (1318), Llama3-405B (1257), Qwen2.5-72B (1257), Mistral Large, and Google’s previous Gemma 2 models.
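For readers wondering what a gap of a few dozen Elo points means in practice, the sketch below applies the classic Elo expected-score formula to the ratings quoted above. This is an approximation: it assumes the standard 400-point logistic scale, and the leaderboard's own rating fit may differ in detail. The function name is illustrative.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Classic Elo expected score of A against B (400-point logistic scale)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# Ratings as quoted in the article.
GEMMA_3_27B = 1338
COMPETITORS = {
    "DeepSeek-V3": 1318,
    "Llama3-405B": 1257,
    "Qwen2.5-72B": 1257,
}

if __name__ == "__main__":
    for name, rating in COMPETITORS.items():
        p = expected_score(GEMMA_3_27B, rating)
        print(f"Gemma-3-27B vs {name}: expected preference rate {p:.3f}")
```

Under this formula, a 20-point lead over DeepSeek-V3 corresponds to being preferred in roughly 53% of head-to-head comparisons, so small rating gaps translate into modest differences in human preference.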

AMD Strengthens Enterprise AI with OPEA Integration

AMD announced its support for the Open Platform for Enterprise AI (OPEA) on March 12, 2025. This integration connects the OPEA GenAI framework with AMD’s ROCm software stack, enabling enterprises to efficiently deploy scalable generative AI applications on AMD data center GPUs.

The collaboration addresses key enterprise AI challenges, including model integration complexity, GPU resource management, security, and workflow flexibility. As a member of OPEA’s technical steering committee, AMD collaborates with industry leaders to enable composable generative AI solutions that are deployable across public and private cloud environments.

OPEA provides essential AI application components, including pre-built workflows, evaluation abilities, embedding models, and vector databases. Its cloud-native, microservices-based architecture ensures seamless integration through API-driven workflows.

AMD Launches Instella: Fully Open 3B-Parameter Language Models

AMD also introduced Instella, a family of fully open-source, 3-billion-parameter language models developed entirely on AMD hardware.

Technical Innovations and Training Approach

Instella models employ a text-only autoregressive transformer architecture with 36 decoder layers and 32 attention heads per layer, supporting sequences up to 4,096 tokens. The models use a vocabulary of approximately 50,000 tokens via the OLMo tokenizer.
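The shape figures above can be collected into a small Python sketch, together with the causal mask that makes a decoder "autoregressive" (each position attends only to itself and earlier positions). The class and function names are hypothetical; this is not AMD's configuration file, and dimensions the article does not state, such as the hidden size, are deliberately left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstellaShapeSketch:
    """Architecture figures quoted in the article (not an official config)."""
    num_decoder_layers: int = 36
    num_attention_heads: int = 32      # per layer
    max_sequence_length: int = 4096
    approx_vocab_size: int = 50_000    # article says "approximately 50,000"

    @property
    def total_attention_heads(self) -> int:
        """Heads summed over all decoder layers."""
        return self.num_decoder_layers * self.num_attention_heads


def causal_mask(seq_len: int) -> list[list[bool]]:
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(seq_len)] for i in range(seq_len)]


shape = InstellaShapeSketch()

if __name__ == "__main__":
    print(shape.total_attention_heads)
    for row in causal_mask(4):
        print(["x" if allowed else "." for allowed in row])
```

The lower-triangular pattern is what lets the model be trained to predict each token from the tokens before it, without seeing the ones that follow.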

Training followed a multi-stage pipeline and ran on 128 AMD Instinct MI300X GPUs across 16 nodes. Initial pre-training involved approximately 4.065 trillion tokens from diverse datasets covering coding, academics, mathematics, and general knowledge. A second pre-training stage refined problem-solving capabilities using an additional 57.575 billion tokens, targeting benchmarks such as MMLU, BBH, and GSM8k.

After pre-training, Instella underwent supervised fine-tuning (SFT) with 8.9 billion tokens of curated instruction-response data to improve its interactive capabilities. A final Direct Preference Optimization (DPO) phase aligned the model more closely with human preferences, using 760 million tokens of carefully selected data.
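The token counts and hardware figures in the two paragraphs above can be tallied with a short Python sketch. The stage labels and variable names are illustrative; the numbers are the ones quoted in the article.

```python
# Token budget per training stage, in billions of tokens (from the article).
STAGE_TOKENS_BILLIONS = {
    "pre-training stage 1": 4065.0,          # ~4.065 trillion
    "pre-training stage 2": 57.575,
    "supervised fine-tuning": 8.9,
    "direct preference optimization": 0.76,  # 760 million
}

TOTAL_GPUS = 128
NODES = 16


def total_tokens_billions(stages: dict[str, float]) -> float:
    """Sum of tokens across all stages, in billions."""
    return sum(stages.values())


def stage_shares(stages: dict[str, float]) -> dict[str, float]:
    """Fraction of the total token budget consumed by each stage."""
    total = total_tokens_billions(stages)
    return {name: tokens / total for name, tokens in stages.items()}


gpus_per_node = TOTAL_GPUS // NODES

if __name__ == "__main__":
    print(f"Total: {total_tokens_billions(STAGE_TOKENS_BILLIONS):.3f}B tokens")
    for name, share in stage_shares(STAGE_TOKENS_BILLIONS).items():
        print(f"{name}: {share:.2%}")
    print(f"GPUs per node: {gpus_per_node}")
```

The tally comes to about 4.13 trillion tokens, with more than 98% spent in the first pre-training stage, and the cluster works out to 8 GPUs per node.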

Impressive Benchmark Performance

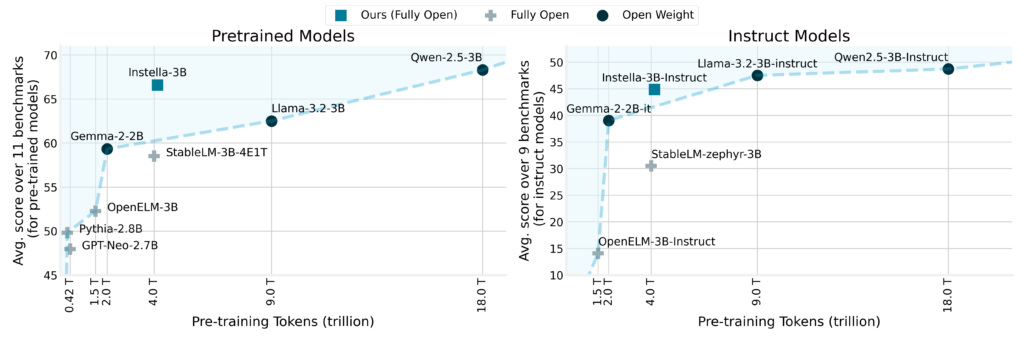

Benchmark results highlight Instella’s performance gains. The model outperformed existing fully open models by an average margin of over 8%, with notable results in benchmarks such as ARC Challenge (+8.02%), ARC Easy (+3.51%), WinoGrande (+4.7%), OpenBookQA (+3.88%), MMLU (+13.12%), and GSM8k (+48.98%).

Compared with leading open-weight models such as Llama-3.2-3B and Gemma-2-2B, Instella demonstrated superior or highly competitive performance across multiple tasks. The instruction-tuned variant, Instella-3B-Instruct, showed significant advantages over other fully open instruction-tuned models, with an average performance lead of over 14%, while also performing competitively against leading open-weight instruction-tuned models.

Full Open-Source Release and Availability

In line with AMD’s commitment to open-source principles, the company has released all artifacts related to Instella models, including model weights, detailed training configurations, datasets, and code. This complete transparency enables the AI community to collaborate, replicate, and innovate with these models.

Conclusion

These announcements from Google and AMD set the stage for an exciting year ahead in AI innovation. The industry momentum is clear, with Gemma 3 bringing multimodal capability to modest hardware and AMD’s Instella models and OPEA integration targeting enterprise AI. With NVIDIA’s GTC conference approaching and further releases anticipated, these developments look like just the beginning.
