Earlier this year, Intel published performance results comparing Intel Habana Gaudi2 with GPUs from market leader NVIDIA. The results illustrated Intel's commitment to AI and showed that AI is not a one-size-fits-all category. At the same time, a joint development between Intel AI researchers and Microsoft Research produced BridgeTower, a pre-trained multimodal transformer that delivers state-of-the-art performance on vision-language tasks. Hugging Face has integrated this model into Transformers, its open-source library for machine learning.

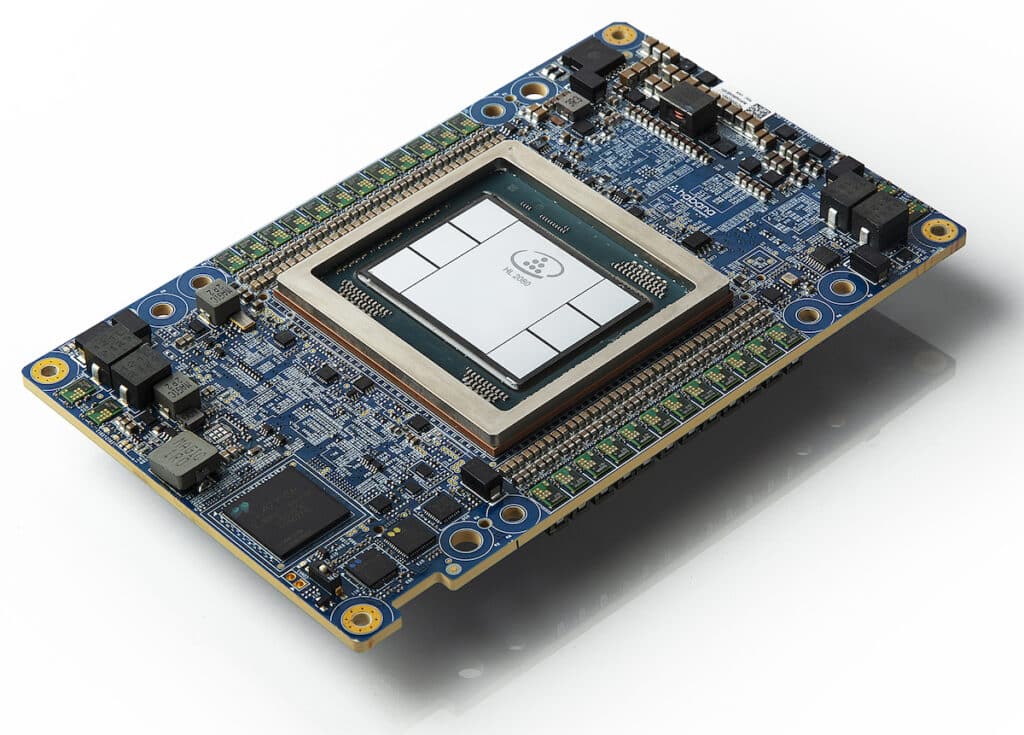

Habana Gaudi2 Mezzanine Card (Credit: Intel Corporation)

Hugging Face published the original benchmark results in a blog post on its website and later updated the AI training benchmark results for Habana Gaudi2 and NVIDIA's H100 GPU. According to those results, Gaudi2 outperformed the H100 when training the multimodal transformer BridgeTower, and Gaudi2 with Optimum Habana achieved 2.5 times the performance of the A100. The results validate Gaudi2's place not only in AI broadly but also in vision-language training.

Optimum Habana is the interface between the Transformers and Diffusers libraries and Habana’s Gaudi processor (HPU). It provides tools that enable easy model loading, training, and inference on single- and multi-HPU settings for various downstream tasks.

BridgeTower Background

Vision-language models use uni-modal encoders to learn representations of each modality. These representations are then fused or fed into a cross-modal encoder. BridgeTower sets itself apart with its bridge layers, which connect the top layers of the uni-modal encoders to each layer of the cross-modal encoder, allowing visual and textual information to be combined effectively at different levels.
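To make the bridge idea concrete, here is a deliberately tiny, stdlib-only sketch of one modality's path into the cross-modal encoder. It assumes a simplified bridge of the form LayerNorm(z + W·v), where z is the current cross-modal state and v is the output of one of the top uni-modal layers. Attention-based cross-modal layers are replaced with plain functions. The names `bridge` and `cross_modal_stream` and the toy weights are hypothetical; this is not BridgeTower's actual implementation.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Sequence[float]) -> Vector:
    """Multiply matrix w by vector x."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def layer_norm(x: Sequence[float], eps: float = 1e-5) -> Vector:
    """Normalize x to zero mean and unit variance (no learnable scale/shift)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def bridge(z: Sequence[float], v: Sequence[float], w: Matrix) -> Vector:
    """Toy bridge layer: LayerNorm(z + W v)."""
    return layer_norm([zi + pi for zi, pi in zip(z, matvec(w, v))])


def cross_modal_stream(
    uni_outputs: List[Vector],
    bridge_weights: List[Matrix],
    cross_layers: List[Callable[[Vector], Vector]],
) -> Vector:
    """Feed the top-M uni-modal layer outputs into the M cross-modal layers, one each."""
    m = len(cross_layers)
    top = uni_outputs[-m:]  # top M uni-modal layers, ordered bottom to top
    z = cross_layers[0](layer_norm(matvec(bridge_weights[0], top[0])))
    for v, w, layer in zip(top[1:], bridge_weights[1:], cross_layers[1:]):
        z = layer(bridge(z, v, w))  # bridge in the next uni-modal layer, then cross-modal layer
    return z
```

Because only the top M uni-modal layers are bridged in, changing a lower uni-modal layer leaves the output untouched in this toy. In the real model, the same pattern is applied to both the visual and the textual stream.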

BridgeTower, pre-trained on just 4 million images, sets new performance standards, reaching 78.73 percent accuracy on the Visual Question Answering (VQAv2) test. That surpasses the previous best model by 1.09 percentage points. When the model is scaled up, it reaches an even higher accuracy of 81.15 percent, surpassing models trained on much larger datasets.

Training a top-tier vision-language model such as BridgeTower means loading and preprocessing large numbers of images, so training speed depends heavily on how quickly data can be loaded, including with the help of specialized hardware. Fast data loading benefits vision models in general, which often face data-loading bottlenecks.

Hardware Insights

The updated benchmark tests were based on the latest hardware and software from NVIDIA and Habana Labs. The NVIDIA H100 Tensor Core GPU is NVIDIA's latest and fastest GPU, with 80GB of memory and a Transformer Engine designed to accelerate transformer models. Built on third-generation Tensor Core technology, the NVIDIA A100 Tensor Core GPU is widely available across cloud providers; its 80GB version offers more memory and higher speed than its 40GB counterpart.

Habana Gaudi2 is the second-generation AI hardware from Habana Labs. A single Gaudi2 server can house up to 8 HPUs, each with 96GB of memory. Gaudi2 is described as user-friendly, and combined with Optimum Habana, it makes porting Transformers-based code to Gaudi easier.

Benchmarking Details

The test used a BridgeTower checkpoint with 866 million parameters, pre-trained on English-language data using several training techniques and datasets. This checkpoint was then further fine-tuned on the New Yorker Caption Contest dataset. To keep results comparable, all platforms used the same settings and processed batches of 48 samples each.

A challenge in such experiments is that loading image data is time-consuming. Ideally, raw (encoded) data would be sent directly to the devices, which would handle the decoding themselves. The focus now shifts to optimizing this data-loading process.

Optimizing Data Loading

For faster image loading on the CPU, it can help to increase the number of subprocesses. In Transformers' TrainingArguments, the dataloader_num_workers=N argument sets the number of CPU subprocesses used for data loading. The default of 0 means data is loaded in the main process, which may not be efficient. Increasing the value can improve speed, but it also increases RAM consumption. Setting it to the number of CPU cores is a common recommendation, but it is best to experiment first to find the optimal configuration.

This benchmark had three distinct runs:

- A mixed-precision run across eight devices, where data loading shares the same process with other tasks (dataloader_num_workers=0).

- A similar run but with a dedicated subprocess for data loading (dataloader_num_workers=1).

- The same setup but with two dedicated subprocesses (dataloader_num_workers=2).
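Because the best value depends on the machine, runs like these amount to a small sweep over dataloader_num_workers. The stdlib-only sketch below shows that kind of experiment in miniature, under clearly simplified assumptions. A thread pool stands in for PyTorch's DataLoader worker processes, and the hypothetical `fake_decode` function simulates per-image loading latency with `time.sleep`. For real CPU-bound image decoding in Python, separate processes rather than threads are needed because of the global interpreter lock, and that is what dataloader_num_workers provides in Transformers.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterable, List


def load_epoch(decode: Callable[[int], int], n_items: int, num_workers: int) -> List[int]:
    """Load n_items samples in the main thread (num_workers=0) or via a worker pool."""
    if num_workers == 0:
        return [decode(i) for i in range(n_items)]
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        return list(pool.map(decode, range(n_items)))  # map preserves sample order


def sweep_num_workers(
    decode: Callable[[int], int], n_items: int, candidates: Iterable[int]
) -> Dict[int, float]:
    """Time one simulated epoch of loading for each candidate worker count."""
    timings: Dict[int, float] = {}
    for n in candidates:
        start = time.perf_counter()
        load_epoch(decode, n_items, n)
        timings[n] = time.perf_counter() - start
    return timings


def fake_decode(i: int) -> int:
    """Hypothetical stand-in for reading and decoding one image."""
    time.sleep(0.005)  # simulated 5 ms of loading latency
    return i
```

For example, `timings = sweep_num_workers(fake_decode, 40, (0, 1, 2))` followed by `min(timings, key=timings.get)` picks the fastest setting. In a real run, each candidate would instead be passed as `TrainingArguments(output_dir="out", dataloader_num_workers=n)`, and training throughput would be compared across runs.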

Hardware-Accelerated Data Loading with Optimum Habana

To further increase speed, data-loading tasks can be moved from the CPU to the accelerator devices themselves (HPUs on Gaudi2, GPUs on A100/H100). On Gaudi2, this is done with Habana's media pipeline. Instead of processing images entirely on the CPU, encoded images are sent directly to the devices, which decode and augment them. This approach makes fuller use of the devices' computing power but may increase device memory consumption.
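A rough size calculation shows why sending encoded images to the device is attractive, and why device memory use can grow once decoding happens there. The sketch compares a decoded 8-bit RGB image with an assumed compressed size. The 224x224 resolution and the 20,000-byte encoded size are illustrative assumptions, not figures from the benchmark; only the batch size of 48 comes from the test setup.

```python
def decoded_bytes(height: int, width: int, channels: int = 3, bytes_per_value: int = 1) -> int:
    """Size of an uncompressed image tensor (default: 8-bit RGB) in bytes."""
    return height * width * channels * bytes_per_value


def batch_transfer_bytes(batch_size: int, per_image_bytes: int) -> int:
    """Bytes moved from host to device for one batch."""
    return batch_size * per_image_bytes


ASSUMED_ENCODED_BYTES = 20_000  # hypothetical size of one compressed (e.g. JPEG) image

raw = decoded_bytes(224, 224)  # 150,528 bytes per decoded image (assumed resolution)
batch = 48                     # batch size used in the benchmark
decoded_batch = batch_transfer_bytes(batch, raw)                     # 7,225,344 bytes
encoded_batch = batch_transfer_bytes(batch, ASSUMED_ENCODED_BYTES)   # 960,000 bytes
```

Under these assumptions, shipping encoded images moves roughly 7.5 times less data per batch, while the decoded (and, after normalization, often 4-byte float) buffers now occupy device memory instead of host memory.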

Two effective ways to speed up training workflows that involve images are allocating more resources to data loading and using accelerator devices for image processing. When training advanced vision-language models like BridgeTower, these optimizations made Habana Gaudi2 with Optimum Habana substantially faster than its NVIDIA counterparts in this benchmark. Enabling them on Gaudi2 requires only a few additional training arguments.
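As a hedged illustration of the "few additional arguments" point, the hypothetical helper below builds keyword arguments for a mixed-precision training run and, when targeting Gaudi, adds HPU-specific fields accepted by Optimum Habana's GaudiTrainingArguments (use_habana, use_lazy_mode, gaudi_config_name). The helper name, the output directory, and the choice of the Habana/clip Gaudi configuration are assumptions for illustration. The Optimum Habana documentation lists the arguments a given version supports, including the option for hardware-accelerated data loading.

```python
from typing import Any, Dict


def training_kwargs(on_gaudi: bool, num_workers: int = 1) -> Dict[str, Any]:
    """Keyword arguments for a mixed-precision run (hypothetical helper)."""
    kwargs: Dict[str, Any] = {
        "output_dir": "bridgetower-out",  # placeholder path
        "bf16": True,                     # mixed precision
        "dataloader_num_workers": num_workers,
    }
    if on_gaudi:
        # Extra fields understood by optimum.habana's GaudiTrainingArguments.
        kwargs.update(
            use_habana=True,
            use_lazy_mode=True,
            gaudi_config_name="Habana/clip",  # assumed Gaudi config from the Hub
        )
    return kwargs


# Usage (requires the libraries; not executed here):
#   from optimum.habana import GaudiTrainingArguments
#   args = GaudiTrainingArguments(**training_kwargs(on_gaudi=True))
#   from transformers import TrainingArguments
#   args = TrainingArguments(**training_kwargs(on_gaudi=False))
```

Everything shared between the two targets stays identical; only the Gaudi-specific keys are added, which reflects how Optimum Habana layers on top of the familiar Transformers training API.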
