IBM will add AMD Instinct MI300X accelerators as a service on IBM Cloud to enhance performance and energy efficiency for GenAI models.

IBM has announced an expanded collaboration with AMD to introduce AMD Instinct MI300X accelerators as a service on IBM Cloud. This solution enhances performance and energy efficiency for generative AI (GenAI) models and high-performance computing (HPC) applications, addressing the increasing demand for scalable AI solutions among enterprise clients.

The partnership extends support for AMD Instinct MI300X accelerators across IBM’s AI and data ecosystem, including the watsonx AI platform and Red Hat® Enterprise Linux® for AI inferencing. This complements IBM Cloud’s existing portfolio, which already features Intel Gaudi 3 accelerators and NVIDIA H100 Tensor Core GPU instances, further enhancing its ability to deliver high-performance AI and HPC workloads.

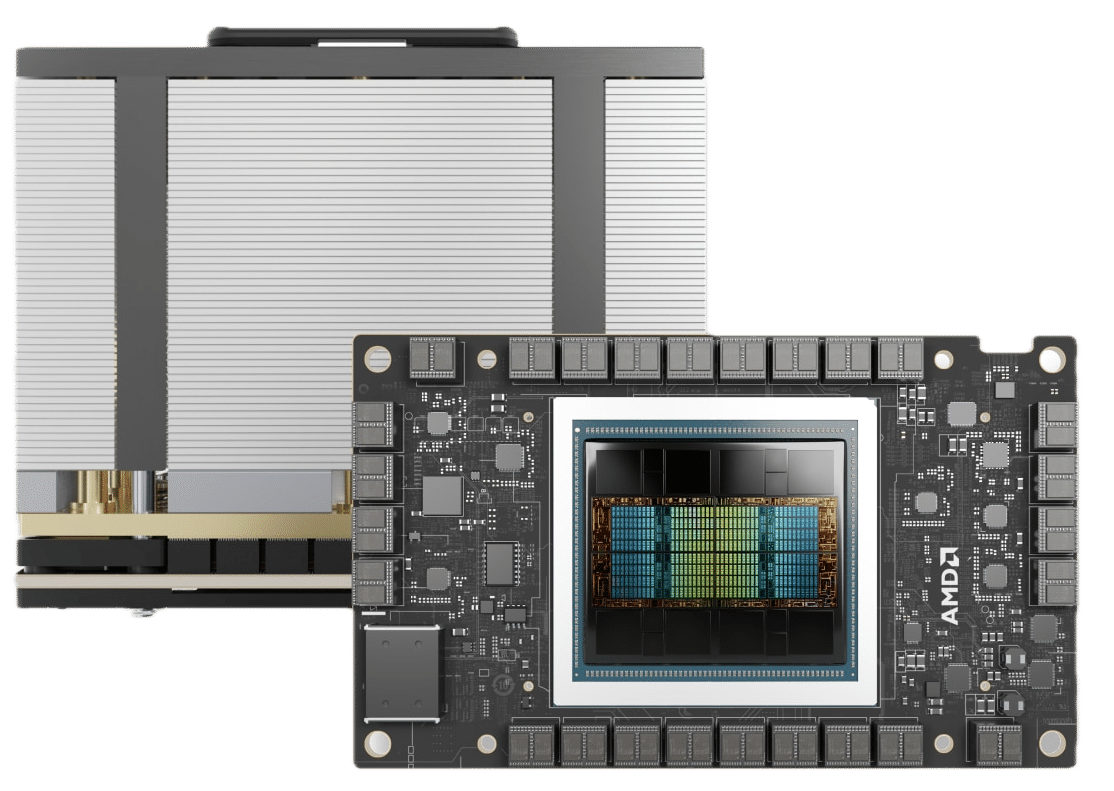

Advanced Capabilities of AMD Instinct MI300X Accelerators

The AMD Instinct MI300X accelerators, equipped with 192GB of high-bandwidth memory (HBM3), are engineered to support large model inferencing and fine-tuning for enterprise AI applications. Their high memory capacity enables enterprises to run larger AI models with fewer GPUs, reducing costs while maintaining performance and scalability. We recently tested these accelerators in our XE9680 review.
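To make the "fewer GPUs" point concrete, here is a rough back-of-the-envelope sketch. It only counts the memory needed to hold model weights and ignores KV cache, activations, and framework overhead, so real deployments will need more headroom. The function name, the 70-billion-parameter example model, and the 80GB comparison card are illustrative assumptions, not figures from the announcement.

```python
import math

def min_gpus_for_weights(params_billions: float, bytes_per_param: int, gpu_memory_gb: float) -> int:
    """Lower bound on GPUs needed just to hold the model weights.

    Hypothetical helper for illustration: ignores KV cache, activations,
    and runtime overhead, all of which increase real memory requirements.
    """
    # 1e9 parameters * bytes per parameter / 1e9 bytes per GB
    weights_gb = params_billions * bytes_per_param
    return math.ceil(weights_gb / gpu_memory_gb)

# Assumed example: a 70B-parameter model stored in 16-bit precision (2 bytes each).
print(min_gpus_for_weights(70, 2, 192))  # 192GB accelerator such as the MI300X
print(min_gpus_for_weights(70, 2, 80))   # an assumed 80GB accelerator for comparison
```

Under these simplified assumptions, the 140GB of weights fit on a single 192GB accelerator but must be split across at least two 80GB cards.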

By offering these accelerators as a service on IBM Cloud Virtual Servers for VPC and via containerized solutions like the IBM Cloud Kubernetes Service and IBM Red Hat OpenShift, IBM aims to provide enterprise clients with a secure, high-performance environment optimized for their AI workloads. This flexibility allows organizations to scale their AI deployments efficiently while maintaining robust security and compliance—particularly vital for clients in highly regulated industries.

Integration with IBM watsonx and Red Hat Platforms

To address the needs of generative AI inferencing workloads, IBM plans to integrate AMD Instinct MI300X accelerators with its watsonx AI platform. This will equip watsonx clients with additional AI infrastructure resources, enabling them to scale workloads seamlessly across hybrid cloud environments. The accelerators will also support Red Hat Enterprise Linux AI and Red Hat OpenShift AI platforms, allowing enterprises to deploy large language models (LLMs) like the Granite family with advanced alignment tools like InstructLab.

These integrations underscore the accelerators’ ability to handle compute-intensive workloads with greater flexibility, enabling enterprises to prioritize performance, cost efficiency, and scalability in their AI deployments.

Philip Guido, Executive Vice President and Chief Commercial Officer at AMD, highlighted the importance of performance and flexibility in processing compute-intensive workloads, especially as enterprises adopt larger AI models. He noted that AMD Instinct accelerators, paired with AMD ROCm software, offer extensive ecosystem support for platforms such as IBM watsonx AI and Red Hat OpenShift AI, enabling clients to execute and scale GenAI inferencing without compromising efficiency or cost.

Alan Peacock, General Manager of IBM Cloud, echoed these sentiments, emphasizing AMD and IBM’s shared vision to bring AI solutions to enterprises. He stated that leveraging AMD accelerators on IBM Cloud provides enterprise clients with scalable, cost-effective options to meet their AI objectives, backed by IBM’s commitment to security, compliance, and outcome-driven solutions.

Collaboration Brings Enhanced Security and Compliance

The collaboration leverages IBM Cloud’s renowned security and compliance capabilities, ensuring enterprises, including those in heavily regulated industries, can confidently adopt AI infrastructure powered by AMD accelerators. This commitment to security is integral to IBM and AMD’s strategy of supporting enterprise AI adoption at scale.

With this collaboration, IBM and AMD aim to provide enterprises with AI infrastructure that balances performance, scalability, and efficiency. The addition of AMD Instinct MI300X accelerators to IBM Cloud is intended to meet the growing demands of enterprise AI and HPC workloads.

Availability

IBM Cloud services featuring AMD Instinct MI300X accelerators are expected to be generally available in the first half of 2025, further expanding IBM’s high-performance AI and HPC solutions portfolio.
