Today, just in time for SuperComputing 2017, IBM announced new software aimed at delivering faster time to insight for High Performance Computing (HPC) and High Performance Data Analytics (HPDA) workloads, including frameworks such as Spark, TensorFlow, and Caffe that are used for AI, machine learning, and deep learning. This new software will be deployed for the Department of Energy’s CORAL Project at both Oak Ridge and Lawrence Livermore. The software includes new tools for developing AI models, a simplified UI for IBM Spectrum LSF Suite, and the latest version of IBM Spectrum Scale software.

Accelerated computing can be hugely beneficial to many customers, especially when systems are deployed and optimized for accelerated workloads. IBM’s Spectrum Computing family has been around for some time and is designed to match workloads to platforms so that each gets the performance it needs. The family supports mixed environments including both x86 and IBM POWER platforms, and is also ready for the next generation of IBM Power Systems.

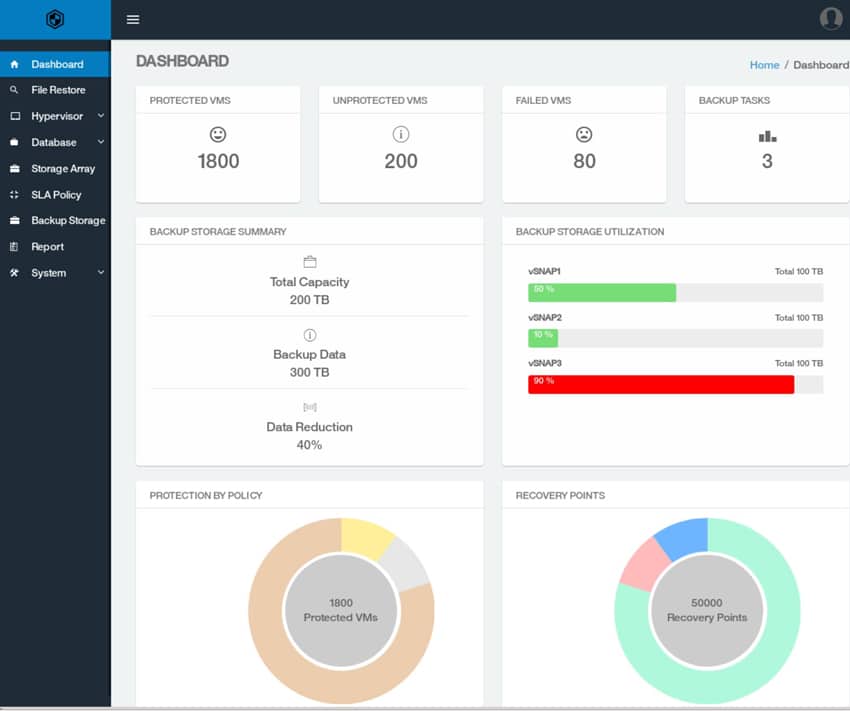

As stated above, the software announced today comes with new tools aimed at helping users develop AI models with the leading open source deep learning frameworks, like TensorFlow and Caffe, through the launch of Deep Learning Impact. IBM has simplified the UI for its IBM Spectrum LSF Suite, easing management and adding remote access capabilities. And its software can now move data for unified file, object, and HDFS workloads from where it is stored to where it is analyzed.

Capabilities include:

- Simplify deployment – cluster virtualization makes many systems work together as one, with easy-to-deploy packages that support multiple applications, users, and departments on shared compute and data services designed to rapidly manage clusters for HPC and deep learning/machine learning;

- Deliver AI for the enterprise – from centralized management and reporting, multitenant access, and end-to-end security, to full IBM support and services, our offerings have been designed to offer the level of function and support expected in an enterprise data center;

- Ease adoption of cognitive workloads – end users can access and use cluster resources across more applications, lowering the need for specialized cluster knowledge;

- Provide elasticity to hybrid cloud – simplifying cloud usage in distributed clustered environments with automated, workload-driven cloud provisioning and de-provisioning, so you only pay for what you use, and with intelligent workload and data transfer to and from the cloud, making cloud usage transparent to the end user;

- Open for the latest technology – IBM storage software supports the latest Open Source tools and protocols allowing the future adoption of Containers, Spark, or other modules like Deep Learning.

Use cases for this type of technology run the full gamut. The media and entertainment, security surveillance, manufacturing, and healthcare industries all generate massive amounts of data that can be combed through for insights. IBM gives a handful of examples of what parallel processing compute resources can deliver, such as identifying a car with a specific license plate, cross-referencing supply chains and weather forecasts, or searching for an anomaly across medical records.

Availability

The latest version of IBM Spectrum LSF Suites is expected to be generally available in late November. New versions of IBM Spectrum Conductor with Spark, Deep Learning Impact and IBM Spectrum Scale are expected to be available in early December.
