IBM recently released a survey showing growing interest in generative AI, although only 39% of organizations have started using it for innovation and research. That share is expected to rise: 69% of companies plan to use generative AI by 2025, up from just 29% in 2022.

To meet this rising demand, IBM is expanding its high-performance computing (HPC) offerings with NVIDIA H100 Tensor Core GPU instances on IBM Cloud. These GPUs are designed for AI workloads, including training large language models (LLMs). The new instances join IBM Cloud's existing computing solutions and help businesses put IBM's AI tools to work, such as the watsonx AI studio, data lakehouse, and governance toolkit.

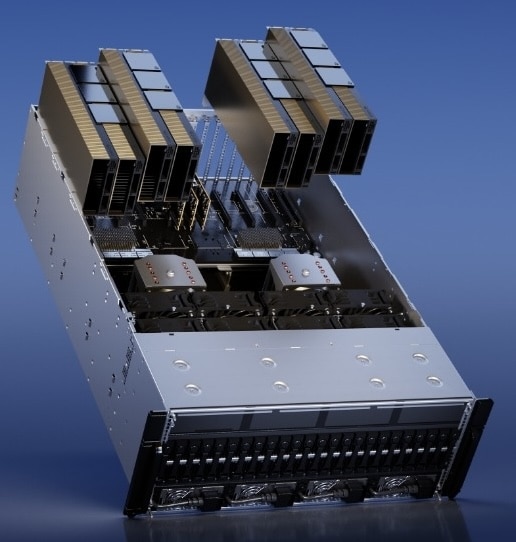

Building on its earlier NVIDIA A100 Tensor Core GPU offerings, IBM now offers NVIDIA H100 GPUs, which NVIDIA claims can deliver up to 30 times faster inference performance than the A100. This gives IBM Cloud customers more processing power while helping them manage the costs of AI model tuning and inference. Businesses can start with smaller models or AI applications such as chatbots and natural language search, then scale up to more demanding workloads on the H100 as their needs grow.

The NVIDIA GPUs are available on IBM Cloud through the Virtual Private Cloud (VPC) and managed Red Hat OpenShift environments. Combined with watsonx for building AI models and tools for managing data and security, they make it easier for businesses to scale their AI initiatives.

The NVIDIA H100 Tensor Core GPU instances are now available in IBM Cloud’s multi-zone regions (MZRs) across North America, Latin America, Europe, Japan, and Australia.
