The Intel Habana Gaudi2 deep learning accelerator and 4th Gen Intel Xeon Scalable processors have demonstrated impressive results in the MLPerf Training 3.0 benchmark, according to a press release by the company. The benchmark, published by MLCommons, is a widely recognized industry standard for AI performance.

The results challenge the prevailing industry narrative that generative AI and large language models (LLMs) can only run on NVIDIA GPUs. Intel’s portfolio of AI solutions offers competitive alternatives for customers seeking to move away from closed ecosystems that limit efficiency and scalability.

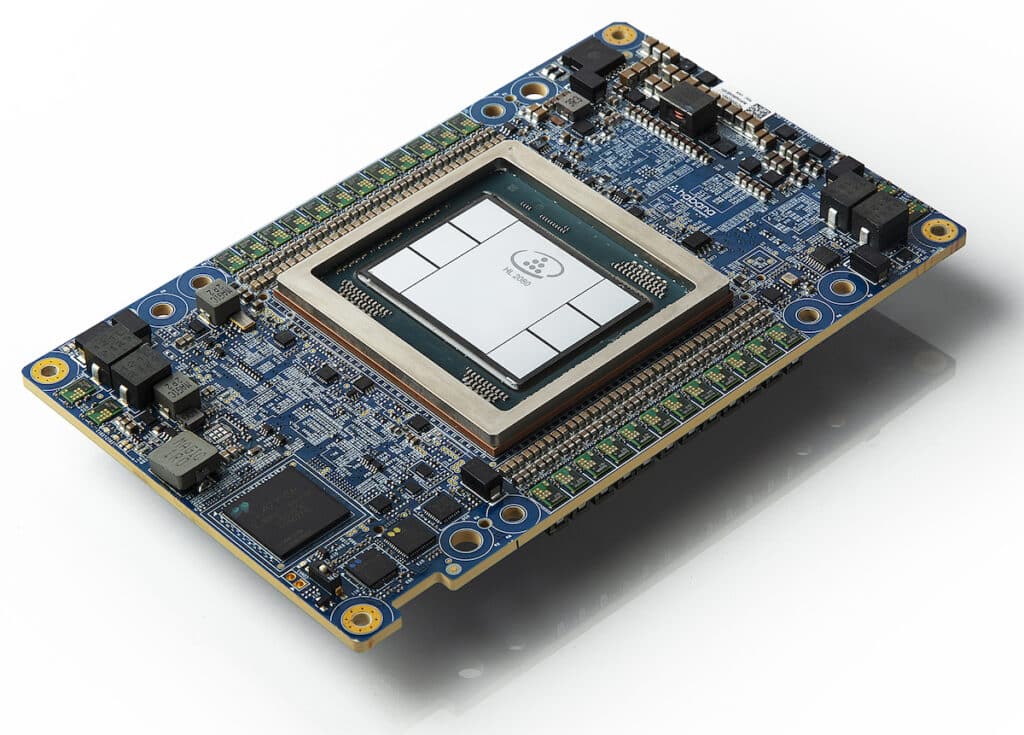

A photo shows the Habana Gaudi2 Mezzanine Card. On May 10, 2022, Habana Labs, Intel’s data center team focused on AI deep learning processor technologies, launched its second-generation deep learning processors for training and inference: Habana Gaudi2 and Habana Greco. (Credit: Intel Corporation)

What is MLPerf?

The MLPerf Training 3.0 benchmark suite measures the speed at which different systems can train models to meet a specified quality metric. The benchmarks cover various areas, including vision, language, and commerce, and use different datasets and quality targets.

Benchmark Details

| Area | Benchmark | Dataset | Quality Target | Reference Implementation Model |
|---|---|---|---|---|
| Vision | Image classification | ImageNet | 75.90% classification | ResNet-50 v1.5 |
| Vision | Image segmentation (medical) | KiTS19 | 0.908 Mean DICE score | 3D U-Net |
| Vision | Object detection (light weight) | Open Images | 34.0% mAP | RetinaNet |
| Vision | Object detection (heavy weight) | COCO | 0.377 Box min AP and 0.339 Mask min AP | Mask R-CNN |
| Language | Speech recognition | LibriSpeech | 0.058 Word Error Rate | RNN-T |
| Language | NLP | Wikipedia 2020/01/01 | 0.72 Mask-LM accuracy | BERT-large |
| Language | LLM | C4 | 2.69 log perplexity | GPT3 |
| Commerce | Recommendation | Criteo 4TB multi-hot | 0.8032 AUC | DLRM-dcnv2 |

In the field of vision, the benchmarks include image classification using the ImageNet dataset with a quality target of 75.90% classification accuracy. The reference model for this task is ResNet-50 v1.5. Other vision benchmarks include image segmentation using the KiTS19 medical dataset and object detection using the Open Images and COCO datasets.

For language tasks, the benchmarks include speech recognition using the LibriSpeech dataset with a quality target of a 0.058 Word Error Rate. The reference model for this task is RNN-T. Other language benchmarks include natural language processing (NLP) using the Wikipedia 2020/01/01 dataset and large language model (LLM) training using the C4 dataset.

In the commerce area, the benchmark is a recommendation task using the Criteo 4TB multi-hot dataset with a quality target of 0.8032 AUC. The reference model for this task is DLRM-dcnv2.

Measurement Metric

The benchmark suite measures the time to train a model on a specific dataset to reach a specified quality target. Due to the inherent variability in machine learning training times, the final results are obtained by running the benchmark multiple times, discarding the highest and lowest results, and then averaging the remaining results. Despite this, there is still some variance in the results, with imaging benchmark results having roughly +/- 2.5% variance and other benchmarks having around +/- 5% variance.
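The scoring rule described above (drop the highest and lowest runs, then average the rest) can be sketched in a few lines of Python. This is only an illustration of that description: the function name `trimmed_time_to_train` and the run times are hypothetical, and the number of runs MLPerf requires for each benchmark is not stated here.

```python
from statistics import mean


def trimmed_time_to_train(run_times_minutes):
    """Average the run times after dropping the single fastest and slowest run.

    Illustrative helper (hypothetical name), not part of any MLPerf tooling.
    """
    if len(run_times_minutes) < 3:
        raise ValueError("need at least three runs to drop both extremes")
    ordered = sorted(run_times_minutes)
    # ordered[0] is the fastest run and ordered[-1] the slowest; both are discarded.
    return mean(ordered[1:-1])


# Hypothetical run times in minutes, not taken from any submission.
runs = [20.9, 20.1, 20.5, 21.4, 20.3]
print(f"reported time-to-train: {trimmed_time_to_train(runs):.3f} minutes")
```

Dropping the extremes keeps a single unusually lucky or unlucky run from dominating the reported figure, which is why some run-to-run variance remains even after averaging.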

Benchmark Divisions

MLPerf encourages innovation in software and hardware by allowing participants to reimplement the reference implementations. There are two divisions in MLPerf: the Closed and Open divisions. The Closed division is designed to directly compare hardware platforms or software frameworks and requires using the same model and optimizer as the reference implementation. On the other hand, the Open division encourages the development of faster models and optimizers and allows any machine learning approach to achieve the target quality.

System Availability

MLPerf categorizes benchmark results based on system availability. Systems categorized as “Available” consist only of components that can be purchased or rented in the cloud. “Preview” systems are expected to be available in the next submission round. Lastly, systems categorized as “Research, Development, or Internal (RDI)” contain hardware or software that is experimental, in development, or for internal use.

Intel Habana Gaudi2 Shows Up

The Gaudi2 deep learning accelerator, in particular, showed strong performance on the GPT-3 large language model, and it was one of only two semiconductor solutions to submit results for GPT-3 LLM training. The Gaudi2 also offers significant cost advantages in server and system costs, making it a compelling price/performance alternative to NVIDIA’s H100.

The 4th Gen Xeon processors with Intel AI engines demonstrated that customers could build a universal AI system for data pre-processing, model training, and deployment, delivering AI performance, efficiency, accuracy, and scalability.

The Gaudi2 delivered an impressive time-to-train on GPT-3: 311 minutes on 384 accelerators, with near-linear 95% scaling from 256 to 384 accelerators. It also showed excellent training results on computer vision and natural language processing models. The Gaudi2 results were submitted “out of the box,” meaning customers can expect comparable performance when implementing Gaudi2 on premises or in the cloud.
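To make the 95% scaling figure concrete, here is a small Python sketch using one common definition of scaling efficiency: measured speedup divided by the ideal linear speedup. The article does not say how Intel computed its figure, so the definition, the function names, and the implied 256-accelerator time below are assumptions for illustration, not reported results.

```python
def scaling_efficiency(n_small, t_small, n_large, t_large):
    """Measured speedup divided by the ideal (linear) speedup.

    Assumed definition; the source does not specify how its 95% was derived.
    """
    speedup = t_small / t_large
    ideal_speedup = n_large / n_small
    return speedup / ideal_speedup


def implied_baseline_time(n_small, n_large, t_large, efficiency):
    """Time at the smaller scale that a given efficiency figure would imply."""
    return efficiency * (n_large / n_small) * t_large


# From the article: 311 minutes on 384 accelerators, 95% scaling from 256 to 384.
# The 256-accelerator time is back-calculated here, not a published number.
t_256 = implied_baseline_time(256, 384, 311.0, 0.95)
print(f"implied 256-accelerator time: {t_256:.1f} minutes")
print(f"efficiency check: {scaling_efficiency(256, t_256, 384, 311.0):.2f}")
```

Under this definition, perfectly linear scaling would cut training time by a third when going from 256 to 384 accelerators; 95% efficiency means the measured reduction falls slightly short of that ideal.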

The 4th Gen Xeon processors, as the lone CPU submission among numerous alternative solutions, proved that Intel Xeon processors provide enterprises with out-of-the-box capabilities to deploy AI on general-purpose systems, avoiding the cost and complexity of introducing dedicated AI systems.

Habana Gaudi2 8-node Cluster

In the Natural Language Processing (NLP) task using the Wikipedia dataset and the BERT-large model, the Gaudi2 achieved a training time of 2.103 minutes with 64 accelerators.

In the Image segmentation (medical) task using the KiTS19 dataset and the 3D U-Net model, the Gaudi2 achieved a training time of 16.460 minutes with TensorFlow and 20.516 minutes with PyTorch, both with eight accelerators.

In the Recommendation task using the Criteo 4TB dataset and the DLRM-dcnv2 model, the Gaudi2 achieved a training time of 14.794 minutes with PyTorch and 14.116 minutes with TensorFlow, both with eight accelerators.

In the closed division, 4th Gen Xeon processors trained the BERT and ResNet-50 models in less than 50 minutes and less than 90 minutes, respectively. With BERT in the open division, Xeon trained the model in about 30 minutes when scaling out to 16 nodes.

These results highlight the excellent scaling efficiency possible using cost-effective and readily available Intel Ethernet 800 Series network adapters that utilize the open-source Intel Ethernet Fabric Suite Software based on Intel oneAPI.

Market Impact

The Intel Habana Gaudi2 results in the MLPerf Training 3.0 benchmark underscore the company’s commitment to providing competitive and efficient AI solutions for a broad array of applications, from the data center to the intelligent edge. NVIDIA is clearly the clubhouse leader in this regard, and every server vendor is tripping over itself to show the industry a wide array of GPU-heavy boxes that are ready for AI workloads. But this data reaffirms that AI isn’t a one-size-fits-all category, and Intel is doing its part to give the industry choices. The net result is a win for organizations deploying AI, as more competition and choice are usually a very good thing.
