Among its many announcements today, Intel unveiled the new Intel Stratix 10 NX FPGA. The new FPGAs are optimized for AI, offering the higher bandwidth and lower latency that AI acceleration requires. Intel states that the new FPGAs will provide customizable, reconfigurable, and scalable AI acceleration for compute-intensive applications such as natural language processing and fraud detection.

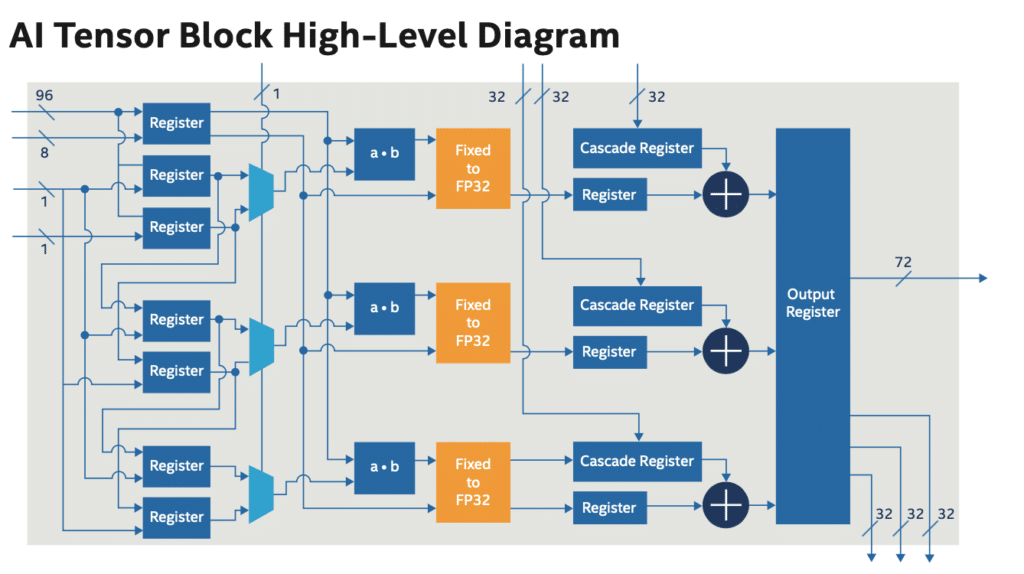

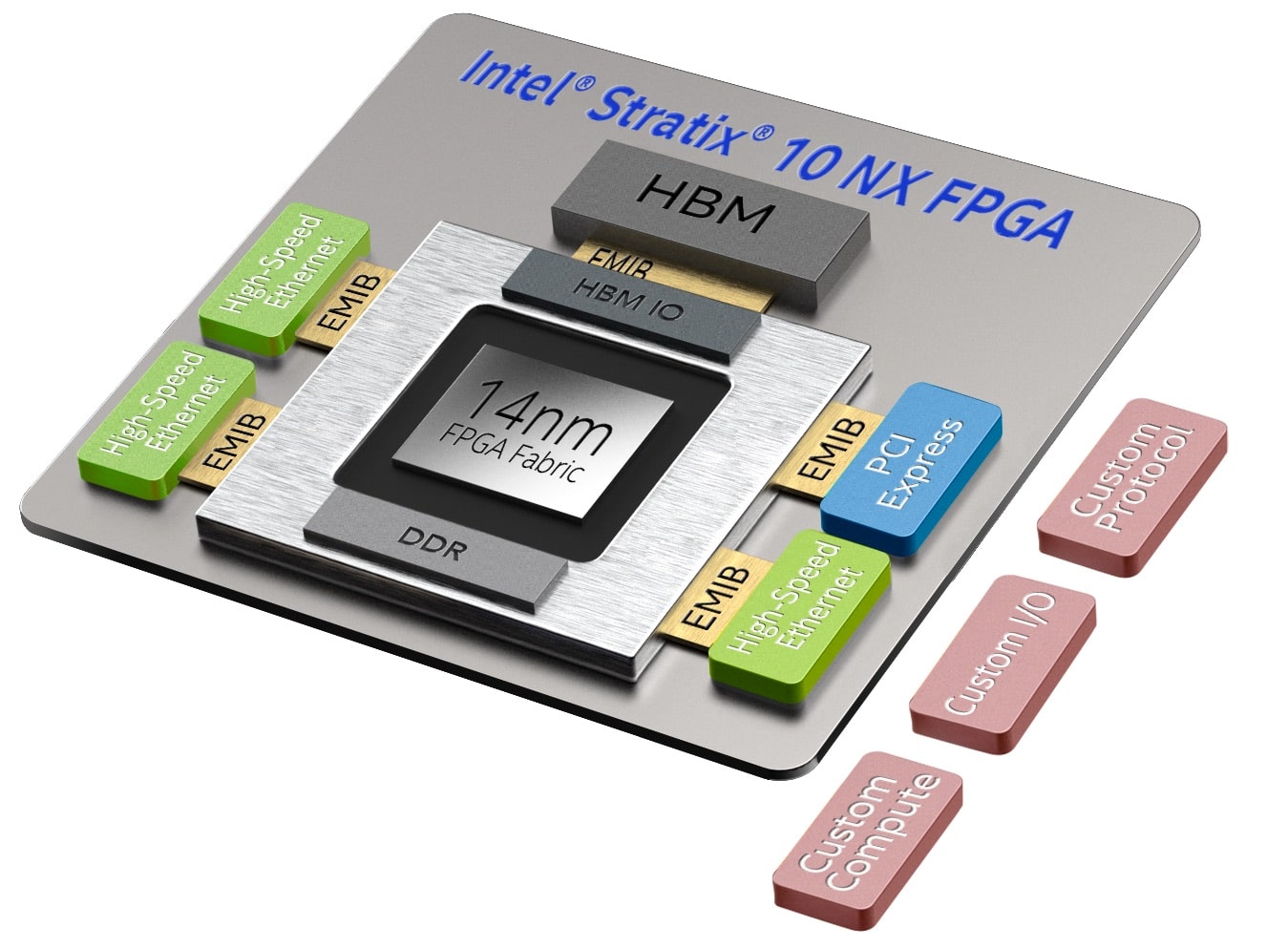

Like everything Intel announced today, the new FPGA is all about AI, and it lets customers tailor the hardware to their own AI workloads. To deliver the required performance and latency, the FPGAs integrate high-bandwidth memory (HBM) and high-performance networking. The biggest innovation, however, is a new set of AI-optimized arithmetic blocks that Intel calls AI Tensor Blocks. According to the company, these blocks contain dense arrays of the lower-precision multipliers typically used in AI applications. Their architecture is tuned for the matrix-matrix and vector-matrix multiplications common in AI, and is designed to handle both small and large matrix sizes.
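Intel does not detail the internal dataflow of the AI Tensor Block. As a generic illustration of the kind of arithmetic that arrays of low-precision multipliers accelerate, the Python sketch below performs an INT8 matrix-vector multiply with wide integer accumulation. The helper names (`quantize_int8`, `int8_matvec`, `dequantize`) and the symmetric per-tensor quantization scheme are assumptions chosen for illustration, not Intel's implementation.

```python
def quantize_int8(values, scale):
    """Symmetric per-tensor quantization (an assumed scheme): round(v / scale), clamped to [-127, 127]."""
    return [max(-127, min(127, round(v / scale))) for v in values]


def int8_matvec(weights_q, x_q):
    """Multiply an INT8 matrix (given as a list of rows) by an INT8 vector.

    Products are summed in a wide accumulator. Python ints are unbounded,
    so this only models the INT32 accumulator used in typical INT8 hardware.
    """
    out = []
    for row in weights_q:
        acc = 0  # stands in for a 32-bit accumulator
        for w, x in zip(row, x_q):
            acc += w * x  # each INT8 x INT8 product fits in 16 bits
        out.append(acc)
    return out


def dequantize(acc_values, w_scale, x_scale):
    """Map integer accumulators back to real values using both scales."""
    return [a * w_scale * x_scale for a in acc_values]


# Example: a 2x2 weight matrix times a 2-element vector.
W = [[0.5, -1.0], [0.25, 0.75]]
x = [1.0, 2.0]
w_scale = max(abs(v) for row in W for v in row) / 127
x_scale = max(abs(v) for v in x) / 127

W_q = [quantize_int8(row, w_scale) for row in W]
x_q = quantize_int8(x, x_scale)
y = dequantize(int8_matvec(W_q, x_q), w_scale, x_scale)
print(y)  # approximately [-1.5, 1.75], the exact floating-point result
```

Because each INT8 × INT8 product fits in 16 bits, hardware can pack many narrow multipliers into the area of a single wide one; only the accumulator needs the extra width.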

According to Intel, the new FPGA is uniquely suited to the trend toward lower latency and larger AI models, which require greater compute density, more memory bandwidth, and scalability across multiple nodes, as well as reconfigurable custom functions.
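One standard way to reason about the interplay between compute density and memory bandwidth is the roofline model: attainable throughput is the lesser of peak compute and arithmetic intensity (operations per byte moved) multiplied by memory bandwidth. The Python sketch below applies it to INT8 matrix multiplies. The peak-compute and bandwidth figures are hypothetical placeholders, not Stratix 10 NX specifications, and the byte count assumes each operand is read or written exactly once.

```python
def arithmetic_intensity(m, n, batch):
    """INT8 operations per byte for an (m x n) weight matrix times (n x batch) inputs.

    Assumes 1 byte per weight, input and output element, and that every
    operand crosses the memory interface exactly once (an idealization).
    """
    ops = 2 * m * n * batch  # one multiply and one add per multiply-accumulate
    bytes_moved = m * n + n * batch + m * batch
    return ops / bytes_moved


def attainable_ops_per_s(intensity, peak_ops_per_s, mem_bytes_per_s):
    """Roofline: throughput is capped by either compute or memory bandwidth."""
    return min(peak_ops_per_s, intensity * mem_bytes_per_s)


# Hypothetical device figures, for illustration only.
PEAK_OPS_PER_S = 100e12   # 100 TOPS
MEM_BYTES_PER_S = 500e9   # 500 GB/s

for batch in (1, 256):
    ai = arithmetic_intensity(4096, 4096, batch)
    perf = attainable_ops_per_s(ai, PEAK_OPS_PER_S, MEM_BYTES_PER_S)
    bound = "memory-bound" if perf < PEAK_OPS_PER_S else "compute-bound"
    print(f"batch={batch}: {ai:.1f} ops/byte, {perf / 1e12:.1f} TOPS, {bound}")
```

With these placeholder numbers, a single vector-matrix multiply (batch 1) sits near 2 ops/byte and is limited by memory bandwidth, while batching 256 inputs pushes intensity past the ridge point and makes the same multiply compute-bound. This illustrates why memory bandwidth matters so much for low-latency, small-batch inference.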

Key Intel Stratix 10 NX FPGA Attributes:

- AI Tensor Block: Tuned for AI arithmetic, the AI Tensor Block is estimated to provide up to 15X more INT8 throughput than the standard Intel Stratix 10 FPGA DSP block, delivering the high compute density needed for high-throughput AI inference applications.

- In-package 3D-stacked HBM2 high-bandwidth DRAM: Integrated memory stacks allow large, persistent AI models to be stored in the package, lowering latency and providing enough memory bandwidth to avoid memory-bound performance bottlenecks in large models.

- Transceiver data rates: With PAM4 transceivers running at up to 57.8 Gbps, Intel Stratix 10 NX FPGAs provide the scalability and flexibility to implement multi-node AI inference solutions, reducing or eliminating interconnect bandwidth as a limiting factor in multi-node designs. The Intel Stratix 10 NX FPGA also incorporates hard intellectual property (IP) such as PCI Express Gen3 x16 and 10/25/100G Ethernet media access control (MAC), physical coding sublayer (PCS), and forward error correction (FEC). Together, these transceivers and hard IP blocks provide a scalable, flexible connectivity solution that can adapt to market requirements.
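PAM4 signaling uses four amplitude levels, so each symbol carries two bits and a lane's symbol rate is half its bit rate. The short Python sketch below does that arithmetic for the 57.8 Gbps figure quoted above and sums raw bandwidth across lanes. The lane count in the example is hypothetical, and the calculation ignores FEC and encoding overhead, so usable payload throughput would be lower.

```python
import math

PAM4_LEVELS = 4
BITS_PER_SYMBOL = int(math.log2(PAM4_LEVELS))  # 2 bits per PAM4 symbol


def symbol_rate_gbaud(line_rate_gbps, bits_per_symbol=BITS_PER_SYMBOL):
    """Symbols per second (in GBd) needed to carry a given line rate."""
    return line_rate_gbps / bits_per_symbol


def aggregate_gbps(line_rate_gbps, lanes):
    """Raw aggregate line rate across lanes; ignores FEC and encoding overhead."""
    return line_rate_gbps * lanes


LANE_RATE_GBPS = 57.8
HYPOTHETICAL_LANES = 4  # illustrative only, not a device lane count

print(f"{symbol_rate_gbaud(LANE_RATE_GBPS):.1f} GBd per lane")
print(f"{aggregate_gbps(LANE_RATE_GBPS, HYPOTHETICAL_LANES):.1f} Gbps raw over {HYPOTHETICAL_LANES} lanes")
```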

Availability

The Intel Stratix 10 NX FPGAs are expected to be available in the second half of 2020.

Engage with StorageReview
