During a presentation at ISC 23, Intel highlighted its performance leadership for high-performance computing (HPC) and artificial intelligence (AI) workloads, shared its portfolio of future HPC and AI products, and announced ambitious plans for an international effort to use the Aurora supercomputer to develop generative AI models for science and society.

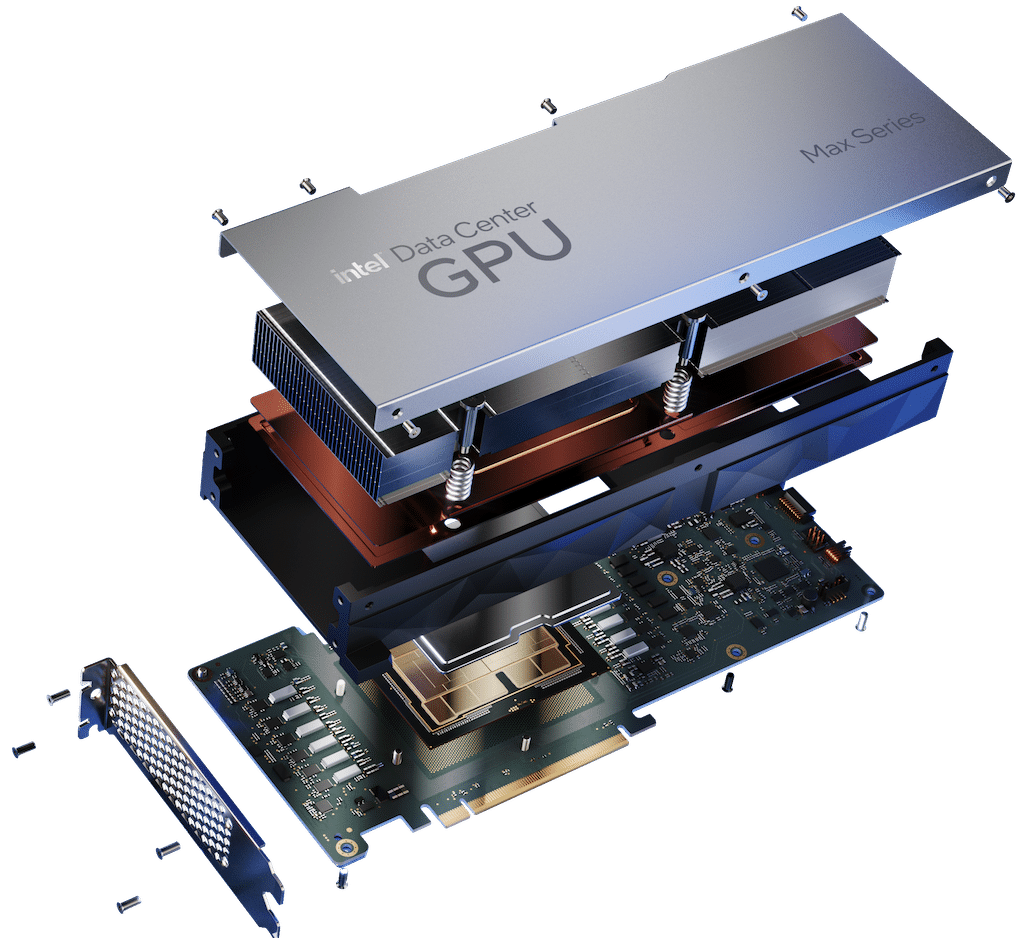

Competitive performance results topped the bill, and Intel presented them as clear wins over the competition. According to Intel, its Data Center GPU Max Series outperformed the NVIDIA H100 PCIe card by an average of 30 percent across diverse workloads. Independent results from software vendor Ansys showed a 50 percent speedup for the Max Series GPU over the H100 on AI-accelerated HPC applications.

The Xeon Max Series CPU delivered a 65 percent improvement over AMD’s Genoa processor on the High-Performance Conjugate Gradients (HPCG) benchmark while using less power. The 4th Gen Intel Xeon Scalable Processor, an HPC favorite, delivered an average 50 percent speedup over AMD’s Milan. BP’s newest 4th Gen Xeon HPC cluster showed an 8x performance increase over its previous-generation processor, with improved energy efficiency. The Gaudi2 deep learning accelerator performed competitively on deep learning training and inference, with up to 2.4x faster performance than NVIDIA’s A100.

Next-Gen CPUs and AI-Optimized GPUs

Jeff McVeigh, Intel corporate vice president and general manager of the Super Compute Group, introduced Intel’s next-generation CPUs, designed to meet high memory bandwidth demands. For Granite Rapids, Intel developed a new type of DIMM, Multiplexer Combined Ranks (MCR). MCR achieves speeds of 8,800 megatransfers per second (MT/s) based on DDR5 and more than 1.5 terabytes per second (TB/s) of memory bandwidth in a two-socket system.
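As a rough sanity check on the bandwidth figure, the sketch below multiplies out peak theoretical DDR5 bandwidth from the 8,800 MT/s transfer rate. It assumes a 64-bit (8-byte) data path per channel and 12 memory channels per Granite Rapids socket; the channel count is an assumption not stated in the announcement, and real sustained bandwidth will be lower than this theoretical peak.

```python
# Peak theoretical memory bandwidth estimate for a two-socket MCR DDR5 system.
# Assumptions (not from the announcement): 8 bytes per transfer per channel
# (64-bit data path, ECC bits excluded) and 12 channels per socket.
MT_PER_S = 8_800            # MCR DIMM transfer rate quoted by Intel
BYTES_PER_TRANSFER = 8      # assumed 64-bit DDR5 data path per channel
CHANNELS_PER_SOCKET = 12    # assumed channel count per Granite Rapids socket
SOCKETS = 2


def peak_bandwidth_gb_s(mt_per_s: int, bytes_per_transfer: int,
                        channels: int, sockets: int) -> float:
    """Return peak theoretical bandwidth in decimal GB/s."""
    transfers_per_second = mt_per_s * 1e6
    return transfers_per_second * bytes_per_transfer * channels * sockets / 1e9


per_channel = peak_bandwidth_gb_s(MT_PER_S, BYTES_PER_TRANSFER, 1, 1)
system = peak_bandwidth_gb_s(MT_PER_S, BYTES_PER_TRANSFER,
                             CHANNELS_PER_SOCKET, SOCKETS)

print(f"per channel: {per_channel:.1f} GB/s")
print(f"two-socket system: {system / 1000:.2f} TB/s")
```

Under these assumptions the peak works out to 70.4 GB/s per channel and roughly 1.69 TB/s for two sockets, consistent with the "more than 1.5 TB/s" figure Intel quoted.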

Intel also disclosed a new, AI-optimized x8 Max Series GPU-based subsystem from Supermicro, designed to accelerate deep learning training. OEMs are expected to offer solutions built on Max Series GPU x4 and x8 OAM subsystems and PCIe cards sometime this summer.

Intel’s next-generation Max Series GPU, Falcon Shores, will give customers the flexibility to implement system-level CPU and discrete GPU combinations for new and evolving workloads. Falcon Shores uses a modular, tile-based architecture that enables it to:

- Support HPC and AI data types from FP64 to BF16 to FP8.

- Enable up to 288GB of HBM3 memory with up to 9.8TB/s total bandwidth and vastly improved high-speed I/O.

- Support the CXL programming model.

- Present a unified GPU programming interface through oneAPI.
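The data types in the first bullet trade precision for throughput and memory footprint. The minimal sketch below illustrates their bit layouts using only the Python standard library: bfloat16 (BF16) keeps the sign and 8-bit exponent of an IEEE 754 float32 but only 7 mantissa bits, while FP8 is shown in the E4M3 encoding from the OCP 8-bit floating point specification. Which FP8 variant(s) Falcon Shores implements is not stated, so E4M3 is an assumption, and the function names are hypothetical helpers, not part of any Intel API.

```python
import struct


def float_to_bf16_bits(x: float) -> int:
    """Round x to bfloat16 and return its 16-bit pattern (hypothetical helper).

    x is first converted to IEEE 754 float32 (1 sign, 8 exponent, 23 mantissa
    bits); bfloat16 keeps the upper 16 bits, rounding to nearest, ties to even.
    NaN and float32 overflow handling are omitted for brevity.
    """
    (bits32,) = struct.unpack("<I", struct.pack("<f", x))
    # Add 0x7FFF plus the lowest kept bit so exact ties round to an even result.
    rounding_bias = 0x7FFF + ((bits32 >> 16) & 1)
    return ((bits32 + rounding_bias) >> 16) & 0xFFFF


def bf16_bits_to_float(bits: int) -> float:
    """Expand a bfloat16 bit pattern back to a Python float (exact)."""
    (value,) = struct.unpack("<f", struct.pack("<I", (bits & 0xFFFF) << 16))
    return value


def fp8_e4m3_to_float(byte: int) -> float:
    """Decode an FP8 E4M3 byte: 1 sign, 4 exponent (bias 7), 3 mantissa bits.

    Follows the OCP E4M3 convention: no infinities, and S.1111.111 is NaN.
    """
    sign = -1.0 if byte & 0x80 else 1.0
    exponent = (byte >> 3) & 0xF
    mantissa = byte & 0x7
    if exponent == 0xF and mantissa == 0x7:
        return float("nan")
    if exponent == 0:
        # Subnormal: no implicit leading 1, fixed exponent of 1 - bias.
        return sign * (mantissa / 8) * 2.0 ** (1 - 7)
    return sign * (1 + mantissa / 8) * 2.0 ** (exponent - 7)


# Python floats are FP64; compare pi in BF16 and the largest finite E4M3 value.
pi_bf16 = bf16_bits_to_float(float_to_bf16_bits(3.141592653589793))
print(pi_bf16)
print(fp8_e4m3_to_float(0x7E))
```

For example, π stored as FP64 rounds to 3.140625 in BF16, and the largest finite E4M3 value is 448, which is why FP8 training and inference typically rely on per-tensor scaling.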

Generative AI for Science

Argonne National Laboratory, in collaboration with Intel and HPE, announced plans to create a series of generative AI models for the scientific research community. These generative AI models for science will be trained on general text, code, scientific texts, and structured scientific data from biology, chemistry, materials science, physics, medicine, and other sources.

The resulting models (with as many as 1 trillion parameters) will be used in a variety of scientific applications, from the design of molecules and materials to the synthesis of knowledge across millions of sources to suggest new and exciting experiments in systems biology, polymer chemistry and energy materials, climate science, and cosmology. The models will also be used to accelerate the identification of biological processes related to cancer and other diseases and to suggest targets for drug design.

To advance the project, Argonne is spearheading an international collaboration that includes:

- Intel

- HPE

- Department of Energy Laboratories

- US and International Universities

- Nonprofits

- International partners

Aurora is expected to offer more than two exaflops of peak double-precision compute performance when launched this year.

oneAPI Benefits HPC Applications

The latest Intel oneAPI tools deliver speedups for HPC applications with OpenMP GPU offload, extend support for OpenMP and Fortran, and accelerate AI and deep learning through optimized frameworks, including TensorFlow and PyTorch, and AI tools.

oneAPI’s SYCL implementation, oneAPI plug-ins for NVIDIA and AMD processors developed by Codeplay, and the Intel DPC++ Compatibility Tool make multiarchitecture programming easier. The Compatibility Tool migrates code from CUDA to SYCL and C++, typically migrating 90 to 95 percent of the code automatically. The resulting SYCL code shows performance comparable to the same code written in native NVIDIA and AMD system languages. According to Intel, SYCL code for the DPEcho astrophysics application running on the Max Series GPU outperforms the equivalent CUDA code on the NVIDIA H100 by 48 percent.
