Dell Tech World is highlighted not just by Dell’s robust product launches, but also by the solutions its partners have on display in the expo. Nothing garnered more attention this year than the variety of liquid-cooling solutions on the show floor. There’s so much interest in liquid cooling that our social media videos highlighting these technologies have drawn millions of views in just the last few weeks. Unless your workloads are entirely mundane, liquid cooling is coming to your data center. Here’s a primer that highlights which technologies may be a fit, depending on where you are in the liquid-cooling cycle.

Direct-to-Chip Internal Loop

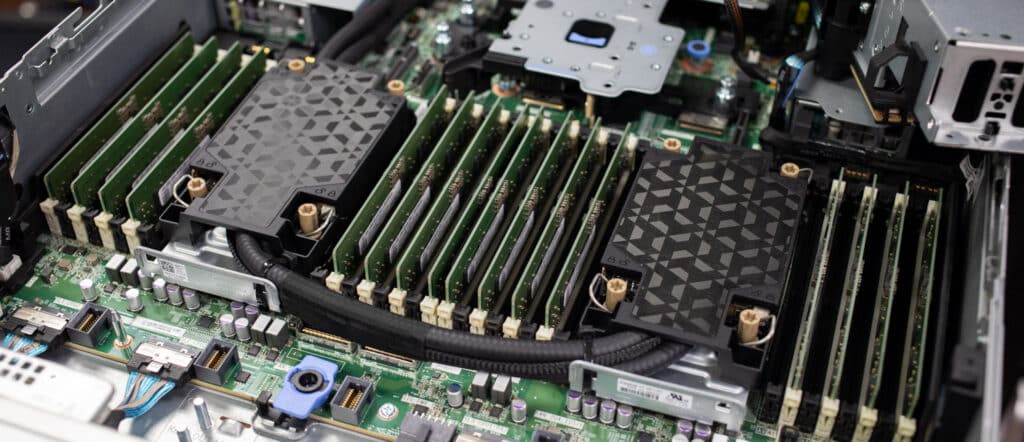

By far the easiest approach to adopting liquid cooling in the data center is via a closed internal loop. Much like a CPU cooler in a gaming PC, these systems pair a cold plate with a large radiator to take the heat off key components. JetCool offers solutions like this and was demonstrating both Intel and AMD systems from Dell with an internal closed loop.

The best part about these systems is that they deliver significant power savings, 10-15% according to JetCool, without the complexity of tapping into facility water. In some data centers, a full liquid-cooling loop may not even be an option, so this method stands as the best alternative to air-cooled servers.

JetCool Internal Loop

While the power savings with a closed loop won’t be as high as other alternatives, even a 10% savings is huge in data centers that are hampered by the amount of power they can support in a single rack. A small power savings, thanks to a closed internal loop, may mean support for an additional server or two per rack.
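To make that rack math concrete, here is a minimal Python sketch. The 15 kW rack budget and 1,000 W per-server draw are hypothetical round numbers chosen for illustration, not figures from Dell or JetCool; only the 10-15% savings range comes from JetCool’s claim above.

```python
def servers_per_rack(rack_budget_w: float, server_draw_w: float) -> int:
    """Return how many whole servers fit under a rack power budget."""
    return int(rack_budget_w // server_draw_w)


def extra_servers_from_savings(
    rack_budget_w: float, server_draw_w: float, savings_fraction: float
) -> tuple[int, int, int]:
    """Compare rack density before and after a per-server power savings.

    Returns (servers_before, servers_after, extra_servers).
    """
    # Per-server draw once the closed loop trims power by savings_fraction.
    cooled_draw_w = server_draw_w * (1.0 - savings_fraction)
    before = servers_per_rack(rack_budget_w, server_draw_w)
    after = servers_per_rack(rack_budget_w, cooled_draw_w)
    return before, after, after - before


if __name__ == "__main__":
    # Hypothetical inputs: 15 kW rack budget, 1,000 W per air-cooled server.
    for savings in (0.10, 0.15):
        before, after, extra = extra_servers_from_savings(15_000, 1_000, savings)
        print(f"{savings:.0%} savings: {before} -> {after} servers (+{extra})")
```

With these assumed inputs, a 10% savings frees room for one more server and a 15% savings frees room for two. Real results depend on your actual rack budget and server draw.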

It’s also worth noting that Dell is using an internal loop on the PowerEdge XE8640 GPU server; you can see more about that and the XE9640 in a YouTube video we made recently.

Direct-to-Chip Full Liquid Loop

The progression from an internal loop is one that’s connected to facility water, to take the heat not just out of the servers, but out of the data center as well. There are half-measures, though. We featured CoolIT Systems in a recent review where we retrofitted an R760 for liquid cooling, adding cold plates. We also installed a small manifold and coolant distribution unit (CDU), though our CDU is liquid-to-air. That means we’re taking the heat from the R760, but we’re still dumping it into the data center and need to remove it.

Our mini deployment can support a few servers, but if you want to be prepared for the new liquid-cooled Dell PowerEdge XE9680L GPU server, for instance, you’ll need a more robust solution. CoolIT has been a big part of Dell’s liquid-cooled roadmap so far, and they were showcasing their new Omni cold plates, new CDUs, and a variety of other cooling tech.

But even Direct-to-Chip cooling isn’t one thing; there are multiple ways to implement it. Nowhere is this more obvious than with the ZutaCore solution, which uses a unique two-phase approach to deliver cooling to the chips. ZutaCore had a few displays going, the highlight being an XE9680 GPU server retrofitted with 14 cold plates – 8x for the GPUs, 4x for the switches, and 2x for the CPUs. This is a very compelling technology and one we have an extensive podcast about if you want to learn more.

For yet another version of Direct-to-Chip cooling, I’ll highlight Chilldyne. While not technically at the DTW expo, we did meet with some of their team at a bar in the hotel, which in our view is close enough. To be fair, Chilldyne is a Dell partner, and we’ve seen their kit in the Dell labs.

Chilldyne’s claim to fame is a negative-pressure liquid loop, which means that if a line is cut, there’s no loss of fluid. Leaks are the #1 fear holding back liquid adoption in the data center, so Chilldyne is definitely on to something here. We’ve put together a short video that highlights their technology, which is one of our most popular social videos this year.

Rear Door Heat Exchangers (RDHx)

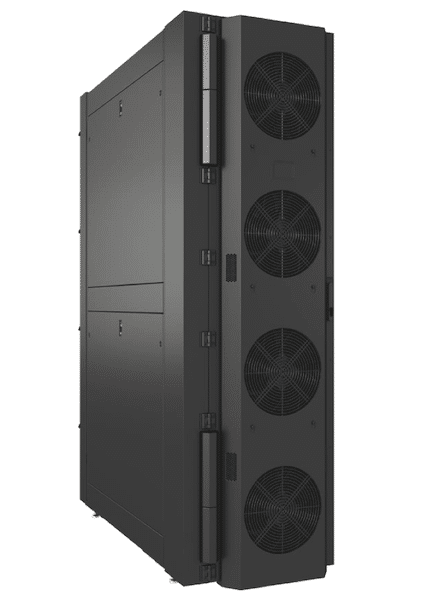

Rear Door Heat Exchangers are passive or active heat exchangers that replace the back door of a server rack. They absorb the heat from the server exhaust air and offload it to a liquid loop for cooling. Passive doors have no fans and are essentially silent. Active doors add fans for higher efficiency.

While RDHx weren’t a large portion of DTW, they are worth mentioning for two key reasons, at opposite ends of the spectrum. One, they’re a relatively easy first step toward adding liquid cooling to your data center and can be deployed in a variety of stand-alone configurations. Two, RDHx can be paired with other liquid-cooling technologies to achieve 100% heat recapture via liquid cooling, something that was a topic of conversation in Las Vegas last week.

Liebert DCD50 RDHx

RDHx are available in some form or another from just about anyone working at rack scale, including companies at Dell Tech World like CoolIT and Vertiv. It’s also worth noting that Dell is pitching RDHx as part of the XE9680L push: “A 70KW design that uses air cooling with Rear Door Heat Exchangers (RDHx), supporting 64 GPUs – ideal for NVIDIA H100/H200/B100. We also have a 100KW design that uses liquid cooling with RDHx, supporting 72 B200 GPUs – this is the most compact rack scale architecture in the industry.”

Full Immersion Liquid Cooling

To this point, all of the data center liquid cooling options I’ve brought up are relatively mainstream. Full Immersion is where, according to the feedback we’re getting, things get a little more dicey. As the name implies, this technology takes as-is servers, with a few modifications, and dunks them in an engineered fluid (a proprietary dielectric coolant). BP and Shell both make fluids for this purpose, amongst others. We’ve seen full immersion racks showing up at trade shows like DTW for 3-4 years. This year both Submer and GRC offered immersion demos.

The idea of single-phase immersion cooling (two-phase had a moment but has largely fallen out of favor) makes sense in a lot of ways and is a favorite amongst crypto miners. When considering enterprise servers like PowerEdge, the rules change a little bit. With servers, step one is to remove the fans, which offers immediate power savings. Convection, or convection assisted by pumps, moves the fluid over the server’s components. From there, heat can be captured via a heat exchanger and removed from the data center.

This system removes the need for air cooling, and both GRC and Submer point to data that suggests servers in immersion cooling actually have longer lives and fewer service events than air-cooled servers. But herein lies one of the biggest hurdles: servers have to come out of the pool for service, and while that’s not hard, it’s more awkward than servicing traditionally racked gear. A server in immersion has to come out of the fluid, dry off a bit, then be placed on a table for service. Not an impossible action, but one that takes a little effort.

There are also concerns over the weight of the tanks and fluid, and over floor-space efficiency compared to standard vertical racks. The immersion industry argues that tanks can be stacked and that systems in immersion tanks are actually more efficient. We have a good podcast on immersion if you want to learn more.

Conclusion

There’s no stopping liquid cooling from coming to your data center if your organization is engaged with AI or other applications that make use of dense GPU boxes. It’s highly likely that if you buy an 8-way GPU server today, by the time it shows up on your loading dock in a year, it will have a closed loop inside if you haven’t invested in a full liquid loop by that point. The good news is that the industry is identifying the problems hampering adoption, like the lack of universal manifold connectors, and working toward solving those issues, so liquid cooling is easier for the enterprise to embrace.
