Mellanox Technologies, a supplier of high-performance, end-to-end interconnect solutions for data center servers and storage systems, has added to its line of FDR 56Gb/s InfiniBand interconnect solutions by announcing new 12-port non-blocking fixed switches. The new switches are part of the Mellanox SX6000 series of FDR 56Gb/s InfiniBand switches, all of which include fabric management capabilities intended to help ensure the highest fabric performance. According to the company, its FDR 56Gb/s InfiniBand adapters and switches are the most efficient interconnect solutions for connecting data center servers and storage, delivering high throughput, low latency, and world-leading application performance.

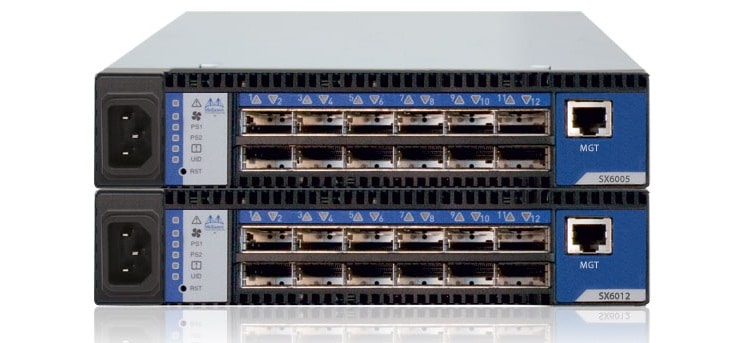

The SX6012 and SX6005 are built with Mellanox’s sixth-generation SwitchX-2 InfiniBand switch device, providing up to twelve ports, each with 56Gb/s of full bidirectional bandwidth. The 12-port switch series is designed for small departmental or back-end storage clusters that depend on high application performance. It offers extremely low latency, high throughput, and high resiliency by fitting two half-width 19-inch boxes into a single rack unit (RU), each with redundant power supplies, which makes it well suited to small-scale storage environments.

Mellanox also introduced the MetroX series of long-distance InfiniBand switch solutions. InfiniBand is currently deployed within the data center to connect servers to one another and to storage efficiently. MetroX extends this by giving users native InfiniBand and RDMA connectivity between data centers across multiple geographically distributed sites.

Currently, MetroX can transfer data over distances of up to 10 km, and Mellanox plans to increase this to 100 km in the future. Additionally, with six long-haul ports at 40Gb/s and six FDR 56Gb/s downlink ports, it enables star-like campus deployments, providing a clear capital expense reduction compared to single port-to-port long-haul solutions.

The MetroX TX6100 will be available sometime during the first quarter of 2013.

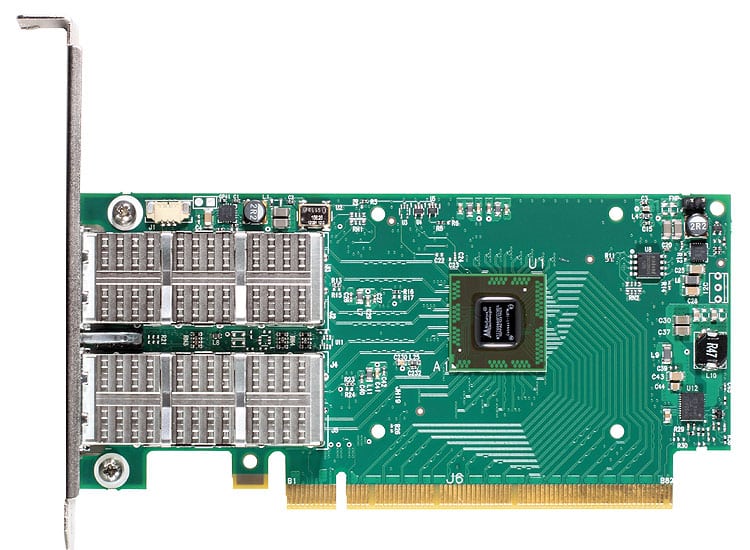

Mellanox’s newest InfiniBand adapter, the dual-port Connect-IB 56Gb/s FDR, also recently achieved what the company describes as the industry’s highest throughput: more than 100Gb/s over PCI Express 3.0 x16 and more than 135 million messages per second, which it says is 4.5 times higher than competing solutions. Connect-IB adapter cards are sampling today.
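
As a rough sanity check on these figures, the link budgets can be sketched in a few lines of Python. The constants are assumptions drawn from the public PCI Express 3.0 and InfiniBand FDR specifications (per-lane signaling rates and line encodings), not from Mellanox's announcement, and the function names are purely illustrative. Packet and protocol overhead is ignored, so real-world throughput would be somewhat lower.

```python
# Back-of-the-envelope link budgets. All constants are assumptions taken
# from the public PCIe 3.0 and InfiniBand FDR specifications, not from
# the announcement itself. Protocol/packet overhead is ignored.

PCIE3_GT_PER_LANE = 8.0         # PCIe 3.0 signaling rate per lane, GT/s
PCIE3_ENCODING = 128 / 130      # PCIe 3.0 uses 128b/130b line encoding

FDR_GBAUD_PER_LANE = 14.0625    # FDR signaling rate per lane, Gbaud
FDR_ENCODING = 64 / 66          # FDR uses 64b/66b line encoding
FDR_LANES_PER_PORT = 4          # a standard 4x InfiniBand port


def pcie3_gbps(lanes: int) -> float:
    """Usable PCIe 3.0 bandwidth in Gb/s, one direction, after encoding."""
    return lanes * PCIE3_GT_PER_LANE * PCIE3_ENCODING


def fdr_port_gbps() -> float:
    """Usable data rate of one 4x FDR port in Gb/s, one direction."""
    return FDR_LANES_PER_PORT * FDR_GBAUD_PER_LANE * FDR_ENCODING


def switch_aggregate_gbps(ports: int, port_rate_gbps: float = 56.0) -> float:
    """Nominal aggregate switch bandwidth, counting both directions."""
    return ports * port_rate_gbps * 2


if __name__ == "__main__":
    print(f"PCIe 3.0 x16 (one direction): {pcie3_gbps(16):.1f} Gb/s")
    print(f"One FDR port (one direction): {fdr_port_gbps():.1f} Gb/s")
    print(f"Dual-port FDR adapter:        {2 * fdr_port_gbps():.1f} Gb/s")
    print(f"12-port switch, nominal:      {switch_aggregate_gbps(12):.0f} Gb/s")
```

Under these assumptions, a PCI Express 3.0 x16 slot and two FDR ports each offer somewhat more than 100Gb/s in one direction, which is consistent with a dual-port adapter exceeding that figure.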
