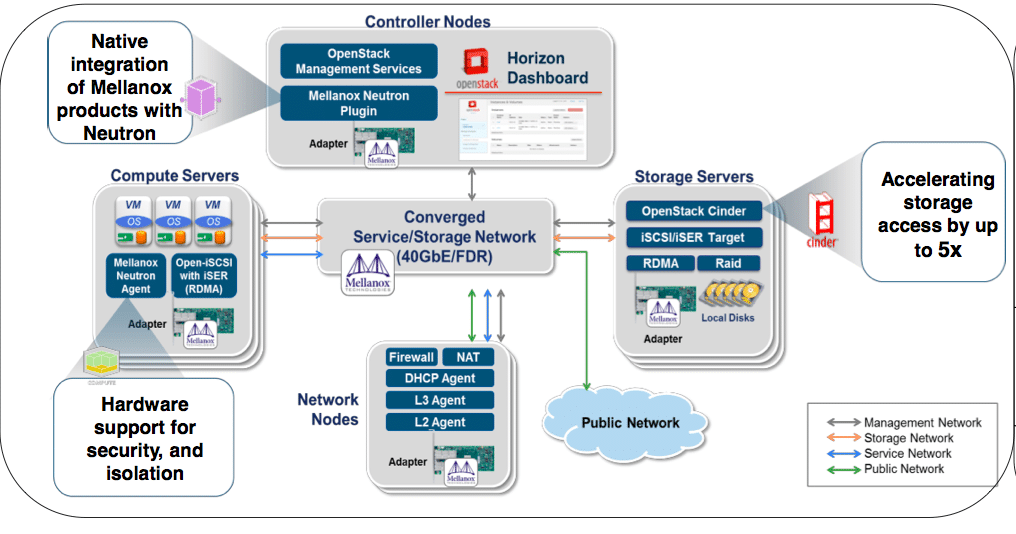

Mellanox today announced that it will be the first certified end-to-end interconnect vendor for OpenStack's forthcoming Havana release. Mellanox 10/40GbE and FDR 56Gb/s adapters and switches can be paired with the OpenStack Cinder block storage, OpenStack Neutron networking, and OpenStack Nova compute components to support cloud service providers. Mellanox will also be the first interconnect adapter vendor listed on Red Hat's Certified Solution Marketplace for Red Hat's OpenStack-based Linux distributions.

OpenStack is a cloud operating system that uses open APIs to manage pools of compute, storage, and networking resources, with a central web-based dashboard through which administrators and users provision cloud resources. On the compute side, OpenStack consists of OpenStack Compute (codenamed Nova) and the OpenStack Image service (codenamed Glance). OpenStack Neutron handles networking, while OpenStack Swift and OpenStack Cinder are responsible for object and block storage, respectively.

Mellanox 10GbE product portfolio

Mellanox 40/56GbE product portfolio

OpenStack consortium home page