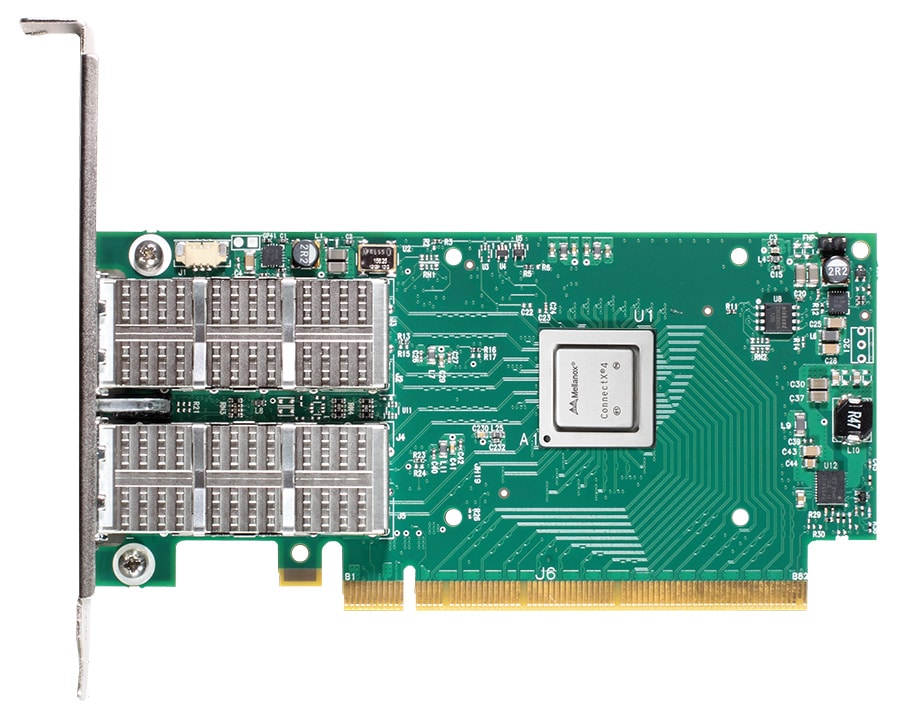

Today Mellanox Technologies introduced the ConnectX-4 Adapter, a single/dual-port 100Gb/s Virtual Protocol Interconnect (VPI) adapter and the final piece of the industry’s first complete end-to-end 100Gb/s InfiniBand interconnect solution. The ConnectX-4 is designed to meet the high-performance, low-latency needs of high-performance computing (HPC), cloud, Web 2.0, and Big Data applications.

As data volumes explode, the introduction of 100Gb/s interconnects will help companies keep up with the growing demands for data processing and retrieval. Doubling the throughput of the previous generation, the ConnectX-4 will give enterprises a scalable, efficient, high-performance solution. The ConnectX-4 can deliver 10, 20, 25, 40, 50, 56 and 100Gb/s throughput, supports both the InfiniBand and Ethernet standard protocols, and offers the flexibility to connect any CPU architecture – x86, GPU, POWER, ARM, FPGA and more. Mellanox claims performance of 150 million messages per second and 0.7usec of latency, along with smart acceleration engines such as RDMA, GPUDirect and SR-IOV.

Mellanox will offer a complete end-to-end 100Gb/s InfiniBand solution, including the EDR 100Gb/s Switch-IB InfiniBand switch and LinkX 100Gb/s copper and fiber cables. For Ethernet-based data centers, Mellanox’s ConnectX-4 provides link speed options of 10, 25, 40, 50 and 100Gb/s. To support these speeds, Mellanox offers a complete set of copper and fiber cable options.

Key Features:

- EDR 100Gb/s InfiniBand or 100Gb/s Ethernet per port

- 10/20/25/40/50/56/100Gb/s speeds

- 150M messages/second

- Single and dual-port options available

- Erasure Coding offload

- T10-DIF Signature Handover

- Virtual Protocol Interconnect (VPI)

- Power8 CAPI support

- CPU offloading of transport operations

- Application offloading

- Mellanox PeerDirect communication acceleration

- Hardware offloads for NVGRE and VXLAN encapsulated traffic

- End-to-end QoS and congestion control

- Hardware-based I/O virtualization

- Ethernet encapsulation (EoIB)

- RoHS-R6E

Key Benefits:

- Highest performing silicon for applications requiring high bandwidth, low latency and high message rate

- World-class cluster, network, and storage performance

- Smart interconnect for x86, Power, ARM, and GPU-based compute and storage platforms

- Cutting-edge performance in virtualized overlay networks (VXLAN and NVGRE)

- Efficient I/O consolidation, lowering data center costs and complexity

- Virtualization acceleration

- Power efficiency

- Scalability to tens-of-thousands of nodes

Compatibility:

- PCIe Interface

  - PCIe Gen 3.0 compliant, 1.1 and 2.0 compatible

  - 2.5, 5.0, or 8.0GT/s link rate x16

  - Auto-negotiates to x16, x8, x4, x2, or x1

  - Support for MSI/MSI-X mechanisms

  - Coherent Accelerator Processor Interface (CAPI)

- Connectivity

  - Interoperable with InfiniBand or 10/25/40/50/100Gb Ethernet switches

  - Passive copper cable with ESD protection

  - Powered connectors for optical and active cable support

  - QSFP to SFP+ connectivity through QSA module

- Operating Systems/Distributions

  - RHEL/CentOS

  - Windows

  - FreeBSD

  - VMware

  - OpenFabrics Enterprise Distribution (OFED)

  - OpenFabrics Windows Distribution (WinOF)
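As a rough sanity check on the PCIe figures listed under Compatibility, the sketch below estimates the raw bandwidth of a link at each supported transfer rate and lane width. It is not taken from Mellanox material: the function name `pcie_bandwidth_gbps` is hypothetical, and the calculation assumes only the standard line encodings (8b/10b for PCIe 1.1 and 2.0, 128b/130b for 3.0) while ignoring packet and protocol overhead, so real throughput will be somewhat lower.

```python
# Back-of-the-envelope PCIe link bandwidth, per direction.
# Assumption: only line-encoding overhead is modeled; TLP/DLLP packet
# overhead and flow control are ignored, so these are upper bounds.

# generation -> (transfer rate in GT/s per lane, encoding efficiency)
PCIE_GENERATIONS = {
    "1.1": (2.5, 8 / 10),      # 8b/10b encoding
    "2.0": (5.0, 8 / 10),      # 8b/10b encoding
    "3.0": (8.0, 128 / 130),   # 128b/130b encoding
}


def pcie_bandwidth_gbps(generation: str, lanes: int) -> float:
    """Return the approximate usable link bandwidth in Gb/s (one direction)."""
    rate_gt_s, efficiency = PCIE_GENERATIONS[generation]
    return rate_gt_s * efficiency * lanes


if __name__ == "__main__":
    for generation in PCIE_GENERATIONS:
        for lanes in (16, 8, 4, 2, 1):
            bandwidth = pcie_bandwidth_gbps(generation, lanes)
            print(f"PCIe {generation} x{lanes:<2}: {bandwidth:7.2f} Gb/s")
```

Under these assumptions a Gen 3.0 x16 link works out to roughly 126 Gb/s before packet overhead, which is above the rate of a single 100Gb/s port but below that of two ports running at full rate simultaneously.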

Availability

Mellanox will begin sampling the ConnectX-4 with select customers in the first quarter of 2015.