Microsoft unveiled several developments in custom chip design and AI infrastructure at this week’s Microsoft Ignite event, including an expansion of its silicon portfolio.

At Ignite 2024, Microsoft is expanding its silicon portfolio with new security and data processing chips and deepening its partnership with NVIDIA on AI computing. The updates aim to improve efficiency, security, and performance across Microsoft’s cloud services.

Microsoft’s Custom Silicon Revolution

Microsoft is expanding its custom silicon portfolio beyond the Azure Maia AI accelerators and Azure Cobalt CPUs. The company has introduced Azure Integrated HSM, an in-house security chip designed to strengthen key management without compromising performance. Starting in 2025, Microsoft will install the HSM in every new data center server to protect both confidential and general-purpose workloads.

Microsoft also introduced Azure Boost DPU, its first data processing unit. This specialized chip is built to handle data-centric workloads efficiently. Microsoft expects future servers equipped with the DPU to use one-third the power of current servers (a two-thirds reduction) while delivering four times the performance.
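Taken together, the two DPU figures imply a larger gain in performance per watt than either suggests alone. The following is a back-of-the-envelope sketch, not a Microsoft calculation. It assumes both claims hold for the same workload at the same time, which the announcement does not state, and it normalizes the baseline server to 1.0 purely for illustration.

```python
from fractions import Fraction

# Normalized baseline for a current server (illustrative units, not measured data).
baseline_power = Fraction(1)
baseline_perf = Fraction(1)

# Figures quoted above: power cut by two-thirds, performance four times higher.
dpu_power = baseline_power * (1 - Fraction(2, 3))  # one-third of baseline power
dpu_perf = baseline_perf * 4


def perf_per_watt(perf, power):
    """Return performance delivered per unit of power."""
    return perf / power


# Ratio of the DPU server's efficiency to the baseline server's efficiency.
gain = perf_per_watt(dpu_perf, dpu_power) / perf_per_watt(baseline_perf, baseline_power)
print(f"Implied performance-per-watt gain: {gain}x")
```

Under those assumptions the implied efficiency gain is twelvefold. Real-world results would depend on the workload mix.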

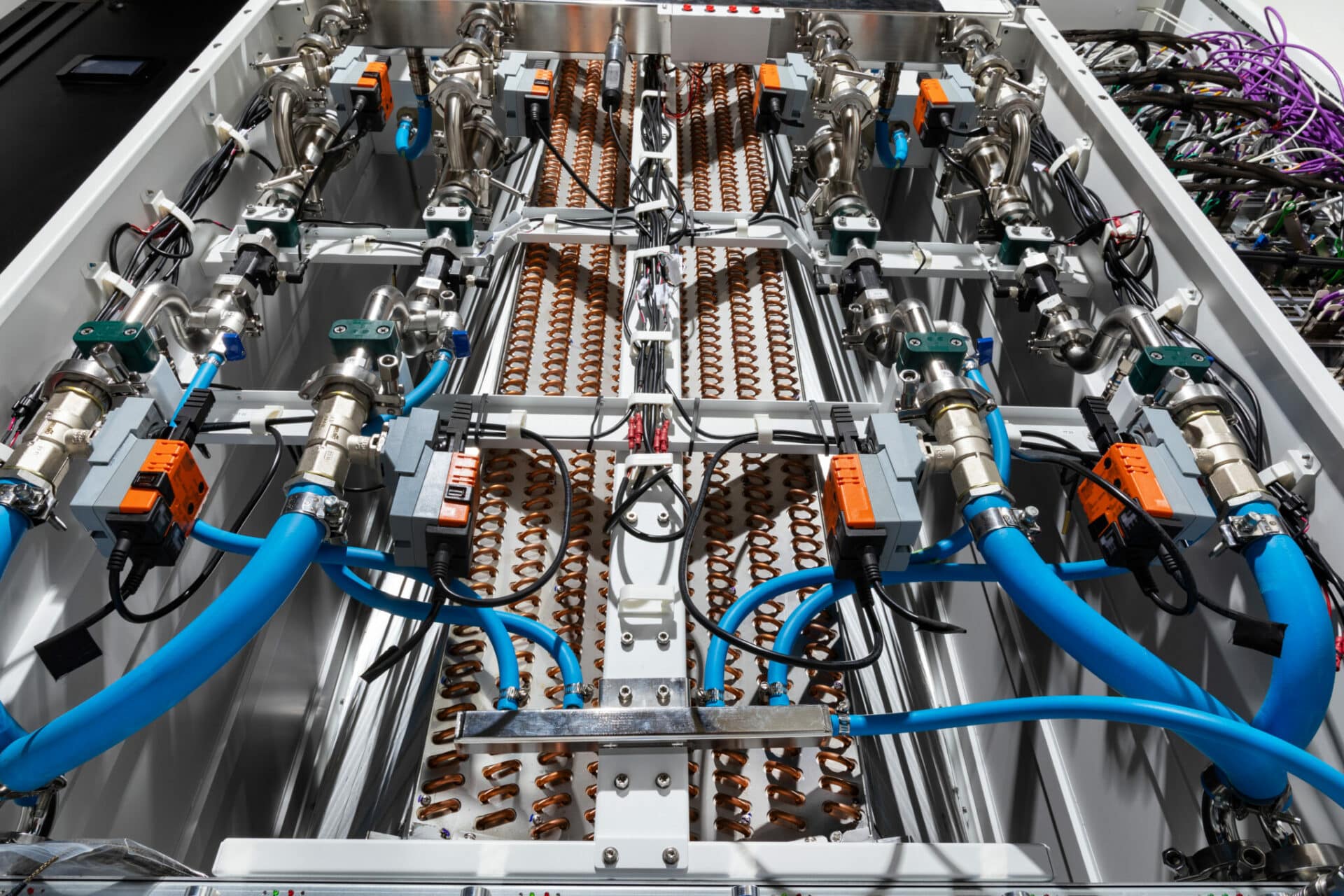

Cooling and Power Infrastructure

Microsoft showcased its next-generation liquid cooling “sidekick” rack. This heat exchanger unit can be retrofitted into existing Azure data centers to support large-scale AI systems, including NVIDIA’s GB200 infrastructure. Microsoft has also collaborated with Meta on a disaggregated power rack design that uses 400-volt DC power and allows up to 35% more AI accelerators in each server rack.

Microsoft is open-sourcing both the cooling and power rack specifications through the Open Compute Project so that the rest of the industry can adopt them.

Next-Generation AI and Computing Infrastructure

Azure’s AI infrastructure continues to evolve with the introduction of the ND H200 v5 Virtual Machine series, featuring NVIDIA’s H200 GPUs. Microsoft reports that the performance improvement between NVIDIA H100 and H200 GPUs on the platform was twice the industry-standard rate, as measured through industry benchmarking.

Microsoft has also announced the Azure ND GB200 v6, a new AI-optimized VM series that combines the NVIDIA GB200 NVL72 rack-scale design with Quantum InfiniBand networking. The design delivers AI supercomputing performance at scale by connecting tens of thousands of Blackwell GPUs.

For CPU-based supercomputing, the new Azure HBv5 virtual machines, powered by custom AMD EPYC™ 9V64H processors, promise up to eight times the performance of current alternatives and will be available in preview in 2025.

Azure Container Apps and NVIDIA Integration

Azure Container Apps now supports NVIDIA GPUs for scalable AI deployment. This serverless container platform abstracts away the underlying infrastructure, which simplifies deploying and managing microservices-based applications. With per-second billing and scale-to-zero capabilities, customers pay only for the compute they use.
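To see why per-second billing with scale-to-zero matters for bursty workloads, consider the rough comparison below. It is a simplified sketch under stated assumptions. The rate and the two-hours-a-day usage pattern are hypothetical values chosen for illustration, not Azure pricing, and the model ignores cold-start time and any minimum charges.

```python
def serverless_gpu_cost(active_seconds, rate_per_second):
    """Cost under per-second billing with scale-to-zero: idle time is not billed."""
    return active_seconds * rate_per_second


def always_on_cost(total_seconds, rate_per_second):
    """Cost of keeping the same capacity running for the whole period."""
    return total_seconds * rate_per_second


# Hypothetical numbers for illustration only; not Azure pricing.
RATE_PER_SECOND = 0.001         # assumed price per GPU-second
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds
active = 2 * 60 * 60            # workload is busy two hours a day

on_demand = serverless_gpu_cost(active, RATE_PER_SECOND)
always_on = always_on_cost(SECONDS_PER_DAY, RATE_PER_SECOND)
savings = 1 - on_demand / always_on

print(f"per-second billing: {on_demand:.2f}")
print(f"always-on:          {always_on:.2f}")
print(f"savings:            {savings:.0%}")
```

In this model the savings depend only on the fraction of time the workload is active, so the benefit shrinks as utilization approaches 100%.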

The NVIDIA AI platform on Azure includes new reference workflows for industrial AI and NVIDIA Omniverse Blueprint for creating immersive, AI-powered visuals. A reference workflow for 3D remote monitoring of industrial operations is coming soon, enabling developers to connect physically accurate 3D models of industrial systems to real-time data from Azure IoT Operations and Power BI.

RTX AI PCs and Advanced Computing

NVIDIA announced its new multimodal SLM, NVIDIA Nemovision-4B Instruct, for understanding visual imagery in the real world and on screen. This technology, which will soon be introduced to RTX AI PCs and workstations, will enhance digital human interactions with greater realism.

Updates to NVIDIA TensorRT Model Optimizer (ModelOpt) now offer Windows developers an improved path for optimizing models for ONNX Runtime deployment. Developers can build AI models for PCs that are faster and more accurate when accelerated by RTX GPUs, and can deploy them across the PC ecosystem with ONNX Runtime.

Over 600 Windows apps and games run AI locally on over 100 million GeForce RTX AI PCs worldwide, delivering fast, reliable, and low-latency performance. The collaboration between NVIDIA and Microsoft continues to drive innovation in personal computing devices, bringing sophisticated AI capabilities to everyday users.

Microsoft Cloud and Hybrid Infrastructure

Microsoft’s commitment to multi-cloud and hybrid solutions is exemplified by Azure Arc, now serving over 39,000 customers globally. The new Azure Local delivers secure cloud-connected hybrid infrastructure with flexible options, including GPU servers for AI inferencing.

Microsoft has also announced Windows Server 2025 and SQL Server 2025, leveraging Azure Arc to deliver cloud capabilities across on-premises and cloud environments. SQL Server 2025 stands out with its new built-in AI capabilities, simplifying AI application development and RAG patterns with vector support.
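For readers unfamiliar with the retrieval step in a RAG pattern, the sketch below shows the general idea in plain Python: rank stored text by vector similarity to a query, then pass the best matches to a model as context. It is a generic illustration and does not use SQL Server 2025’s actual vector syntax or API. The three-dimensional “embeddings,” the document titles, and the `top_k` helper are all hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vec, rows, k=2):
    """Return the texts of the k rows most similar to the query vector."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in rows]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


# Toy hand-written vectors; a real system would get these from an embedding
# model and keep them in a vector column alongside the text.
documents = [
    ("Azure Boost DPU overview", [0.9, 0.1, 0.0]),
    ("Liquid cooling sidekick rack", [0.1, 0.9, 0.1]),
    ("Azure Integrated HSM key management", [0.7, 0.0, 0.6]),
]

query = [1.0, 0.0, 0.2]  # hypothetical embedding of a question about custom chips
context = top_k(query, documents, k=2)

# The retrieved text becomes grounding context for the language model prompt.
prompt = "Answer using only this context:\n" + "\n".join(context)
print(prompt)
```

Built-in vector support moves the similarity search into the database itself, so the application does not have to load every row and rank it in memory as this toy version does.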

Conclusion

Microsoft’s recent innovations underscore its dedication to advancing custom silicon, AI infrastructure, and hybrid cloud solutions. With groundbreaking developments like the Azure Integrated HSM and Azure Boost DPU, Microsoft is setting a new template for secure, efficient, high-performance data center operations. The introduction of next-generation cooling and power technologies and open-sourcing innovations highlight Microsoft’s commitment to fostering industry collaboration and sustainability.

The evolution of Azure’s AI and computing infrastructure, including the ND H200 v5 and ND GB200 v6 virtual machines, demonstrates Microsoft’s ability to deliver AI performance at scale. Meanwhile, the new HBv5 virtual machines promise up to eight times the performance of current alternatives for CPU-based supercomputing, positioning Azure as a strong contender in high-performance computing.

Finally, with Azure Arc’s robust hybrid capabilities and the introduction of AI-enhanced tools like SQL Server 2025, Microsoft is equipping enterprises with flexible, scalable solutions to tackle the complexities of modern workloads. Together, these advancements solidify Microsoft’s position as a leader in driving the future of AI, cloud, and hybrid infrastructure.
