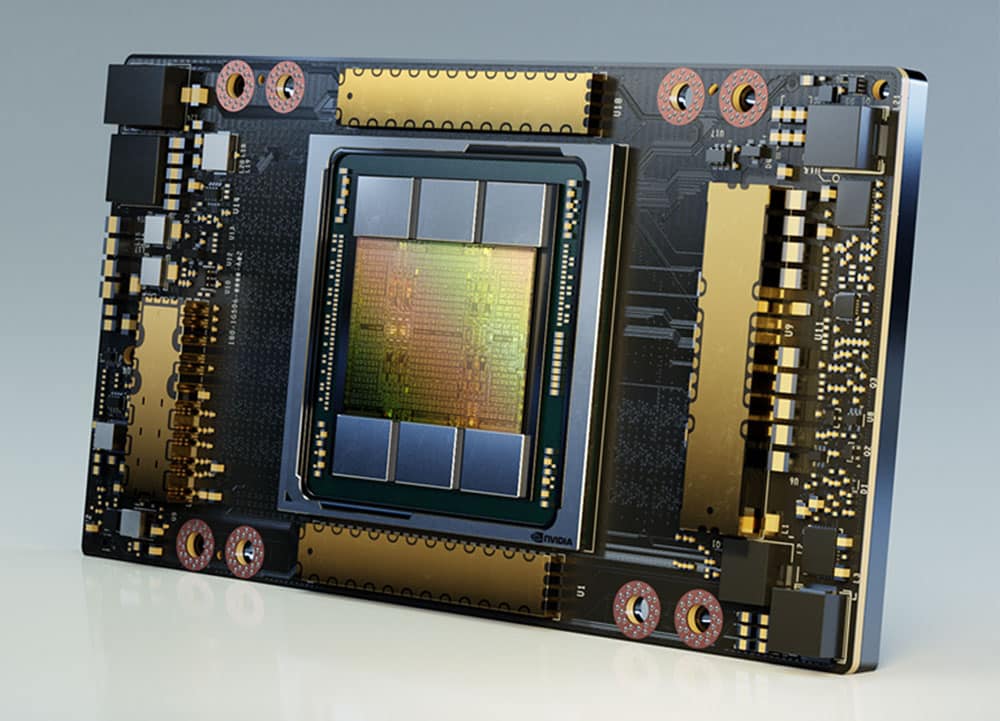

Today at SC20, NVIDIA announced that its popular A100 GPU will see a doubling of high-bandwidth memory with the unveiling of the NVIDIA A100 80GB GPU. This new GPU will be the innovation powering the new NVIDIA HGX AI supercomputing platform. With twice the memory, NVIDIA hopes the new GPU will help researchers and engineers hit unprecedented speed and performance to unlock the next wave of AI and scientific breakthroughs.

Earlier this year at GTC, NVIDIA announced the release of its 7nm GPU, the NVIDIA A100. As we wrote at the time, the A100 is based on NVIDIA’s Ampere architecture and contains 54 billion transistors. Like previous NVIDIA data center GPUs, the A100 includes Tensor Cores. Tensor Cores are specialized parts of the GPU designed specifically to quickly perform a type of matrix multiplication and addition calculation commonly used in inferencing. With new, beefier GPUs come new, beefier Tensor Cores. Previously, NVIDIA’s Tensor Cores could only support up to thirty-two-bit floating-point numbers. The A100 supports sixty-four-bit floating-point operations, allowing for much greater precision.
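To make the "matrix multiplication and addition" concrete, here is a minimal sketch in plain Python of the calculation D = A × B + C, along with a look at how the same value is stored at 32-bit and 64-bit precision. It illustrates the arithmetic only, not how the hardware or any NVIDIA library implements it; the helper names `matmul_add` and `to_fp32` are made up for this example.

```python
import struct


def to_fp32(x: float) -> float:
    """Round a Python float (64-bit) to the nearest 32-bit float."""
    return struct.unpack("f", struct.pack("f", x))[0]


def matmul_add(a, b, c):
    """Return D = A x B + C for matrices given as lists of rows."""
    inner, cols = len(b), len(b[0])
    return [
        [
            c[i][j] + sum(a[i][k] * b[k][j] for k in range(inner))
            for j in range(cols)
        ]
        for i in range(len(a))
    ]


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
c = [[1, 1], [1, 1]]
print(matmul_add(a, b, c))  # [[20, 23], [44, 51]]

# The same decimal value stored at the two precisions discussed above.
print(to_fp32(0.1))  # 32-bit: nearest representable value to 0.1
print(0.1)           # 64-bit: Python's native float
```

The two printed values for 0.1 differ slightly, which is the kind of rounding gap that wider floating-point formats shrink.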

Bumping the high-bandwidth memory (HBM2e) up to 80GB is said to deliver 2 terabytes per second of memory bandwidth. This boost helps emerging HPC applications that have enormous memory requirements, such as AI training, natural language processing, and big data analytics, to name a few.
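For a rough sense of scale, the quoted figures can be turned into a back-of-the-envelope estimate of how long one full pass over the GPU's memory would take. This sketch assumes decimal units (1GB = 10^9 bytes, 1TB = 10^12 bytes) and the peak bandwidth figure; real workloads will generally see less. The function name `full_pass_seconds` is hypothetical.

```python
def full_pass_seconds(capacity_gb: float, bandwidth_tb_per_s: float) -> float:
    """Seconds to stream the whole memory once at the quoted peak bandwidth."""
    capacity_bytes = capacity_gb * 1e9              # assumes decimal gigabytes
    bandwidth_bytes_per_s = bandwidth_tb_per_s * 1e12  # assumes decimal terabytes
    return capacity_bytes / bandwidth_bytes_per_s


# 80GB of memory read once at 2TB per second
print(full_pass_seconds(80, 2))  # 0.04
```

In other words, at the quoted peak rate the entire 80GB could in principle be read in about four hundredths of a second.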

Key Features of A100 80GB

The A100 80GB includes the many groundbreaking features of the NVIDIA Ampere architecture:

- Third-Generation Tensor Cores: Provides up to 20x AI throughput of the previous Volta generation with a new format TF32, as well as 2.5x FP64 for HPC, 20x INT8 for AI inference and support for the BF16 data format.

- Larger, Faster HBM2e GPU Memory: Doubles the memory capacity and is the first in the industry to offer more than 2TB per second of memory bandwidth.

- Multi-Instance GPU (MIG) technology: Doubles the memory per isolated instance, providing up to seven MIG instances with 10GB each.

- Structural Sparsity: Delivers up to 2x speedup when inferencing sparse models.

- Third-Generation NVLink and NVSwitch: Provides twice the GPU-to-GPU bandwidth of the previous generation interconnect technology, accelerating data transfers to the GPU for data-intensive workloads to 600 gigabytes per second.
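As an illustration of the structural sparsity item in the list above: the announcement does not spell out the pattern, but NVIDIA's Ampere materials describe a 2:4 scheme in which at most two of every four consecutive weights are nonzero. The sketch below prunes a list of weights to that pattern by keeping the two largest magnitudes in each group of four. It is a simplified illustration under that assumption, not NVIDIA's pruning tooling, and `prune_2_of_4` is a hypothetical helper name.

```python
def prune_2_of_4(weights):
    """Zero the two smallest-magnitude values in each group of four weights."""
    if len(weights) % 4 != 0:
        raise ValueError("number of weights must be a multiple of 4")
    pruned = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Positions of the two largest magnitudes within this group.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(v if i in keep else 0.0 for i, v in enumerate(group))
    return pruned


weights = [0.1, -0.9, 0.5, 0.2, 1.0, 0.0, -0.3, 0.05]
print(prune_2_of_4(weights))
# [0.0, -0.9, 0.5, 0.0, 1.0, 0.0, -0.3, 0.0]
```

Because half of the values in every group are known to be zero, hardware that understands the pattern can skip them, which is where a speedup of up to 2x would come from.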

Availability

Leading systems providers Atos, Dell Technologies, Fujitsu, GIGABYTE, Hewlett Packard Enterprise, Inspur, Lenovo, Quanta and Supermicro are expected to begin offering systems built using HGX A100 integrated baseboards featuring A100 80GB GPUs in the first half of next year.
