Announced at GTC and becoming available as an add-in card (AIC) at ISC, NVIDIA A100 GPUs are now coming to Google Cloud. Arriving only about a month after the initial announcement, this is an unusually fast move for a new GPU into a major public cloud. Google is the first major public cloud to offer the A100, and with it NVIDIA's Ampere architecture, through its Accelerator-Optimized VM (A2) instance family.

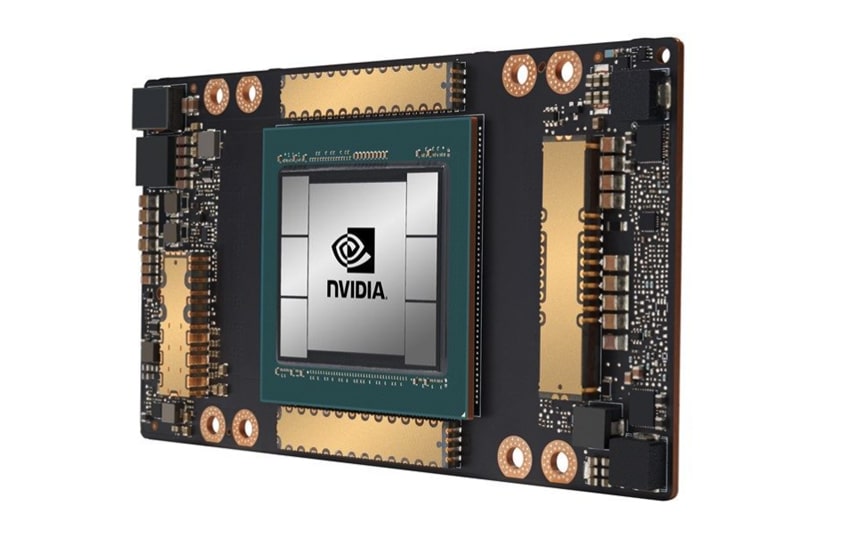

As we noted at the initial announcement, the NVIDIA A100 is NVIDIA's first 7nm GPU. It is based on NVIDIA's Ampere architecture and contains 54 billion transistors. Like previous NVIDIA data center GPUs, the A100 includes Tensor Cores: specialized units designed to quickly perform fused matrix multiply-and-add operations, the core calculation in deep learning training and inference. A beefier GPU brings beefier Tensor Cores. Previous generations of Tensor Cores topped out at half-precision (FP16) inputs with 32-bit (FP32) accumulation; the A100's Tensor Cores add support for 64-bit floating-point (FP64) operations, allowing much greater precision.
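To make the operation concrete, here is a minimal pure-Python sketch of the multiply-and-add a Tensor Core performs, D = A × B + C, on a small tile. It is an illustration only, not how the hardware or CUDA exposes it: the `mma` and `round_fp32` names are hypothetical, and the optional `fp32` flag simply rounds every intermediate result to FP32 (using the standard `struct` module) to show how a narrower format can lose a small contribution that FP64 keeps.

```python
import struct
from typing import List

Matrix = List[List[float]]


def round_fp32(x: float) -> float:
    """Round a Python float (FP64) to the nearest FP32 value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def mma(a: Matrix, b: Matrix, c: Matrix, fp32: bool = False) -> Matrix:
    """Compute D = A x B + C for square tiles (illustrative only).

    If fp32 is True, every product and partial sum is rounded to FP32,
    mimicking a lower-precision accumulator; otherwise FP64 is used.
    """
    rnd = round_fp32 if fp32 else (lambda x: x)
    n = len(a)
    d = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            acc = rnd(c[i][j])  # start from the accumulator tile C
            for k in range(n):
                # multiply-accumulate: add A[i][k] * B[k][j] to the running sum
                acc = rnd(acc + rnd(a[i][k] * b[k][j]))
            d[i][j] = acc
    return d


# A tiny contribution that FP32 rounds away but FP64 preserves.
print(mma([[1.0]], [[1e-8]], [[1.0]], fp32=True))  # [[1.0]]
print(mma([[1.0]], [[1e-8]], [[1.0]]))             # [[1.00000001]]
```

Real Tensor Cores operate on fixed tile shapes in hardware; the loop here only shows the arithmetic being performed and why accumulator precision matters.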

Many cloud workloads need the kind of computing power that GPUs provide, including AI training and inference, data analytics, scientific computing, genomics, edge video analytics, and 5G services. NVIDIA says the A100 can boost training and inference performance by up to 20x over its predecessor, making it well suited to these workloads.

Google Compute Engine will use the A100 for everything from scaling up AI training and scientific computing, to scaling out inference applications, to enabling real-time conversational AI. The new A2 VMs come in a range of sizes to fit workloads of different scales, and support CUDA-enabled machine learning training and inference, data analytics, and high performance computing. For the largest workloads, Google offers the a2-megagpu-16g instance, which comes with 16 A100 GPUs for a total of 640GB of GPU memory and 1.3TB of system memory, all connected through NVSwitch with up to 9.6TB/s of aggregate bandwidth. For customers that don't need quite that much power, smaller A2 VMs will be available as well.
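The a2-megagpu-16g figures can be sanity-checked with simple division. The sketch below assumes decimal units (9.6TB/s = 9,600GB/s) and an even split of memory and bandwidth across the 16 GPUs; the `A2Shape` class and its fields are hypothetical helpers for illustration, not part of any Google Cloud API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class A2Shape:
    """Figures for an A2 machine shape as quoted in the announcement (hypothetical helper)."""
    name: str
    gpus: int
    total_gpu_memory_gb: int
    aggregate_bandwidth_gb_per_s: int  # NVSwitch aggregate bandwidth, decimal GB/s

    def gpu_memory_per_gpu_gb(self) -> int:
        # Assumes GPU memory is split evenly across all GPUs.
        return self.total_gpu_memory_gb // self.gpus

    def bandwidth_per_gpu_gb_per_s(self) -> int:
        # Even share of the aggregate figure, not a separately published number.
        return self.aggregate_bandwidth_gb_per_s // self.gpus


mega = A2Shape("a2-megagpu-16g", gpus=16, total_gpu_memory_gb=640,
               aggregate_bandwidth_gb_per_s=9600)
print(mega.gpu_memory_per_gpu_gb())       # 40
print(mega.bandwidth_per_gpu_gb_per_s())  # 600
```

The 40GB result lines up with the 40GB of memory on each A100, and integer arithmetic keeps the division exact.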

In the near future, Google Cloud will roll out A100 support for Google Kubernetes Engine, Cloud AI Platform and other Google Cloud services.
