On the opening day of Computex 2022, NVIDIA announced that Taiwan’s leading computer makers are set to release the first wave of systems powered by the NVIDIA Grace® CPU Superchip and the Grace Hopper Superchip. The new computer systems will address various workloads, including digital twins, AI, HPC, cloud graphics, and gaming.

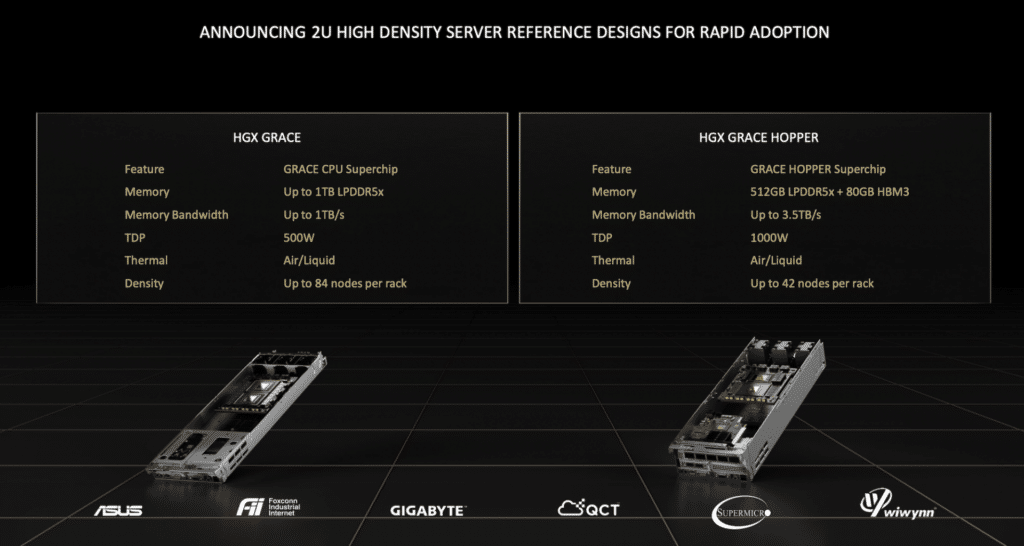

Server models from ASUS, Foxconn Industrial Internet, GIGABYTE, QCT, Supermicro, and Wiwynn are expected to begin shipping in the first half of 2023. Grace-powered systems will join x86 and other Arm-based servers, giving customers a broad range of options for building high-performance, energy-efficient data centers.

Grace CPU Superchip Reference Architecture

Ian Buck, vice president of Hyperscale and HPC at NVIDIA, said:

“A new type of data center is emerging — AI factories that process and refine mountains of data to produce intelligence — and NVIDIA is working closely with our Taiwan partners to build the systems that enable this transformation. These new systems from our partners, powered by our Grace Superchips, will bring the power of accelerated computing to new markets and industries globally.”

The servers are based on four new system designs featuring the Grace CPU Superchip and Grace Hopper Superchip, which NVIDIA announced at the recent GTC conference. These 2U designs provide the blueprints and server baseboards that original design manufacturers (ODMs) and original equipment manufacturers (OEMs) can use to bring systems to market for the NVIDIA CGX cloud gaming, NVIDIA OVX digital twin, and NVIDIA HGX AI and HPC platforms.

The two NVIDIA Grace Superchip technologies enable a broad range of compute-intensive workloads across a number of system architectures.

- The Grace CPU Superchip features two CPU chips connected coherently through an NVIDIA NVLink-C2C interconnect, providing up to 144 high-performance Armv9 cores with Scalable Vector Extensions (SVE) and a memory subsystem with 1 terabyte per second of bandwidth. NVIDIA says the design delivers the highest performance and twice the memory bandwidth and energy efficiency of today’s leading server processors, targeting the most demanding HPC, data analytics, digital twin, cloud gaming, and hyperscale computing applications.

- The Grace Hopper Superchip pairs an NVIDIA Hopper GPU with a Grace CPU over NVLink-C2C in an integrated module designed to address HPC and giant-scale AI applications. According to NVIDIA, the NVLink-C2C interconnect lets the Grace CPU transfer data to the Hopper GPU 15x faster than traditional CPUs.

The Grace server design portfolio includes single-baseboard systems in one-, two-, and four-way configurations across four workload-specific designs that server manufacturers can customize to customer needs:

- NVIDIA HGX Grace Hopper systems for AI training, inference, and HPC are available with the Grace Hopper Superchip and NVIDIA BlueField-3 DPUs.

- NVIDIA HGX Grace systems for HPC and supercomputing feature the CPU-only design with Grace CPU Superchip and BlueField-3.

- NVIDIA OVX systems for digital twins and collaboration workloads feature the Grace CPU Superchip, BlueField-3, and NVIDIA GPUs.

- NVIDIA CGX systems for cloud graphics and gaming feature the Grace CPU Superchip, BlueField-3, and NVIDIA A16 GPUs.

NVIDIA is also extending its NVIDIA-Certified Systems program to servers built on the NVIDIA Grace CPU Superchip and Grace Hopper Superchip, in addition to x86-based servers. The first certifications of OEM servers are expected soon after partner systems ship. The Grace server portfolio is optimized for NVIDIA HPC, NVIDIA AI, Omniverse, and NVIDIA RTX.

Learn more at NVIDIA.COM.
