Steve Poole is among many researchers around the globe tapping into the power of the network. The distinguished senior scientist at Los Alamos National Laboratory (LANL) foresees huge performance gains using accelerated computing that includes data processing units (DPUs) running on NVIDIA Quantum InfiniBand networks.

Across Europe and the U.S., other HPC developers are finding ways to offload communications and computing jobs to DPUs. They’re supercharging supercomputers with the power of Arm cores and accelerators inside NVIDIA BlueField-2 DPUs.

An Open API for DPUs

Poole’s work is one part of a broad, multi-year collaboration with NVIDIA that targets 30x speedups in computational multi-physics applications. It includes pioneering techniques in computational storage, pattern matching, and more using BlueField and its NVIDIA DOCA software framework.

The efforts will also help further define OpenSNAPI, an application interface anyone can use to harness DPUs. Poole chairs the OpenSNAPI project at the Unified Communication Framework, a consortium enabling heterogeneous computing for HPC apps. Its members include Arm, IBM, NVIDIA, U.S. national labs, and U.S. universities.

“DPUs are an integral part of our overall solution, and I see great potential in using DOCA and similar software packages in the near future,” Poole said.

10-30x Faster Flash Storage

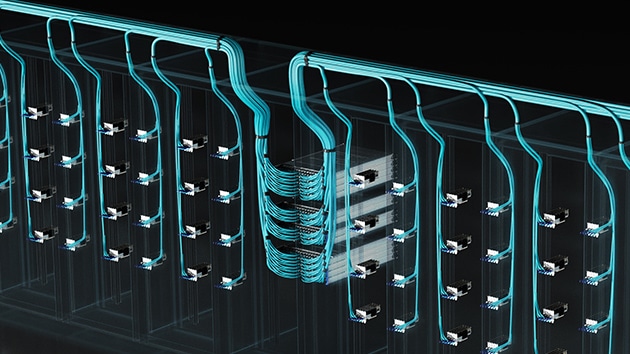

LANL is already feeling the power of in-network computing, thanks to a DPU-powered storage system it created. The Accelerated Box of Flash (ABoF, pictured) combines solid-state storage with DPU and InfiniBand accelerators to speed up performance-critical parts of a Linux file system. It’s up to 30x faster than similar storage systems and is set to become a key component in LANL’s infrastructure.

A functional prototype of the Accelerated Box of Flash. Hardware components are all standard for ease of adoption. Accelerators and storage devices are placed in the U.2 slots in the front bays, and an internal PCIe (Peripheral Component Interconnect Express) slot is used for accelerator hardware.

ABoF makes “more scientific discovery possible. Placing computation near storage minimizes data movement and improves the efficiency of both simulation and data-analysis pipelines,” said Dominic Manno, a LANL researcher, in a recent LANL blog.

An internal view of the Accelerated Box of Flash showing the connectivity of NVMe SSDs (front of chassis) to BlueField-2 DPUs. At top right is a PCIe location for an accelerator. This demonstration used an Eideticom NoLoad device.

DPUs in the Enterprise

We’ve seen our fair share of DPUs coming to mainstream enterprise data centers. VAST Data, for instance, uses DPUs in its new Ceres all-flash storage nodes. And while it’s a competing technology, Fungible too has turned to DPUs to create its storage and disaggregated GPU offerings. Clearly, we’re in the early stages, but network and systems administrators should be getting up to speed on what DPUs can do to enable more efficient systems and application delivery.
