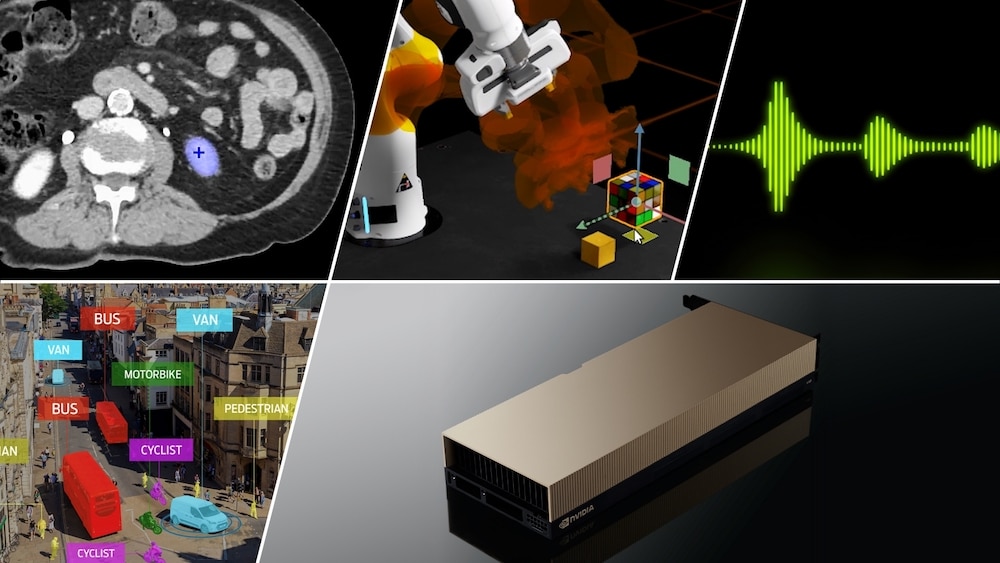

NVIDIA kicked off GTC 2022 with a keynote by CEO Jensen Huang that was heavy on impressive graphics and animation. The keynote had something for everyone: a new GeForce RTX 40 Series GPU for gamers; neural rendering for games and applications; NVIDIA Hopper entering full production; large language model cloud services; Omniverse Cloud services; OVX computing systems; a new GPU for designers and creators; DRIVE Thor for automotive; Jetson Orin Nano for entry-level AI; and the IGX edge AI computing platform.

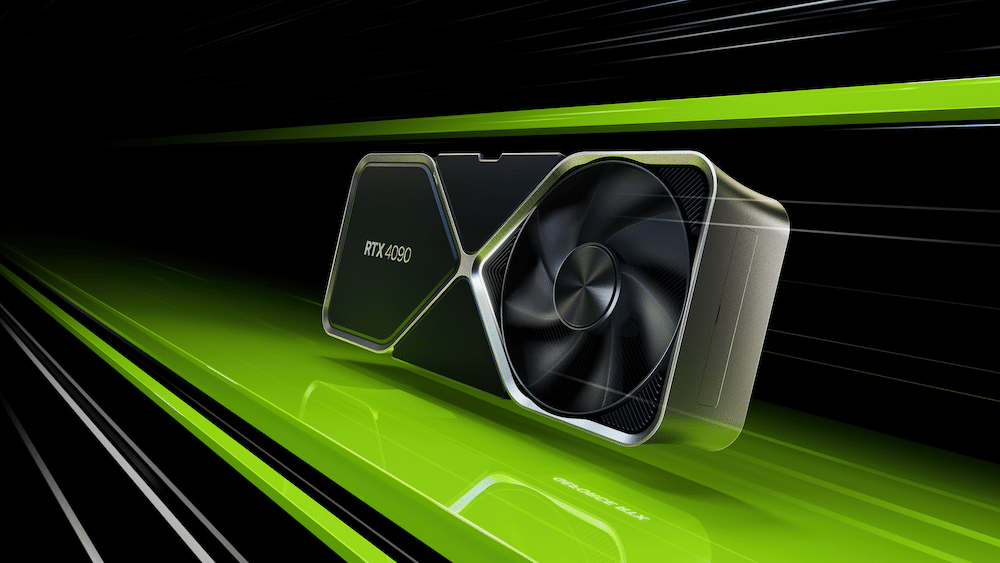

GeForce RTX 40

First on the agenda was the announcement of the next-generation GeForce RTX 40 Series GPUs powered by Ada Lovelace, designed to deliver extreme performance for gamers and creators. The new flagship model, the RTX 4090 GPU, delivers up to 4x the performance of its predecessor.

As the first GPUs based on the new NVIDIA Ada Lovelace architecture, the RTX 40 Series delivers leaps in performance and efficiency and represents a new era of real-time ray tracing and neural rendering, which uses AI to generate pixels. The RTX 40 Series GPUs feature a range of new technological innovations, including:

- Streaming multiprocessors with up to 83 teraflops of shader power — 2x over the previous generation.

- Third-generation RT Cores with up to 191 effective ray-tracing teraflops — 2.8x over the previous generation.

- Fourth-generation Tensor Cores with up to 1.32 Tensor petaflops — 5x over the previous generation using FP8 acceleration.

- Shader Execution Reordering (SER) improves execution efficiency by rescheduling shading workloads on the fly to utilize the GPU’s resources better. SER improves ray-tracing performance up to 3x and in-game frame rates by up to 25%.

- Ada Optical Flow Accelerator with 2x faster performance allows DLSS 3 to predict movement in a scene, enabling the neural network to boost frame rates while maintaining image quality.

- Architectural improvements tightly coupled with custom TSMC 4N process technology result in an up to 2x leap in power efficiency.

- Dual NVIDIA Encoders (NVENC) cut export times by up to half and feature AV1 support. The NVENC AV1 encoder is being adopted by OBS, Blackmagic Design's DaVinci Resolve, Discord, and more.
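The Shader Execution Reordering item in the list above can be illustrated with a toy model. This is a conceptual sketch only, not NVIDIA's implementation: it assumes a warp of 32 threads must run one shading pass per distinct material it contains, and it uses sorting as a stand-in for the hardware's rescheduling. The function names, the warp size, and the eight hypothetical materials are all assumptions made for illustration.

```python
import random

WARP_SIZE = 32  # assumed number of threads that execute in lockstep


def divergent_passes(material_ids, warp_size=WARP_SIZE):
    """Count shading passes if each warp runs once per distinct material it holds."""
    passes = 0
    for start in range(0, len(material_ids), warp_size):
        warp = material_ids[start:start + warp_size]
        passes += len(set(warp))
    return passes


def reorder_by_material(material_ids):
    """Group hits that need the same shader so they fall into the same warps."""
    return sorted(material_ids)


# 1,024 ray hits spread randomly over 8 hypothetical materials.
rng = random.Random(0)
hits = [rng.randrange(8) for _ in range(1024)]

before = divergent_passes(hits)
after = divergent_passes(reorder_by_material(hits))
print(f"shading passes before reordering: {before}")
print(f"shading passes after reordering:  {after}")
```

In this model, grouping similar work cuts the pass count sharply because most warps end up with a single material. Real gains depend on the scene and on the cost of reordering itself, which the sketch ignores.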

DLSS 3 Generates Entire Frames for Faster Game Play

Next was NVIDIA DLSS 3, the next revolution in the company’s Deep Learning Super Sampling neural graphics technology for games and creative apps. The AI-powered technology can generate entire frames for extremely fast gameplay, overcoming CPU performance limitations in games by allowing the GPU to generate entire frames independently.

The technology is coming to popular game engines like Unity and Unreal Engine and has received support from leading game developers, with more than 35 games and apps coming soon.
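As a rough illustration of why frame generation helps in CPU-limited games, the sketch below models the displayed frame rate as the rendered rate (capped by the slower of CPU and GPU) multiplied by one plus the number of generated frames per rendered frame. The function name, the example frame rates, and the ratio of one generated frame per rendered frame are assumptions for illustration, and the model ignores the cost of generating frames.

```python
def displayed_fps(cpu_fps, gpu_fps, generated_per_rendered=0):
    """Toy model: rendered frames are limited by the slower of CPU and GPU;
    generated frames are added on top without involving the CPU."""
    if generated_per_rendered < 0:
        raise ValueError("generated_per_rendered must be non-negative")
    rendered = min(cpu_fps, gpu_fps)
    return rendered * (1 + generated_per_rendered)


# Hypothetical CPU-bound game: the CPU can prepare 60 frames per second,
# while the GPU could render 200.
print(displayed_fps(60, 200))                            # 60
print(displayed_fps(60, 200, generated_per_rendered=1))  # 120
```

In the CPU-bound case, a faster GPU alone would not raise the frame rate, while generated frames do, which is the limitation the paragraph above describes.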

H100 Tensor Core GPU

The NVIDIA H100 Tensor Core GPU is in full production, and partners are planning an October rollout for the first wave of products and services based on the NVIDIA Hopper architecture.

The H100, announced in March, is built with 80 billion transistors and benefits from a powerful new Transformer Engine and an NVIDIA NVLink interconnect to accelerate the largest AI models, like advanced recommender systems and large language models, and to drive innovations in such fields as conversational AI and drug discovery.

The H100 GPU is powered by several key innovations in NVIDIA’s accelerated compute data center platform, including second-generation Multi-Instance GPU, confidential computing, fourth-generation NVIDIA NVLink, and DPX Instructions.

A five-year license for the NVIDIA AI Enterprise software suite is now included with H100 for mainstream servers. This optimizes the development and deployment of AI workflows and ensures organizations have access to the AI frameworks and tools needed to build AI chatbots, recommendation engines, vision AI and more.

Coming to a Platform Near You

For customers who want to try the new technology immediately, NVIDIA announced that H100 on Dell PowerEdge servers is now available on NVIDIA LaunchPad, which provides free hands-on labs, giving companies access to the latest hardware and NVIDIA AI software.

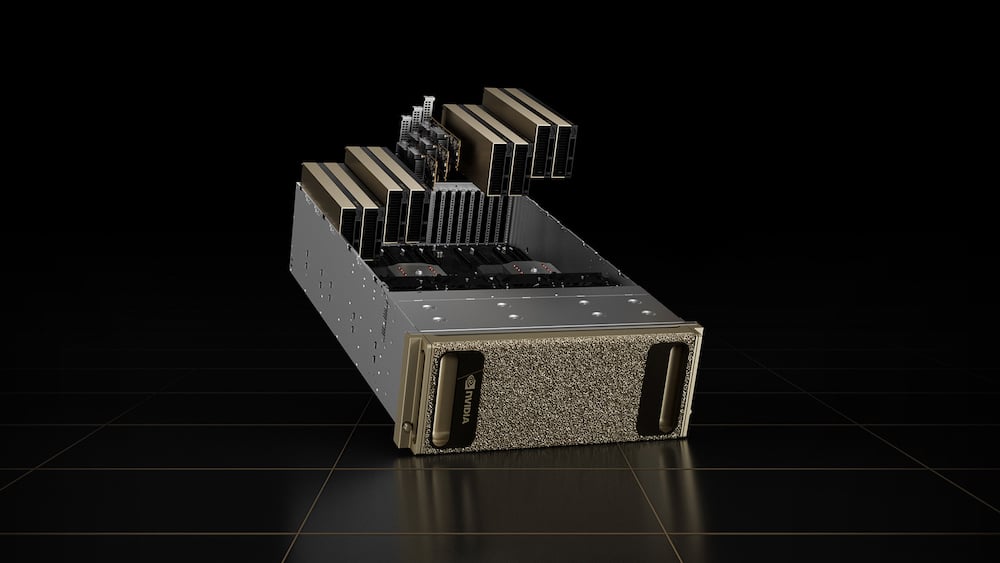

Customers can also begin ordering NVIDIA DGX H100 systems, which include eight H100 GPUs and deliver 32 petaflops of performance at FP8 precision. NVIDIA Base Command and NVIDIA AI Enterprise software power every DGX system, enabling deployments from a single node to an NVIDIA DGX SuperPOD, supporting advanced AI development of large language models and other massive workloads.
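The DGX H100 figures above imply a per-GPU number that is easy to work out. The sketch below does that arithmetic and, as an idealized assumption, scales the peak figure linearly across a hypothetical number of systems. The function names and the four-system example are not from NVIDIA, and real multi-node throughput will be lower than a simple multiple of peak.

```python
GPUS_PER_DGX_H100 = 8        # from the article
DGX_H100_FP8_PETAFLOPS = 32  # from the article, peak at FP8 precision


def fp8_petaflops_per_gpu():
    """Peak FP8 petaflops implied for a single H100 in a DGX H100."""
    return DGX_H100_FP8_PETAFLOPS / GPUS_PER_DGX_H100


def cluster_fp8_petaflops(num_systems):
    """Idealized peak for several DGX H100 systems, assuming linear scaling."""
    if num_systems < 0:
        raise ValueError("num_systems must be non-negative")
    return DGX_H100_FP8_PETAFLOPS * num_systems


print(fp8_petaflops_per_gpu())   # 4.0
print(cluster_fp8_petaflops(4))  # 128
```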

Partners building systems include Atos, Cisco, Dell Technologies, Fujitsu, GIGABYTE, Hewlett Packard Enterprise, Lenovo, and Supermicro.

Additionally, some of the world’s leading higher education and research institutions will use H100 to power their next-generation supercomputers. Among them are the Barcelona Supercomputing Center, Los Alamos National Lab, Swiss National Supercomputing Centre (CSCS), Texas Advanced Computing Center, and the University of Tsukuba.

H100 Coming to the Cloud

Amazon Web Services, Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure will be among the first to deploy H100-based instances in the cloud starting next year.

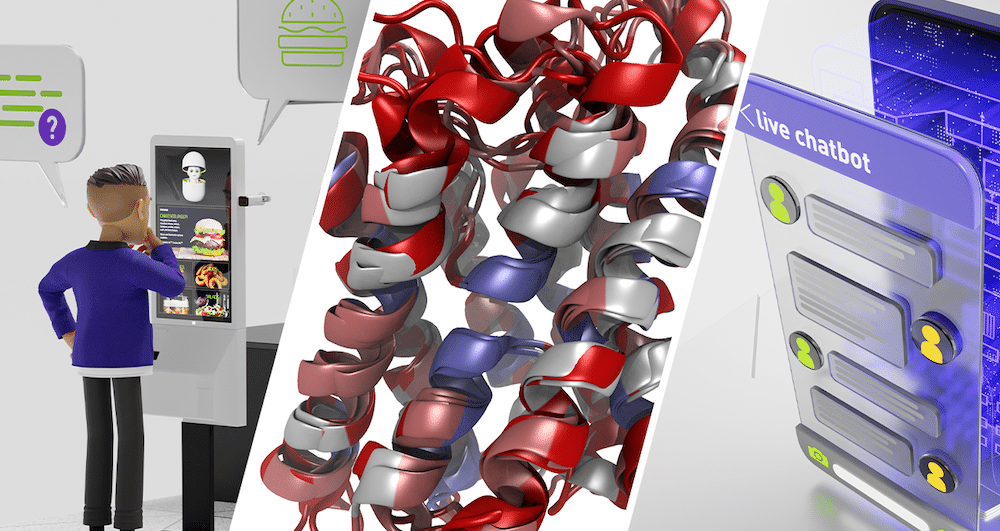

NVIDIA Large Language Model Cloud AI Services

NVIDIA announced two large language model cloud AI services: the NVIDIA NeMo Large Language Model Service and the NVIDIA BioNeMo LLM Service. These services enable developers to easily adapt LLMs and deploy customized AI applications for content generation, text summarization, chatbots, code development, protein structure and biomolecular property predictions, and more.

The NeMo LLM Service allows developers to rapidly tailor a number of pre-trained foundation models using a training method called prompt learning on NVIDIA-managed infrastructure. The NVIDIA BioNeMo Service is a cloud application programming interface (API) that expands LLM use cases beyond language and into scientific applications to accelerate drug discovery for pharma and biotech companies.

Omniverse

NVIDIA Omniverse Cloud is the company’s first software- and infrastructure-as-a-service offering. Omniverse Cloud is a suite of cloud services for artists, developers, and enterprise teams to design, publish, operate, and experience metaverse applications anywhere.

Using Omniverse Cloud, individuals and teams can design and collaborate on 3D workflows in one click, without the need for any local compute power. Roboticists can train, simulate, test, and deploy AI-enabled intelligent machines with increased scalability and accessibility. Autonomous vehicle engineers can generate physically based sensor data and simulate traffic scenarios to test various road and weather conditions for safe self-driving deployment.

Early supporters of Omniverse Cloud include RIMAC Group, WPP, and Siemens.

Omniverse Cloud Services

Omniverse Cloud services run on the Omniverse Cloud Computer, a computing system comprised of NVIDIA OVX for graphics and physics simulation, NVIDIA HGX for advanced AI workloads, and the NVIDIA Graphics Delivery Network (GDN), a global-scale distributed data center network for delivering high-performance, low-latency metaverse graphics at the edge.

Omniverse Cloud services include:

- Omniverse Nucleus Cloud — provides 3D designers and teams the freedom to collaborate and access a shared Universal Scene Description (USD)-based 3D scene and data. Nucleus Cloud enables any designer, creator, or developer to save changes, share, make live edits, and view changes in a scene from nearly anywhere.

- Omniverse App Streaming — enables users without NVIDIA RTX™ GPUs to stream Omniverse reference applications like Omniverse Create, an app for designers and creators to build USD-based virtual worlds; Omniverse View, an app for reviews and approvals; and NVIDIA Isaac Sim, for training and testing robots.

- Omniverse Replicator — enables researchers, developers, and enterprises to generate physically accurate 3D synthetic data and easily build custom synthetic-data generation tools to accelerate the training and accuracy of perception networks and easily integrate with NVIDIA AI cloud services.

- Omniverse Farm — enables users and enterprises to harness multiple cloud compute instances to scale out Omniverse tasks such as rendering and synthetic data generation.

- NVIDIA Isaac Sim — a scalable robotics simulation application and synthetic-data generation tool that powers photorealistic, physically accurate virtual environments to develop, test, and manage AI-based robots.

- NVIDIA DRIVE Sim — an end-to-end simulation platform to run large-scale, physically accurate multisensor simulations to support autonomous vehicle development and validation from concept to deployment, improving developer productivity and accelerating time to market.

OVX Computing Systems

NVIDIA announced the second generation of NVIDIA OVX, powered by the NVIDIA Ada Lovelace GPU architecture and enhanced networking technology, to deliver real-time graphics, AI, and digital twin simulation capabilities.

The new NVIDIA OVX systems are designed to build 3D virtual worlds using leading 3D software applications and to operate immersive digital twin simulations in NVIDIA Omniverse Enterprise, a scalable, end-to-end platform enabling enterprises to build and operate metaverse applications.

NVIDIA OVX is a computing system designed to power large-scale Omniverse digital twins. It will be delivered to some of the world’s most sophisticated design and engineering teams at companies like BMW Group and Jaguar Land Rover.

Powering the new OVX systems is the NVIDIA L40 GPU, also based on the NVIDIA Ada Lovelace GPU architecture, which brings the highest levels of power and performance for building complex industrial digital twins.

The L40 GPU’s third-generation RT Cores and fourth-generation Tensor Cores will deliver powerful capabilities to Omniverse workloads running on OVX, including accelerated ray-traced and path-traced rendering of materials, physically accurate simulations, and photorealistic 3D synthetic data generation. The L40 will also be available in NVIDIA-Certified Systems servers from major OEM vendors to power RTX workloads from the data center.

NVIDIA OVX also includes the NVIDIA ConnectX-7 SmartNIC, providing enhanced network and storage performance and the precision timing synchronization required for true-to-life digital twins. ConnectX-7 includes support for 200G networking on each port and fast in-line data encryption to speed up data movement and increase security for digital twins.

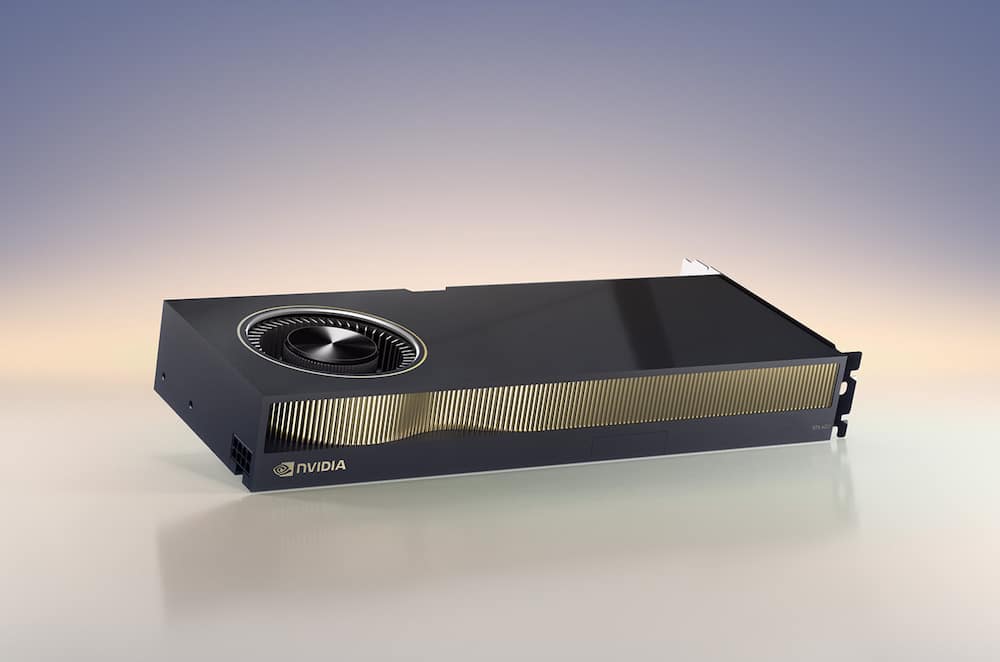

NVIDIA RTX 6000 Workstation GPU

The NVIDIA RTX 6000 Ada Generation GPU delivers real-time rendering, graphics, and AI. Designers and engineers can drive advanced, simulation-based workflows to build and validate more sophisticated designs. Artists can take storytelling to the next level, creating more compelling content and building immersive virtual environments. Scientists, researchers, and medical professionals can accelerate the development of life-saving medicines and procedures with supercomputing power on their workstations. The RTX 6000 delivers up to 2-4x the performance of the previous-generation RTX A6000.

Designed for neural graphics and advanced virtual world simulation, the RTX 6000, with Ada generation AI and programmable shader technology, is the ideal platform for creating content and tools for the metaverse with NVIDIA Omniverse Enterprise. The RTX 6000 enables users to create detailed content, develop complex simulations, and form the building blocks required to construct compelling and engaging virtual worlds.

Next-Generation RTX Technology

Powered by the NVIDIA Ada architecture, the NVIDIA RTX 6000 includes state-of-the-art NVIDIA RTX technology, such as:

- Third-generation RT Cores: Up to 2x the throughput of the previous generation with the ability to concurrently run ray tracing with either shading or denoising capabilities.

- Fourth-generation Tensor Cores: Up to 2x faster AI training performance than the previous generation with expanded support for the FP8 data format.

- CUDA cores: Up to 2x the single-precision floating-point throughput compared to the previous generation.

- GPU memory: Features 48GB of GDDR6 memory for working with the largest 3D models, render images, simulation, and AI datasets.

- Virtualization: Will support NVIDIA virtual GPU (vGPU) software for multiple high-performance virtual workstation instances, enabling remote users to share resources and drive high-end design, AI, and compute workloads.

- XR: Features 3x the video encoding performance of the previous generation for streaming multiple simultaneous XR sessions using NVIDIA CloudXR.

NVIDIA DRIVE Orin

NVIDIA announced the start of production of the NVIDIA DRIVE Orin autonomous vehicle computer, showcased new automakers adopting the NVIDIA DRIVE™ platform, and unveiled the next generation of its NVIDIA DRIVE Hyperion architecture. NVIDIA also announced that its automotive pipeline has increased to over $11 billion over the next six years, following a series of design wins with vehicle makers from around the globe.

More than 25 vehicle makers have adopted the NVIDIA DRIVE Orin system-on-a-chip (SoC). Starting this year, they are introducing software-defined vehicles built on the centralized AI compute platform.

DRIVE Hyperion with NVIDIA Orin serves as the central nervous system and AI brain for new energy vehicles, delivering constantly improving, cutting-edge AI features while ensuring safe and secure driving capabilities.

Also announced was the next generation of the DRIVE Hyperion architecture, built on the Atlan computer, for vehicles starting to ship in 2026. The DRIVE Hyperion platform is designed to scale across generations so that customers can leverage current investments for future architectures.

The next-generation platform will increase performance for processing sensor data and extend the operating domains of full self-driving. DRIVE Hyperion 9 will feature 14 cameras, nine radars, three lidars, and 20 ultrasonics as part of its sensor suite.
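The sensor counts quoted above can be captured in a small data structure, which makes the total easy to check. This is a minimal sketch: the `SensorSuite` class and its field names are hypothetical and are not part of any NVIDIA DRIVE API. Only the four counts come from the article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorSuite:
    """Hypothetical container for the per-type sensor counts of a vehicle platform."""
    cameras: int
    radars: int
    lidars: int
    ultrasonics: int

    def total(self) -> int:
        """Total number of sensors across all types."""
        return self.cameras + self.radars + self.lidars + self.ultrasonics


# Counts as stated for DRIVE Hyperion 9.
HYPERION_9 = SensorSuite(cameras=14, radars=9, lidars=3, ultrasonics=20)
print(HYPERION_9.total())  # 46
```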

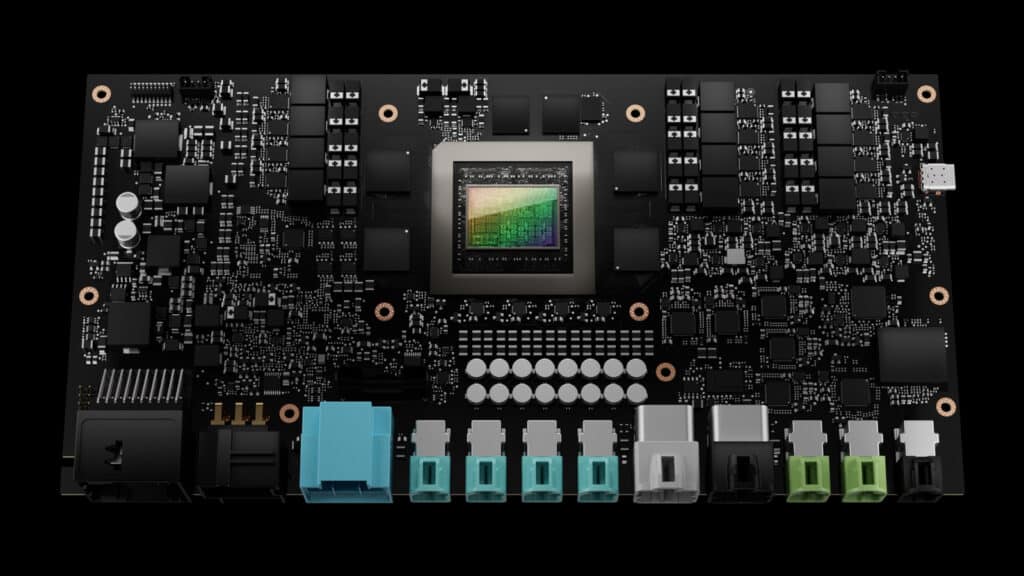

NVIDIA DRIVE Thor

NVIDIA DRIVE Thor is the next-generation centralized computer for safe and secure autonomous vehicles. DRIVE Thor achieves up to 2,000 teraflops and unifies intelligent functions, including automated and assisted driving, parking, driver and occupant monitoring, digital instrument cluster, in-vehicle infotainment (IVI), and rear-seat entertainment into a single architecture for greater efficiency and lower overall system cost.

The next-generation superchip comes packed with the cutting-edge AI capabilities first introduced in the NVIDIA Hopper Multi-Instance GPU architecture, along with the NVIDIA Grace CPU and NVIDIA Ada Lovelace GPU. DRIVE Thor, with MIG support for graphics and compute, enables IVI and advanced driver-assistance systems to run domain isolation, which allows concurrent time-critical processes to run without interruption. Available for automakers’ 2025 models, it will accelerate production roadmaps by bringing higher performance and advanced features to market in the same timeline.

NVIDIA Jetson Orin Nano

NVIDIA announced the expansion of the NVIDIA Jetson lineup with the launch of new Jetson Orin Nano system-on-modules that deliver up to 80x the performance of the prior generation, setting a new standard for entry-level edge AI and robotics.

The NVIDIA Jetson family now spans six Orin-based production modules supporting a full range of edge AI and robotics applications. This includes the Orin Nano, delivering up to 40 trillion operations per second (TOPS) of AI performance in the smallest Jetson form factor, up to the AGX Orin, delivering 275 TOPS for advanced autonomous machines.

Jetson Orin features an NVIDIA Ampere architecture GPU, Arm-based CPUs, next-generation deep learning and vision accelerators, high-speed interfaces, fast memory bandwidth, and multimodal sensor support. This performance and versatility empower more customers to commercialize products that once seemed impossible, from engineers deploying edge AI applications to Robot Operating System (ROS) developers building next-generation intelligent machines.

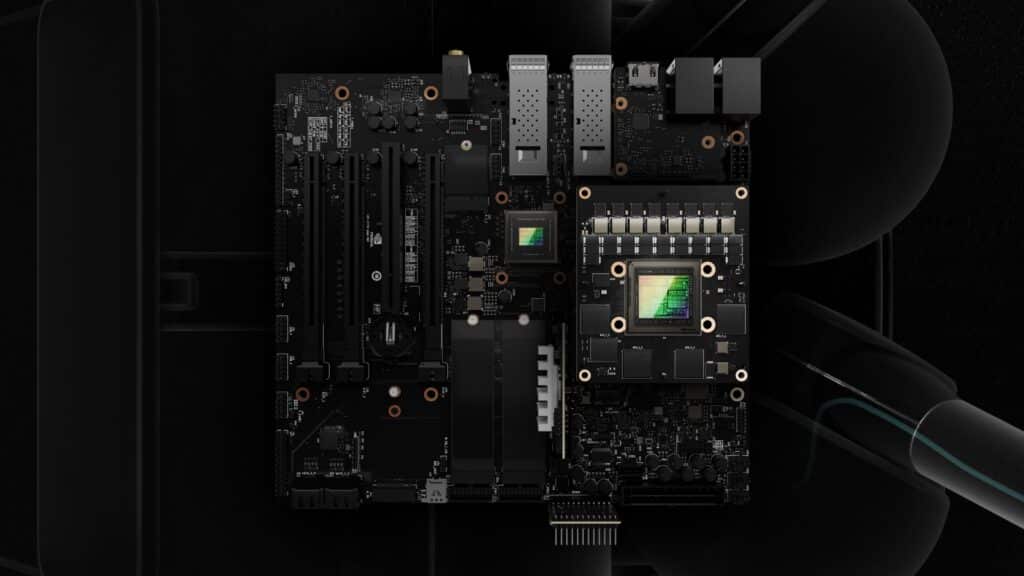

NVIDIA IGX Edge AI

The NVIDIA IGX platform was announced today. IGX is used for high-precision edge AI, bringing advanced security and proactive safety to sensitive industries such as manufacturing, logistics, and healthcare. In the past, such industries required costly solutions custom-built for specific use cases, but the IGX platform is easily programmable and configurable to suit different needs.

IGX provides an additional layer of safety in highly regulated physical-world factories and warehouses for manufacturing and logistics. For medical edge AI use cases, IGX delivers secure, low-latency AI inference to address the clinical demand for instantaneous insights from various instruments and sensors for medical procedures, such as robotic-assisted surgery and patient monitoring.

NVIDIA IGX Platform — Ensuring Compliance in Edge AI

The NVIDIA IGX platform is a powerful combination of hardware and software that includes NVIDIA IGX Orin, a powerful, compact, and energy-efficient AI supercomputer for autonomous industrial machines and medical devices.

IGX Orin developer kits will be available early next year for enterprises to prototype and test products. Each kit has an integrated GPU and CPU for high-performance AI compute and an NVIDIA ConnectX-7 SmartNIC to deliver high-performance networking with ultra-low latency and advanced security.

Also included is a powerful software stack with critical security and safety capabilities that can be programmed and configured for different use cases. These features allow enterprises to add proactive safety into environments where humans and robots work side-by-side, such as warehouse floors and operating rooms.

The IGX platform can run NVIDIA AI Enterprise software, optimizing the development and deployment of AI workflows, and ensuring organizations have access to necessary AI frameworks and tools. NVIDIA is also working with operating system partners like Canonical, Red Hat, and SUSE to bring full-stack, long-term support to the platform.

For management of IGX in industrial and medical environments, NVIDIA Fleet Command allows organizations to deploy secure, over-the-air software and system updates from a central cloud console.
