NVIDIA GTC 2024 is here; back in person for the first time in many years. Jordan is at the event live bringing all of the latest news and analysis on the leading AI event.

NVIDIA’s GPU Technology Conference (GTC) is back in person after a number of years as a virtual-only event. This is a fantastic event for innovators, researchers, scientists, and technology enthusiasts alike to see the latest technology from the tech giant. This year’s NVIDIA GTC 2024, much anticipated in the tech community, showcases the latest breakthroughs in AI, deep learning, autonomous vehicles, and the new Blackwell architecture.

Here are the highlights from NVIDIA’s CEO, Jensen Huang’s, Monday keynote. It surrounded NVIDIA’s new Blackwell architecture, networking, quantum computing advancements, and software stack updates.

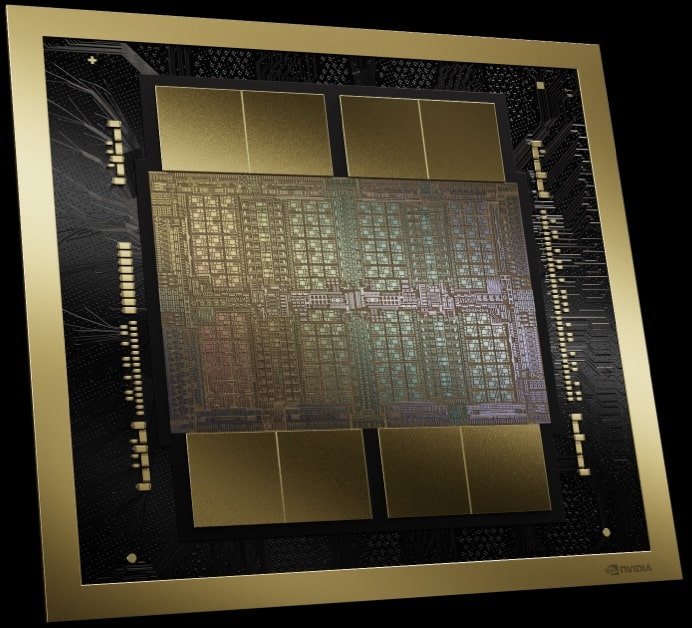

NVIDIA Blackwell

Six groundbreaking technologies poised to redefine accelerated computing are at the heart of Blackwell’s innovation. From enhancing data processing to revolutionizing drug design and beyond, NVIDIA is setting a new standard. High-profile adopters like Amazon and Microsoft are already lining up in anticipation of Blackwell’s transformative potential.

Let’s zoom into the engineering marvel that NVIDIA has accomplished. The Blackwell GPUs pack a whopping 208 billion transistors across two chips, made possible by leveraging a two-reticle limit 4NP TSMC process. This approach challenges the boundaries of semiconductor fabrication and introduces a novel way of connecting chips with a blazing 10TB/s interface. This move towards chiplet designs reflects NVIDIA’s ambition to push beyond traditional boundaries.

| Specification | H100 | B100 | B200 |

| Max Memory | 80GB HBM3 | 192GB HBM3e | 192GB HBM3e |

| Memory Bandwidth | 3.35TB/s | 8TB/s | 8TB/s |

| FP4 | – | 14 PFLOPS | 18 PFlops |

| FP6 | – | 7 PFLOPS | 9 PFLOPS |

| FP8/INT8 | 3.958 PFLOPS/POPS | 7 PFLOPS/POPS | 9 PFLOPS/POPS |

| FP16/BF16 | 1979 TFLOPS | 3.5 PFLOPS | 4.5 PFLOPS |

| TF32 | 989 TFLOPS | 1.8 PFLOPS | 2.2 PFLOPS |

| FP64 | 67 TFLOPS | 30 TFLOPS | 40 TFLOPS |

| Max Power Consumption | 700W | 700W | 1000W |

Note: All numbers here represent performance for sparse matrix computations.

It’s not just about packing more transistors. The introduction of FP4 and FP6 compute capability brings a new level of efficient model training, albeit with a slight trade-off in model performance. This trade-off is a nuanced aspect of the platform, reflecting a complex balancing act between efficiency and precision.

The second-generation transformer engine within Blackwell enables a leap in compute, bandwidth, and model size capabilities when using FP4, bringing improvements that are vital for the future of AI development. Furthermore, integrating PCIe Gen6 and new HBM3e memory technology delivers a substantial boost in bandwidth, which, when coupled with the fifth-generation NVLink, doubles the bandwidth from the previous generation to a staggering 1.8TB/s.

One of the more intriguing introductions is the RAS Engine, which enhances reliability, availability, and serviceability across massive AI deployments. This innovation could significantly improve model flop utilization, addressing one of the critical challenges in scaling AI applications.

With Blackwell, NVIDIA brings new confidential computing capabilities, including the first Trusted Execution Environment(TEE)-I/O capable GPU in the industry, extending the TEE beyond the CPUs to GPUs. This ensures secure and fast processing of private data, crucial for training generative AI. This innovation is particularly significant for industries dealing with privacy regulations or proprietary information. NVIDIA Blackwell’s Confidential Computing delivers unparalleled security without compromising performance, offering nearly identical throughput to unencrypted modes. This advancement not only secures large AI models but also enables confidential AI training and federated learning, safeguarding intellectual property in AI.

The Decompression Engine in NVIDIA Blackwell marks a significant leap in data analytics and database workflows. This engine can decompress data at an astonishing rate of up to 800GB/s, significantly enhancing the performance of data analytics and reducing the time to insights. In collaboration with 8TB/s HBM3e memory and the high-speed NVLink-C2C interconnect, it accelerates database queries, making Blackwell 18 times faster than CPUs and 6 times faster than previous NVIDIA GPUs in query benchmarks. This technology supports the latest compression formats and positions NVIDIA Blackwell as a powerhouse for data analytics and science, drastically speeding up the end-to-end analytics pipeline.

Despite the technical marvels, NVIDIA’s claim of reducing LLM inference operating costs and energy by up to 25x raises eyebrows, particularly given the lack of detailed power consumption data. This claim, while noteworthy, may benefit from further clarification to gauge their impact fully.

In summary, NVIDIA’s Blackwell platform is a testament to the company’s relentless pursuit of pushing the boundaries of what’s possible in AI and computing. With its revolutionary technologies and ambitious goals, Blackwell is not just a step but a giant leap forward, promising to fuel various advancements across various industries. As we delve deeper into this era of accelerated computing and generative AI, NVIDIA’s innovations may be the catalysts for the next industrial revolution..

NVIDIA Blackwell HGX

Embracing the Blackwell architecture, NVIDIA refreshed its HGX server and baseboard series. This significant evolution from prior models brings a compelling change, notably reducing the total cost of ownership while impressively boosting performance. The comparison is striking—when pitting FP8 against FP4, there’s a remarkable 4.5x performance enhancement. Even when matching FP8 with its predecessor, the performance nearly doubles. This isn’t just about raw speed; it’s a leap forward in memory efficiency, showcasing an 8x surge in aggregate memory bandwidth.

| Specification | HGX H100 | HGX H200 | HGX B100 | HGX B200 |

| Max Memory | 640GB HBM3 | 1.1TB HBM3e | 1.5TB HBM3e | 1.5TB HBM3e |

| Memory Bandwidth | 7.2TB/s | 7.2TB/s | 8TB/s | 8 TB/s |

| FP4 | – | – | 112 PFLOPS | 144 PFLOPS |

| FP6 | – | – | 56 PFLOPS | 72 PFLOPS |

| FP8/INT8 | 32 PFLOPS/POPS | 32 PFLOPS/POPS | 56 PFLOPS/POPS | 72 PFLOPS/POPS |

| FP16/BF16 | 16 PFLOPS | 16 PFLOPS | 28 PFLOPS | 36 PFLOPS |

NVIDIA Grace-Blackwell SuperChip

Diving deeper into the intricacies of NVIDIA’s latest announcement, focusing on the GB200, the cornerstone of the Blackwell platform’s arsenal. With NVIDIA continuously pushing the envelope in high-performance computing, the GB200 represents a significant evolution in its GPU offerings, blending cutting-edge technology with strategic advancements in connectivity and scalability. The GB200 houses two B200 GPUs; this configuration departs from the previous generation’s GH200, which featured a one-to-one connection between a GPU and a Grace CPU. This time, both B200 GPUs are linked to the same Grace CPU via a 900GB/s chip-to-chip (C2C) link.

| Specification | GH200 | GB200 |

| Max Memory | 144GB HBM3e | 384GB HBM3e |

| Memory Bandwidth | 8TB/s | 16TB/s (Aggregate) |

| FP4 | – | 40 PFLOPS |

| FP6 | – | 20 PFLOPS |

| FP8/INT8 | 3.958 PFLOPS/POPS | 20 PFLOPS |

| FP16/BF16 | 1979 TFLOPS | 10 PFLOPS |

| TF32 | 989 TFLOPS | 5 PFLOPS |

| FP64 | 67 TFLOPS | 90 TFLOPS |

| PCIe Lanes | 4x PCIe Gen 5 x16 | 2x PCIe Gen 6 x16 |

| Max Power Consumption | 1000W | 2700W |

# Note: All numbers here represent performance for sparse matrix computations.

At first glance, the decision to maintain the 900GB/s C2C link from the previous generation might seem like a limitation. However, this design choice underscores a calculated strategy to leverage existing technologies while paving the way for new levels of scalability. The GB200’s architecture allows it to communicate with up to 576 GPUs at a speed of 1.8TB/s, courtesy of the fifth-generation NVLink. This level of interconnectivity is crucial for building massively parallel computing environments necessary for training and deploying the largest and most complex AI models.

NVIDIA Networking Stack Update

Integrating the GB200 with NVIDIA’s latest networking technologies, the Quantum-X800 InfiniBand and Spectrum-X800 Ethernet platforms raises interesting questions about connectivity and bandwidth. The mention of 800Gb/s capabilities hints at NVIDIA exploring the benefits PCIe Gen6 can bring to the table.

The GB200 configuration, with its dual-GPU setup and advanced networking options, represents NVIDIA’s vision for the future of HPC. This vision is not just about individual components’ raw power but how these components can be orchestrated in a coherent, scalable system. By enabling a higher degree of interconnectivity and maintaining a balance between compute power and data transfer rates, NVIDIA addresses some of the most critical challenges in AI research and development, particularly in handling exponentially growing model sizes and computational demands.

NVIDIA Fifth-Generation NVLink and NVLink Switches

The fifth-generation NVLink marks a significant milestone in high-performance computing and AI. This technology enhances the capacity to connect and communicate among GPUs, a crucial aspect for the rapidly evolving demands of foundational models in AI.

The fifth-generation NVLink boosts its GPU connectivity capacity to 576 GPUs, a substantial increase from the previous limit of 256 GPUs. This expansion is paired with a doubling of bandwidth compared to its predecessor, a critical enhancement for the performance of increasingly complex foundational AI models.

Each Blackwell GPU link boasts two high-speed differential pairs, similar to the Hopper GPU, but it achieves an effective bandwidth per link of 50GB/sec in each direction. These GPUs come equipped with 18 fifth-generation NVLink links, providing a staggering total bandwidth of 1.8 TB/s. This throughput is more than 14 times greater than that of current PCIe Gen 5.

Another remarkable feature is the NVIDIA NVLink Switch, which supports a 130TB/s GPU bandwidth in a single 72 GPU NVLink domain (NVL72), crucial for model parallelism. This switch also delivers a fourfold increase in bandwidth efficiency with the new NVIDIA Scalable Hierarchical Aggregation and Reduction Protocol (SHARP) FP8 support.

In addition, the NVIDIA Unified Fabric Manager (UFM) complements the NVLink Switch by providing robust and proven management for the NVLink compute fabric.

Exascale Compute in a Rack

Building upon the formidable foundation laid by its predecessor, the GraceHopper GH200 NVL32, the DGX GB200 NVL72 is not just an upgrade; it’s a cornerstone advancement to expand what’s possible in computational power and efficiency. The DGX GB200 NVL72 platform showcases staggering advancements across the board. Each DGX GB200 NVL72 system comprises 18x GB200 SuperChip nodes, comprising 2x GB200 each.

This platform more than doubles the number of GPUs from 32 to 72 and modestly increases the CPUs from 32 to 36. However, the leap in memory is notable, jumping from 19.5TB to an impressive 30TB. This expansion is not merely about more significant numbers; it’s about enabling a new echelon of computational capabilities, particularly in handling the most complex AI models and simulations.

One of the most jaw-dropping upgrades is the leap in computational performance. The platform jumps from 127 PetaFLOPS to 1.4 ExaFLOPS when comparing FP4 performance, marking an approximate 11x increase. This comparison illuminates NVIDIA’s dedication to pushing the boundaries of precision and speed, particularly in AI and machine learning. However, even when comparing FP8 to FP8, the platform achieves a 5.6x increase, from 127PFs to 720PFs, underscoring significant efficiency and computational power advancements.

The commitment to maintaining a completely water-cooled system echoes NVIDIA’s focus on sustainability and performance optimization. This approach enhances the system’s operational efficiency and aligns with broader industry trends toward more environmentally friendly data center technologies.

NVIDIA DGX SuperPOD powered by NVIDIA GB200 Grace Blackwell Superchips

NVIDIA also announced its next-generation AI supercomputer, the DGX SuperPOD, equipped with 8 NVIDIA GB200 NVL72 Grace Blackwell systems. This formidable setup is designed for handling trillion-parameter models, boasting 11.5 exaflops of AI supercomputing power at FP4 precision across its liquid-cooled, rack-scale architecture. Each GB200 NVL72 system includes 36 NVIDIA GB200 Superchips, promising a 30x performance increase over its H100 predecessors for large language model inference workloads.

According to Jensen Huang, NVIDIA’s CEO, the DGX SuperPOD aims to be the “factory of the AI industrial revolution.”

Quantum Simulation Cloud

NVIDIA also unveiled the Quantum Simulation Cloud service, enabling researchers to explore quantum computing across various scientific domains. Based on the open-source CUDA-Q platform, this service offers powerful tools and integrations for building and testing quantum algorithms and applications. Collaborations with the University of Toronto and companies like Classiq and QC Ware highlight NVIDIA’s effort to accelerate quantum computing innovation.

NVIDIA NIM Software Stack

Another significant announcement was the NVIDIA NIM software stack launch, offering dozens of enterprise-grade generative AI microservices. These services allow businesses to create and deploy custom applications on their platforms, optimizing inference on popular AI models and enhancing development with NVIDIA CUDA-X microservices for a wide range of applications. Jensen Huang emphasized the potential of these microservices to transform enterprises across industries into AI-powered entities.

OVX Computing Systems

In response to the rapid growth of generative AI in various industries, NVIDIA has introduced the OVX computing systems, a solution designed to streamline complex AI and graphics-intensive workloads. Recognizing the crucial role of high-performance storage in AI deployments, NVIDIA has initiated a storage partner validation program with leading contributors like DDN, Dell PowerScale, NetApp, Pure Storage, and WEKA.

The new program standardizes the process for partners to validate their storage appliances, ensuring optimal performance and scalability for enterprise AI workloads. Through rigorous NVIDIA testing, these storage systems are validated against diverse parameters, reflecting the challenging requirements of AI applications.

Furthermore, NVIDIA-certified OVX servers, powered by NVIDIA L40S GPUs and integrated with comprehensive software and networking solutions, offer a flexible architecture to fit varied data center environments. This approach not only accelerates computing where data resides but also caters to the unique needs of generative AI, ensuring efficiency and cost-effectiveness. The NVIDIA OVX servers are equipped with robust GPUs, offering enhanced compute capabilities, high-speed storage access, and low-latency networking. This is particularly vital for demanding applications like chatbots and search tools that require extensive data processing.

Currently available and shipping from global vendors like GIGABYTE, Hewlett Packard Enterprise, Lenovo, and Supermicro, NVIDIA-Certified OVX servers represent a significant leap in handling complex AI workloads, promising enterprise-grade performance, security, and scalability.

Closing Thoughts

Additionally, there were announcements in the space of Automotive, Robotics, Healthcare, and Generative AI. All of these announcements showcase NVIDIA’s relentless pursuit of innovation, offering advanced tools and platforms to drive the future of AI and computing across multiple domains. All of them are highly technical and have many complexities, especially in the case of quantum computing and software releases. Stay tuned for analysis on the announcements as we get more information on each of these new releases.

Engage with StorageReview

Newsletter | YouTube | Podcast iTunes/Spotify | Instagram | Twitter | TikTok | RSS Feed