NVIDIA leveraged GTC 2025 to announce new software advancing AI innovation and optimization.

During the GTC 2025 event, NVIDIA announced significant advancements in AI and decision optimization, unveiling the latest CUDA-X libraries and open-sourcing its powerful cuOpt optimization engine. These innovations dramatically accelerate data science workflows and real-time decision-making across industries.

As artificial intelligence and data science continue to evolve, the ability to rapidly process and analyze massive datasets has become a critical differentiator. NVIDIA’s CUDA-X libraries, built upon the CUDA platform, offer a collection of GPU-accelerated libraries that deliver significantly higher performance than traditional CPU-only alternatives.

The latest release, cuML 25.02, now available in open beta, lets data scientists and researchers accelerate scikit-learn, UMAP, and HDBSCAN workloads without code changes. This zero-code-change approach, first established by cuDF’s pandas accelerator mode (cudf.pandas) for DataFrame operations, now extends to machine learning tasks, which NVIDIA says can reduce computation times from hours to seconds.

cuOpt Goes Open Source

NVIDIA also announced it will open-source cuOpt, its AI-powered decision optimization engine. This decision makes the powerful software freely available to developers, researchers, and enterprises, ushering in a new era of real-time optimization at an unprecedented scale.

Decision optimization is critical for businesses worldwide, from logistics companies determining optimal truck routes to airlines rerouting flights during disruptions. Traditional optimization methods often struggle with the exponential complexity of these problems, taking hours or even days to compute solutions. cuOpt, powered by NVIDIA GPUs, addresses this by dynamically evaluating billions of variables simultaneously, including inventory levels, factory outputs, shipping delays, fuel costs, risk factors, and regulations, to deliver optimal solutions in near real-time.
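To make the complexity point concrete, here is a minimal sketch of why exhaustive search breaks down for routing. It is not cuOpt code and does not use any cuOpt API; the depot and stop coordinates are made-up values, and the brute-force approach is shown only because it is the simplest possible baseline.

```python
import itertools
import math


def route_length(depot, order):
    """Total length of a round trip: depot -> stops in the given order -> depot."""
    path = [depot, *order, depot]
    return sum(math.dist(a, b) for a, b in zip(path, path[1:]))


def brute_force_route(depot, stops):
    """Try every ordering of the stops and keep the shortest round trip."""
    best = min(itertools.permutations(stops),
               key=lambda order: route_length(depot, order))
    return route_length(depot, best), list(best)


# Hypothetical toy instance: one depot and three stops on a unit square.
depot = (0.0, 0.0)
stops = [(0.0, 1.0), (1.0, 1.0), (1.0, 0.0)]
best_length, best_order = brute_force_route(depot, stops)
print("shortest round trip:", best_length, best_order)

# The number of candidate orderings grows factorially with the stop count.
for n in (5, 10, 15, 20):
    print(f"{n} stops -> {math.factorial(n):,} orderings")
```

Three stops are trivial, but the loop at the end shows how quickly the search space grows, which is why production solvers rely on heuristics, relaxations, and parallel hardware rather than enumeration.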

Leading optimization companies, including Gurobi Optimization, IBM, FICO’s Xpress team, HiGHS, SimpleRose, and COPT, are already integrating or evaluating cuOpt to enhance their decision-making capabilities. Gurobi Optimization, for instance, is actively testing cuOpt solvers to refine first-order algorithms for next-level performance.

Early benchmarks demonstrate cuOpt’s remarkable performance:

- Linear programming acceleration: 70x faster on average than CPU-based solvers, with speedups ranging from 10x to 3,000x.

- Mixed-integer programming: 60x faster solves, as demonstrated by SimpleRose.

- Vehicle routing: 240x speedup in dynamic routing, enabling near-instantaneous route adjustments.

AI Data Platform for Accelerated Enterprise Storage

In addition, NVIDIA unveiled the NVIDIA AI Data Platform, a customizable reference architecture designed to accelerate AI inference workloads within enterprise storage systems. This new platform enables storage providers to integrate specialized AI query agents powered by NVIDIA’s accelerated computing, networking, and software technologies.

Leveraging NVIDIA AI Enterprise software, including NVIDIA NIM microservices for the new NVIDIA Llama Nemotron reasoning models and the NVIDIA AI-Q Blueprint, these AI query agents can rapidly generate insights from structured, semi-structured, and unstructured data sources. This includes text, PDFs, images, and videos.

The platform uses NVIDIA Blackwell GPUs, BlueField DPUs, Spectrum-X networking, and the NVIDIA Dynamo open-source inference library to enhance performance significantly. BlueField DPUs deliver up to 1.6x higher performance than traditional CPU-based storage solutions, while reducing power consumption by up to 50%. Spectrum-X networking further accelerates AI storage traffic by up to 48% compared to conventional Ethernet.

Leading storage providers, including DDN, Dell Technologies, VAST Data, HPE, Hitachi Vantara, IBM, NetApp, Nutanix, Pure Storage, and WEKA, are collaborating with NVIDIA to integrate these capabilities into their enterprise storage offerings. NVIDIA-Certified Storage providers plan to launch solutions based on the NVIDIA AI Data Platform starting this month.

NVIDIA Llama Nemotron Reasoning Models

NVIDIA also announced new models in the Llama Nemotron family, a series of open, business-ready AI reasoning models designed specifically for building advanced agents capable of solving complex tasks. Based on Llama, one of the most broadly adopted open models, the Llama Nemotron models have been algorithmically pruned to reduce model size, optimizing computational efficiency while preserving accuracy.

These models have been further post-trained to induce reasoning capabilities across critical benchmarks for math, tool calling, instruction following, and conversational tasks. NVIDIA is making these models openly available and releasing the datasets and training techniques used to achieve their high accuracy. These datasets comprise 60 billion tokens of NVIDIA-generated synthetic data, representing approximately 360,000 H100 inference hours and 45,000 human annotation hours, all openly accessible to developers.

A distinguishing feature of the Llama Nemotron models is the ability to toggle reasoning capabilities on or off, giving developers added flexibility. The models are available in three variants: Nano, Super, and Ultra. Nano delivers the highest reasoning accuracy in its class. Super provides optimal accuracy combined with the highest throughput on a single data center GPU, and Ultra offers maximum agentic accuracy optimized for multi-GPU servers at data center scale.
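As an illustration of the reasoning toggle, the sketch below builds an OpenAI-style chat request of the kind NIM microservices accept. It only constructs the payload and sends nothing. The model id is a placeholder, and the "detailed thinking on/off" system-prompt strings follow the published model cards as we understand them, so both should be treated as assumptions and checked against the documentation for the model actually deployed.

```python
import json


def build_chat_request(question, reasoning,
                       model="nvidia/llama-3.3-nemotron-super-49b-v1"):
    """Build an OpenAI-style chat payload with reasoning switched on or off.

    Assumptions: the model id is a placeholder, and the system-prompt
    strings should be verified against the model card you deploy.
    """
    mode = "on" if reasoning else "off"
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": f"detailed thinking {mode}"},
            {"role": "user", "content": question},
        ],
    }


payload = build_chat_request("How many weekdays are there in 3 weeks?",
                             reasoning=True)
print(json.dumps(payload, indent=2))
```

Because the switch lives in the request rather than in the deployment, the same endpoint could serve quick lookups with reasoning off and multistep problems with reasoning on.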

The Nano and Super models are available immediately as NIM microservices, accessible and downloadable at ai.nvidia.com, with Ultra planned for release soon.

NVIDIA Omniverse Blueprint Advances AI Factory Design and Simulation

Recognizing the growing demand for AI factories, NVIDIA unveiled the NVIDIA Omniverse Blueprint for AI factory design and operations.

During his keynote, NVIDIA founder and CEO Jensen Huang demonstrated how NVIDIA’s data center engineering team leveraged Omniverse Blueprint to plan, optimize, and simulate a 1-gigawatt AI factory. Connected to leading simulation tools such as Cadence Reality Digital Twin Platform and ETAP, engineers can test and optimize power, cooling, and networking long before construction begins.

The Omniverse Blueprint integrates OpenUSD libraries, enabling developers to aggregate 3D data from diverse sources, including NVIDIA accelerated computing systems and power or cooling units from Schneider Electric and Vertiv. The blueprint helps engineers address component integration, cooling efficiency, power reliability, and networking optimization challenges.

Breaking down traditional engineering silos, the Omniverse Blueprint allows multidisciplinary teams to collaborate in real-time, optimizing energy usage, eliminating potential failure points, and modeling real-world conditions. Real-time simulations enable faster decision-making, significantly reducing the risk of costly downtime.

With $1 trillion projected for AI-driven data center upgrades, NVIDIA’s Omniverse Blueprint is set to lead the transformation, helping AI factory operators stay ahead of evolving workloads, minimize downtime, and maximize efficiency.

New Isaac GR00T N1 Accelerates Humanoid Robotics

NVIDIA introduced new technologies at GTC 2025 to accelerate humanoid robotics development. At the heart of this announcement is NVIDIA Isaac GR00T N1, the world’s first open and fully customizable foundation model designed for generalized humanoid reasoning and skills.

GR00T N1 leverages a dual-system architecture modeled after human cognition. System 1 functions as a rapid-action module, analogous to human reflexes, generating precise robotic movements trained on a blend of human demonstrations and synthetic data from NVIDIA Omniverse. System 2, a slower-thinking module powered by a vision language model, interprets surroundings and instructions for deliberate, methodical decision-making. These capabilities enable GR00T N1 to generalize complex, multistep tasks across applications such as material handling, packaging, and inspection.

“Generalist robotics is entering a transformative era,” remarked NVIDIA CEO Jensen Huang. He demonstrated this potential with a humanoid robot from 1X Technologies performing household tasks autonomously, showcasing the capabilities achievable through minimal additional post-training.

Bernt Børnich, CEO of 1X Technologies, emphasized adaptability and learning in robotics, noting how NVIDIA’s GR00T N1 substantially streamlined the deployment of their robot NEO Gamma, fostering humanoids that serve as companions and practical assistants.

Leading robotics innovators Agility Robotics, Boston Dynamics, Mentee Robotics, and NEURA Robotics have received early access to GR00T N1, positioning them at the forefront of this robotics evolution.

Newton: Open-source Physics Engine

NVIDIA also announced Newton, an open-source physics engine developed with Google DeepMind and Disney Research. Newton is optimized specifically for robotics and is compatible with established simulation platforms such as MuJoCo and NVIDIA Isaac Lab. MuJoCo-Warp, another collaborative effort between Google DeepMind and NVIDIA, promises a 70x acceleration in robotics machine learning workloads.

Disney Research will integrate Newton into its robotic character platform, fueling expressive next-generation entertainment robots like the Star Wars-inspired BDX droids featured during Huang’s keynote. Kyle Laughlin, Senior Vice President at Walt Disney Imagineering Research & Development, highlighted Newton’s role in creating more interactive and emotionally engaging robotic characters for future Disney experiences.

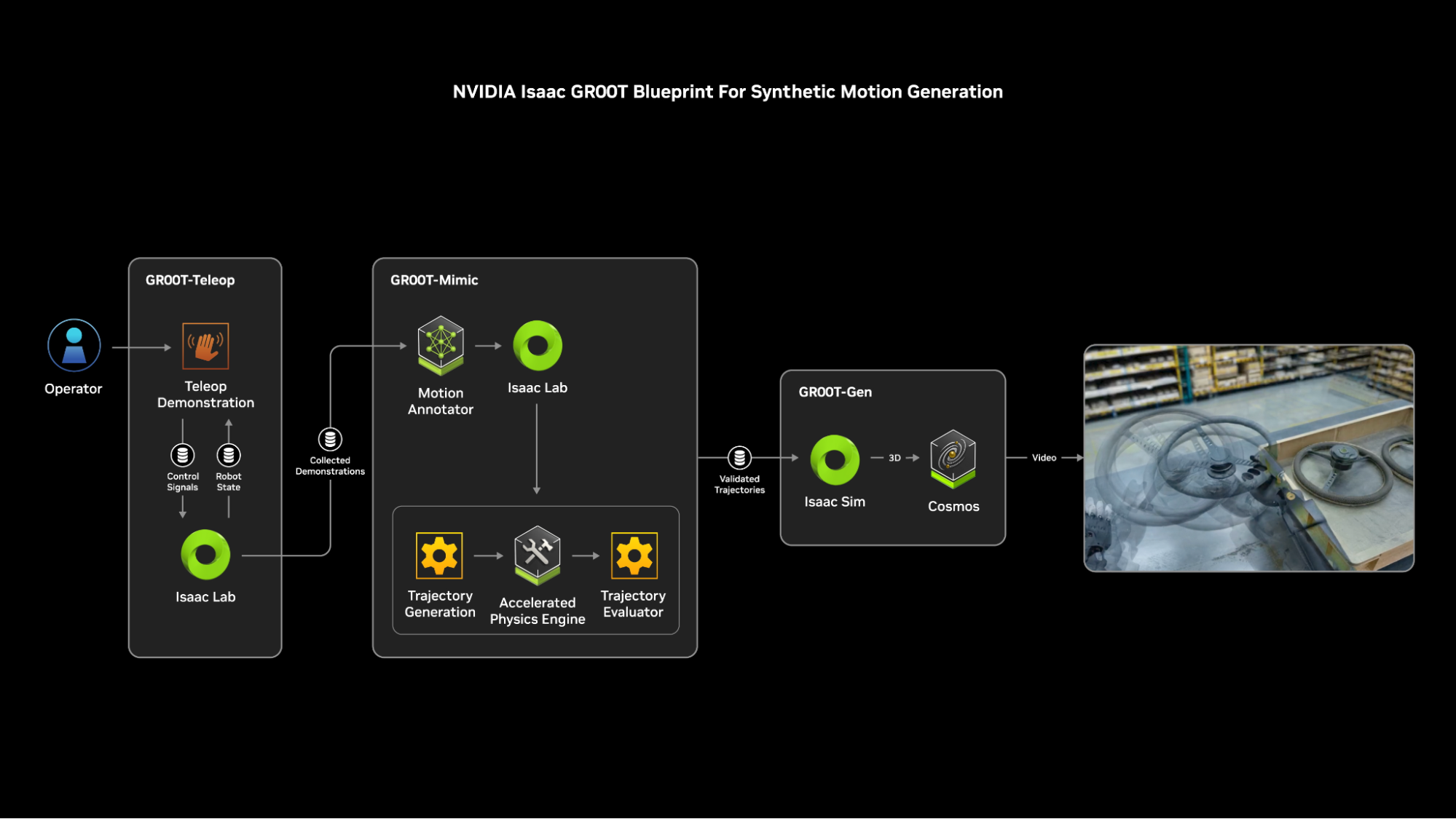

Isaac GR00T Blueprint Unveiled

GR00T N1 employs a dual-system architecture inspired by human cognition. System 1 represents a fast-thinking action model, reflecting human reflexes and intuition. System 2 serves as a slow-thinking model for deliberate and methodical decision-making.

Powered by a vision language model, System 2 analyzes its environment and the instructions it has received to plan actions. System 1 then translates these plans into precise, continuous movements for the robot. System 1 is trained using human demonstration data and a vast amount of synthetic data generated by the NVIDIA Omniverse platform.
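The division of labor between the two systems can be sketched as a control loop in which a slow planner runs occasionally and a fast controller runs on every tick. This is a toy illustration only, not GR00T N1’s implementation: the function names, the one-dimensional "joint position," the gain, and the replanning interval are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Plan:
    target: float  # desired position chosen by the slow planner


def system2_plan(observation, instruction):
    """Slow, deliberate step: a stand-in for a vision language model."""
    # Hypothetical rule: the instruction names the target position directly.
    return Plan(target=float(instruction["move_to"]))


def system1_act(position, plan, gain=0.5):
    """Fast, reflex-like step: move a fraction of the way toward the target."""
    return position + gain * (plan.target - position)


def run(instruction, steps=8, replan_every=4):
    """Run the loop: System 2 replans at a low rate, System 1 acts every tick."""
    position, plan, trace = 0.0, None, []
    for tick in range(steps):
        if tick % replan_every == 0:
            plan = system2_plan(position, instruction)
        position = system1_act(position, plan)
        trace.append(position)
    return trace


trace = run({"move_to": 1.0})
print(trace)
```

The point of the split is that the expensive reasoning step does not have to keep pace with the motor loop; the fast controller keeps producing smooth motion between plans.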

Addressing robotics developers’ perpetual need for extensive, high-quality training data, NVIDIA unveiled the Isaac GR00T Blueprint for synthetic motion generation. This blueprint rapidly produces massive synthetic datasets — 780,000 synthetic trajectories (equivalent to nine months of continuous human data) were generated in 11 hours. Integrating this synthetic data boosted GR00T N1’s performance by 40% compared to real-world data alone.
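A quick back-of-the-envelope calculation puts those figures in perspective. The trajectory count and the 11-hour runtime come from the announcement; treating "nine months of continuous human data" as nine 30-day months of round-the-clock collection is our assumption.

```python
trajectories = 780_000
generation_hours = 11

# Assumption: nine 30-day months of continuous, 24-hour data collection.
human_hours = 9 * 30 * 24

per_hour = trajectories / generation_hours
per_second = per_hour / 3600
time_compression = human_hours / generation_hours

print(f"{per_hour:,.0f} trajectories per hour")
print(f"{per_second:.1f} trajectories per second")
print(f"about {time_compression:.0f}x faster than real-time collection")
```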

To further support the robotics community, NVIDIA released GR00T N1 datasets and evaluation scenarios via Hugging Face and GitHub. Additionally, developers can access the Isaac GR00T Blueprint demo on build.nvidia.com and GitHub. The forthcoming Newton physics engine, expected later this year, will further complement these resources. NVIDIA’s DGX Spark personal AI supercomputer offers a turnkey solution for developers to expand GR00T N1’s capabilities for diverse robotics applications.

Advanced Cosmos World Foundation Models for Physical AI Development

At GTC 2025, NVIDIA announced a significant expansion of its Cosmos World Foundation Models (WFMs), offering developers open, fully customizable reasoning models to significantly enhance physical AI development. These innovations deliver unprecedented control and efficiency in generating synthetic training data for robotics and autonomous vehicles.

Among the key launches are two new blueprints powered by NVIDIA’s Omniverse and Cosmos platforms, accelerating synthetic data generation and post-training processes. Early adopters of these platforms include industry leaders such as 1X, Agility Robotics, Figure AI, Foretellix, Skild AI, and Uber, who are already leveraging Cosmos for improved data richness and scalability.

Cosmos Transfer, a significant advancement in synthetic data generation, takes structured video inputs—like segmentation maps, lidar scans, and pose estimation—and converts them into controllable, photorealistic video outputs. This streamlines the training of perception AI by efficiently transforming Omniverse-generated 3D simulations into realistic video datasets. Agility Robotics is among the first to integrate Cosmos Transfer, using it extensively to enhance its robot training processes.

Omniverse Blueprint Employs Cosmos Transfer

For autonomous vehicle simulations, NVIDIA introduced the Omniverse Blueprint that employs Cosmos Transfer to vary sensor data conditions, such as weather or lighting, significantly enriching datasets. Foretellix and Parallel Domain have adopted this blueprint, enabling enhanced and more diverse behavioral scenario simulations.

Additionally, the NVIDIA GR00T Blueprint utilizes both Omniverse and Cosmos Transfer platforms to rapidly generate large-scale synthetic manipulation motion datasets, dramatically reducing the time needed for data collection from days to mere hours.

Cosmos Predict and Cosmos Reason

Cosmos Predict, first showcased at CES in January, facilitates intelligent virtual world generation from multimodal inputs, including text, images, and videos. The latest Cosmos Predict models are enhanced to support multi-frame generation and predict intermediate actions from initial and final visual states. Utilizing NVIDIA’s Grace Blackwell NVL72 systems, developers achieve real-time, customizable virtual world generation. Organizations such as 1X, Skild AI, Nexar, and Oxa are leveraging Cosmos Predict for robotics and autonomous driving advancements.

NVIDIA also introduced Cosmos Reason, an open and customizable WFM capable of spatiotemporal reasoning. This model employs chain-of-thought methods to analyze and interpret video data, predicting the outcomes of interactions and describing them in natural language. Cosmos Reason streamlines data annotation, improves existing WFMs, and supports the creation of high-level action planners for physical AI systems.

NVIDIA offers robust post-training capabilities using PyTorch scripts or NVIDIA NeMo on DGX Cloud to expedite data curation and model refinement. NeMo Curator further accelerates large-scale video data processing, with Linker Vision, Milestone Systems, Virtual Incision, Uber, and Waabi adopting these solutions for advanced AI applications.

Aligned with NVIDIA’s responsible AI commitment, Cosmos WFMs incorporate transparent guardrails and content identification through a collaboration with Google DeepMind’s SynthID watermarking technology.

Cosmos WFMs are available in the NVIDIA API catalog and the Vertex AI Model Garden on Google Cloud. Cosmos Predict and Cosmos Transfer can be accessed openly on Hugging Face and GitHub, while Cosmos Reason is available in early access.

Oracle and NVIDIA Partner to Accelerate Enterprise AI Innovation

Oracle and NVIDIA have announced a groundbreaking integration of NVIDIA’s accelerated computing and inference software with Oracle’s AI infrastructure and generative AI services. This partnership is designed to speed the global deployment of agentic AI applications, significantly enhancing organizations’ capabilities in AI-driven workloads.

The integration allows Oracle Cloud Infrastructure (OCI) customers direct access to over 160 AI tools and more than 100 NVIDIA NIM microservices via the OCI Console. Oracle and NVIDIA are collaborating on no-code deployment solutions through Oracle and NVIDIA AI Blueprints and optimizing AI vector search capabilities in Oracle Database 23ai using NVIDIA’s cuVS library.

Tailored Solutions for Enterprise AI

Integrating NVIDIA AI Enterprise software into OCI Console dramatically reduces deployment times for reasoning models. Customers gain immediate access to optimized, cloud-native NVIDIA NIM microservices, supporting advanced AI models such as NVIDIA’s Llama Nemotron. NVIDIA AI Enterprise will be available as deployment images for OCI bare-metal instances and Kubernetes clusters via OCI Kubernetes Engine, with Oracle providing seamless direct billing and customer support.

Customers like Soley Therapeutics are leveraging OCI AI infrastructure combined with NVIDIA AI Enterprise and Blackwell GPUs to drive breakthroughs in AI-powered drug discovery. This integrated offering meets a range of enterprise needs, from data privacy and sovereignty to low-latency applications.

Accelerated AI Deployment Through Blueprints

OCI AI Blueprints provide customers with no-code, automated deployment recipes, dramatically simplifying the setup of AI workloads. NVIDIA Blueprints complement this with unified reference workflows, enabling enterprises to rapidly tailor and implement custom AI applications powered by NVIDIA AI Enterprise and NVIDIA Omniverse.

To further simplify AI application development, the NVIDIA Omniverse platform, Isaac Sim workstations, and Omniverse Kit App Streaming will be available through the OCI Marketplace later this year. These offerings will leverage NVIDIA L40S GPU-accelerated bare-metal compute instances. Pipefy, an AI-powered business automation platform, exemplifies the success of these blueprints, utilizing them for efficient document and image processing tasks.

Real-Time AI Inference With NVIDIA NIM on OCI Data Science

The OCI Data Science environment now includes pre-optimized NVIDIA NIM microservices, enabling rapid deployment of real-time AI inference solutions with minimal infrastructure management. Because these models operate within the customer’s OCI tenancy, customers maintain data security and compliance. The microservices are available through flexible pricing models, including hourly billing or Oracle Universal Credits.

Enhanced Oracle Database 23ai

Oracle and NVIDIA are enhancing Oracle Database 23ai’s AI Vector Search functionality by leveraging NVIDIA GPUs and cuVS. This collaboration significantly accelerates the creation and maintenance of vector embeddings and indexes, optimizing performance for intensive AI vector search workloads. Companies like DeweyVision already utilize this integration for advanced AI-powered media analysis and retrieval, transforming production workflows and content discoverability.
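For readers unfamiliar with vector search, the sketch below shows the basic operation in its simplest exact form: score every stored embedding against a query and return the closest matches. It does not use cuVS or any Oracle Database API, and the three-dimensional embeddings and their labels are invented toy values. Real deployments use embeddings with hundreds or thousands of dimensions and approximate indexes, which is the part GPU acceleration targets.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def top_k(query, vectors, k=2):
    """Exact brute-force search: score every stored vector against the query."""
    ranked = sorted(vectors.items(),
                    key=lambda item: cosine_similarity(query, item[1]),
                    reverse=True)
    return [name for name, _ in ranked[:k]]


# Hypothetical embeddings for three media clips.
embeddings = {
    "sunset_beach": [0.9, 0.1, 0.0],
    "city_night": [0.1, 0.9, 0.2],
    "ocean_waves": [0.8, 0.2, 0.1],
}

print(top_k([1.0, 0.0, 0.0], embeddings))
```

Brute-force scoring scales linearly with the number of stored vectors, so building and maintaining an index becomes the bottleneck as collections grow.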

The partnership also introduces next-generation NVIDIA Blackwell GPUs across OCI’s global infrastructure, including public clouds, sovereign clouds, dedicated regions, Oracle Alloy, Compute Cloud@Customer, and Roving Edge Devices. OCI will soon feature NVIDIA GB200 NVL72 systems, delivering immense computational capabilities with significantly improved efficiency and cost-effectiveness. OCI is among the first to offer the powerful NVIDIA Blackwell accelerated computing platform, further advancing AI reasoning and physical AI capabilities.

SoundHound utilizes OCI’s NVIDIA GPU-accelerated infrastructure to power billions of conversational queries annually, showcasing this collaboration’s real-world effectiveness and scalability in voice and conversational AI solutions.

Omniverse Blueprint for Earth-2 Weather Analytics

NVIDIA introduced the Omniverse Blueprint for Earth-2 weather analytics, designed to accelerate the development and deployment of highly accurate weather forecasting solutions. This framework equips enterprises with advanced technologies for enhancing risk management, disaster preparedness, and climate resilience. These areas are critical, considering that climate-related weather events have caused global economic impacts totaling approximately $2 trillion over the past decade.

The Omniverse Blueprint for Earth-2 provides comprehensive reference workflows, incorporating NVIDIA GPU acceleration libraries, physics-AI frameworks, development tools, and accessible NVIDIA NIM microservices. These microservices include CorrDiff for high-resolution numerical weather prediction and FourCastNet for global atmospheric dynamics forecasting. Companies, researchers, and government agencies are already leveraging these microservices to derive insights and mitigate risks associated with extreme weather.

Ecosystem Adoption and Support

Prominent climate technology companies, such as AI-focused G42, JBA Risk Management, and Spire Global, are leveraging NVIDIA’s Earth-2 platform to create innovative AI-enhanced weather solutions. These organizations deliver dramatically accelerated weather forecasts by integrating proprietary enterprise data, reducing traditional prediction times from hours to seconds.

G42 is deploying elements of the Omniverse Blueprint with its AI forecasting models to enhance the UAE’s National Center of Meteorology’s weather prediction and disaster management capabilities. Spire Global employs blueprint-derived AI components and proprietary satellite data to offer medium-range and sub-seasonal forecasts that run 1,000 times faster than traditional physics-based models.

Additional early adopters and explorers include Taiwan’s Central Weather Administration, The Weather Company, Ecopia AI, Esri, GCL Power, OroraTech, and Tomorrow.io, further expanding Earth-2’s ecosystem and application diversity.

Advanced Generative AI Capabilities

The Omniverse Blueprint includes CorrDiff, available as an NVIDIA NIM microservice, which runs up to 500 times faster and is up to 10,000 times more energy-efficient than traditional CPU-based methods. Independent software vendors (ISVs) can leverage this blueprint to rapidly build AI-driven weather analytics solutions, benefiting from enhanced observational data processing for improved accuracy and responsiveness.

Esri, the leading geospatial software provider, is partnering with NVIDIA to connect its ArcGIS platform with Earth-2. OroraTech and Tomorrow.io are integrating proprietary datasets into NVIDIA’s Earth-2 digital twin to improve the next generation of AI models.

Powered by NVIDIA DGX Cloud

The Omniverse Blueprint for Earth-2 leverages NVIDIA DGX Cloud infrastructure, including the DGX GB200, HGX B200, and OVX supercomputers, enabling the high-performance simulation and visualization of global climate scenarios. This comprehensive cloud-powered solution significantly advances AI-driven weather forecasting capabilities, helping organizations achieve unprecedented forecasting speed, scale, and accuracy.

Halos: Safety System for Autonomous Vehicles

NVIDIA introduced Halos, a comprehensive safety framework unifying its extensive automotive hardware, software solutions, and cutting-edge AI research to accelerate the safe development of autonomous vehicles (AVs). Designed to span the entire AV development lifecycle—from cloud training environments to vehicle deployment—Halos empowers developers to integrate state-of-the-art technologies that enhance the safety of drivers, passengers, and pedestrians.

Riccardo Mariani, Vice President of Industry Safety at NVIDIA, emphasized that Halos enhances existing safety measures and could accelerate regulatory compliance and standardization efforts, enabling partners to select tailored technology solutions that meet their AV safety needs.

Three Pillars of Halos

Halos offers a holistic safety approach structured around three key layers: technology, development, and computational safety. At the technology level, Halos addresses platform safety, algorithmic safety, and ecosystem safety. During development, it provides robust guardrails across design, deployment, and validation stages. Computationally, Halos covers AI training to AV deployment, powered by NVIDIA’s DGX systems for AI model training, Omniverse and Cosmos platforms running on NVIDIA OVX for simulation, and NVIDIA DRIVE AGX for vehicle deployment.

Marco Pavone, NVIDIA’s lead AV researcher, highlighted the significance of Halos’ integrated approach in environments that leverage generative AI for increasingly sophisticated AV systems—areas that traditional compositional design and verification methods struggle to address effectively.

AI Systems Inspection Lab

NVIDIA’s AI Systems Inspection Lab, a crucial part of Halos, is the first program worldwide accredited by the ANSI National Accreditation Board. This lab helps automakers and developers safely integrate NVIDIA technologies into their solutions, incorporating functional safety, cybersecurity, AI safety, and compliance into a unified safety protocol. Initial members include industry leaders Ficosa, OMNIVISION, onsemi, and Continental.

Platform, Algorithmic, and Ecosystem Safety

Halos emphasizes platform safety through a safety-assessed system-on-a-chip (SoC), safety-certified NVIDIA DriveOS software that spans CPU to GPU, and DRIVE AGX Hyperion, integrating the SoC, software, and sensors into a unified architecture. For algorithmic safety, Halos offers libraries and APIs for managing safety data, alongside advanced simulation and validation environments powered by NVIDIA Omniverse Blueprint and Cosmos foundation models. Ecosystem safety includes comprehensive safety datasets, automated safety evaluations, and continuous improvement processes.

Safety Credentials

Halos leverages NVIDIA’s deep commitment to AV safety, underscored by:

- Over 15,000 engineering years devoted to vehicle safety.

- More than 10,000 hours contributed to international standards committees.

- Over 1,000 patents filed in AV safety.

- More than 240 AV safety research papers published.

- Over 30 safety and cybersecurity certifications.

Key recent certifications for NVIDIA’s automotive products include NVIDIA DriveOS 6.0 compliance with ISO 26262 ASIL D, TÜV SÜD’s ISO/SAE 21434 cybersecurity process certification, and TÜV Rheinland’s independent safety assessment for the NVIDIA DRIVE AV platform.

NVIDIA Announces Accelerated Quantum Research Center to Advance Quantum Computing

NVIDIA announced the establishment of a new research center in Boston, the NVIDIA Accelerated Quantum Research Center (NVAQC), dedicated to advancing quantum computing technologies. This facility will merge state-of-the-art quantum hardware with NVIDIA’s AI-driven supercomputing resources, significantly accelerating quantum supercomputing capabilities.

NVAQC aims to address critical challenges facing quantum computing, including qubit noise reduction and transforming experimental quantum processors into practical, deployable devices. Key industry leaders in quantum computing, such as Quantinuum, Quantum Machines, and QuEra Computing, will leverage NVAQC’s resources by collaborating closely with top-tier academic institutions, including Harvard Quantum Initiative in Science and Engineering (HQI) and MIT’s Engineering Quantum Systems (EQuS) group.

The center will utilize NVIDIA’s GB200 NVL72 rack-scale systems, the most powerful hardware optimized for quantum computing applications. This advanced infrastructure will support sophisticated quantum system simulations and facilitate the deployment of low-latency quantum hardware control algorithms necessary for quantum error correction. Additionally, the powerful GB200 NVL72 platform will drive the integration of AI algorithms into quantum research, enhancing overall computational efficiency.
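Quantum error correction depends on a decoder that turns measured error syndromes into corrections quickly enough to keep up with the hardware. The sketch below is a purely classical simulation of the textbook three-bit repetition (bit-flip) code, included only to show what "syndrome in, correction out" means. It is not CUDA-Q code and makes no claim about the codes or decoders NVAQC will use; practical schemes are far larger and must also handle phase errors.

```python
def encode(bit):
    """Protect one logical bit by storing three copies."""
    return [bit, bit, bit]


def syndrome(codeword):
    """Two parity checks that locate a single flip without reading the data."""
    return (codeword[0] ^ codeword[1], codeword[1] ^ codeword[2])


# Lookup table: syndrome -> index of the bit to flip back (None = no error).
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}


def correct(codeword):
    """Apply the correction indicated by the syndrome."""
    fixed = list(codeword)
    index = CORRECTION[syndrome(fixed)]
    if index is not None:
        fixed[index] ^= 1
    return fixed


def decode(codeword):
    """Recover the logical bit after correction."""
    return correct(codeword)[0]


# Flip each position in turn and confirm the logical bit survives.
for bit in (0, 1):
    for position in range(3):
        word = encode(bit)
        word[position] ^= 1  # inject a single bit-flip error
        print(bit, position, syndrome(word), decode(word))
```

Even in this toy, decoding is a table lookup that must complete before the next error arrives, which hints at why low-latency classical compute sits so close to the quantum hardware.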

To address integration complexities between GPU and quantum processing unit (QPU) hardware, researchers at NVAQC will use NVIDIA’s CUDA-Q quantum development platform. CUDA-Q facilitates the creation of advanced hybrid quantum algorithms and applications, enabling smoother integration and more efficient research outcomes.

Collaborations with the Harvard Quantum Initiative will focus on pioneering next-generation quantum computing technologies, while MIT’s EQuS group will use NVAQC’s infrastructure to develop techniques such as quantum error correction, which is crucial for practical quantum computing.

The NVAQC is scheduled to commence operations later this year, marking a significant step forward in quantum computing research and development.
